Smart Mobility and Sensor Fusion

To go back to the main page: PRE2017 3 Groep6.

Explaining feedback requirements

To start with, the mobility scooter should have a (touchscreen) display on which users can view data relevant to them and to their current location or situation. For example, the estimated time of arrival can be shown alongside a weather notification, so that the user does not accidentally travel through a rainstorm.

Other items to display are, of course, the battery status, tire pressure, current velocity and the remaining range on the current battery charge. The display can also show connectivity information, such as contacts and (video) calls, and entertainment, such as music and videos.
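The remaining range could, for instance, be estimated from the energy left in the battery and the recent average consumption. The sketch below assumes a hypothetical battery capacity in Wh and a log of recent trip segments given as (distance in km, energy in Wh). The function names and figures are illustrative and do not specify the actual scooter.

```python
from typing import List, Tuple


def average_consumption(segments: List[Tuple[float, float]]) -> float:
    """Average energy use in Wh/km over recent (distance_km, energy_wh) segments."""
    total_km = sum(km for km, _ in segments)
    total_wh = sum(wh for _, wh in segments)
    if total_km <= 0:
        raise ValueError("need at least some driven distance")
    return total_wh / total_km


def estimate_range_km(charge_fraction: float, capacity_wh: float,
                      consumption_wh_per_km: float) -> float:
    """Remaining range = energy left in the battery / average consumption."""
    if not 0.0 <= charge_fraction <= 1.0:
        raise ValueError("charge_fraction must lie between 0 and 1")
    if consumption_wh_per_km <= 0:
        raise ValueError("consumption must be positive")
    return charge_fraction * capacity_wh / consumption_wh_per_km


# Hypothetical example: 1200 Wh battery at 50 % charge, recent trips used 90 Wh over 3 km.
consumption = average_consumption([(1.0, 25.0), (2.0, 65.0)])  # 30.0 Wh/km
print(estimate_range_km(0.5, 1200.0, consumption))  # 20.0
```

A recent average, rather than a fixed rating, lets the displayed range adapt to hills or a heavier load. The cost is that the number fluctuates somewhat.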

The display and speakers are not only there to entertain the user and provide useful information. They can also serve, more subtly, to keep the user occupied, so that they do not take over control without proper cause. At the same time, this is a disadvantage: a user who is looking at the screen is less aware of their environment.

Aside from the screen, auditory feedback is also needed. It serves both the user of the mobility scooter and the people in its direct environment (such as pedestrians). In case of a sudden stop, the user will want to be told the reason. If a pedestrian is not paying attention to the road (because they are using their phone, for example), the mobility scooter should play a warning sound to keep them from walking into it.
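One possible rule for deciding when to sound the pedestrian warning is a time-to-collision check. The distance to the pedestrian is divided by the speed at which that gap is closing, and the scooter warns when the result drops below a threshold. The thresholds below are hypothetical placeholders, not tested values.

```python
def should_warn_pedestrian(distance_m: float, closing_speed_mps: float,
                           ttc_threshold_s: float = 3.0,
                           min_distance_m: float = 1.0) -> bool:
    """Return True if the scooter should play its warning sound.

    distance_m: current distance to the pedestrian.
    closing_speed_mps: rate at which that distance shrinks (negative = moving apart).
    """
    # Very close: always warn, even if nobody is moving.
    if distance_m <= min_distance_m:
        return True
    # Gap is not shrinking, so no collision is expected.
    if closing_speed_mps <= 0:
        return False
    time_to_collision_s = distance_m / closing_speed_mps
    return time_to_collision_s < ttc_threshold_s


print(should_warn_pedestrian(5.0, 2.0))   # True  (2.5 s to collision)
print(should_warn_pedestrian(10.0, 2.0))  # False (5.0 s to collision)
```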

People can need an autonomous mobility scooter for many different physical reasons. For that reason, it should be possible to take over control in an emergency in several different ways. Some users may want to use a joystick, while others may prefer voice-controlled operation, or may simply want the scooter to stop moving altogether. A physical kill switch is therefore a necessity.
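The different ways of taking over control can be treated as command sources with a fixed priority. The sketch below makes three hypothetical assumptions. The kill switch beats everything and always forces a stop. A joystick beats voice commands. The autonomous controller only drives when no person is intervening. Commands are (speed, steering) pairs in hypothetical units.

```python
from enum import IntEnum
from typing import Dict, Optional, Tuple

Command = Tuple[float, float]  # (speed in m/s, steering), hypothetical units


class ControlSource(IntEnum):
    # Higher value = higher priority.
    AUTONOMOUS = 0
    VOICE = 1
    JOYSTICK = 2
    KILL_SWITCH = 3


STOP: Command = (0.0, 0.0)


def arbitrate(requests: Dict[ControlSource, Command]) -> Tuple[Optional[ControlSource], Command]:
    """Pick the highest-priority active source and return it with the command to execute."""
    # The physical kill switch always wins and always means "stop".
    if ControlSource.KILL_SWITCH in requests:
        return ControlSource.KILL_SWITCH, STOP
    # Nobody is issuing a command: stay still.
    if not requests:
        return None, STOP
    source = max(requests)  # IntEnum members compare by their priority value
    return source, requests[source]


active = {ControlSource.AUTONOMOUS: (1.5, 0.0), ControlSource.VOICE: (0.5, 0.1)}
source, command = arbitrate(active)
print(source.name, command)  # VOICE (0.5, 0.1)
```

Because the kill switch is checked before anything else, its behaviour does not depend on the priority order of the other sources.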

Connecting to devices such as smartphones or smartwatches can be seen as more of a luxury than a necessity, but it can actually help in using the scooter. For example, the user can check the scooter's battery status and enter their destination before even leaving the couch. The same connection could support many other functions as well.
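A phone or smartwatch app could talk to the scooter through a small request/response protocol. The sketch below assumes a hypothetical JSON message format with two request types, `get_status` and `set_destination`. The field names are illustrative, and the transport (Bluetooth, a cloud service, etc.) is left out.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScooterState:
    battery_percent: float
    range_km: float
    destination: Optional[str] = None


def handle_request(state: ScooterState, raw: str) -> str:
    """Handle one JSON request from the companion app and return a JSON reply."""
    msg = json.loads(raw)
    kind = msg.get("type")
    if kind == "get_status":
        reply = {"ok": True, "battery_percent": state.battery_percent,
                 "range_km": state.range_km, "destination": state.destination}
    elif kind == "set_destination":
        # Store the destination so the route can be planned before the user sets off.
        state.destination = msg["destination"]
        reply = {"ok": True, "destination": state.destination}
    else:
        reply = {"ok": False, "error": f"unknown request type: {kind!r}"}
    return json.dumps(reply)


state = ScooterState(battery_percent=80.0, range_km=24.0)
handle_request(state, '{"type": "set_destination", "destination": "supermarket"}')
print(handle_request(state, '{"type": "get_status"}'))
```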

In case of a (medical) emergency, it would be highly beneficial for the scooter to send out a distress signal to both the authorities and the user's close family members or friends.
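A distress signal needs to say who is in trouble, where and when. It also has to reach several recipients, even if one of them cannot be contacted. The sketch below shows one possible shape for this. It assumes a hypothetical `send` function supplied by whatever communication module the scooter uses, and the recipient names are placeholders. It notifies emergency services before personal contacts and reports which deliveries failed, so that those can be retried.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class DistressAlert:
    scooter_id: str
    latitude: float
    longitude: float
    reason: str
    timestamp: str


def make_alert(scooter_id: str, latitude: float, longitude: float, reason: str) -> DistressAlert:
    """Create an alert stamped with the current UTC time in ISO 8601 format."""
    return DistressAlert(scooter_id, latitude, longitude, reason,
                         datetime.now(timezone.utc).isoformat())


def dispatch_distress(alert: DistressAlert, emergency_services: List[str],
                      contacts: List[str],
                      send: Callable[[str, DistressAlert], None]) -> List[str]:
    """Send the alert to emergency services first, then to personal contacts.

    Returns the recipients for whom sending raised an error, so they can be retried.
    """
    failed = []
    for recipient in emergency_services + contacts:
        try:
            send(recipient, alert)
        except OSError:  # assumption: the (hypothetical) transport reports failures as OSError
            failed.append(recipient)
    return failed


# Usage with a fake transport that cannot reach one contact.
sent = []

def fake_send(recipient: str, alert: DistressAlert) -> None:
    if recipient == "daughter":
        raise ConnectionError("no signal")
    sent.append(recipient)

alert = make_alert("scooter-42", 51.44, 5.47, "user unresponsive")
print(dispatch_distress(alert, ["112"], ["daughter", "neighbour"], fake_send))  # ['daughter']
```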

Communication between mobility scooters can make the navigation system more efficient. It can also improve the accuracy of several measurement systems, such as a local weather estimate, or even a sensor that checks whether certain roads are slippery during the winter. For example, a mobility scooter that is currently in a supermarket swamped with customers can "warn" other scooters in the network so that they can change their current plans. Their users then receive a notification asking whether they want to continue to the supermarket or go to the post office first instead.
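The supermarket scenario could work roughly as follows. Scooters share a crowding level per location. A receiving scooter checks whether the first stop on its plan is reported as crowded. If it is, the scooter proposes moving the first uncrowded stop to the front and asks the user to confirm. The 0 to 1 crowding scale and the 0.8 threshold are hypothetical.

```python
from typing import Dict, List, Optional


def suggest_reorder(stops: List[str], crowd_levels: Dict[str, float],
                    threshold: float = 0.8) -> Optional[List[str]]:
    """Return a reordered plan if the first stop is crowded, otherwise None.

    crowd_levels maps a location to the latest crowding level (0 = empty, 1 = full)
    reported by other scooters; locations without reports count as not crowded.
    """
    if not stops or crowd_levels.get(stops[0], 0.0) < threshold:
        return None
    for i in range(1, len(stops)):
        if crowd_levels.get(stops[i], 0.0) < threshold:
            # Move the first uncrowded stop to the front, keep the rest in order.
            return [stops[i]] + stops[:i] + stops[i + 1:]
    return None  # every stop is crowded: nothing better to suggest


plan = ["supermarket", "post office"]
reports = {"supermarket": 0.95, "post office": 0.2}
suggestion = suggest_reorder(plan, reports)
if suggestion is not None:
    print(f"The {plan[0]} is very busy. Go to the {suggestion[0]} first?")
```

The function only proposes a new order. The user keeps the final decision, as in the notification described above.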

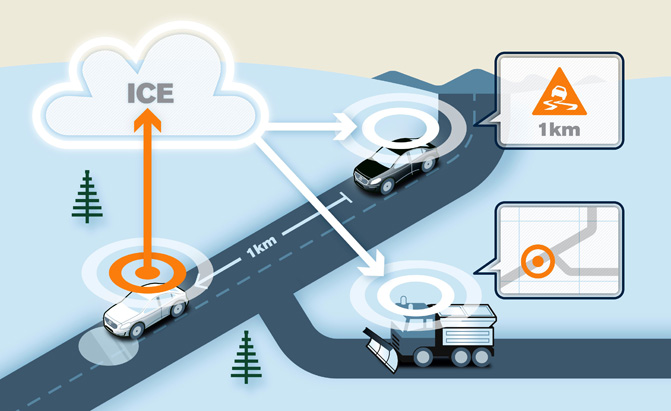

This example shows machine-to-machine communication being used to warn others of a slippery road.
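A slippery-road warning like this one needs some protection against outdated reports and single false reports. One simple approach is sketched below under assumed parameters. A road is marked as slippery only when at least two different scooters have reported it within a validity window (30 minutes by default). The road and scooter identifiers are hypothetical.

```python
from collections import defaultdict
from typing import Dict


class HazardMap:
    """Keeps the latest slippery-road report per (road, scooter) pair."""

    def __init__(self, ttl_s: float = 1800.0, min_reporters: int = 2):
        self.ttl_s = ttl_s                  # how long a report stays valid
        self.min_reporters = min_reporters  # distinct scooters needed to trust it
        self._reports: Dict[str, Dict[str, float]] = defaultdict(dict)

    def report(self, road_id: str, scooter_id: str, timestamp_s: float) -> None:
        # A newer report from the same scooter replaces its older one.
        self._reports[road_id][scooter_id] = timestamp_s

    def is_slippery(self, road_id: str, now_s: float) -> bool:
        recent = [t for t in self._reports.get(road_id, {}).values()
                  if now_s - t <= self.ttl_s]
        return len(recent) >= self.min_reporters


hazards = HazardMap(ttl_s=600.0)
hazards.report("road-7", "scooter-A", timestamp_s=0.0)
hazards.report("road-7", "scooter-B", timestamp_s=100.0)
print(hazards.is_slippery("road-7", now_s=200.0))  # True: two recent reports
print(hazards.is_slippery("road-7", now_s=650.0))  # False: report from A has expired
```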

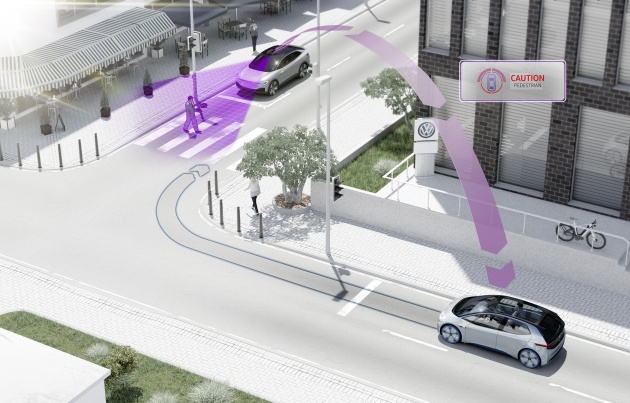

This example shows scooters giving each other a heads-up about the number of pedestrians at certain locations.

Once this system works, as it already does among several car brands, it can be extended so that scooters also communicate with cars and other vehicles, not only with other scooters. For example, if a car has to turn back because a road has (suddenly) been closed, this can be communicated to scooters as well. That saves their users time and battery charge, and it reduces the chance that they run out of power.

https://www.technologyreview.com/s/534981/car-to-car-communication/ (explains how car-to-car communication already works; the same approach can be extended to mobility scooters)
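When a car relays a road closure, a scooter could simply recompute its route with the closed road removed. The sketch below uses Dijkstra's shortest-path algorithm on a small hypothetical road graph, with edge weights in km. Closures are given as directed (from, to) pairs, so a road closed in both directions must be listed twice. It only illustrates the idea and is not tied to any real navigation service.

```python
import heapq
from typing import Dict, FrozenSet, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbour: length_km}


def shortest_route(graph: Graph, start: str, goal: str,
                   closed: FrozenSet[Tuple[str, str]] = frozenset()
                   ) -> Optional[Tuple[List[str], float]]:
    """Dijkstra's algorithm, skipping edges reported as closed."""
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for nxt, length in graph.get(node, {}).items():
            if (node, nxt) in closed:
                continue  # road reported closed by another vehicle
            new_d = d + length
            if new_d < dist.get(nxt, float("inf")):
                dist[nxt] = new_d
                prev[nxt] = node
                heapq.heappush(heap, (new_d, nxt))
    if goal not in dist:
        return None  # no open route
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]


roads = {"home": {"A": 1.0, "B": 2.5}, "A": {"shop": 1.0}, "B": {"shop": 1.0}}
print(shortest_route(roads, "home", "shop"))  # (['home', 'A', 'shop'], 2.0)
print(shortest_route(roads, "home", "shop", frozenset({("A", "shop")})))  # (['home', 'B', 'shop'], 3.5)
```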

Multi-Sensor Data Fusion

For the autonomous mobility scooter to be "aware" of its surroundings, the final model will include several different types of sensors. However, every sensor processes sensory information in its own way, both in hardware and in software. Since all of this data has to be taken into account at the same time, the different sensor outputs must somehow be combined. The technique required here is Sensor Fusion: a method to combine the outputs of different types of sensors, or to combine several outputs of the same sensor recorded over a longer period of time.
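The second case, combining several outputs of the same sensor over time, is commonly handled with a Kalman filter. Below is a minimal one-dimensional version for a single distance reading. It assumes that the true distance changes only slowly (a random-walk model) and that the noise variances are known. The numbers are made up for illustration.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a random-walk (nearly constant) state model."""

    def __init__(self, initial_estimate: float, initial_variance: float,
                 process_variance: float, measurement_variance: float):
        self.x = initial_estimate      # current estimate (e.g. distance in m)
        self.p = initial_variance      # uncertainty (variance) of that estimate
        self.q = process_variance      # how much the true value may drift per step
        self.r = measurement_variance  # noise variance of a single sensor reading

    def update(self, z: float) -> float:
        # Predict: the state model keeps x, but its uncertainty grows by q.
        self.p += self.q
        # Correct: blend prediction and measurement according to their uncertainties.
        k = self.p / (self.p + self.r)  # Kalman gain, between 0 and 1
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


kf = ScalarKalman(initial_estimate=0.0, initial_variance=1000.0,
                  process_variance=0.01, measurement_variance=1.0)
for reading in [2.1, 1.9, 2.05, 1.95]:  # noisy readings around 2 m
    estimate = kf.update(reading)
print(round(estimate, 2), round(kf.p, 2))
```

A larger `process_variance` makes the filter follow changes faster, but it smooths out less noise.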

An example of the application of Sensor Fusion in the autonomous mobility scooter would be to scan the distance from the scooter to objects around it repeatedly in short intervals. By doing so with all attached proximity sensors, the scooter can be aware of potential collisions before they occur, and also adjust its speed to pedestrians walking behind or in front of it.
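The repeated proximity scan could be turned into a speed limit as follows. In every scan cycle, take the closest obstacle in the direction of travel over all proximity sensors, then cap the speed so that the scooter can still stop in time. The stopping model (a reaction time followed by constant braking) and all parameter values, including the 3.5 m/s top speed, are hypothetical.

```python
import math
from typing import List, Tuple

V_MAX_MPS = 3.5  # hypothetical top speed of the scooter


def max_safe_speed(distance_m: float, reaction_s: float = 0.5,
                   decel_mps2: float = 1.5, margin_m: float = 0.5) -> float:
    """Highest speed v with v*t + v^2 / (2a) <= distance - margin, capped at V_MAX_MPS."""
    free_m = distance_m - margin_m
    if free_m <= 0:
        return 0.0
    a, t = decel_mps2, reaction_s
    # Positive root of v^2 / (2a) + v*t - free_m = 0.
    v = -a * t + math.sqrt((a * t) ** 2 + 2 * a * free_m)
    return min(v, V_MAX_MPS)


def speed_limit_from_scan(readings: List[Tuple[str, float]], direction: str) -> float:
    """readings: (direction, distance_m) from every proximity sensor in one scan cycle."""
    distances = [d for sensor_dir, d in readings if sensor_dir == direction]
    if not distances:
        return V_MAX_MPS  # nothing detected in the direction of travel
    return max_safe_speed(min(distances))


scan = [("front", 5.0), ("front", 2.0), ("rear", 0.3)]
print(speed_limit_from_scan(scan, "front"))  # 1.5
print(speed_limit_from_scan(scan, "rear"))   # 0.0
```

The limit is recomputed every cycle, so the scooter automatically slows down as a pedestrian ahead gets closer.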

The idea would be that this combined data package from all proximity sensors can also be combined with information provided by other hardware, such as Radar/LIDAR and camera(s).
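Suppose the proximity sensors, Radar/LIDAR and camera each provide an estimate of the distance to the same object. A common way to combine them is inverse-variance weighting, where each estimate is weighted by how precise it is. The sketch assumes that the estimates are independent and unbiased and have already been matched to the same object. The variances used below are invented for illustration.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance, which is never larger than the
    smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical distance estimates to one pedestrian, in metres (variance in m^2).
proximity = (2.30, 0.09)
lidar = (2.20, 0.01)
camera = (2.50, 0.25)
print(fuse_estimates([proximity, lidar, camera]))
```

The most precise source (here the assumed LIDAR value) dominates the result. The less precise sources still shift the estimate slightly and reduce its variance.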

For a visual explanation, see the image below. This example was created for autonomous cars, but it can be extended to autonomous mobility scooters as well.