PRE2023 3 Group3

Group members

| Name | Student ID | Current Study Programme |
|---|---|---|
| Patryk Stefanski | 1830872 | CSE |
| Raul Sanchez Flores | 1844512 | CSE |
| Briana Isaila | 1785923 | CSE |
| Raul Hernandez Lopez | 1833197 | CSE |
| Ilie Rareş Alexandru | 1805975 | CSE |
| Ania Barbulescu | 1823612 | CSE |

Problem statement

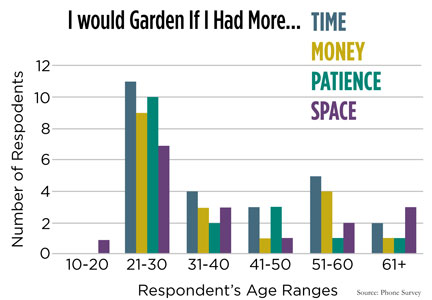

In Western society, having a family, a house, and a good job is what many people aspire to. As people work towards these goals, their spending power increases, which allows them to afford a nice home for their future family, with a garden for the children and pets. However, as with many things in our capitalist world, this usually comes at a cost: free time. According to a study conducted by a student team at Manchester University [2], the three main reasons people do not garden, which together made up 60% of the survey responses, were time constraints, lack of knowledge or information, and lack of space. Gardening should be encouraged because of its environmental benefits and many other advantages [3].

In the past decade, robotics has advanced rapidly across multiple fields as tedious and difficult tasks become increasingly automated [4], and agriculture and gardening are no exception [5]. In recent years, many robots have become available that assist farmers with important tasks such as irrigation, planting, and weeding. These robots are large mechanical structures sold at a very high price, so their only realistic use is in large-scale farming operations. Unfortunately, one common user group has been left behind when developing many features of this new technology: the amateur gardener. Amateur gardeners, who often lack in-depth knowledge about plants and gardening practices, face challenges in maintaining their gardens. Identifying issues with specific plants, understanding their individual needs, and implementing corrective measures can be overwhelming for someone with limited expertise. Traditional gardening tools and resources often fall short of providing the guidance needed for optimal plant care, so another solution must be found. This is the problem our team's robot aims to solve. We cannot help people who have no space to garden, but we can address the other two common problems. The questions we asked ourselves were therefore:

"How do we make gardening more accessible for amateur gardeners?"

"How do we provide the necessary guidance and information?"

"How can our product help users save time?"

Objectives

The objectives we hope to accomplish during the eight weeks of the project are the following:

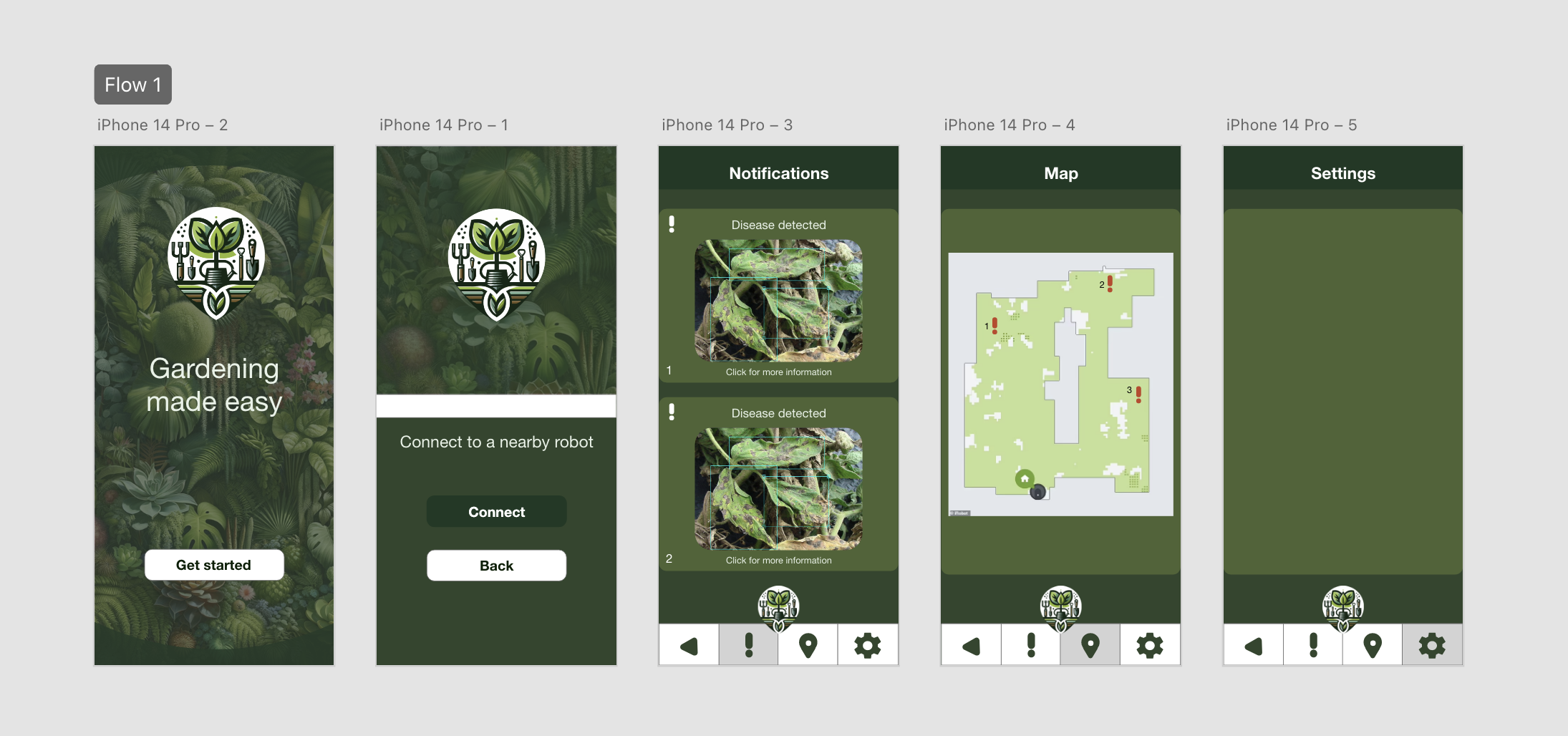

- Create a mobile application prototype that connects to a robot.

- Look into state-of-the-art technology that can be brought to an amateur user's fingertips through an application.

- The robot's application should be easy to use.

- The robot should map out the explorable terrain of the garden.

- The robot should be able to scan a plant and determine whether it is healthy (and possibly identify its species).

- The robot should be able to cut grass while scanning for diseases, to save the user time.

- The robot should be able to recommend specific actions after spotting a disease or infestation.

- The application should display the location on the map where the robot found an unhealthy plant, together with a picture of the plant and the recommended actions.

Users

Who are the users?

The users of the product are garden owners who need assistance in monitoring and maintaining their garden. This may be because they lack the knowledge required to properly maintain all the different types of plants in their garden, or because they would prefer a quick and easy set of instructions on what to do with each unhealthy plant and where that plant is located. This would optimise the user's gardening routine without taking away the joy and passion that inspired them to invest in plants in the first place.

What do the users require?

The users require a robot that is easy to operate and does not need unnecessary maintenance or setup. The robot should be easy to control through a user interface that is tailored to the users' needs and displays all required information clearly and concisely. Users also require that the robot can effectively map their garden and identify where certain plants are located. Lastly, the robot must be able to accurately describe what actions, if any, must be taken for a specific plant at a specific location in the garden.

Deliverables

- Research into AI plant detection, mapping a garden, and the best ways of manoeuvring through it.

- Research into AI identification of plant diseases and infestations.

- A survey confirming that users want a solution to the problem we have selected.

- An interactive UI for an app that allows the user to control the robot remotely and implements the user requirements obtained from the survey. The UI will run on a phone, and all its features will be accessible through a mobile application.

- An interview with a specialist in biology or AI.

- This wiki page, which documents the progress of the group's work, the decisions that have been made, and the results we obtained.

State of the Art

Automated Gardening Robots

TrimBot2020

TrimBot2020 was the first concept for an automated gardening robot for bush trimming and rose pruning. It began as a collaborative project between multiple universities, including ETH Zurich, the University of Groningen and the University of Amsterdam. TrimBot2020 was designed to navigate garden spaces autonomously, manoeuvring around obstacles and identifying optimal paths to reach target plants, which it then trimmed with a robot arm extending a blade.

EcoFlow Blade

Priced at nearly €2600, the EcoFlow Blade is an automated grass-trimming robot meant to reduce the time needed to maintain the user's garden. On first use after purchase, the user directs the robot through an app on their smartphone, tracing the edges of the garden. This removes the need to install physical barriers in the garden, making the setup more straightforward for the user. Once this is done, the robot has a map of where to cut and can work automatically. Moreover, the robot comes with x-vision technology designed to avoid obstacles in real time, ensuring that it does not get damaged and that it does not destroy objects or hurt people.

Greenworks Pro Optimow 50H Robotic Lawn Mower

Priced at €1600, the Greenworks gardening robot also focuses on mowing. Greenworks has made multiple versions for different garden sizes, ranging from 450 to 1500 m². The Pro Optimow's features are also integrated with Greenworks' own app, which allows the user to schedule and track the robot and to specify any areas that need to be managed more carefully, such as areas that are more prone to flooding. The boundaries of the garden are set with a wire, and the robot navigates the garden in random patterns, cutting small amounts at a time.

Husqvarna Automower 435X AWD

Finally, the Husqvarna Automower is designed for large, hilly landscapes. It can mow up to 3500 m² of lawn and offers strong manoeuvrability and grip on rough and slanted terrain. This robot again has an integrated app, which works with the robot's built-in GPS to create a virtual map of the user's lawn. The app also lets the user customise the robot's behaviour in different areas, such as cutting heights and zones to avoid. The Husqvarna robot uses ultrasonic sensors to detect and avoid objects, and it requires the user to set up boundary wires to map out the garden. Lastly, it integrates with voice assistants such as Amazon Alexa and Google Home, allowing the user to command the robot easily.

Plant (Disease) Detection Systems

LeafSnap

LeafSnap is an iOS and Android app that claims to offer built-in plant identification and disease identification by scanning images through the phone's camera. It claims an accuracy of 95% at identifying plant species and provides instructions for how to care for each specific species. It also sends reminders to the user to water, fertilise and prune their plants. LeafSnap identifies plants using a database of more than 30,000 species.

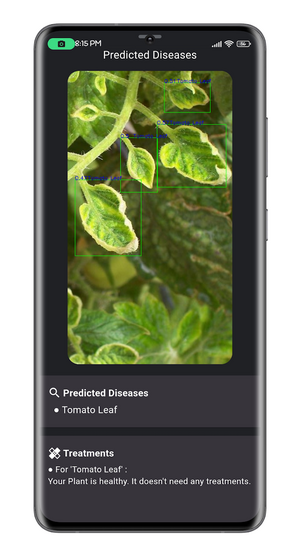

PlantMD

PlantMD is an application that uses machine learning to detect plant diseases. More specifically, its developers used TensorFlow, an open-source machine learning library developed by Google that focuses on neural networks. PlantMD was inspired by PlantVillage, a Penn State University initiative known for its plant disease image dataset, which also created Nuru, an app aimed at helping farmers in Africa improve cassava cultivation.

Agrio

Agrio allows farmers to use machine learning algorithms to diagnose crop issues and determine treatment needs. Users can photograph their plants to receive a diagnosis and treatment recommendations. The app also features AI algorithms that learn quickly to identify new diseases and pests in various crops, enabling less experienced workers to participate actively in plant protection. Geotagged images help predict future problems, while supervisors can build image libraries for comparison and diagnosis. Users can edit treatment recommendations and add specific agricultural input products tailored to the crop type, pathology, and geographic location. Treatment outcomes are monitored using remote sensing data, including multispectral imaging at various resolutions and revisit frequencies. The app provides hyper-local weather forecasts, which are crucial for predicting insect migration, egg hatching, fungal spore development, and more. Inspectors can upload images during field inspections, and the algorithms can raise alerts before symptoms become visible.

Inspection Robots in Agriculture

Tortuga AgTech [1]

Winner of the 2024 Agricultural Robot of the Year award, Tortuga AgTech has revolutionised the field of automated harvesting robots. Tortuga's harvesting robots are autonomous machines designed for harvesting strawberries and grapes, using two robotic arms that “identify, pick and handle fruit gently”. Each arm has a camera at its end; the AI algorithms identify the stem of the fruit and command the arm's two fingers to remove the fruit from the stem. The AI can also “differentiate between ripe and unripe fruit”, ensuring that fruit is picked only when it is ready. After picking a fruit, the robot places it in one of the many containers in its body, allowing it to pick “tens of thousands of berries every day”.

VegeBot [2]

Designed at the University of Cambridge, the VegeBot is a robot for harvesting iceberg lettuce, a crop that is particularly difficult to harvest with robots because it is fragile and grows “relatively flat to the ground”. This makes the robot more likely to damage the soil or other lettuces in its surroundings. The VegeBot has a built-in camera that is used to identify the iceberg lettuce and check its condition, including its maturity and health. Its machine learning algorithm then decides whether to pick the lettuce; if so, the robot cuts it off at the ground, gently picks it up and places it on its body.

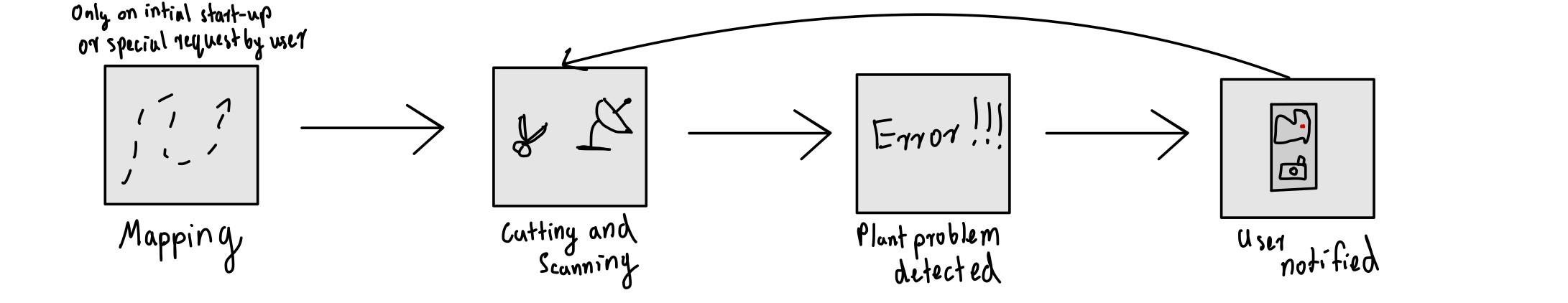

Regular Robot Operation

As with any piece of technology, it is important that users understand how to operate the robot properly and how it functions in general. Upon the robot's first use in a new garden, or when the garden owner has changed the garden layout, the mapping process must be started from the app. Mapping produces a 2D map of the garden, which later allows the robot to traverse the entire garden efficiently during regular operation without leaving any part unvisited. This feature is comparable to the mapping done by the iRobot Roomba. Once the initial setup phase is complete, the robot can begin its normal operation: it leaves its storage place, traverses the garden cutting grass, and scans the surrounding plants with its camera. Whenever the robot detects an irregularity in one of the plants, it notifies the user through the app, sending a picture of the affected plant and its location on the map of the garden. The user can then browse all plants in their garden that need attention. As a result, the user not only has a well-kept lawn but is also aware of every unhealthy plant, keeping the garden in optimal condition at all times.
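The notification step described above can be sketched as a small data model for what the robot sends to the app. This is a minimal illustration under our own assumptions: the `PlantAlert` and `GardenReport` classes, their fields, and the `alerts_to_review` helper are hypothetical and not part of any existing app or API, and map coordinates are assumed to be metres in the frame of the garden's 2D map.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PlantAlert:
    """Hypothetical record sent to the app when the robot spots an unhealthy plant."""
    x_m: float          # position on the 2D garden map (metres, assumed frame)
    y_m: float
    image_path: str     # picture of the plant taken by the robot's camera
    issue: str          # short description, e.g. "yellowing leaves"
    resolved: bool = False


@dataclass
class GardenReport:
    """Collects the alerts from one run so the user can review them in the app."""
    alerts: List[PlantAlert] = field(default_factory=list)

    def add(self, alert: PlantAlert) -> None:
        self.alerts.append(alert)

    def alerts_to_review(self) -> List[PlantAlert]:
        # Only plants the user has not yet taken care of are shown.
        return [a for a in self.alerts if not a.resolved]


report = GardenReport()
report.add(PlantAlert(2.5, 7.0, "img_001.jpg", "yellowing leaves"))
report.add(PlantAlert(10.0, 3.2, "img_002.jpg", "aphid infestation", resolved=True))
print(len(report.alerts_to_review()))  # 1
```

Keeping the map position and the photo in the same record is what lets the app show both the picture and the plant's location together.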

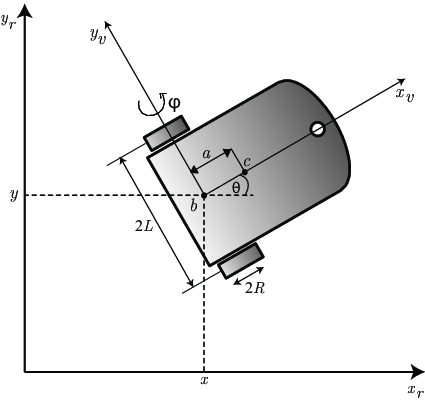

Maneuvering: Patryk

Movement

One of the most important design decisions when creating a robot or machine with some form of mobility is choosing the mechanism it will use to traverse its operational environment. This decision is not always easy, as many options exist, each with its own pros and cons. It is therefore important to weigh the pros and cons of each method and then decide which is most appropriate for a given scenario. In the following section I explore these methods to see which are expected to work best in the environment our robot will operate in.

Wheeled Robots

It may be no surprise that the most popular method of locomotion in the robotics industry is still circular wheels. This is because wheeled robots are much easier to design and model [7]. They do not require complex mechanisms for flexing or rotating an actuator; they can be fully functional simply by rotating a motor in one of two directions. This lets the engineer focus on the robot's main functionality without having to worry about the many complexities that other locomotion mechanisms introduce when those are not necessary. Wheels are also convenient because they rarely take up much space in the robot. Furthermore, as stated by Zedde and Yao from Wageningen University, these robots are most often used in industry because of their simple operation and simple design [8]. Although wheeled robots may seem like a single, simple category, there are a few subcategories of this mechanism that are important to distinguish, as each has its own benefits and issues.

Differential Drive

Differential drive relies on the independent rotation of the wheels on each side of the robot. Each wheel (or each side's set of wheels) is driven independently, but all wheels work together as one unit to move and turn the robot. By varying the relative rotation speeds of its left and right wheels, the robot can drive straight, follow curves, or turn on the spot without an additional steering mechanism [9]. To illustrate, suppose the robot wants to make a sharp left turn: the left wheels become idle while the right wheels rotate at maximum speed. Both sides rotate independently, yet they serve the same movement goal.
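The relationship between wheel speeds and robot motion in differential drive can be written down in a few lines. This is a minimal sketch assuming ideal wheels with no slip; the function names are our own. The forward speed is the average of the two wheel speeds and the turn rate is their difference divided by the distance between the wheels.

```python
import math


def diff_drive_velocity(v_left: float, v_right: float, wheel_base: float):
    """Forward kinematics of a differential-drive robot.

    v_left, v_right: linear speeds of the left and right wheels (m/s)
    wheel_base: distance between the left and right wheels (m)
    Returns (v, omega): forward speed (m/s) and turn rate (rad/s, positive = left turn).
    """
    v = (v_right + v_left) / 2.0
    omega = (v_right - v_left) / wheel_base
    return v, omega


def step_pose(x, y, theta, v_left, v_right, wheel_base, dt):
    """Advance the pose (x, y, heading in rad) by one small time step using Euler integration."""
    v, omega = diff_drive_velocity(v_left, v_right, wheel_base)
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    return x, y, theta


# Sharp left turn: left wheel idle, right wheel at 1 m/s, wheels 0.5 m apart.
print(diff_drive_velocity(0.0, 1.0, 0.5))  # (0.5, 2.0)
```

Equal wheel speeds give straight-line motion (zero turn rate), and equal but opposite speeds make the robot spin in place. The pose update is also where wheel skidding hurts: if a wheel slips, the commanded speeds no longer match the robot's real motion and the estimated position drifts.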

| Pros | Cons |
|---|---|
| Easy to design | Difficulty maintaining straight-line motion on uneven terrain |
| Cost-effective | Wheel skidding can corrupt the position estimate and confuse the robot about its location |
| Easy maneuverability | Sensitive to weight distribution (a big issue when carrying a container of moving water) |
| Robust (less prone to mechanical failures) | |
| Easy control | |

Omni Directional Wheels

Omni-directional wheels are a specialized type of wheel with rollers or casters set at angles around their circumference. This configuration allows a robot with these wheels to move easily in any direction, whether laterally, diagonally, or rotationally [10]. Because each wheel is driven independently while its rollers let it slide freely sideways, these wheels provide great agility and precision, making this method ideal for applications that require precise navigation and positioning. The main difference from differential drive is that omni-directional wheels can move in any direction without first turning the whole robot, thanks to the specially designed rollers on each wheel.
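To show how an omni-directional base moves sideways without turning, here is a minimal inverse-kinematics sketch. It assumes a three-wheel layout with wheels spaced 120° apart, each driving tangentially at the same distance from the robot's centre; this layout and the function name are our own illustrative choices, and four-wheel layouts are also common.

```python
import math


def kiwi_wheel_speeds(vx: float, vy: float, omega: float, radius: float,
                      wheel_angles_deg=(0.0, 120.0, 240.0)):
    """Inverse kinematics for a three-wheel omni-directional base.

    vx, vy: desired body velocity in the robot frame (m/s)
    omega: desired rotation rate (rad/s)
    radius: distance from the robot centre to each wheel (m)
    Each wheel is assumed to drive tangentially to the circle it sits on,
    while its free rollers let it slide along its own axle.
    Returns the required rim speed of each wheel (m/s).
    """
    speeds = []
    for angle_deg in wheel_angles_deg:
        a = math.radians(angle_deg)
        # Project the contact-point velocity onto the wheel's drive direction.
        speeds.append(-math.sin(a) * vx + math.cos(a) * vy + radius * omega)
    return speeds


# Translate along the robot's y-axis without rotating.
print([round(s, 3) for s in kiwi_wheel_speeds(0.0, 1.0, 0.0, 0.2)])  # [1.0, -0.5, -0.5]
```

Any combination of (vx, vy) is reachable without first rotating the robot, which is the advantage described above; the cost is that all motors must be coordinated continuously.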

| Pros | Cons |
|---|---|
| Allows complex movement patterns | Complex design and implementation |
| Superior maneuverability in any direction | Limited load-bearing capacity |
| Efficient rotation and lateral movement | Higher manufacturing costs |
| Ideal for tight spaces and precision tasks | Reduced traction on uneven terrains |
| Enhanced agility and flexibility | Susceptible to damage in rugged environments |

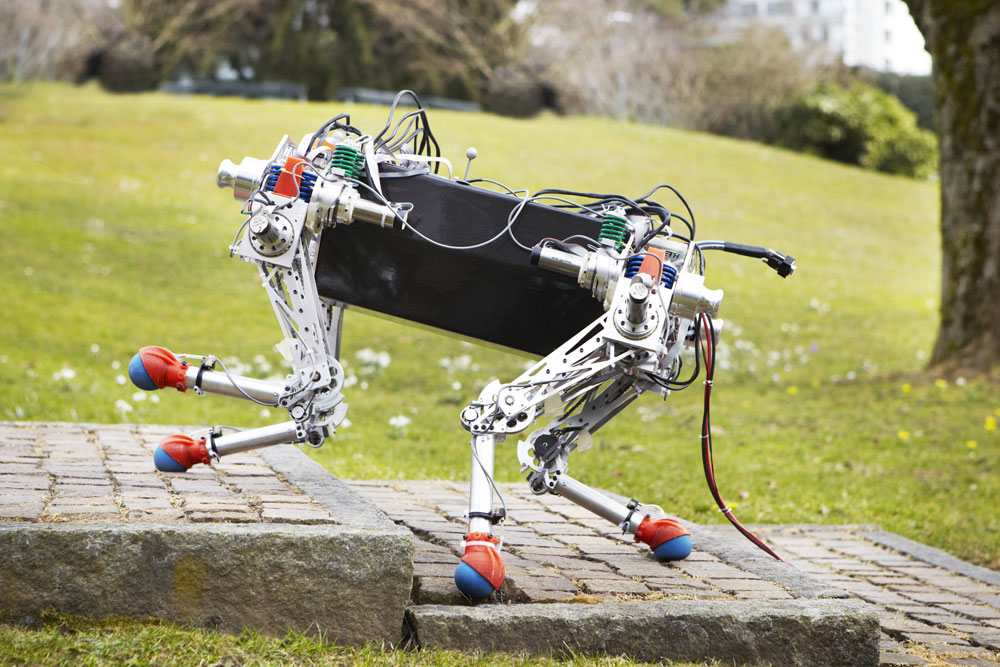

Legged Robots

Over millions of years, organisms have evolved in thousands of different ways, giving rise to many different forms of brain function, ways of perceiving the world, and, most relevant to this discussion, methods of movement. It is no coincidence that many land animals have evolved some form of legs to traverse their habitats: legs are a very effective method that offers great versatility and adaptability to any obstacle or problem an animal might face [11]. The same holds for legged robots; legs provide superior functionality to many other locomotion mechanisms because they can rotate and operate freely along all axes. However, this great mobility comes at the cost of a very difficult design, one that top institutions and companies still struggle with today [12].

| Pros | Cons |
|---|---|
| Versatility in Terrain | Complexity in Design |
| Obstacle Negotiation | Power Consumption |
| Stability on Uneven Ground | Sensitivity to Environmental Changes |
| Human-Like Interaction | Limited Speed |
| Efficiency in Locomotion | Maintenance Challenges |

Tracked Robots

Tracked robots, characterized by their continuous track systems, offer a dependable method of traversing terrain and are found in applications across various industries. The continuous tracks, consisting of connected links, are looped around wheels or sprockets, providing a continuous band that allows effective and reliable movement over many different surfaces, terrains and obstacles [13]. It is therefore no surprise that their best-known uses include vehicles that operate on uneven and unpredictable terrain, such as tanks. Since tracks are flexible, such robots can often overcome small obstacles simply by driving over them. This is particularly favorable for our robot, as gardens are never perfectly flat and are often littered with natural obstacles such as stones, dents in the surface, or branches that have fallen in strong wind.

| Pros of Tracked Robots | Cons of Tracked Robots |
|---|---|
| Superior Stability | Complex Mechanical Design |
| Effective Traction | Limited Maneuverability |
| Versatility in Terrain | Terrain Alteration |
| High Payload Capacity | Increased Power Consumption |
| Efficient Over Obstacles | |
| Consistent Speed | |

Hovering/Flying Robots

Hovering/flying robots offer by far the most unusual form of movement of those listed. This method unlocks a wide range of new possibilities, as the robot no longer has to consider on-ground obstacles such as rocks or uneven terrain. The robot can view and monitor a very large area from a single position by flying at high altitude, and quickly detect major problems across it. Flying also lets the robot minimize its travel distance, since it can move from point A to point B in a straight line, saving energy and time. However, as with any solution, flying/hovering has major drawbacks. It is by far the most expensive method, as flying apparatus is far more costly and high-maintenance than any other solution. This makes it impractical and likely well beyond the technological needs and requirements of our gardening robot. Furthermore, it operates best in large open fields, which suits the large farms of the agriculture industry, but that is not the aim of the robot we are designing. Most private gardens are small, so its main strength could not be used. Additionally, an aerial robot would likely have difficulty maneuvering through the tight spaces of a private garden and would have to avoid many low-hanging branches or bushes, ultimately making its operation unsafe.

| Pros | Cons |
|---|---|
| Versatile Aerial Mobility | Limited Payload Capacity |
| Rapid Deployment | Limited Endurance |
| Remote Sensing | Susceptibility to Weather |
| Reduced Ground Impact | Regulatory Restrictions |
| Dynamic Surveillance | Security and Privacy Concerns |
| Efficient Data Collection | Initial Cost and Maintenance |

Sensors

Sensors are a fundamental component of any robot that must interact with its environment, as they aim to replicate the sensory organs that allow us to perceive and understand the world around us [14]. Unlike with living organisms, however, engineers choose exactly which sensors their robot needs, and they must choose carefully: enough to give the robot its full functionality, but without redundant options that make the robot unnecessarily expensive. This decision is usually based on researching the sensors available on the market that relate to the problem at hand and selecting those that most accurately fulfill the robot's requirements [15]. In this section we focus on sensors that will help our robot traverse its environment, a garden. The sensors we select must therefore work under constantly changing lighting and cope with erroneous inputs caused by strong wind or uneven terrain. Additionally, unlike the locomotion decision in the previous section, a single type of sensor or system is rarely sufficient; most robots must implement some form of sensor fusion to operate appropriately, and our robot is no exception [16].

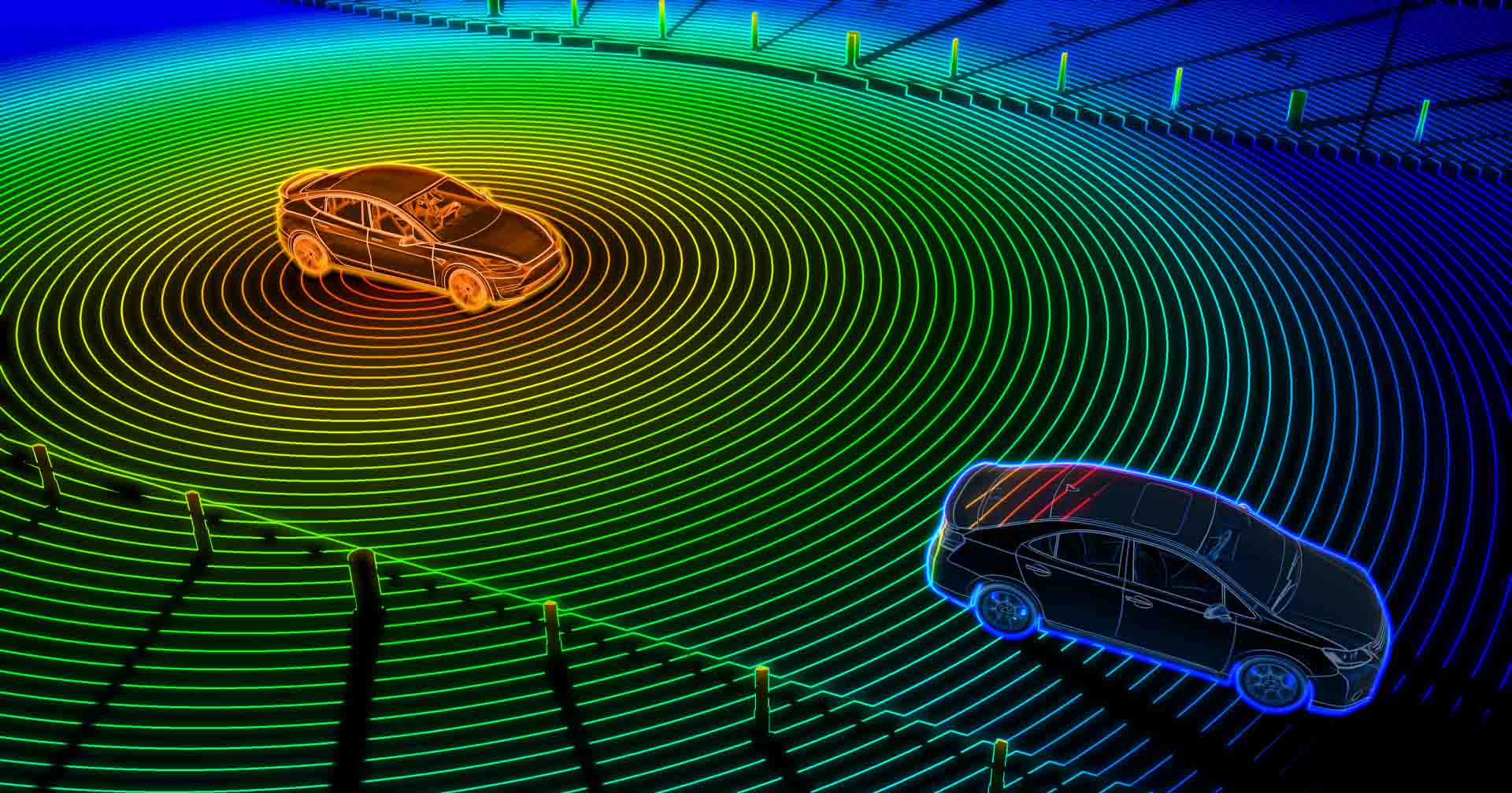

LIDAR sensors

LIDAR stands for Light Detection and Ranging. These sensors allow robots to navigate their environment effectively, as they provide object perception, object identification and collision avoidance [17]. They work by emitting laser pulses into the environment and measuring how long each pulse takes to return to the receiver, which determines the distance to nearby objects and reveals their shapes. LIDARs therefore provide robots with a vast amount of crucial information and even allow them to perceive the world in 3D. This means that robots can not only see the closest object but, when faced with an obstacle, can immediately work out possible ways to avoid it and move around it [18].
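The time-of-flight principle described above reduces to one formula: the pulse travels to the object and back, so the distance is d = c·t/2, where c is the speed of light and t the measured round-trip time. A minimal sketch follows; it ignores sensor-specific calibration, and in practice a LIDAR unit reports distances directly rather than raw times.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def lidar_distance(round_trip_time_s: float) -> float:
    """Distance to a target from the round-trip time of a laser pulse.

    The pulse travels to the object and back, so the one-way distance is
    half of (speed of light x elapsed time).
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


# An echo returning after 20 nanoseconds corresponds to an object about 3 m away.
print(round(lidar_distance(20e-9), 3))  # 2.998
```

The example also shows why LIDAR electronics must be fast: resolving distances at the centimetre level means timing differences well below a nanosecond.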

LIDARs are often engineers' preferred option for robots that operate outdoors, as they are minimally influenced by weather conditions [19]. Many sensors rely on visual imaging or sound, both of which are heavily disturbed in difficult weather, whether by rain on a camera lens or by the sound of rain interfering with acoustic sensors; this is not the case with LIDARs, as their laser technology does not malfunction in these scenarios. However, an issue our robot is likely to face when using a LIDAR sensor is sunlight contamination [20]: during the daytime, sunlight generates noise in the sensor's data and can introduce errors. Since our robot needs to work optimally during the daytime, this must be considered. On the other hand, LIDAR has many additional advantages that would truly benefit our robot, such as the ability to function in complete darkness and immediate data retrieval. This would allow users to start the robot before going to sleep and wake up to a complete report on their garden's status. Furthermore, these features would allow the robot to work in a dynamic and constantly changing environment, which is essential for operating in a garden. The outdoors can never be a fully controlled environment, and this has to be considered in the design of the robot.

As can be seen, the LIDAR sensor has many excellent features that our robot will likely require, making it a very important candidate for our next design decisions.

Boundary Wire

A boundary wire is likely the most cost-efficient and commonly implemented technique in the state-of-the-art garden robots on the consumer market today. It is not a complicated technology, but it is very effective for robot navigation. A boundary wire acts as a virtual barrier that the robot cannot cross, similar to a geofence in drone operation [21]. To use it, the user must first lay the wire along the boundaries of the garden and then bury it approximately 10 cm below the surface, so that it is safe from external factors. This is a tedious task, but it only has to be completed once, after which the robot is fully operational and will never leave the boundaries set by the user. The user should take their time during this first setup, as any later change requires digging up many meters of wire and burying it again after relocation.

The boundary wire communicates with the robot by carrying a low-voltage signal (around 24 V) that is picked up by a sensor on the robot [22]. When the robot detects this signal, it knows that the wire is beneath it and that it should not continue moving in that direction. As described above, the boundary wire is a very simple technology that, with a small amount of effort from the user, handles basic navigation. However, its functionality is fairly limited: it cannot detect objects within the area of operation and therefore cannot help the robot avoid them, so the environment has to be kept clear throughout the robot's operation.
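The wire-following behaviour described above is essentially a simple reflex rule: keep driving until the wire signal is detected, then turn away. Below is a minimal sketch under our own simplifying assumptions: the robot's sensor is reduced to a single normalised signal strength, and the threshold value and function name are hypothetical (real mowers process the wire signal in more elaborate, manufacturer-specific ways).

```python
import random

# Hypothetical threshold on the normalised boundary-signal strength picked up
# by the robot's sensor; a real product would calibrate this per installation.
WIRE_SIGNAL_THRESHOLD = 0.6


def boundary_wire_step(signal_strength: float, heading_deg: float,
                       rng: random.Random) -> float:
    """Simple reflex rule: turn away when the wire signal is strong, otherwise keep going.

    Returns the new heading in degrees (0 to 360).
    """
    if signal_strength >= WIRE_SIGNAL_THRESHOLD:
        # Wire detected under the robot: turn roughly around, with some randomness
        # so that the robot does not keep retracing the same line.
        return (heading_deg + 180.0 + rng.uniform(-45.0, 45.0)) % 360.0
    return heading_deg


rng = random.Random(0)
print(boundary_wire_step(0.1, 90.0, rng))  # 90.0 (no wire, keep heading)
```

Nothing in this rule can see objects inside the boundary, which is exactly the limitation noted above.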

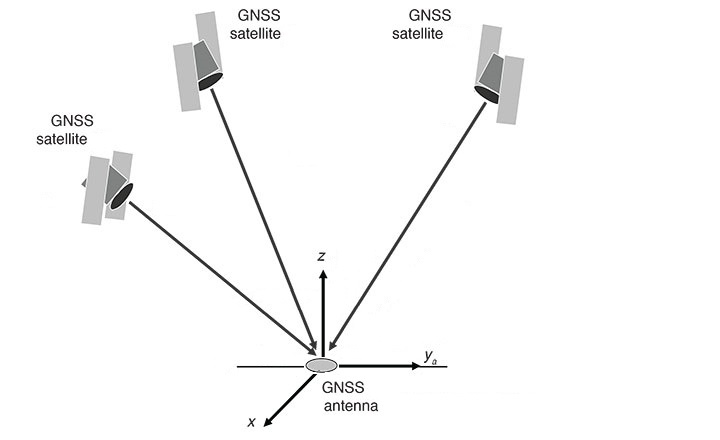

GPS/GNSS

GPS/GNSS are systems of satellites deployed in space whose signals allow robots and other devices to determine their position. Over the past few years these systems have become extremely accurate and can position devices to within about a meter [23]. Positioning works by having the receiver estimate its distance to multiple satellites from their signals and combine these distances to establish its location, a process commonly referred to as triangulation (more precisely, trilateration) [24]. Using this sensor in our robot is very encouraging, as it has been proven effective in large-scale agriculture for many years, particularly in the precision farming domain [25]. An important distinction is that between GPS and GNSS. Although GPS is likely the more familiar term from navigation apps, it is only one part of GNSS, which covers all global satellite navigation constellations; GPS is simply one of them. Equipped with a receiver that communicates with satellites and fetches its location at all times, our robot will be able to precisely pinpoint the locations of sick plants or any plants that need care and send that information to the user's device. GNSS therefore promises to be a key component of our robot design.
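The geometry behind a position fix from distances (trilateration) can be illustrated in two dimensions. Real GNSS receivers solve the problem in three dimensions and must also estimate their own clock offset, which is why at least four satellites are needed; the function below, with three fixed reference points, is only a simplified sketch of the idea, and its name is our own. Subtracting the first circle equation from the other two removes the squared unknowns and leaves a 2×2 linear system.

```python
def trilaterate_2d(anchors, distances):
    """Estimate a 2D position from three known reference points and measured distances.

    anchors: [(x1, y1), (x2, y2), (x3, y3)]
    distances: [d1, d2, d3]
    Subtracting the first circle equation from the other two gives a 2x2
    linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("reference points are collinear; position is ambiguous")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


# A point at (3, 4) measured from three reference points.
pos = trilaterate_2d([(0, 0), (10, 0), (0, 10)], [5.0, 65 ** 0.5, 45 ** 0.5])
print(tuple(round(v, 6) for v in pos))  # (3.0, 4.0)
```

With noisy distance measurements the three circles no longer meet at one point, which is one reason receivers use more satellites than the minimum and solve in a least-squares sense.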

Bump Sensors

Bump sensors, commonly referred to as collision or impact sensors, detect the physical contact or force a robot may encounter while traversing its environment [26]. They are used across many robotics applications to increase safety and enable greater automation. These devices are crucial in robotics and in many types of vehicles, as they let the robot replicate the human sense of touch and interact with its environment through a tactile interface.

To provide this feature, bump sensors typically consist of accelerometers, devices that detect and measure changes in acceleration, or simply a switch that is pressed as soon as something in the environment applies pressure to it [27]. In many applications, the contact the robot experiences is with larger objects, so the sensor need not be very sensitive. This is not the case for our gardening robot, which must account for smaller and more fragile objects and therefore requires a much more sensitive sensor. Bump sensors are most commonly used to ensure a robot does not drive into and collide with large objects: they let the robot detect that it must change direction before continuing its operation. Although in many industries bump sensors are a last line of defense against the robot breaking itself or destroying important elements in its environment, in our robot detecting an object through this sensor is much less of an issue [28]. The robot would simply register that it has collided with an object and change its direction of motion, with no further action needed. This should rarely happen anyway, since the LIDAR sensor should detect the obstacle beforehand; nevertheless, technology is not always reliable, and a backup system reduces errors in operation, especially given possible LIDAR faults caused by sunlight contamination.
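For the accelerometer variant described above, collision detection can be sketched as looking for a sudden jump between consecutive readings. This is a minimal illustration, not a production algorithm: the function name, the sample readings and the threshold are hypothetical, and a gardening robot would need a lower (more sensitive) threshold than an industrial one.

```python
def detect_bump(accel_magnitudes, threshold):
    """Return the index of the first sample where the acceleration jumps by more
    than `threshold` relative to the previous sample, or None if there is no bump.

    accel_magnitudes: accelerometer readings (m/s^2) sampled at a fixed rate.
    threshold: jump size treated as a collision (lower = more sensitive).
    """
    for i in range(1, len(accel_magnitudes)):
        if abs(accel_magnitudes[i] - accel_magnitudes[i - 1]) > threshold:
            return i
    return None


readings = [9.8, 9.9, 9.7, 12.6, 9.8]  # hypothetical data with a sudden jump at index 3
print(detect_bump(readings, threshold=1.5))  # 3
print(detect_bump(readings, threshold=5.0))  # None
```

Choosing the threshold is the same sensitivity trade-off discussed above: too low and bumpy terrain triggers false collisions, too high and soft contact with a plant goes unnoticed.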

Ultrasonic sensors

Ultrasonic sensors, or in simpler terms sound-based sensors, are another type of sensor that lets a robot measure the distance between its current position and objects in its environment. They are widely used in robotics, for example in liquid level detection, wire break detection, or even counting the number of people in an area. Their strength is that they give robots a form of depth perception through echolocation, similar to that of a dolphin [29].

Ultrasonic sensors work by emitting high-frequency sound waves from a transmitter and measuring the time it takes for the waves to bounce back to a receiver. From this, the robot calculates the distance to the object, enabling precise navigation and obstacle detection. Although this is similar to how a LIDAR sensor works, it allows the robot to operate in a frequently changing environment without expensive state-of-the-art technology. One limitation is that these sensors tend to perform worse at detecting softer materials, which our team will have to take into account to make sure the sensor detects plants the robot is approaching [30].
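The distance calculation works like the LIDAR case, but with the speed of sound instead of light. Outdoors this matters more, because the speed of sound changes with air temperature. The sketch below uses the common linear approximation of about 331.3 + 0.606·T m/s for dry air at T °C; the function names are our own.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temperature temp_c (deg C)."""
    return 331.3 + 0.606 * temp_c


def ultrasonic_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Distance to an object from the echo's round-trip time (sound travels there and back)."""
    return speed_of_sound(temp_c) * echo_time_s / 2.0


# A 10 ms echo at 20 deg C corresponds to roughly 1.72 m.
print(round(ultrasonic_distance(0.010), 2))  # 1.72
```

Ignoring temperature would introduce a small but systematic error between cold mornings and warm afternoons, which is why compensating for it is a cheap improvement for a garden robot.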

In our gardening robot, ultrasonic sensors could again supplement more advanced sensors such as the LIDAR. As a simple and reliable solution, they improve the robot's operational reliability across the very wide range of gardens it could encounter.

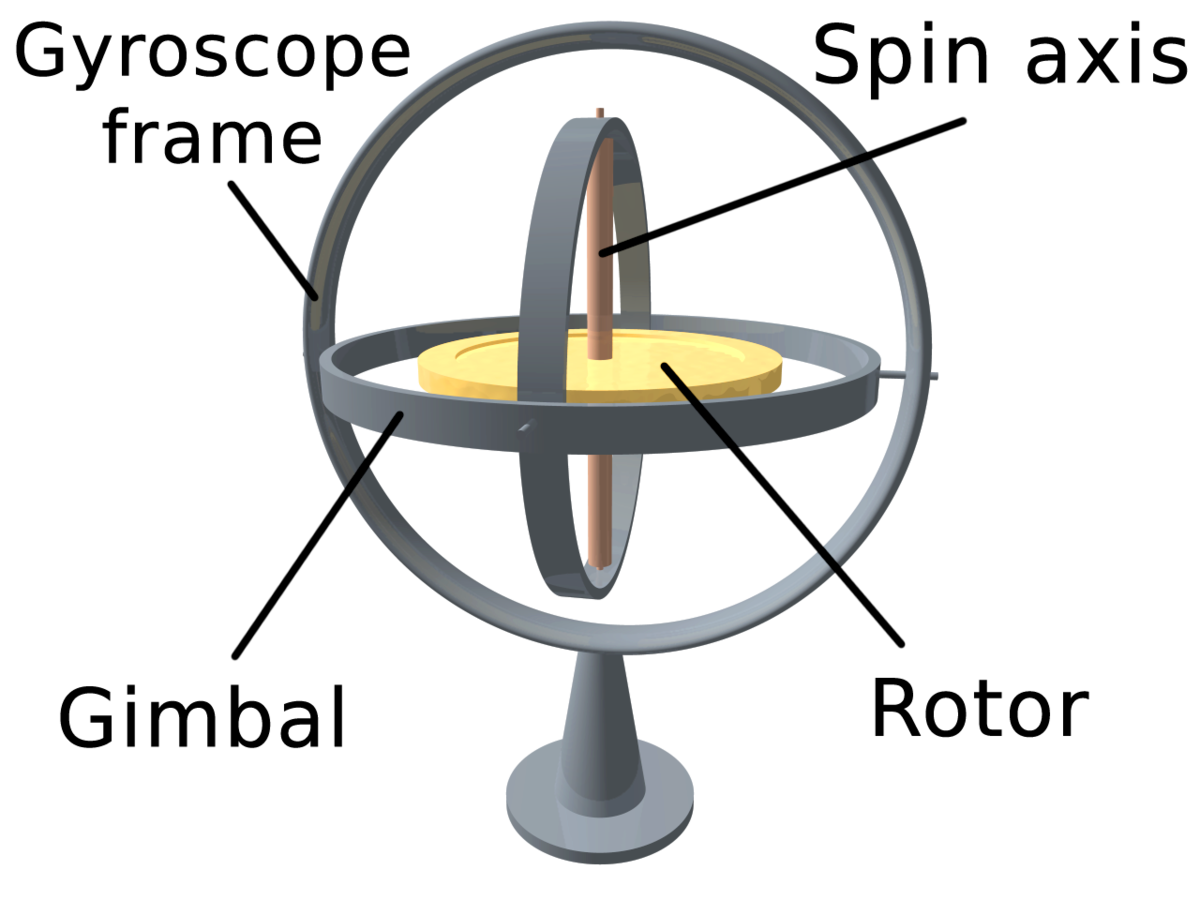

Gyroscopes

Gyroscopes are essential components in robotics, providing stability and precise orientation control across a wide range of industries. They rely on the conservation of angular momentum to keep a constant reference direction, regardless of how the device around them is rotated[31]. This allows robots to improve their stability and therefore their operational abilities.

A classic mechanical gyroscope consists of a spinning mass mounted on a set of gimbals. When the mounting rotates, conservation of angular momentum keeps the spinning mass in the same orientation, so the gyroscope provides a fixed reference. Robots today usually use small MEMS gyroscopes instead, which measure angular velocity rather than holding a spinning mass, but the purpose is the same: the robot knows its current angle relative to the ground, and when that angle grows too large its control system can react so that the robot does not flip over or fall during operation.
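In software, the gyroscope's role reduces to tracking an angle. The sketch below assumes a MEMS gyroscope that reports angular rate in degrees per second at a fixed sample interval, integrates it into a tilt estimate and flags the first sample beyond a safety limit; the 25° limit is a hypothetical value, not a requirement we have set.

```python
from typing import Iterable, Optional


def integrate_tilt(rates_dps: Iterable[float], dt_s: float,
                   start_deg: float = 0.0) -> list[float]:
    """Integrate angular-rate samples (deg/s) into tilt angles (deg).

    Rectangle-rule integration: angle += rate * dt for each sample.
    """
    angle = start_deg
    angles = []
    for rate in rates_dps:
        angle += rate * dt_s
        angles.append(angle)
    return angles


def first_unsafe_sample(angles_deg: list[float],
                        limit_deg: float = 25.0) -> Optional[int]:
    """Index of the first sample whose absolute tilt exceeds limit_deg, or None."""
    for i, angle in enumerate(angles_deg):
        if abs(angle) > limit_deg:
            return i
    return None


if __name__ == "__main__":
    # Robot pitching up at a steady 10 deg/s, sampled every 0.1 s for 3 s.
    tilt = integrate_tilt([10.0] * 30, dt_s=0.1)
    print(first_unsafe_sample(tilt))  # 25, when the estimate reaches 26 degrees
```

Integrating a gyroscope alone accumulates drift over time, so practical robots usually combine it with an accelerometer (for example with a complementary or Kalman filter); this sketch leaves that step out.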

Since many relevant sensors exist, we discuss only the most important ones. Sensors such as lift sensors, incline sensors and camera systems could also be used for navigation, but for our robot they are either too complex or unnecessary.

Mapping

Mapping will be one of the most important features of our robot: it is the very first thing the robot does after the user takes it out of its box, and the rest of its operation relies on the quality of this process. During mapping, the robot familiarizes itself with its operating environment by traversing it freely, without performing any of its regular tasks, and simply analyzing where the boundaries of the environment are and roughly what shape it has. This gives the robot the knowledge it needs during normal operation throughout its lifecycle: where it is in the garden, which areas it has already visited during the current job and which areas it still has to visit. Essentially, mapping turns the robot from a simple reflex agent into an agent that stores knowledge and can use it to make better-informed decisions and operate more efficiently.

Mapping can be done in 3D or in 2D, depending on the needs of the robot and the user. We initially considered 3D mapping, which would let the robot also memorize plant locations for easier access in the future. However, plants grow and change continuously, so such a map would quickly become outdated and the mapping process would have to be repeated almost daily, which is very inefficient. We therefore decided to implement 2D mapping, similar to that of Roomba vacuum cleaning robots, where the purpose of the map is to learn the dimensions and shape of the garden. As usual in design problems, there is rarely a single solution, and mapping is no exception. Nonetheless, the most suitable method for our robot is Random Exploration Mapping.

Random Exploration Mapping

In Random Exploration Mapping, the robot moves freely across the garden, detecting obstacles in its way and boundaries it cannot cross. After enough time has passed for the robot to explore its surroundings, it will have found all areas available for traversal and can connect them into one coherent map. This method is likely to take quite a long time, but once the robot has discovered an area and closed it off with all of its boundaries, it moves on to new areas, so its knowledge of the garden keeps growing.
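Random exploration can be illustrated on a grid: the garden is divided into cells, the robot takes random steps, and every cell it enters or bumps into is written into its own map. This is a simplified sketch under our own assumptions (a known grid size, four-directional moves, perfect obstacle detection on contact); `explore` and `free_area_m2` are hypothetical helper names.

```python
import random

UNKNOWN, FREE, BLOCKED = "?", ".", "#"
MOVES = ((-1, 0), (1, 0), (0, -1), (0, 1))


def explore(garden: list[str], start: tuple[int, int], steps: int,
            seed: int = 0) -> list[str]:
    """Build a 2D map of `garden` by random exploration.

    `garden` is the hypothetical ground truth ('#' obstacle or fence,
    '.' traversable). The robot never reads it directly: it only learns a
    cell's state when it tries to move into that cell.
    """
    rng = random.Random(seed)
    rows, cols = len(garden), len(garden[0])
    known = [[UNKNOWN] * cols for _ in range(rows)]
    r, c = start
    known[r][c] = FREE
    for _ in range(steps):
        dr, dc = rng.choice(MOVES)
        nr, nc = r + dr, c + dc
        if not (0 <= nr < rows and 0 <= nc < cols):
            continue  # edge of the modelled area: treat it as a boundary
        if garden[nr][nc] == "#":
            known[nr][nc] = BLOCKED  # bumped into a boundary, stay in place
            continue
        known[nr][nc] = FREE
        r, c = nr, nc
    return ["".join(row) for row in known]


def free_area_m2(mapped: list[str], cell_size_m: float) -> float:
    """Traversable area found so far, assuming square cells."""
    return sum(row.count(FREE) for row in mapped) * cell_size_m ** 2


if __name__ == "__main__":
    garden = ["#####",
              "#...#",
              "#.#.#",
              "#...#",
              "#####"]
    mapped = explore(garden, (1, 1), steps=2000)
    print("\n".join(mapped))
    print(free_area_m2(mapped, cell_size_m=0.5), "m2")
```

Cells the robot never reached or probed stay marked `?`, which is how the map shows that exploration is incomplete; the traversable area of the garden then follows from the number of free cells.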

Occupancy Grid Mapping

VSLAM (Visual Simultaneous Localization and Mapping)

Hardware

Lawn Mowing Mechanism

Rotary Lawn Mowers

Rotary lawn mowers are the most common type of lawn mower. They have one or two steel blades spinning horizontally near the ground at around 3000 rpm (1). Because the blades cut at a fixed height, grass that is not standing upright is not cut well, so short grass is sometimes cut unevenly (2). Rotary lawn mowers usually have a cover over the blades, which protects people or animals that come close to the mower and keeps cut grass from flying everywhere and potentially staining the user's clothes (1). These mowers usually have internal combustion engines, but many are electric, powered either through a cord or by a rechargeable battery such as a lithium-ion battery (1).

| Pros | Cons |
|---|---|
| Compact | Not the best at cutting low grass |
| Simple | Noisy |
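To give a sense of why the blade cover matters, the blade tip speed follows from the rotation speed quoted above, using v = π·D·N / 60 with blade diameter D in metres and rotation speed N in rpm. The 0.5 m blade diameter below is our assumption for a typical walk-behind mower, not a figure from the cited sources.

```latex
v_{\text{tip}} = \frac{\pi D N}{60}
  = \frac{\pi \times 0.5\,\text{m} \times 3000\,\text{min}^{-1}}{60\,\text{s/min}}
  = 25\pi\,\text{m/s} \approx 78.5\,\text{m/s} \approx 283\,\text{km/h}
```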

Reel Lawn Mowers

The reel lawn mower is most commonly manual: the user pushes it around, and it has no engine to spin the blades (1). As the mower is pushed along the grass, the cylinder rotates about its central axis and the blades rotate with it (1). This type of mower is great for cutting low grass, as the blades “create an updraft that makes the grass stand up so it can be cut” (1). However, it does not cut tall or moist grass well, as its blades can get stuck (2). Manual reel mowers tend to be much cheaper than rotary mowers, although motorized ones can be just as expensive (2).

| Pros | Cons |
|---|---|
| Great at cutting low grass evenly | Not the best at cutting high grass |
| Very quiet (manual versions) | Motorized versions can be bulkier, as the cutting mechanism is separate from the engine |
| | Bad with damp grass |

Robust Construction

Cameras

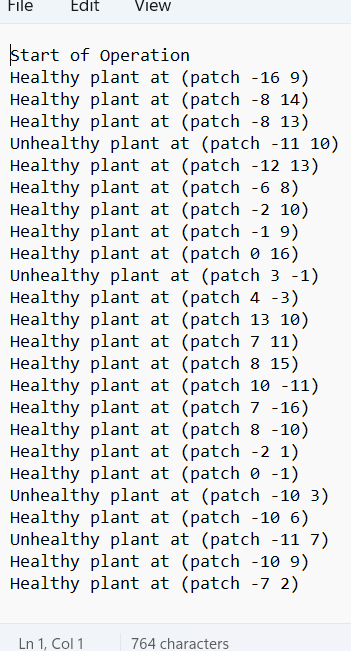

NetLogo Simulation

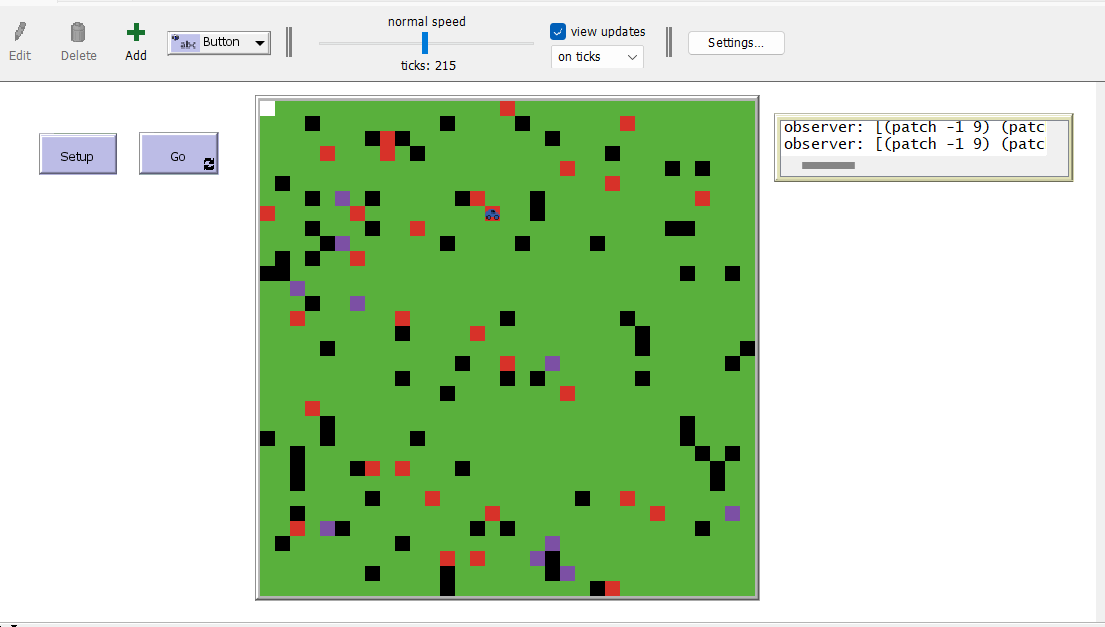

Our NetLogo deliverable visually simulates how the robot operates and how it communicates and interacts with the app. The simulation shows the robot during regular operation, traversing the mapped garden, trimming grass and scanning for sick plants. When the robot detects a sick plant, it drives as close as possible to the plant and sends its coordinates to the application, which can then display this information graphically to the user. So far, we have made the NetLogo model write directly to a text file, which can then be read by the app being developed in Android Studio.

This week I set up the first working version of the simulation, which I will demonstrate during Monday's meeting. In the current version, the robot starts at its charging station, modelled as a white patch. It then traverses the garden randomly and stays within its boundaries by avoiding black patches, which represent obstacles or fences. Red patches indicate healthy plants and violet patches indicate sick plants. The robot is represented by the car icon and moves one patch per tick. Below I present how the environment currently looks and what is printed to the text file the application will communicate with as the simulation runs.
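The behaviour of this first simulation version, together with the text-file link to the app, can be mirrored in a few lines of Python for readers unfamiliar with NetLogo. This is not our NetLogo code: the one-letter patch encoding, the 3×3 detection range and the `sick-plant <x> <y> tick <t>` line format are hypothetical stand-ins, and x/y here are column/row indices with y growing downwards, unlike NetLogo's `pycor`.

```python
import io
import random

# Hypothetical text encoding of the NetLogo patch colours described above.
STATION, OBSTACLE, HEALTHY, SICK, GRASS = "W", "B", "R", "V", "."
STEPS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def run(grid: list[str], ticks: int, out, seed: int = 0) -> set[tuple[int, int]]:
    """Random-walk the robot over `grid` and report sick plants to `out`.

    Each sick patch is reported once, as a line "sick-plant <x> <y> tick <t>"
    (a made-up format), as soon as it is within one patch of the robot.
    """
    rng = random.Random(seed)
    rows, cols = len(grid), len(grid[0])
    # The robot starts on its charging station (the white patch).
    r, c = next((i, j) for i in range(rows) for j in range(cols)
                if grid[i][j] == STATION)
    reported: set[tuple[int, int]] = set()
    for tick in range(ticks):
        # Scan the 3x3 neighbourhood around the robot for sick plants.
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                pr, pc = r + dr, c + dc
                if (0 <= pr < rows and 0 <= pc < cols
                        and grid[pr][pc] == SICK and (pr, pc) not in reported):
                    reported.add((pr, pc))
                    out.write(f"sick-plant {pc} {pr} tick {tick}\n")
        # Move one patch per tick to a random neighbour that is not black.
        options = [(r + dr, c + dc) for dr, dc in STEPS
                   if 0 <= r + dr < rows and 0 <= c + dc < cols
                   and grid[r + dr][c + dc] != OBSTACLE]
        if options:
            r, c = rng.choice(options)
    return reported


if __name__ == "__main__":
    garden = ["BBBBB",
              "BW.RB",
              "B...B",
              "B.V.B",
              "BBBBB"]
    log = io.StringIO()  # stands in for the text file the app reads
    run(garden, ticks=200, out=log)
    print(log.getvalue(), end="")
```

In the real setup, `out` would be the text file that the app reads; `io.StringIO` is used here only so the sketch runs without touching the disk.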

Interview

To confirm our decisions and to ask for clarifications and recommendations on the features our user group truly wants, we will conduct an interview with the owner of two private gardens in Poland. The interviewee also owns two grass-cutting robots, one made by Bosch and the other by Gardena, which he uses in his gardens. We believe his hands-on experience with similar state-of-the-art robots will help us solidify our requirements and improve our product as a whole. The interview will be conducted in Polish, as the interviewee is not fluent in English, and translated into English on the wiki.

Questions

- What is the current navigation system your robot uses?

- My current robot navigates by moving randomly around the garden, within the limits set by the boundary cables that came with the robot, which I dug into the ground.

- What issues do you see with it that you would like improved?

- I mean, the obvious thing would be, I guess, that if it were less random it might finish cutting the grass more quickly, but honestly I do not really see that as an issue, since it does not cost me any time: I do not have to monitor the robot anyway.

- How do you currently charge your robot, and how would you like to charge and store the plant identification robot?

- Currently my robot has a charging station that it returns to after it has finished cutting the grass. If something similar could be made for the plant identification robot, I would be very satisfied.

- Would you like the robot to map out your garden before its first usage to set its boundaries or would you like that to be done by the boundary wires your current robot uses?

- I feel my case is a bit special: I have already done the work of digging the cables into the ground, so I would not have to do anything again, and I would probably pick that. However, if I were a new customer, I think the mapping feature would be better, as I would not have to set up the whole cable system, which was tiring for me.

- Would you like the robot to map out your garden in order to pinpoint problems in your garden and display them on your phone, or would you just like to receive the GPS coordinates of the issue, and why?

- I feel like seeing a map of my garden in the app would always make my life simpler. However, if I simply get the GPS location, which I can then paste into Google Maps or some other application to see where it is, that would also be more than fine. As long as I know where the sick plant is, I will be happy.

- Are there any problems with movement that your current robots face when using wheels (getting stuck somewhere, etc.)?

- Generally I would say no. There are times, however, when the robot has to go over a brick path running through my garden and sometimes struggles to get up onto the ledge, but eventually it always finds a more even place and crosses there.

- Are there any hardware features that you believe would benefit your current robot?

- I wouldn't say the robot is missing anything in its core functionality. However, I recently installed solar panels on my house and they help me save on electricity, so maybe if the robot also had a small solar panel it would use less electricity as well. Besides that, I am not sure.

- How satisfied are you with the precision and uniformity of grass cutting achieved by the robot's mowing mechanism?

- I am very satisfied. I remember the store employee telling me that it cuts the grass evenly despite its random movement. I did not believe him, but I can truly say that over the time it operates, it manages to cut the grass everywhere within its area.

- Have you noticed any issues or areas for improvement regarding the battery performance or power management features?

- Not really. My robot operates until the battery drains, then returns to its charging station to charge, and once it is done charging it resumes its work. So I do not really see any issues with its battery, especially since it works the whole day, and I don't see any problems with it charging and returning to work.

- How well has the automated grass cutting robot endured exposure to various weather conditions, such as rain, heat, or cold?

- I cannot run the robot in the rain, and it really is not recommended either, so when it starts raining I just tell the robot to go back to its station. Regarding heat and cold, I've not seen any issues. Obviously it hasn't run much in cold temperatures, as I don't use it during the winter, when there is snow and no need for grass cutting.

- Have you observed any signs of wear or deterioration related to weather exposure on the robot's components or construction?

- No, not at all. The robot is still in very good condition after some years. I have seen that you can buy replacement parts if something breaks, but I have not needed to.

- What plant species do you currently own?

- Too many to name, if I'm being honest, but many flowers, bushes and trees.

- Have you always gravitated towards these species, or did you grow different species in the past?

- When my wife and I first bought the house around 2004, a gardener and some friends helped us decide how to decorate the garden with plants. When a plant dies, the gardener who comes each spring helps us decide whether to replace it with something new or with the same plant again.

- What health problems do your plants typically encounter?

- Probably the most important issue is plants drying out during the summer, as I often forget how much water each plant needs when the temperature is high. A few years ago I also had an insect outbreak that spread and forced me to dig up many flowers.

- Is there anything that you find confusing about the design of modern apps, and, if so, what?

- Obviously I am getting quite old and am not so good with new technology, so what I love about the apps I use is that their main features are on the home page and easily accessible. I have a hard time finding things if I have to navigate through many pages.

Survey

Questions

- Patryk

- What would be your preferred movement system on a gardening robot?

- Tracked Robots

- Wheel Robots

- Omni-Wheel robots

- Legged Robot

- Flying Robot

- Would you like the robot to be able to map out your garden to pinpoint exactly on the map where an issue occurs?

- Yes

- No

- How would you like the robot to be able to charge?

- Through a charging station similar to a Roomba's

- Charger manually plugged in and stored indoors by the user

- Solar panels on top of the robot (May be ineffective during cloudy days)

- Other

- Would you be willing to consider spending more money for a more expensive and accurate technology such as a LIDAR sensor for navigation or go for the cheaper and commonly used bump sensor?

- Cheaper bump sensor which may be less delicate with plants

- More expensive LIDAR, which can pinpoint plants and avoid them


- Briana

- Which one do you prefer:

- Having plenty of information about specific plants that you can browse through at any time.

- Just have information about the detected plant disease and how to combat it, without other overwhelming or currently irrelevant data.


- Raul S

- Which of the following features would you prioritize when considering the lawn mowing mechanism of an automated grass cutting robot?

- Precision and uniformity of grass cutting

- Ability to adjust cutting height and settings

- Safety features to prevent accidents or damage

- Durability and resistance to wear

- What factors would influence your satisfaction with the charging station of an automated grass cutting robot?

- Ease of docking and charging process

- Reliability and durability of the charging station

- Compatibility with other devices or accessories

- Additional features such as status indicators or remote control capabilities

- How important is battery life and power management efficiency when choosing an automated grass cutting robot?

- Very important

- Important

- Neutral

- Not important

- How essential is weatherproofing in your decision to purchase an automated grass cutting robot?

- Extremely important

- Important

- Somewhat important

- Not important


- Raul H

- Rares

- Ania

- Would it be useful to be able to schedule the robot to cut the grass at a specific time or on a specific schedule pattern?

- Yes

- No

- Would it be preferable for the app to notify the user to water the plants?

- Yes

- No

- How would the tasks identified by the robot best be communicated by the app?

- To-do list screen in the app (tasks can be ticked off when done to remove them)

- Notification screen in the app (push notifications are recorded so they can be viewed in more detail before disappearing)

- Map screen showing a red alert dot at the location where a task was recorded (clicking on the dot gives more information, and the alert is removed once the user marks it as completed)

Identifying plant Diseases

Diseases to identify.

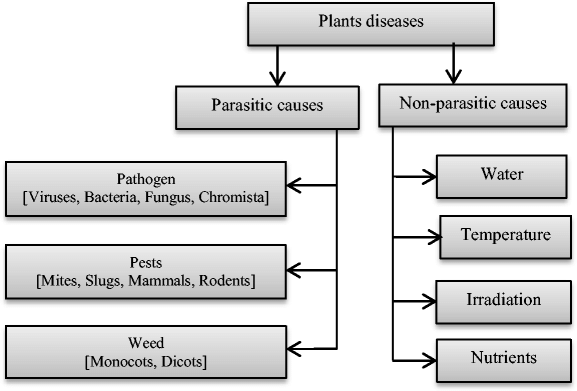

There is no doubt that taking care of plants can become overwhelming, especially because people do not always know what actions to take to help their plants. Moreover, every plant is different: some are very sensitive, while others require heavy care, which makes plant care messy and confusing for the novice gardener. Plants can be affected by diseases caused by fungi, viruses, bacteria and other factors. Symptoms include spots, dead tissue, fuzzy fungal spores, bumps, bulges and irregular coloration of the fruits. There are different types of plant pathogens, including bacteria, fungi, nematodes, viruses and phytoplasmas, and they can spread in different ways, such as through contact, wind, water and insects. It is important to identify the specific pathogen causing a disease in order to implement effective management strategies. To manage these diseases, the gardener must be equipped with the appropriate knowledge and tools: understanding the life cycle of the disease, identifying the signs early and applying the correct treatments promptly. Regular inspection and proper sanitation practices can also help prevent the spread of these diseases.

Plants can also be susceptible to various pests that contribute to their deterioration. Pests can cause significant damage by eating the leaves, stems or roots of the plant, or by introducing diseases; aphids, for instance, are known to spread plant viruses. Some pests, such as certain types of beetles, can also damage the plant by boring into its wood. Common pests include aphids, slugs, snails, caterpillars and beetles, as well as thrips, spider mites, leaf miners, scale insects, whiteflies, earwigs and cutworms. A gardener also has to watch out for weeds that grow among their plants and compete for precious resources. So how could we detect and remedy these problems?

Artificial Intelligence recognising diseases.

Hyperspectral imaging

Hyperspectral imaging captures many narrow spectral bands for every pixel, delivering significantly more information than a conventional camera and enabling more precise identification of colours and materials. It is therefore commonly used in agriculture to monitor the health and condition of crops, where it can identify several plant diseases before they show serious signs of trouble[32]. But could we bring it to the average gardener? Hyperspectral imaging is more reliable, but also more expensive: a hyperspectral camera currently costs from thousands to tens of thousands of dollars. However, the technology seems to be replicable at a lower price, as the VTT Technical Research Centre of Finland has managed to achieve it for only 150 dollars [33]. Affordable hyperspectral imaging for everyday gardening would be an exciting opportunity, as it could revolutionise domestic plant care by allowing individuals to detect diseases early and improve their plants' health. However, further research and development are required to make this technology widely accessible and user-friendly.
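As an illustration of how per-pixel spectral data can become a plant health indicator, the sketch below computes NDVI (normalized difference vegetation index), a widely used index comparing near-infrared and red reflectance: healthy vegetation reflects strongly in the near-infrared and absorbs red light, so stressed leaves tend to score lower. This is not a method from the cited sources; the band centres (670 nm and 800 nm), the function names and the reflectance values are assumptions for the example.

```python
def nearest_band(wavelengths_nm: list[float], target_nm: float) -> int:
    """Index of the band whose centre wavelength is closest to target_nm."""
    return min(range(len(wavelengths_nm)),
               key=lambda i: abs(wavelengths_nm[i] - target_nm))


def ndvi_image(cube: list[list[list[float]]], wavelengths_nm: list[float],
               red_nm: float = 670.0, nir_nm: float = 800.0) -> list[list[float]]:
    """Per-pixel NDVI = (NIR - red) / (NIR + red).

    cube[row][col] is the reflectance spectrum of one pixel, with one value
    per entry of wavelengths_nm.
    """
    red_i = nearest_band(wavelengths_nm, red_nm)
    nir_i = nearest_band(wavelengths_nm, nir_nm)
    result = []
    for row in cube:
        out_row = []
        for spectrum in row:
            red, nir = spectrum[red_i], spectrum[nir_i]
            total = nir + red
            # Completely dark pixels carry no information; report 0.
            out_row.append((nir - red) / total if total else 0.0)
        result.append(out_row)
    return result


if __name__ == "__main__":
    bands = [450.0, 550.0, 670.0, 800.0]
    # One row, two pixels: a vigorous leaf and a stressed leaf (made-up values).
    cube = [[[0.04, 0.10, 0.05, 0.50], [0.06, 0.12, 0.20, 0.30]]]
    print(ndvi_image(cube, bands))
```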

Digital Camera imaging

Methods of identification and detection.

In recent years, plant recognition has moved away from manual approaches carried out by human experts, which are laborious, time-consuming and context-dependent (a task may, after all, require time-sensitive plant recognition when no expert is available in the area), towards automated methods based on the analysis of leaf images. These emerging technologies have been used with great success to streamline plant recognition by exploiting shape and color features extracted from the images. Using classification algorithms such as k-Nearest Neighbor, Support Vector Machines (SVM), Naïve Bayes and Random Forest, researchers have reported accuracies as high as 96%. SVMs are particularly noteworthy for their proficiency at identifying diseased tomato and cucumber leaves, which shows the potential of these technologies in plant pathology and disease management.

Complex image backgrounds are addressed with segmentation techniques that isolate the leaves, allowing more accurate feature extraction and, subsequently, classification, for which the Moving Center Hypersphere (MCH) approach is used.

Five basic features are extracted: the longest distance between any two points on the leaf border, the length of the main vein, the widest distance across the leaf, the leaf area and the leaf perimeter. From these, twelve additional features are derived through mathematical operations: the smoothness of the leaf image, the aspect ratio, the form factor (how much the leaf shape differs from a circle), rectangularity, the narrow factor, the ratio of the perimeter to the longest distance, the ratio of the perimeter to the sum of the main vein length and the widest distance, and five structural features obtained by applying morphological opening to the grayscale image.
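Six of the derived features are simple ratios of the five basic measurements. The sketch below uses the definitions commonly found in the leaf-recognition literature; we assume, but have not confirmed, that the cited work uses exactly these formulas. Smoothness and the five structural features need image processing and are left out, and the class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class BasicLeafFeatures:
    diameter: float   # longest distance between any two points on the border
    length: float     # length of the main vein
    width: float      # widest distance of the leaf
    area: float
    perimeter: float


def derived_features(f: BasicLeafFeatures) -> dict[str, float]:
    """Ratio features computed from the five basic measurements."""
    return {
        "aspect_ratio": f.length / f.width,
        # 4*pi*A / P^2 equals 1 for a circle and is smaller for any other shape.
        "form_factor": 4 * math.pi * f.area / f.perimeter ** 2,
        "rectangularity": f.length * f.width / f.area,
        "narrow_factor": f.diameter / f.length,
        "perimeter_to_diameter": f.perimeter / f.diameter,
        "perimeter_to_length_plus_width": f.perimeter / (f.length + f.width),
    }


if __name__ == "__main__":
    # Sanity check on a circular "leaf" of radius 1.
    circle = BasicLeafFeatures(diameter=2.0, length=2.0, width=2.0,
                               area=math.pi, perimeter=2 * math.pi)
    for name, value in derived_features(circle).items():
        print(f"{name}: {value:.3f}")
```

For a perfect circle the form factor is 1, and it drops as the leaf outline becomes more elongated or jagged, which is what lets it describe how much a leaf differs from a circle.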

The classification algorithms employed after feature extraction has been completed are:

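• Support Vector Machines: an SVM separates two classes with the boundary that has the largest margin between them. In the simplest case this boundary is a line in a two-dimensional space with linearly separable data points, but by using kernel functions SVMs can also handle higher-dimensional spaces and data that are not linearly separable.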

• K-Nearest Neighbor classifies unknown samples according to their nearest neighbors. For classifying an unknown sample, k closest training samples are determined. The most frequent class among these k neighbors is chosen as the class of this sample.

• Naïve Bayes classifiers are statistical models that predict the probability that an unknown sample belongs to a specific class. As their name suggests, they are based on Bayes’ theorem, combined with the “naïve” assumption that the features are independent of each other given the class.

• Random Forest aggregates the predictions of multiple classification trees, where each tree in the forest is grown using a bootstrap sample of the training data. At prediction time, each tree votes for a class, and the forest selects the class with the most votes.
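Of the four classifiers, k-Nearest Neighbor is the easiest to write out in full. The sketch below is a plain-Python version using Euclidean distance and a majority vote; the two-dimensional feature vectors (for instance aspect ratio and form factor) and the labels are made-up examples, not data from the study.

```python
import math
from collections import Counter
from typing import Sequence

Sample = tuple[Sequence[float], str]  # (feature vector, class label)


def knn_predict(train: list[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label `query` with the most frequent class among its k nearest
    training samples (Euclidean distance)."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    nearest = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # On a tie, most_common keeps first-seen order, so the closer sample's class wins.
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    train = [((1.0, 1.0), "healthy"), ((1.2, 0.9), "healthy"),
             ((0.9, 1.1), "healthy"), ((5.0, 5.0), "diseased"),
             ((5.2, 4.8), "diseased"), ((4.9, 5.1), "diseased")]
    print(knn_predict(train, (1.1, 1.0)))  # healthy
```

Features should normally be scaled to comparable ranges first, since the Euclidean distance is otherwise dominated by the feature with the largest values.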

To test each of these classification algorithms, the researchers used two sampling approaches. In the first, a random sampling approach, 80% of the images were used for training and the remaining 20% for testing. In the second, 10-fold cross-validation, the dataset was partitioned into 10 equal-sized subsamples; one subsample was used for testing while the remaining 9 were used as training data. This process was repeated 10 times with a different test subsample each time, and the final result is the average across all 10 runs.
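Both evaluation schemes can be sketched as follows. `train_and_score` is a hypothetical callback that trains any of the classifiers above on the training set and returns its accuracy on the test set; the fold assignment (`shuffled[i::k]`) is one simple way to get folds whose sizes differ by at most one sample.

```python
import random
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def holdout_split(samples: Sequence[T], train_fraction: float = 0.8,
                  seed: int = 0) -> tuple[list[T], list[T]]:
    """Random split into a training set and a test set (80 % / 20 % by default)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def k_fold_score(samples: Sequence[T],
                 train_and_score: Callable[[list[T], list[T]], float],
                 k: int = 10, seed: int = 0) -> float:
    """k-fold cross-validation: every fold is the test set exactly once,
    the remaining k - 1 folds form the training set, and the k scores
    are averaged."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    scores = []
    for i, test in enumerate(folds):
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        scores.append(train_and_score(train, test))
    return sum(scores) / k


if __name__ == "__main__":
    train, test = holdout_split(list(range(100)))
    print(len(train), len(test))  # 80 20
```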

Plant identification accuracy is at its highest when both shape and color features are assessed side by side. However, leaf colors change with the seasons, which may reduce the accuracy of classification attempts. Consequently, textural features should be incorporated into future classification technologies such that the algorithms will be able to recognize leaves independently of seasonal changes.

Classification Tensorflow

k-nearest neighbor

Bayesian classifiers

UI Design Guiding Principles: Ania

When designing an application, the user is the most important factor. One of the simplest ways to take the general user into account in the design process is to consider the psychological principles that affect how users experience the app and its functionalities.

We therefore outline important factors based on psychological findings and how they translate into UI design.

1. Patterns of perception[34][35]

The principles of Gestalt describe how people tend to organize what they perceive. Knowing how the average user perceives the content of a screen helps in designing a UI that is easier to navigate and more visually appealing.

These laws state the following:

· Symmetry is seen as more organized.

· The mind tends to fill in incomplete information or objects. This matters most for logos, but it can be taken into account whenever shapes are used in the design.

· Simple designs are good. Simplicity aids in faster understanding of information.

· Elements can be grouped together through a visual connection (same color, same shape, moving in the same direction, close position) or through an arrangement that creates continuity. People tend to group elements that appear to form a line, a curve or basic, easily recognisable shapes.

· Similar elements are better grouped when the aim is to attract the attention of the user to them.

· Each screen should have a focal point.

· Different colors can be used to define areas that are perceived as separate objects.

· Complex objects tend to be interpreted in the simplest possible way.

2. Information processing

According to the study by George A. Miller, people can on average remember 7 ± 2 objects at a time. It was also found that certain memorization techniques can push this limit further, but this does not invalidate Miller’s finding for UI design, which should be as simple to interact with as possible[36]. This suggests that each screen of an app should contain only about 7 ± 2 elements, so users have less to remember and an easier time visually navigating the screen.

When it comes to text and images, the “Left-to-Right” theory suggests that the most important information is best placed at the top left of the screen. However, this might not be fully accurate for UI design, as a limited study observed that people tend to look at the center of a screen first[37]. Furthermore, each of the two visual fields is processed by the opposite brain hemisphere: the left visual field by the right hemisphere, which is better at interpreting images, and the right visual field by the left hemisphere, which is better at processing text. Placing images on the left and text on the right therefore makes it easier for users to process the information[38].

Further limits on how people process information are tied to the use of their motor systems. Users should not have to multitask within one motor system or across several motor systems at once. Introducing tasks for one motor system at a time makes it easier for users to process the information and to pay attention to the task. Screens should therefore not present several types of information at the same time; in particular, important text should not be placed over a complex background image[39].

3. Use of Colors

Colors can be used to improve the visual appeal of an app, but color selection is a complex subject. Color schemes can be chosen based on the context in which they will be used, different color theories and the desired effect on the user. In any case, colors should be used consistently across the app and should not be overused[40].

Regarding accessibility, the limited color perception of color-blind people should be considered when choosing contrasting colors to highlight different elements[41]. Bright and vivid colors, or a mix of bright and dark colors, can also tire the eye muscles; this should be avoided so that the app is comfortable to use for longer periods of time[42].

4. Feedback

Feedback is quite important for users to feel that their actions have an effect[43]. It can also affect the ease with which users can remember how to use an app. Feedback should be immediate, consistent, clear, concise and it should fit the context of their actions[44].

5. Navigation and guiding users

It is important to make clear to users how they should begin interacting with an interface, which can be done by making the starting element stand out through a different color, size, hue, shape, orientation, etc.[45]. Users can be guided further through a visual hierarchy, created by giving elements visual priority so that they stand out compared to other elements: the higher an element's priority, the more it should stand out. This guides the users' eyes in the order in which the UI designer intends the screen to be navigated, giving a logical sequence of tasks that users tend to follow subconsciously.

Important elements should be made to stand out in general due to the phenomenon of inattentional blindness. The Invisible Gorilla Experiment by Simons and Chabris in 1999 showed that people will miss unexpected elements when they are focused on a different task[46].

Another aspect that helps users navigate an interface is logical consistency. Responses to user actions should be consistent, and any departure from an established pattern should be predictable. The responses should also reflect the content of the interface[47].

A further important aspect that can ease navigation for users is providing them with a clear reversal or exit option. Such options give a sense of confidence to users and make navigating the app less stressful once they know that they can opt out if they make a mistake or change their mind about an action[48].

6. Efficiency

Hick’s law states that the more options are available, the longer it takes to decide. For UI design, this implies that menus and navigation systems should be simplified: either they should focus on a few items, or elements should be labeled well and similar elements grouped together. A visual hierarchy is another way to reduce the number of options the user has to consider[49].

Fitts’s law relates the time it takes to reach a target to the target's size and distance. In UI design, the law implies that bigger buttons are generally faster to use and better suited for the most frequently used elements. It also supports keeping the steps of a task on the same screen to make navigation more efficient[50].
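Both laws have standard quantitative forms: Hick's law is often written T = a + b·log2(n + 1) for n equally likely choices, and Fitts's law (in the Shannon formulation) MT = a + b·log2(D/W + 1) for a target of width W at distance D. The constants a and b must be measured for a given device and user group; the values in the sketch are hypothetical and only serve to compare designs.

```python
import math


def hick_decision_time(n_choices: int, a: float = 0.0, b: float = 0.2) -> float:
    """Hick's law: T = a + b * log2(n + 1), in seconds."""
    return a + b * math.log2(n_choices + 1)


def fitts_movement_time(distance: float, width: float,
                        a: float = 0.1, b: float = 0.15) -> float:
    """Fitts's law (Shannon formulation): MT = a + b * log2(D / W + 1).

    distance and width must use the same unit (e.g. pixels).
    """
    return a + b * math.log2(distance / width + 1)


if __name__ == "__main__":
    # A menu with 3 options versus 7 options (hypothetical constants).
    print(hick_decision_time(3), hick_decision_time(7))  # about 0.4 s vs 0.6 s
    # The same distance to a large button versus a small one.
    print(fitts_movement_time(300, 100), fitts_movement_time(300, 20))  # about 0.4 s vs 0.7 s
```

The comparison, not the absolute numbers, is what matters for design: more options and smaller, more distant targets both increase the predicted time.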

Application Prototype

Work Distribution

| Week | Task | Deliverable to meeting |
|---|---|---|
| 2 | Define Deliverables and brainstorm new idea | Completed wiki with new idea |
| 3 | Write survey questions about garden maintenance robot, research methods to maneuver in an area, specifically in a garden. | Wiki |
| 4 | Complete interview questions, complete research maneuvering in gardens and begin looking into mapping techniques | Wiki |
| 5 | Analyze survey results, research mapping techniques, sensors that might be required for this to be effective and complete research of AI classification models. | Wiki |
| 6 | Decide on the final requirements for the sensors and mapping of robot. | Wiki |
| 7 | Update wiki to document our progress and results | Wiki |
| 8 | Work on and finalize presentation | Final Presentation |

Hardware and NetLogo Implementation: Raul S.

| Week | Task | Deliverable to meeting |

|---|---|---|

| 2 | Literature Review and State of the Art | Wiki |

| 3 | Write survey questions about the garden maintenance robot; research what hardware, equipment and materials the robot would need. | Wiki |

| 4 | Send survey questions, complete research about hardware, equipment and materials. | Wiki |

| 5 | Analyse survey results. | Wiki |

| 6 | Decide on a final set of requirements for the hardware of the robot. | Wiki |

| 7 | Update wiki to document our progress and results | Wiki |

| 8 | Work on and finalize presentation | Final Presentation |

Research into AI identifying plant diseases and infestations: Briana.

| Week | Task | Deliverable to meeting |

|---|---|---|

| 2 | Research on state-of-the-art AI. | Wiki |

| 3 | Research on plant diseases and infestations | Google Doc |

| 4 | Research on best ways to detect diseases and infestations (where to point the camera, what other sensors to use) | Google Doc |

| 5 | Research on AI state recognition (healthy/unhealthy) | Google Doc |

| 6 | Research on limitations of AI when it comes to recognising different states of a plant (healthy/unhealthy) | Google Doc |

| 7 | Conduct interviews with an AI specialist and specify which AI training method can be used for our project. | Google Doc |

| 8 | Work on and finalize presentation. | Presentation |

Research into AI identifying plant diseases and infestations: Rareş.

| Week | Task | Deliverable to meeting |

|---|---|---|

| 2 | Research on state-of-the-art AI. | Wiki |

| 3 | Research on plant diseases and infestations | Google Doc |

| 4 | Research on best ways to detect diseases and infestations (where to point the camera, what other sensors to use) | Google Doc |

| 5 | Research on AI state recognition (healthy/unhealthy) | Google Doc |

| 6 | Research on limitations of AI when it comes to recognising different states of a plant (healthy/unhealthy) | Google Doc |

| 7 | Conduct interviews with an AI specialist. | Google Doc |

| 8 | Work on and finalize presentation | Presentation |

Interactive UI design and implementation: Raul H.

| Week | Task | Deliverable to meeting |

|---|---|---|

| 2 | Literature Review and State of the Art | Wiki |

| 3 | Write interview questions in order to find out what requirements users expect from the application, and research the Android application development process in Android Studio. | Wiki |

| 4 | Based on the interviews, compile a list of the requirements and create UI designs based on these requirements. | Wiki |

| 5 | Start implementing the UI designs into a functional application in Android Studio. | |

| 6 | Finish implementing the UI designs into a functional application in Android Studio. | Completed demo application |

| 7 | | |

| 8 | Work on and finalize presentation | |

Interactive UI design and implementation: Ania

| Week | Task | Deliverable to meeting |

|---|---|---|

| 2 | Literature Review and State of the Art of Garden Robots and Plant Recognition Software | Wiki |

| 3 | Write interview questions in order to find out what requirements users expect from the application, start creating UI design based on current concept ideas. | Wiki |

| 4 | Based on the interviews, compile a list of the requirements and create UI designs based on these requirements. | Wiki |

| 5 | Start implementing the UI designs into a functional application in Android Studio. | |

| 6 | Finish implementing the UI designs into a functional application in Android Studio. | Completed demo application |

| 7 | Testing and final changes to UI design. | |

| 8 | Work on and finalize presentation | |

Individual effort

| Week | Group member | Break-down of hours | Total hours spent |

|---|---|---|---|

| Week 1 | Patryk Stefanski | Attended kick-off (2h), Research into subject idea (2h), Meet with group to discuss ideas (2h), Reading literature (2h), Updating wiki (1h) | 9 |

| | Raul Sanchez Flores | Attended kick-off (2h) | 2 |

| | Briana Isaila | Attended kick-off (2h), Meet with group to discuss ideas (2h), Research state of the art (2h), Look into different ideas (2h) | 8 |

| | Raul Hernandez Lopez | | |

| | Ilie Rareş Alexandru | | |

| | Ania Barbulescu | Attended kick-off (2h), Reading literature (2h) | 4 |

| Week 2 | Patryk Stefanski | Meeting with tutors (0.5h), Researched and found contact person who maintains Dommel (1h), Brainstorming new project ideas (3h), Group meeting Thursday (1.5h), Created list of possible deliverables (1h), Group meeting to establish tasks (4.5h), Literature review and updated various parts of wiki (2h) | 13.5 |

| | Raul Sanchez Flores | Meeting with tutors (0.5h), Group meeting Thursday (1.5h) | 2 |

| | Briana Isaila | Meeting with tutors (0.5h), Group meeting Thursday (1.5h), Brainstorming new project ideas (2h), Updating wiki (2h), Group meeting to establish tasks (4.5h) | 10.5 |

| | Raul Hernandez Lopez | Meeting with tutors (0.5h), Group meeting Thursday (1.5h) | 2 |

| | Ilie Rareş Alexandru | Meeting with tutors (0.5h), Group meeting Thursday (1.5h) | 2 |

| | Ania Barbulescu | Group meeting Friday (4h), Research literature (2h), Updated wiki (2h) | 8 |

| Week 3 | Patryk Stefanski | Meeting with tutors (0.5h), Research to specify problem more concretely (3.5h), Discuss with potential users whether the robot idea would be useful to them (2h), Found literature confirming that the problem needs addressing (1h), Group meeting Tuesday (1h), Finished problem statement, objectives, users (2h), Research into maneuvering and reporting on findings (3h) | 13 |

| | Raul Sanchez Flores | | |

| | Briana Isaila | Meeting with tutors (0.5h), Research to specify problem more concretely (3.5h), Group meeting Tuesday (1h), Finding literature and a survey on our chosen problem and analysing different views and solutions (3.5h), Research into plant disease recognition (2.5h) | 11 |

| | Raul Hernandez Lopez | | |

| | Ilie Rareş Alexandru | | |

| | Ania Barbulescu | | |

| Week 4 | Patryk Stefanski | Meeting with group (1.5h), Research into maneuvering and fixing parts of wiki (2h), Describing robot operation on wiki (1h), Research into sensors and updating wiki (6h), Research into writing files in NetLogo and environment setup (2h), Prepare and organize interview (1h), Research mapping and watch videos about it (3h), Created references in APA (0.5h) | 17 |

| | Raul Sanchez Flores | | |

| | Briana Isaila | Meeting with group (1h), Research into state-of-the-art plant health recognition AI and the technology used (4h), Updated the problem statement and the objectives of our project (2h), Prototype UI for application design (3h), Research into imaging methods for accurate plant detection (4h) | 14 |

| | Raul Hernandez Lopez | | |

| | Ilie Rareş Alexandru | | |

| | Ania Barbulescu | | |

| Week 5 | Patryk Stefanski | Meeting with tutors (0.5h), Meeting with group (1.5h), Work on and set up NetLogo for a randomly moving robot (4h), Write interview questions (1h), Research into the interviewee's robots before interview (1h), Organize and conduct interview with grass-cutting robot user (1.5h) | 9.5 |

| | Raul Sanchez Flores | | |

| | Briana Isaila | | |

| | Raul Hernandez Lopez | | |

| | Ilie Rareş Alexandru | | |

| | Ania Barbulescu | | |

Literature Review

1. TrimBot2020: an outdoor robot for automatic gardening (https://www.researchgate.net/publication/324245899_TrimBot2020_an_outdoor_robot_for_automatic_gardening)

· The TrimBot2020 program aims to build a prototype of the world’s first outdoor robot for automatic bush trimming and rose pruning.

· State of the art: ‘green thumb’ robots used for automatic planting and harvesting.

· Gardens pose a variety of hurdles for autonomous systems by virtue of being dynamic environments: natural growth of plants and flowers, variable lighting conditions, as well as varying weather conditions all influence the appearance of objects in the environment.

· Additionally, the terrain is often uneven and contains areas that are difficult for a robot to navigate, such as those made of pebbles or woodchips.

· The design of the TrimBot2020 is based on the Bosch Indigo lawn mower, on which a Kinova robotic arm is then mounted. (It might, therefore, be worthwhile to research both of these technologies.)

· The robot’s vision system consists of five pairs of stereo cameras arranged such that they offer a 360° view of the environment. Additionally, each stereo pair consists of one RGB camera and one grayscale camera.

· The robot uses a Simultaneous Localization and Mapping (SLAM) system to move through the garden. The system is responsible for simultaneously estimating a 3D map of the garden in the form of a sparse point cloud and the position of the robot with respect to the resulting 3D map.

· For understanding the environment and operating the robotic arm, TrimBot2020 has developed algorithms for disparity computation from monocular images and from stereo images, based on convolutional neural networks, 3D plane labeling and trinocular matching with baseline recovery. An algorithm for optical flow estimation was also developed, based on a multi-stage CNN approach with iterative refinement of its own predictions.
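Disparity maps like the ones TrimBot2020 computes are useful because, for a rectified stereo pair, disparity converts directly into depth via Z = f · B / d (focal length f in pixels, baseline B between the two cameras, disparity d in pixels). The sketch below shows only that conversion step, not TrimBot2020's CNN-based disparity estimation; it assumes rectified images, and the focal length and baseline values are hypothetical.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float,
                         baseline_m: float) -> float:
    """Depth (metres) of a point seen by a rectified stereo pair: Z = f * B / d.

    A non-positive disparity means no valid match (or a point at infinity),
    so infinity is returned instead of dividing by zero.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 10 cm baseline.
print(round(depth_from_disparity(35, 700, 0.1), 2))  # 2.0  (a nearby bush)
print(round(depth_from_disparity(7, 700, 0.1), 2))   # 10.0 (the far end of the garden)
```

Because depth is inversely proportional to disparity, distant objects produce small disparities, so a one-pixel matching error shifts their estimated depth far more than it does for nearby plants.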

2. Robots in the Garden: Artificial Intelligence and Adaptive Landscapes (https://www.researchgate.net/publication/370949019_Robots_in_the_Garden_Artificial_Intelligence_and_Adaptive_Landscapes)

· FarmBot is a California-based firm that designs and markets open-source commercial gardening robots and develops web applications for users to interface with these robots.

· These robots employ interchangeable tool heads to rake soil, plant seeds, water plants, and weed. They are highly customizable: users can design and replace most parts to suit their individual needs. In addition, FarmBot’s code is open source, allowing users to customize it through an online web app.

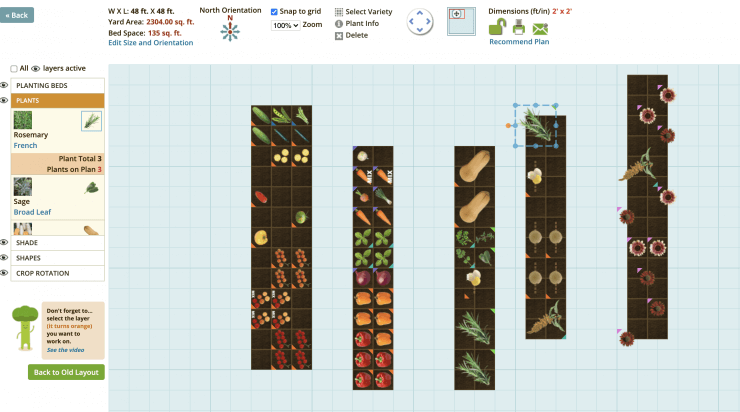

· Initially, the user describes the garden’s contents to a FarmBot as a simple placement of plants from the provided plant dictionary on a garden map, a two-dimensional grid visualized by the web app. FarmBot stores the location of each plant as a datapoint (x, y) on that map. Other emerging plants, if detected by the camera, are treated uniformly as weeds that should be managed by the robot.

· The robotic vision system employed by the Ecological Laboratory for Urban Agriculture consists of AI cameras that process images with OpenCV, an open-source computer vision and machine learning software library. This library provides machine learning algorithms, including pre-trained deep neural network modules that can be modified and used for specific tasks, such as measuring plant canopy coverage and plant height.
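The FarmBot rule described above (registered plants are stored as (x, y) points on the garden map, and other detected plants are treated as weeds) can be sketched as a nearest-neighbour check. The source does not say how a detection is matched to a registered plant, so the distance threshold `radius`, the function name and the example coordinates are assumptions for illustration; coordinates are in whatever unit the garden map uses.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def classify_detections(known_plants: Dict[str, Point],
                        detections: List[Point],
                        radius: float = 50.0) -> List[Tuple[Point, str]]:
    """Label each detected plant with the nearest registered plant within
    `radius` (hypothetical threshold), or 'weed' if none is close enough."""
    labelled = []
    for det in detections:
        nearest_name, nearest_dist = None, math.inf
        for name, pos in known_plants.items():
            d = math.dist(det, pos)  # Euclidean distance on the 2D map
            if d < nearest_dist:
                nearest_name, nearest_dist = name, d
        labelled.append((det, nearest_name if nearest_dist <= radius else "weed"))
    return labelled


garden_map = {"tomato": (100, 200), "basil": (300, 200)}
print(classify_detections(garden_map, [(110, 195), (220, 400)]))
# [((110, 195), 'tomato'), ((220, 400), 'weed')]
```

The threshold trades off two errors: too small and a slightly displaced crop is flagged as a weed, too large and a weed growing next to a crop is left alone.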

3. Indoor Robot Gardening: Design and Implementation (https://www.researchgate.net/publication/225485587_Indoor_robot_gardening_Design_and_implementation)


4. Building a Distributed Robot Garden (https://www.researchgate.net/publication/224090704_Building_a_Distributed_Robot_Garden)

5. A robotic irrigation system for urban gardening and agriculture (https://www.researchgate.net/publication/337580011_A_robotic_irrigation_system_for_urban_gardening_and_agriculture)

6. Design and Implementation of an Urban Farming Robot (https://www.researchgate.net/publication/358882608_Design_and_Implementation_of_an_Urban_Farming_Robot)

7. Small Gardening Robot with Decision-making Watering System (https://www.researchgate.net/publication/363730362_Small_gardening_robot_with_decision-making_watering_system)

8. A cognitive architecture for automatic gardening (https://www.researchgate.net/publication/316594452_A_cognitive_architecture_for_automatic_gardening)

9. Recent Advancements in Agriculture Robots: Benefits and Challenges (https://www.researchgate.net/publication/366795395_Recent_Advancements_in_Agriculture_Robots_Benefits_and_Challenges)

10. A Survey of Robot Lawn Mowers (https://www.researchgate.net/publication/235679799_A_Survey_of_Robot_Lawn_Mowers)

11. Distributed Gardening System Using Object Recognition and Visual Servoing (https://www.researchgate.net/publication/341788340_Distributed_Gardening_System_Using_Object_Recognition_and_Visual_Servoing)

12. A Plant Recognition Approach Using Shape and Color Features in Leaf Images (https://www.researchgate.net/publication/278716340_A_Plant_Recognition_Approach_Using_Shape_and_Color_Features_in_Leaf_Images)

13. A study on plant recognition using conventional image processing and deep learning approaches (https://www.researchgate.net/publication/330492923_A_study_on_plant_recognition_using_conventional_image_processing_and_deep_learning_approaches)

14. Plant Recognition from Leaf Image through Artificial Neural Network (https://www.researchgate.net/publication/258789208_Plant_Recognition_from_Leaf_Image_through_Artificial_Neural_Network)

15. Deep Learning for Plant Identification in Natural Environment (https://www.researchgate.net/publication/317127150_Deep_Learning_for_Plant_Identification_in_Natural_Environment)

16. Identification of Plant Species by Deep Learning and Providing as A Mobile Application (https://www.researchgate.net/publication/348008139_Identification_of_Plant_Species_by_Deep_Learning_and_Providing_as_A_Mobile_Application)

17. Path Finding Algorithms for Navigation (https://nl.mathworks.com/help/nav/ug/choose-path-planning-algorithms-for-navigation.html)

18. NetLogo Models (https://ccl.northwestern.edu/netlogo/models/)

19. Path Finding Algorithms (https://neo4j.com/developer/graph-data-science/path-finding-graph-algorithms/#:~:text=Path%20finding%20algorithms%20build%20on,number%20of%20hops%20or%20weight.)