PRE2022 3 Group5

Group members

| Name | Student id | Role |
|---|---|---|
| Vincent van Haaren | 1 | Human Interaction Specialist |
| Jelmer Lap | 1569570 | LIDAR & Environment mapping Specialist |
| Wouter Litjens | 1751808 | Chassis & Drivetrain Specialist |
| Boril Minkov | 1564889 | Data Processing Specialist |
| Jelmer Schuttert | 1480731 | Robotic Motion Tracking Specialist |
| Joaquim Zweers | 1734504 | Actuation and Locomotive Systems Specialist |

Project Idea

The project idea we settled on is designing a crawler robot that autonomously creates 3D maps of difficult-to-traverse environments, so that humans can plan routes through small, unknown spaces.

Project planning

| Week | Description |
|---|---|
| 1 | Group formation |
| 2 | Prototype design plans done; Bill of Materials created & components ordered |
| break | Carnaval Break |
| 3 | Monday: Split into sub-teams; work started on prototypes for LIDAR, Locomotion and Navigation |
| 4 | Thursday: Start of integration of all prototypes into robot demonstrator |
| 5 | Thursday: First iteration of robot prototype done [MILESTONE] |
| 6 | Buffer week - expected troubles with integration |
| 7 | Environment & User testing started [MILESTONE] |
| 8 | Iteration upon design based upon test results |
| 9 | Monday: Final prototype done [MILESTONE] & presentation |
| 10 | Examination |

State of the Art

Literature Research

| Paper Title | Reference | Reader |
|---|---|---|
| Modelling an accelerometer for robot position estimation | [1] | Jelmer S |
| An introduction to inertial navigation | [2] | Jelmer S |
| Position estimation for mobile robot using in-plane 3-axis IMU and active beacon | [3] | Jelmer S |
| Mapping and localization module in a mobile robot for insulating building crawl spaces | [4] | Jelmer L |
| A review of locomotion mechanisms of urban search and rescue robot | [5] | Joaquim |
| Variable Geometry Tracked Vehicle, description, model and behavior | [6] | Joaquim |
| Stepper motors: fundamentals, applications and design | [7] | Joaquim |
| Incremental Visual-Inertial 3D Mesh Generation with Structural Regularities | [8] | Jelmer L |
| Keyframe-Based Visual-Inertial SLAM Using Nonlinear Optimization | [9] | Jelmer L |
| Balancing the Budget: Feature Selection and Tracking for Multi-Camera Visual-Inertial Odometry | [10] | Jelmer L |
| Optical 3D laser measurement system for navigation of autonomous mobile robot | [11] | Boril |
| Design and basic experiments of a transformable wheel-track robot with self-adaptive mobile mechanism | [12] | Wouter |
| Rough terrain motion planning for actuated, Tracked robots | [13] | Wouter |
| Realization of a Modular Reconfigurable Robot for Rough Terrain | [14] | Wouter |
| A mobile robot based system for fully automated thermal 3D mapping | [15] | Boril |
| A review of 3D reconstruction techniques in civil engineering and their applications | [16] | Boril |
| Analysis and optimization of geometry of 3D printer part cooling fan duct | [17] | Wouter |
| 2D LiDAR and Camera Fusion in 3D Modeling of Indoor Environment | [18] | Boril |
| A Review of Multi-Sensor Fusion SLAM Systems Based on 3D LIDAR | [19] | Jelmer L |
| An information-based exploration strategy for environment mapping with mobile robots | [20] | Jelmer S |
| Mobile robot localization using landmarks | [21] | Jelmer S |

Modelling an accelerometer for robot position estimation

The paper discusses the need for high-precision models of location and rotation sensors in specific robot and imaging use-cases, specifically highlighting SLAM systems (Simultaneous Localization and Mapping systems).

It highlights sensors that we may also need: "In this system the orientation data rely on inertial sensors. Magnetometer, accelerometer and gyroscope placed on a single board are used to determine the actual rotation of an object."

It mentions that, in order to derive position data from acceleration, the signal has to be integrated twice, which tends to yield great inaccuracy.

Drawback: when using double integration, the robot needs to stop after a short time to re-calibrate, in order to limit error accumulation: “Double integration of an acceleration error of 0.1g would mean a position error of more than 350 m at the end of the test”.
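To get a feel for the quoted figure, the drift caused by a constant acceleration error can be worked out directly. The sketch below is our own illustration and is not taken from the paper: it assumes a constant bias, g ≈ 9.81 m/s², and hypothetical function names. The duration of the paper's test is not given in this summary, so the code only shows how quickly such an error grows.

```python
import math

G = 9.81  # standard gravity in m/s^2 (rounded; an assumption of this sketch)


def position_error(bias_g, seconds):
    """Closed-form position error (m) from double-integrating a constant bias."""
    return 0.5 * bias_g * G * seconds ** 2


def position_error_numeric(bias_g, seconds, dt=0.001):
    """Same quantity via step-by-step integration, as a robot would compute it."""
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(seconds / dt))):
        velocity += bias_g * G * dt  # first integration: acceleration -> velocity
        position += velocity * dt    # second integration: velocity -> position
    return position


def time_to_error(bias_g, error_m):
    """Seconds until a constant bias has grown into the given position error."""
    return math.sqrt(2.0 * error_m / (bias_g * G))


if __name__ == "__main__":
    print(position_error(0.1, 10.0))
    print(position_error_numeric(0.1, 10.0))
    print(time_to_error(0.1, 350.0))
```

Under these assumptions a 0.1 g bias grows into a 350 m error in roughly 27 seconds, because the error scales with the square of the elapsed time. This is why frequent re-calibration stops are needed.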

An issue in modelling the sensors is that rotation is measured via gravity, which is not influenced by, for example, yaw, and which becomes harder to isolate under linear acceleration. The paper models acceleration and rotation with a series of lengthy equations and matrices, and applies noise and other real-world modifiers to the generated data.

It notably uses Cartesian and homogeneous coordinates to separate and combine the different components of the final model, such as rotation and translation. These components are shown in matrix form and are derived from the specifications of real-world sensors, known and common effects, and mathematical derivations of the latter two.

The proposed model can be used to test code for our robot's position computations.

An introduction to inertial navigation

This paper (written as a report) is meant to be a guide to determining position and other navigation data from inertia-based sensors such as gyroscopes, accelerometers and IMUs in general.

It starts by explaining the inner workings of a general IMU, and gives an overview of an algorithm that determines position from the sensors' readings by integration, using pictograms to show what the intermediate values represent.

It then proceeds to discuss various types of gyroscopes, their ways of measuring rotation (such as light interference), and the resulting effects on the measurements, which are neatly summarized in equations and tables. It takes a similar approach for linear acceleration measurement devices.

In the latter half of the paper, concepts and methods relevant to processing the introduced signals are explained and, most importantly, it is discussed how to partially account for some of the errors of such sensors. It starts by explaining how to characterise noise using Allan variance, and shows how this noise affects the values from a gyroscope.

Next, the paper introduces the theory behind tracking orientation, velocity and position. It discusses how errors in earlier steps propagate through the process, resulting in the notorious accumulation of inaccuracy that plagues such systems.

Lastly, it shows how to simulate data from the sensors discussed earlier. Note, however, that the previous paper already discusses a more accurate and more recent algorithm (which builds on this paper).

Position estimation for mobile robot using in-plane 3-axis IMU and active beacon

The paper highlights two types of position determination: absolute (does not depend on the previous location) and relative (does depend on the previous location). It goes on to list advantages and disadvantages of several location determination systems. It then proposes a navigation system that mitigates as many of these flaws as possible.

The paper continues by describing the sensors used to construct the in-plane 3-axis IMU: an x/y accelerometer and a z-axis gyroscope.

Then, the active beacon system (ABS) is described. It consists of 4 beacons mounted to the ceiling, and 2 ultrasonic sensors attached to the robot. The technique essentially uses radio frequency triangulation to determine the absolute position of the robot. The last sensor described is an odometer, which needs no further explanation.

Then, the paper discusses the model used to represent the system in code. Most notably, the system is somewhat easier to understand, as the in-plane measurements mean that much of the complexity of the robot's position is restricted to 2 dimensions. The paper also discusses the filtering and processing techniques used, such as a Kalman filter to combat noise and drift. The final processing pipeline is immensely complex due to the inclusion of bounce, collision and beacon-failure handling.
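The idea of combining a relative estimate (odometry or IMU) with an absolute one (beacons) can be illustrated with a one-dimensional Kalman filter. This is a minimal sketch under our own assumptions, not the paper's pipeline: the state is a single position, the noise values `q` and `r` are made-up tuning parameters, and a lost beacon is represented by `z=None`.

```python
def kalman_step(x, p, u, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : previous position estimate and its variance
    u    : displacement reported by odometry/IMU since the last step
    z    : absolute position from the beacons, or None if the beacon is lost
    q, r : process and measurement noise variances (hypothetical tuning values)
    """
    # Predict: apply the relative motion; uncertainty grows by the process noise.
    x_pred = x + u
    p_pred = p + q
    if z is None:
        # No absolute fix available: keep dead-reckoning, variance keeps growing.
        return x_pred, p_pred
    # Update: blend prediction and measurement according to their variances.
    gain = p_pred / (p_pred + r)
    x_new = x_pred + gain * (z - x_pred)
    p_new = (1.0 - gain) * p_pred
    return x_new, p_new


if __name__ == "__main__":
    state = (0.0, 1.0)
    for measurement in (1.1, None, 3.2):
        state = kalman_step(state[0], state[1], u=1.0, z=measurement, q=0.1, r=0.5)
        print(state)
```

The variance `p` shrinks whenever a beacon measurement arrives and grows while the beacon is missing. This mirrors the distinction the paper draws between absolute and relative positioning.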

Lastly, the paper discusses the results of the tests on the accuracy of the system, which show a very accurate system, even when the beacon is lost.

Mapping and localization module in a mobile robot for insulating building crawl spaces

This paper describes a possible use case of the system we are trying to develop. According to studies referenced by the authors, the crawl spaces in many European buildings can be a key factor in heat loss in houses. A good solution would therefore be to insulate below the floor to increase the energy efficiency of these buildings. However, this is a daunting task, since it requires opening up the entire floor and applying rolls of insulation. The authors therefore propose a robotic vehicle that can autonomously drive around the voids between floors and spray on foam insulation. Human-operated forms of this product already exist, but the authors suggest that an autonomous vehicle can save time and costs. According to the authors, a big obstacle for simultaneous localization and mapping (SLAM) in underfloor environments is the presence of dust, sand, poor illumination and shadows, which makes the mapping very complex.

A proposed way to solve the complex mapping problem is to combine camera and laser vision to create accurate maps of the environment. The authors also describe the 3 reference frames of the robot: the robot frame, the laser frame and the camera frame. The laser provides a distance, and with known angles 3D points can be created, which can then be transformed into the robot frame. The paper also describes a way of mapping the colour data of the camera onto the points.
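The step from a laser range reading to a point in the robot frame can be sketched as follows. This is an illustration under our own assumptions, not the paper's calibration: the axis convention (x forward, y left, z up), the angle names and the example mounting offset are all hypothetical.

```python
import math


def laser_point(distance, pan, tilt):
    """Convert a range and two known angles (radians) to a 3D point in the laser frame."""
    x = distance * math.cos(tilt) * math.cos(pan)
    y = distance * math.cos(tilt) * math.sin(pan)
    z = distance * math.sin(tilt)
    return (x, y, z)


def make_transform(yaw, tx, ty, tz):
    """Homogeneous 4x4 transform: rotation about z by yaw, then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(transform, point):
    """Map a 3D point from the laser frame into the robot frame."""
    vector = (point[0], point[1], point[2], 1.0)
    return tuple(
        sum(transform[row][col] * vector[col] for col in range(4))
        for row in range(3)
    )


if __name__ == "__main__":
    # Hypothetical mounting: laser 0.1 m ahead of and 0.2 m above the robot origin.
    laser_to_robot = make_transform(0.0, 0.1, 0.0, 0.2)
    print(apply_transform(laser_to_robot, laser_point(2.0, 0.0, 0.0)))
```

Using homogeneous coordinates means rotation and translation are applied with a single matrix, so the laser-to-robot and camera-to-robot transforms can be chained by matrix multiplication.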

The authors continue by explaining how the point clouds generated from different locations can be fitted together into a single point cloud with an iterative closest point (ICP) algorithm. The point clouds generated by the laser are too dense for the ICP algorithm to perform well. Therefore the algorithm is divided into 3 steps: point selection, registration and validation. During point selection the number of points is drastically reduced by downsampling and by removing the floor and ceiling. Registration is done by running an existing ICP algorithm on different rotations of the environment. This ICP algorithm returns a transformation matrix that relates the two poses, and the one that maximizes an optimization function is considered to be the best. The validation step checks whether the proposed alignment of the clouds is good enough. Finally, the pose is calculated depending on the results of the previous 3 steps.
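The point selection step can be sketched as a height filter followed by voxel downsampling. This is a simplified stand-in for the paper's method: it assumes the floor and ceiling heights are already known and keeps the first point seen in each voxel. The function name and parameters are hypothetical.

```python
import math


def select_points(points, voxel, z_min, z_max):
    """Drop floor/ceiling points and keep at most one point per voxel.

    points : iterable of (x, y, z) tuples in metres
    voxel  : edge length of the cubic downsampling cell in metres
    z_min, z_max : heights below/above which points are treated as floor/ceiling
    """
    kept = {}
    for x, y, z in points:
        if not (z_min < z < z_max):
            continue  # floor or ceiling: carries little information for alignment
        key = (
            math.floor(x / voxel),
            math.floor(y / voxel),
            math.floor(z / voxel),
        )
        kept.setdefault(key, (x, y, z))  # first point in a voxel represents it
    return list(kept.values())


if __name__ == "__main__":
    cloud = [
        (0.01, 0.01, 0.32),
        (0.02, 0.02, 0.33),  # same voxel as the first point
        (1.00, 1.00, 0.32),
        (0.50, 0.50, 0.00),  # floor
        (0.50, 0.50, 0.60),  # ceiling
    ]
    print(select_points(cloud, voxel=0.1, z_min=0.05, z_max=0.55))
```

Reducing the cloud this way keeps the ICP cost manageable, since each ICP iteration has to find the nearest neighbour of every remaining point.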

Lastly, the paper discusses the results of some experiments, which show very promising results in building a map of the environment.

A review of locomotion mechanisms of urban search and rescue robot

This paper investigates and compiles different locomotion methods for urban search and rescue robots. These include:

Tracks

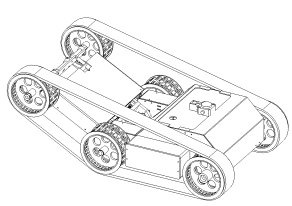

A subgroup of track-based robots are variable geometry tracked (VGT) vehicles. These robots are able to change the shape of the tracks to anything from flat to triangle-shaped to bent tracks. This is useful for traversing irregular terrain. Some VGT vehicles which use a single pair of tracks are able to loosen the tension on the track to allow it to adjust its morphology to the terrain (e.g. allow the track to completely cover a half-sphere surface). An example of such a vehicle can be seen below.

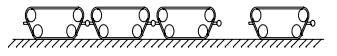

There also exist track-based robots which make use of multiple tracks on each side. This is a very robust system, which makes use of its smaller ‘flipper’ to get over higher obstacles. Such an example is seen in the figure below.

Wheels

The paper also describes multiple wheel-based systems. One of these is a hybrid of wheels and legs that works somewhat like a human-pulled rickshaw. This system is complicated, however, since it needs to continuously map its environment and adjust its actions accordingly.

Furthermore the paper details a wheel-based robot capable of directly grasping a human arm and carrying it to safety.

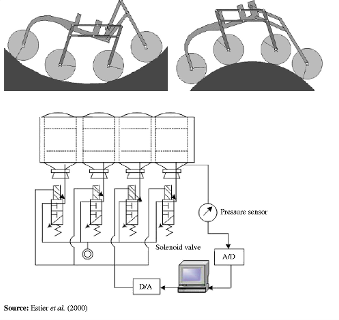

A vehicle using a rover-like configuration as shown below is also discussed. The front wheel makes use of a spring to ensure contact and the middle wheels are mounted on bogies to allow it to passively adhere to the surface. This kind of setup could traverse obstacles as large as 2 times its wheel diameter.

Gap creeping robots

I took the liberty of skipping this since it was mainly focussed on robots purely able to move through pipes, vents etc. which is not applicable for our purposes.

Serpentine robots

The first robot explored is a mechanical arm with multiple degrees of freedom, which can hold small objects at its tip and also has a small camera attached there. Being a mechanical arm, it is not truly capable of locomotion, but it is still useful in rescue work, where the environment is fragile and sometimes cramped and a small cross-section helps. The robot is controlled by wires that run through the body and are actuated at its base.

Leg based systems

The paper describes a few leg-based designs. The first of these was created for rescue work after the Chernobyl disaster. This robot-like design spans almost 1 metre and is able to climb vertically using the suction cups on its 8 legs. While doing so, it is able to carry up to 25 kg of load. It can also handle transitions between horizontal and vertical terrain. Furthermore, it is able to traverse concave surfaces with a minimum radius of 0.5 metres.

Conclusion

This paper concludes by evaluating all prior robots and their real-life application in search and rescue work. This evaluation is not directly relevant for autonomous crawl space scanning, although it may indicate why none of these robots would be suitable for search and rescue work: the environment is unstructured and their autonomous capabilities are limited.

Variable Geometry Tracked Vehicle, description, model and behavior

This paper presents a prototype of an unmanned ground variable geometry tracked vehicle (VGTV) called B2P2. The remote-controlled vehicle was designed to venture through unstructured environments with rough terrain. The robot is able to adapt its shape to increase its clearing capabilities. Unlike in traditional tracked vehicles, the tracks are actively controlled, which allows the robot to clear some obstacles more easily.

The paper starts off with stating that robots capable of traversing dangerous environments are useful. Particularly ones which are able to clear a wide variety of obstacles. It states that to pass through small passages a modest form factor would be preferable. B2P2 is a tracked vehicle making use of an actuated chassis as seen in a prior image.

The paper states that the localization of the centre of gravity is useful for overcoming obstacles. However, since the shape of the robot isn’t fixed, a model of the robot is a necessity to find it as a function of its actuators. Furthermore the paper explains how the robot geometry is controlled which consists of the angle at the middle and the tension-keeping actuator between the middle and last axes. These are both explained using a closed-loop control diagram.

Towards the end of the paper, multiple obstacles and the associated clearing strategies are discussed. I would suggest skimming through the paper to view these, as they rely on multiple images. In brief, they discuss how to clear a curb, a staircase and a bumper. The takeaway is that being able to un-tension the track gives the robot more control over its centre of gravity and increases friction on protruding ground elements (avoiding the typical seesaw-like motion associated with more rigid track-based vehicles).

In my own opinion these obstacles highlight 2 problems:

- The desired tension on the tracks is extremely situationally dependent. There are 3 situations in which it could be desirable to lower the tension, first is if it allows the robot to avoid flipping over by controlling its centre of gravity (seen in the curb example). Secondly it could allow the robot to more smoothly traverse pointy obstacles (e.g. stairs such as shown in the example). Thirdly, having less tension in the tracks could allow the robot to increase traction by increasing its contact area with the ground. This context-dependent tension requirement to me makes it seem that fully autonomous control is a complex problem which most likely falls outside of the scope of our application and this course.

- The second problem is that releasing tension could allow the tracks to derail. This problem however could be partially remedied by adding some guide rails/wheels on the front and back. This would confine the problem to only the middle wheels.

The last thing I would want to note is that if the sensor to map the room is attached to the second axis, it would be possible to alter the sensor’s altitude to create different viewpoints.

Stepper motors: fundamentals, applications and design

This book goes over what stepper motors are, variations of stepper motors as well as their make-up. Furthermore it goes in-depth about how they are controlled.

Incremental Visual-Inertial 3D Mesh Generation with Structural Regularities

According to the authors, advances in visual-inertial odometry (VIO), the process of determining the pose and velocity (state) of an agent using the input of cameras and inertial sensors, have opened up a range of applications such as augmented reality (AR) and drone navigation. Most VIO systems use point clouds, and to provide real-time estimates of the agent’s state they create sparse maps of the surroundings using power-hungry GPU operations. In the paper the authors propose a method to incrementally create a 3D mesh within the VIO optimization while bounding memory and computational power.

The authors' approach is to create a 2D Delaunay triangulation from tracked keypoints and then project it into 3D. This projection can have issues where points are close in 2D but not in 3D, which is solved with geometric filters. Some algorithms update the mesh for every frame, but the authors maintain a mesh over multiple frames to reduce computational complexity, capture more of the scene and capture structural regularities. Using the triangular faces of the mesh, they are able to extract geometry non-iteratively.

In the next part of the paper they discuss how to solve the optimization problem derived from the previously mentioned specifications.

Finally, the authors share some benchmarking results on the EuRoC dataset. These are promising: the method performs best in environments with regularities such as walls and floors. The pipeline proposed in this paper provides increased accuracy at the cost of some calculation time.

Keyframe-Based Visual-Inertial SLAM Using Nonlinear Optimization

In the robotics community, visual and inertial cues have long been used together with filtering. However, filtering requires linearity, while non-linear optimization for visual SLAM increases quality and performance and reduces computational complexity.

The contributions the authors claim are constructing a pose graph without expressing global pose uncertainty, providing a fully probabilistic derivation of the IMU error terms, and developing both hardware and software for accurate real-time SLAM.

The paper describes in great detail how the optimization objectives were reached and how the non-linear SLAM can be integrated with the IMU using a chi-square test instead of a RANSAC computation.

Finally, they show results of a test with their prototype, which shows that tightly integrating the IMU with a visual SLAM system really improves performance and decreases the deviation from the ground truth to close to zero percent after 90 m of distance travelled.

Balancing the Budget: Feature Selection and Tracking for Multi-Camera Visual-Inertial Odometry

The authors of this paper propose an algorithm that fuses feature tracks from any number of cameras, along with IMU measurements, into a single optimization process. Their contributions further include feature tracking across cameras with overlapping fields of view, a subroutine that selects the best landmarks for optimization to reduce computation time, and results from extensive testing.

First the authors give the optimization objective, after which they give the factor graph formulation with the residuals and covariances of the IMU and visual factors. Then they explain how they approach cross-camera feature tracking. This is done by projecting the feature location from one camera to the other using either stereo camera depth or IMU estimation. The projected location is then refined by matching it to the closest image feature in the target camera using Euclidean distance. After this, feature selection is explained: a Jacobian matrix is computed and a submatrix is found that best preserves the spectral distribution.

Finally, experimental results show that their system is closer to the ground truth than other similar systems.

Optical 3D laser measurement system for navigation of autonomous mobile robot

This paper presents an autonomous mobile robot which, using a 3D laser navigation system, can detect and avoid obstacles on its path to a goal. The paper starts by describing the navigation system, TVS, in great detail. The system uses a rotatable laser and a scanning aperture to form laser light triangles from the light reflected by the obstacle. Using this method the authors were able to obtain the information necessary to calculate the 3D coordinates. For the robot base, the authors used the Pioneer 3-AT, a four-wheel, four-motor skid-steer robotics platform.

After this the authors go in depth on how the robot avoids obstacles. Using optical encoders on the wheels and a 3-axis accelerometer, the robot keeps track of its travelled distance and orientation. Using IR sensors the robot can detect obstacles that are a certain distance in front of it, after which it performs a TVS scan to avoid the obstacle. The trajectory the robot follows to avoid the obstacle is calculated using 50 points in the space in front of it, which are used to form a curve that the robot then follows. Thus, after the robot starts up, it calculates an initial trajectory to the goal location, and it recalculates the trajectory whenever it encounters an obstacle. Finally, the authors go over their results from simulating this robot in MATLAB and analyse its performance.

Design and basic experiments of a transformable wheel-track robot with self-adaptive mobile mechanism

This paper shows the design process of a fully mechanical track powered by one servo. The mechanism is quite complicated, but the design process contains a lot of information on designing for rough terrain. The paper focuses on efficiency so that fewer motors are needed, which is important because the robot can then be smaller.

The robot has an adaptive drive system: when moving over rough terrain, its mobile mechanism obtains constraint force information directly instead of through sensors. This information is used to move efficiently by changing the locomotion mode.

Basically, it is composed of a transformable track and a drive wheel mechanism. With the following modes:

After this they mainly show the mechanical details of how their design works, with formulas and CAD models.

They performed three experiments:

- Moving on the even and uneven road
- Overcoming an obstacle by track
- Overcoming obstacles with different heights by wheels

The robot was able to overcome obstacles of 120 mm height while itself having a maximum height of 146 mm.

Conclusion

Basic experiments have proven that the robot is adaptable over different terrain. The full mechanical design shows promise for our work and goals.

Rough terrain motion planning for actuated, Tracked robots

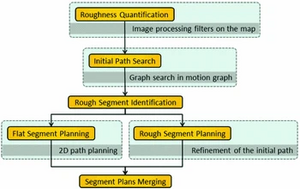

This paper proposes a two-step path planning approach for moving over rough terrain. First, the robot’s operating limits are considered to quickly find an initial path. Then, the segments that are identified as passing through rough areas are refined.

The terrain is perceived using a camera with image processing. An obstacle cannot be overcome if it is too high or if the inclination is too steep. The first path search uses a roughness quantification to prefer less risky routes and is mainly based on different weights. The more detailed planning is done by splitting paths into segments with flat spots and rough spots. After this, it combines environmental risk and system safety in a formula to give each segment a weight.

Further, the paper presents a roadmap planner (based on A*) and an RRT* planner.
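The first planning step, a search that prefers less risky routes, can be illustrated with A* on a grid whose cells carry a roughness value. This is a sketch under our own assumptions and not the paper's planner: the grid representation, the limit `max_roughness` and the weight `risk_weight` are hypothetical.

```python
import heapq


def plan_path(roughness, start, goal, max_roughness=0.8, risk_weight=5.0):
    """A* over a 2D grid; cells rougher than max_roughness are impassable.

    Entering a cell costs 1 (distance) plus risk_weight times its roughness,
    so the search trades path length against terrain risk.
    """
    rows, cols = len(roughness), len(roughness[0])

    def heuristic(cell):
        # Manhattan distance never overestimates, since every step costs >= 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0.0, start)]
    came_from = {start: None}
    cost = {start: 0.0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if g > cost[cell]:
            continue  # stale queue entry; a cheaper route was found already
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            rough = roughness[nxt[0]][nxt[1]]
            if rough > max_roughness:
                continue  # beyond the robot's operating limits
            new_g = g + 1.0 + risk_weight * rough
            if new_g < cost.get(nxt, float("inf")):
                cost[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(frontier, (new_g + heuristic(nxt), new_g, nxt))
    return None  # goal unreachable within the operating limits


if __name__ == "__main__":
    grid = [
        [0.0, 0.9, 0.0],
        [0.0, 0.5, 0.0],
        [0.0, 0.0, 0.0],
    ]
    print(plan_path(grid, (0, 0), (0, 2)))
```

In the example grid the direct route is blocked, and the planner takes the longer detour along the flat bottom row rather than the shorter route across the moderately rough centre cell. Lowering `risk_weight` would make the shorter route win.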

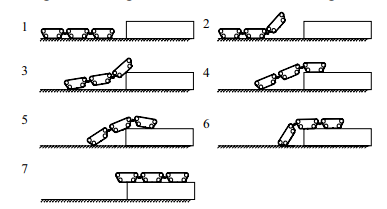

Realization of a Modular Reconfigurable Robot for Rough Terrain

This paper presents a robot for rough terrain that is built from multiple modular, reconfigurable robots: essentially a robot with multiple modules that can be disconnected from each other. Using different modules lets the robot perform different tasks better. It looks like this:

It can be used for steps like this:

The joint between the modules can move and rotate in practically all directions, which enables the robot to traverse many kinds of terrain.

A mobile robot based system for fully automated thermal 3D mapping

This paper showcases a fully autonomous robot which can create 3D thermal models of rooms. The authors begin by describing the components the robot uses, as well as how the 3D sensor (a Riegl VZ-400 laser scanner from terrestrial laser scanning) and the thermal camera (optris PI160) are mutually calibrated. Both are mounted on top of the robot, together with a Logitech QuickCam Pro 9000 webcam. After the 3D data is acquired, it is merged with the thermal and digital images via geometric camera calibration.

After that the authors explain the sensor placement. The paper's approach to the memory-intensive problem of 3D planning is to combine 2D and 3D planning: the robot starts off using only 2D measurements, and once it detects an enclosed space it switches to 3D NBV (next best view) planning. The 2D NBV algorithm starts with a blank map and explores based on the initial scan, where all inputs are range values parallel to the floor, distributed over the 360 degree field of view. A grid map is used to store the static and dynamic obstacle information. A polygonal representation of the environment stores the environment edges (walls, obstacles). This NBV process is composed of three consecutive steps:

- vectorization (obtaining line segments from the input range data)
- creation of the exploration polygon
- selection of the NBV sensor position, i.e. choosing the next goal

The room detection is grounded in the detection of closed spaces in the 2D map of the environment. Finally, the authors present the results of their experiments with the robot, showing 2D and 3D thermal maps of building floors. The 3D reconstruction is done using the Marching Cubes algorithm.

A review of 3D reconstruction techniques in civil engineering and their applications

This paper presents and reviews techniques to create 3D reconstructions of objects from the outputs of data collection equipment. First the authors researched the equipment currently most used for acquiring 3D data: laser scanners (LiDAR), monocular and binocular cameras, and video cameras, which is also the equipment that the paper focuses on. From this they classify camera-based 3D reconstruction into two categories: point-based and line-based. Furthermore, 3D reconstruction techniques are divided into two steps in the paper: generating point clouds and processing those point clouds.

For generating point clouds from monocular images, the steps are:

- Feature extraction: obtaining feature points, which reflect the initial structure of the scene. The algorithms used for this are feature point detectors and feature point descriptors.
- Feature matching: matching the feature points of each image pair.
- Camera motion estimation: finding the camera parameters of each image.
- Sparse 3D reconstruction: computing the 3D location of points from the feature points and camera parameters, generating a point cloud. This is done via the triangulation algorithm.
- Model parameters correction: correcting the camera parameters of each image. This step leads to precise 3D locations of the points in the point cloud.
- Absolute scale recovery: determining the absolute scale of the sparse point cloud by using dimensions or points of known absolute scale in the sparse point cloud.
- Dense 3D reconstruction: using all of the above to generate a dense point cloud.

For stereo images, the camera motion estimation and absolute scale recovery steps are skipped, and instead the camera needs to be calibrated before feature extraction. After this the authors explain how to generate point clouds from video images.

In the section on techniques for processing data, the authors present a couple of algorithms for data processing. For point cloud registration they use ICP and for mesh reconstruction PSR. For point cloud segmentation they divide the algorithms into two categories: feature-based segmentation (region growth and clustering, K-means clustering) and model-based segmentation (Hough transform and RANSAC). After this the authors go in depth on applications of 3D reconstruction in civil engineering, such as reconstructing construction sites and reconstructing pipelines of MEP systems. Finally, the authors go over the issues and challenges of 3D reconstruction.
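Of the segmentation methods mentioned, RANSAC is the easiest to illustrate. The sketch below fits a single line to 2D points as a stand-in for fitting planes in a point cloud. It is our own minimal example, not code from the paper, and the iteration count, distance threshold and seed are arbitrary assumptions.

```python
import math
import random


def ransac_line(points, iterations=200, threshold=0.05, seed=0):
    """Fit a line a*x + b*y + c = 0 (with a^2 + b^2 = 1) that tolerates outliers.

    Repeatedly picks two random points, builds the line through them and counts
    how many points lie within `threshold` of it; the best-supported line wins.
    """
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        a, b = y2 - y1, x1 - x2
        norm = math.hypot(a, b)
        if norm == 0.0:
            continue  # identical points do not define a line
        c = -(a * x1 + b * y1)
        inliers = [
            p for p in points
            if abs(a * p[0] + b * p[1] + c) / norm <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_line = (a / norm, b / norm, c / norm)
            best_inliers = inliers
    return best_line, best_inliers


if __name__ == "__main__":
    wall = [(float(i), float(i)) for i in range(10)]  # points on the line y = x
    clutter = [(0.0, 5.0), (3.0, -4.0), (8.0, 1.0)]   # outliers
    line, inliers = ransac_line(wall + clutter)
    print(line, len(inliers))
```

The points that end up as inliers form one segment of the cloud. Running the procedure again on the remaining points would extract the next structure, which is how model-based segmentation separates walls, floors and pipes.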

Analysis and optimization of geometry of 3D printer part cooling fan duct

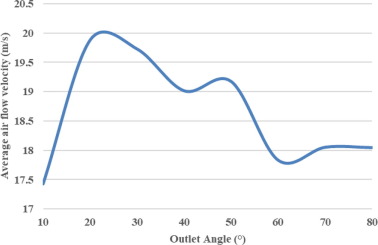

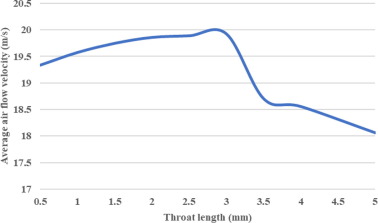

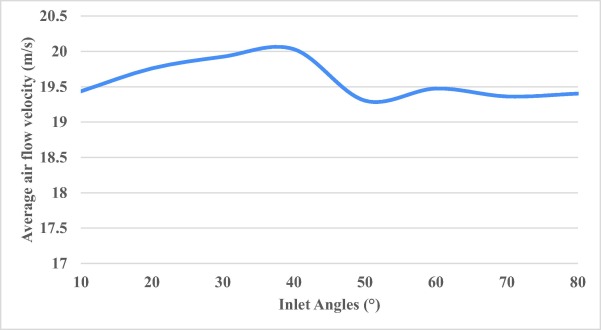

This paper researches fan ducts for 3D printers and how to optimise them for maximum airflow. Of course we are not making a 3D printer, but the principles of airflow are mostly the same. The paper analyses the duct in terms of inlet angle, outlet angle and throat length, and concludes that the optimum is an inlet angle of 40 degrees, an outlet angle of 20 degrees and a throat length of 3 mm. The optimised duct gave 23% more airflow.

It is important to note that the fan used in this research was 27 mm, which seems feasible for a crawler that should be as small as possible.

The results were processed in ANSYS 2021 R1 (CFD). The outlet was optimised first, then the throat length and finally the inlet, because the outlet has the biggest impact according to their prior research.

2D LiDAR and Camera Fusion in 3D Modelling of Indoor Environment

This paper goes over how to effectively fuse data from multiple sensors in order to create a 3D model. An entry-level camera is used for colour and texture information, while a 2D LiDAR is used as the range sensor. To calibrate the correspondences between the camera and the LiDAR, a planar checkerboard pattern is used to extract corners from the camera image and from the intensity image of the 2D LiDAR. Thus the authors rely on 2D-2D correspondences. A pinhole camera model is applied to project 3D point clouds to 2D planes. RANSAC is used to estimate the point-to-point correspondence. Using transformation matrices, the authors match the colour images of the digital camera with the intensity images. By aligning 3D colour point clouds from different locations, the authors generate the 3D model of the environment.

Via a turret Widow X servo, the 2D LiDAR is moved in the vertical direction for a 180 degree horizontal field of view. The digital camera rotates in both vertical and horizontal directions to generate panoramas by stitching series of images. In the third paragraph the authors go over how they calibrated the two image sources. To determine the rigid transformation between the camera images and the 3D point cloud, a fiducial target is used, RANSAC is used to identify outliers during the calibration process, and a checkerboard with 7x9 squares is employed to find correspondences between the LiDAR and the camera. Finally, the authors go over their results.
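The pinhole camera model mentioned above maps a 3D point in the camera frame onto the image plane by dividing by its depth. A minimal sketch follows. The intrinsic parameters used in the example (focal lengths `fx`, `fy` and principal point `cx`, `cy`) are made-up values, not the calibration from the paper, and lens distortion is ignored.

```python
def project_point(point, fx, fy, cx, cy):
    """Project a 3D point (camera frame, z pointing forward) to pixel coordinates.

    Returns None for points at or behind the camera, which cannot be imaged.
    """
    x, y, z = point
    if z <= 0:
        return None
    u = fx * x / z + cx  # horizontal pixel coordinate
    v = fy * y / z + cy  # vertical pixel coordinate
    return (u, v)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 image.
    intrinsics = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    for p in [(0.0, 0.0, 2.0), (1.0, -0.5, 2.0), (0.0, 0.0, -1.0)]:
        print(p, "->", project_point(p, **intrinsics))
```

Once a LiDAR point has been transformed into the camera frame and projected this way, the colour of the pixel it lands on can be attached to the point, which is the basis of the coloured point cloud.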

A Review of Multi-Sensor Fusion SLAM Systems Based on 3D LIDAR

This paper reviews multiple SLAM systems whose main sensing component is a 3D LiDAR integrated with other sensors. LiDAR, camera and IMU are the three most used components, and each has its advantages and disadvantages. The paper discusses LiDAR-IMU coupled systems and Visual-LiDAR-IMU coupled systems, both tightly and loosely coupled.

Most loosely coupled systems are based on the original LOAM algorithm by J. Zhang et al. These systems are relatively recent, as the paper by Zhang dates from 2014, and there have been many advancements in this technology since. The LiDAR-IMU systems often use the IMU to increase the accuracy of the LiDAR measurements, and new developments involve speeding up the ICP algorithm used to combine point clouds, through clever tricks and/or GPU acceleration. The LiDAR-Visual-IMU systems use the complementary properties of LiDAR and cameras: LiDAR needs textured environments while vision sensors lack the ability to perceive depth, so the cameras are used for feature tracking and, together with the LiDAR data, allow for more accurate pose estimation.

In contrast to the speed and low computational complexity of loosely coupled systems, tightly coupled systems sacrifice some of this for greater accuracy. One of the main points of these systems is a derivation of the error term and pre-integration formula for the IMU, which can be used to increase the accuracy of the IMU measurements by estimating the IMU bias and noise. For LiDAR-IMU systems this derivation is used for removing distortion in LiDAR scans and for optimizing both measurements, and there are many different approaches to coupling the two devices to obtain greater accuracy and computation speed. The LiDAR-Visual-IMU systems use the strong correlation between images and point clouds to produce more accurate pose estimates.

The authors then compare performance on SLAM datasets, where most recent SLAM systems appear to estimate the pose very close to the ground truth, even over distances of several hundred meters.

An information-based exploration strategy for environment mapping with mobile robots

This paper proposes a mathematically oriented way of mapping environments. Based on relative entropy, the authors evaluate a mathematical way to produce a planar map of an environment, using a laser range finder to generate local point-based maps that are compiled to a global map of the environment. Notably the paper also discusses how to localize the robot in the produced global map.

The generated map is a continuous curve that represents the boundary between navigable spaces and obstacles. The curve is defined by a large set of control points which are obtained from the range finder. The proposed method involves the robot generating and moving to a set of observation points, at which it takes a 360 degree snapshot of the environment using the range finder, finding a set of points several specified degrees apart, with some distance from the sensor. The measured points form a local map, which is also characterised by the given uncertainty of the measurements. Each local map is then integrated into the global map (a combination of all local maps), which is then used to determine the next observation point and position of the robot in global space.
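As a rough illustration of how a local scan could be merged into a global map, the sketch below converts range readings taken at fixed angular steps into global coordinates using the robot's pose. This is a simplification under stated assumptions, not the paper's method: the paper fits a continuous boundary curve and tracks measurement uncertainty, whereas this sketch only collects points. The function name `scan_to_global` and the pose format `(x, y, heading)` are hypothetical.

```python
import math


def scan_to_global(pose, ranges, angle_step_deg):
    """Convert one 360-degree range scan into points in the global frame.

    pose: (x, y, heading in radians) of the robot at the observation point.
    ranges: distances measured at angles 0, step, 2*step, ... relative to the
            robot's heading; None marks a direction with no return.
    """
    x, y, heading = pose
    points = []
    for i, r in enumerate(ranges):
        if r is None:
            continue  # nothing was hit in this direction
        angle = heading + math.radians(i * angle_step_deg)
        points.append((x + r * math.cos(angle), y + r * math.sin(angle)))
    return points


if __name__ == "__main__":
    global_map = []
    # Two observation points, each contributing a local map to the global map.
    global_map.extend(scan_to_global((0.0, 0.0, 0.0), [1.0, 1.5, None, 2.0], 90.0))
    global_map.extend(scan_to_global((1.0, 0.0, math.pi / 2), [0.5, 0.5, 0.5, 0.5], 90.0))
    print(len(global_map), "points in the global map")
```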

The researchers go on to describe how the quality of the proposal is measured, namely by the distance traveled and the uncertainty of the map. The uncertainty is a function of the uncertainty in the robot's current position and the accuracy of the range finder. The robot has a pre-computed expected position of each point and a post-measurement position of each point, which are compared through relative entropy to compute the information gained for that point. This and similar equations for the robot's position data are used to select the optimal points for observing the environment. Lastly, the points measured at each observation point are combined into one map using the robot's position data.
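The role of relative entropy can be illustrated with a small sketch. It assumes, purely for illustration, that the expected (prior) and post-measurement (posterior) position of a point along one axis are each modelled as a 1D Gaussian; the paper's actual distributions and cost function may differ. The names `gaussian_relative_entropy` and `best_observation_point` are hypothetical.

```python
import math


def gaussian_relative_entropy(mu_post, sigma_post, mu_prior, sigma_prior):
    """Relative entropy (KL divergence) of the posterior from the prior, in nats.

    Both distributions are assumed to be 1D Gaussians (an assumption of this sketch).
    """
    return (math.log(sigma_prior / sigma_post)
            + (sigma_post ** 2 + (mu_post - mu_prior) ** 2) / (2 * sigma_prior ** 2)
            - 0.5)


def best_observation_point(candidates):
    """Pick the candidate whose points promise the largest total information gain.

    candidates: dict mapping a candidate name to a list of
                (mu_post, sigma_post, mu_prior, sigma_prior) tuples.
    """
    def total_gain(name):
        return sum(gaussian_relative_entropy(*p) for p in candidates[name])
    return max(candidates, key=total_gain)


if __name__ == "__main__":
    candidates = {
        "A": [(0.0, 0.9, 0.0, 1.0)],  # measurement barely reduces uncertainty
        "B": [(0.0, 0.2, 0.0, 1.0)],  # measurement reduces uncertainty a lot
    }
    print(best_observation_point(candidates))
```

In this toy setup candidate "B" is preferred because its measurement sharpens the estimate the most. A fuller version would also weigh the travel distance to each candidate, as the paper's quality measure does.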

Mobile Robot Localization Using Landmarks

The paper discusses a method to determine a robot's position using landmarks as reference points. This is a more absolute system than purely inertia-based localization. The paper assumes that the robot can identify landmarks and measure their position relative to each other. Like other papers, it motivates the approach by the error accumulation that relative methods suffer from.

It highlights the robot's capability to:

- Find landmarks
- Associate landmarks with points on a map
- Use this data to compute its position

It uses triangulation between 3 landmarks to find its position, with low error. The paper also discusses how to re-identify landmarks that were misjudged, using new data. The robot takes 2 images (using a reflective ball to create a 360-degree image) and solves the correspondence problem (identifying an object from 2 angles) to find its location. In the paper, the technique is tested in an office environment.

The paper discusses how to perform triangulation using an external coordinate system and the localisation of the robot. The vectors to the landmark are compared and using their angle and magnitude the position can be computed. Next, the paper discusses the same technique, adjusted for noisy data. The paper uses Least-Squares to derive an estimation that can be used, evaluating the robot's rotation relative to at least 2 landmarks. The paper then evaluates the expected distribution in angle-error and position on each axis, to correct for the noise, using the method described above.
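The idea of recovering position and rotation from vectors to known landmarks can be sketched as a small least-squares problem. Note that this is a swapped-in technique, not the paper's algorithm: it is a standard 2D rigid alignment that assumes the robot measures both range and bearing to each landmark, and it needs at least 2 landmarks. The function name `estimate_pose` and the input formats are hypothetical.

```python
import math


def estimate_pose(landmarks, measurements):
    """Least-squares estimate of the robot pose (x, y, heading).

    landmarks: known global positions [(x, y), ...].
    measurements: [(range, bearing), ...] to the same landmarks, with the
                  bearing in radians relative to the robot's heading.
    """
    if len(landmarks) < 2 or len(landmarks) != len(measurements):
        raise ValueError("need at least 2 landmarks with matching measurements")
    n = len(landmarks)
    # Landmark vectors as seen from the robot (robot frame).
    local = [(r * math.cos(b), r * math.sin(b)) for r, b in measurements]
    gx = sum(p[0] for p in landmarks) / n
    gy = sum(p[1] for p in landmarks) / n
    lx = sum(q[0] for q in local) / n
    ly = sum(q[1] for q in local) / n
    # The rotation that best aligns the centred local vectors with the global ones.
    sin_sum = 0.0
    cos_sum = 0.0
    for (px, py), (qx, qy) in zip(landmarks, local):
        px, py, qx, qy = px - gx, py - gy, qx - lx, qy - ly
        sin_sum += qx * py - qy * px
        cos_sum += qx * px + qy * py
    heading = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(heading), math.sin(heading)
    # The translation follows from matching the two centroids.
    x = gx - (c * lx - s * ly)
    y = gy - (s * lx + c * ly)
    return x, y, heading


if __name__ == "__main__":
    # Robot at (2, 1) facing +y sees landmark (2, 3) dead ahead, (0, 1) to its left.
    print(estimate_pose([(2.0, 3.0), (0.0, 1.0)], [(2.0, 0.0), (2.0, math.pi / 2)]))
```

With noisy measurements, adding more landmarks averages out the errors, which is the same motivation the paper gives for its least-squares formulation.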

Users

Problem statement

Crawl spaces are home to a number of possible dangers and problems for home inspectors. These include animals, toxins, debris, mold, live wiring or even simply height. [35] Robots are already being used to help inspect crawl spaces. However, there are still reasons why they are not yet fully adopted. Robots might get stuck on wires, pipes or ledges, or it might be difficult to control the robot remotely. Lastly, there is the argument that a human is still better equipped to do the inspection, as feel might tell more than a camera view. [36]

To eliminate the problem of control, the robot should be autonomous, capable of traversing the crawlspace by itself without getting stuck or trapped. The overall goal of the robot is to reduce possible harm to a human, which it will do by creating a 3D map of the environment. Because a human inspector eventually needs to enter the crawlspace themselves, the map lets them know what to expect from the crawlspace and prepare beforehand for any dangers or problems.

Requirements

There are a few functions that the robot must be able to perform in order to work as a general crawlspace robot. Additionally, since we are improving on current models that rely on cameras and human control, there are some further requirements.

Firstly, it should be small enough to enter crawlspaces. In the US, crawlspaces typically range from 18in to 6ft (around 45cm to 180cm) [37]. In the BENELUX (Belgium, Netherlands, Luxembourg), the average lies between 40cm and 80cm [38], with some even being smaller than 35cm. Entrances are of course even smaller.

The robot must also be protected from the dangers of the environment. This means a protective casing, protection against live wires, reasonable waterproofing (the robot is not designed to work under water, but humidity or leaks should not shut it down) and a way to be safe from animals.

Next, it must also be able to traverse the space, regardless of pipes, or small sets of debris.

Following from that, it must also be able to travel autonomously, while keeping track of its position, to make sure it has been through the entire crawlspace.

Lastly, the most important added feature, it must be able to complete a 3D mapping of the environment.

To be able to perform these tasks, there are some technical requirements.

The robot must have enough data storage or a way to transmit it quickly, to handle the 3D modelling.

Next, it must have enough processing power to navigate through the environment at a reasonable speed.

It must have a power supply strong enough to make sure it can complete the mapping of a full crawlspace.

Lastly, it must have

Current crawlers

As mentioned before, there are some robots already in use to help inspectors with their job.

First, we have inspectioncrawlers, where different crawler robots can be bought. All of their robots share basic specifications, consisting of at least hours of runtime, high quality cameras, protective covers, wireless (distant) control, waterproof electronics and good lighting. The main advantage of these robots is that they are capable of providing an almost 360° camera view of their surroundings, which allows an inspector to see most of the environment. However, control by a human operator is still necessary.

Next there is the GPK-32 Tracked Inspection Robot from SuperDroid Robots. With dimensions of only 32cm by 24cm and a height of 19cm (12.5" X 9.5" X 7.25"), it can easily fit in most crawlspaces. Included are several different protective items such as a Wheelie Bar to protect from flipping, a Roll Cage to protect the camera and a Debris Deflector. Its biggest disadvantage is that it requires line of sight or proximity in order to be controlled wirelessly.

Lastly, there is a tethered inspection robot from SuperDroid Robots. The entire system is waterproof, has a longer runtime and the camera allows for a 360° pan with a -10°/+90° tilt, which allows for clear vision. There are two main disadvantages: its size and the fact that it requires a tether to be controlled. With dimensions of 48cm by 80cm and a height of 40cm (18.9" X 31.2" X 15.7"), it is a bit big for some crawl spaces, which might cause it to get stuck more easily. Furthermore, the tether means that the cable can also easily get stuck and that the robot requires more precise control.

- ↑ Z. Kowalczuk and T. Merta, "Modelling an accelerometer for robot position estimation," 2014 19th International Conference on Methods and Models in Automation and Robotics (MMAR), Miedzyzdroje, Poland, 2014, pp. 909-914, doi: 10.1109/MMAR.2014.6957478.

- ↑ Woodman, O. J. (2007). An introduction to inertial navigation (No. UCAM-CL-TR-696). University of Cambridge, Computer Laboratory.

- ↑ T. Lee, J. Shin and D. Cho, "Position estimation for mobile robot using in-plane 3-axis IMU and active beacon," 2009 IEEE International Symposium on Industrial Electronics, Seoul, Korea (South), 2009, pp. 1956-1961, doi: 10.1109/ISIE.2009.5214363.

- ↑ Mapping and localization module in a mobile robot for insulating building crawl spaces. (n.d.). https://www.sciencedirect.com/science/article/pii/S0926580517306726

- ↑ Wang, Z. and Gu, H. (2007), "A review of locomotion mechanisms of urban search and rescue robot", Industrial Robot, Vol. 34 No. 5, pp. 400-411. https://doi.org/10.1108/01439910710774403

- ↑ Jean-Luc Paillat, Philippe Lucidarme, Laurent Hardouin. Variable Geometry Tracked Vehicle, description, model and behavior. Mecatronics, 2008, Le Grand Bornand, France. pp.21-23. hal-03430328

- ↑ Athani, V. V. (1997). Stepper motors: fundamentals, applications and design. New Age International.

- ↑ https://arxiv.org/pdf/1903.01067v2.pdf

- ↑ http://www.roboticsproceedings.org/rss09/p37.pdf

- ↑ https://www.robots.ox.ac.uk/~mobile/drs/Papers/2022RAL_zhang.pdf

- ↑ Luis C. Básaca-Preciado, Oleg Yu. Sergiyenko, Julio C. Rodríguez-Quinonez, Xochitl García, Vera V. Tyrsa, Moises Rivas-Lopez, Daniel Hernandez-Balbuena, Paolo Mercorelli, Mikhail Podrygalo, Alexander Gurko, Irina Tabakova, Oleg Starostenko (2013), Optical 3D laser measurement system for navigation of autonomous mobile robot, https://www.sciencedirect.com/science/article/pii/S0143816613002480

- ↑ Design and basic experiments of a transformable wheel-track robot with self-adaptive mobile mechanism | IEEE Conference Publication | IEEE Xplore

- ↑ Rough Terrain Motion Planning for Actuated, Tracked Robots | SpringerLink

- ↑ IEEE Xplore Full-Text PDF:

- ↑ Dorit Borrmann, Andreas Nüchter, Marija Ðakulović, Ivan Maurović, Ivan Petrović, Dinko Osmanković, Jasmin Velagić, A mobile robot based system for fully automated thermal 3D mapping (2014), https://www.sciencedirect.com/science/article/pii/S1474034614000408

- ↑ Zhiliang Ma, Shilong Liu, A review of 3D reconstruction techniques in civil engineering and their applications (2018), https://www.sciencedirect.com/science/article/pii/S1474034617304275

- ↑ Analysis and optimization of geometry of 3D printer part cooling fan duct - ScienceDirect

- ↑ Juan Li, Xiang He, Jia L, 2D LiDAR and camera fusion in 3D modeling of indoor environment (2015), https://ieeexplore.ieee.org/document/7443100

- ↑ https://www.mdpi.com/2072-4292/14/12/2835

- ↑ Francesco Amigoni, Vincenzo Caglioti, An information-based exploration strategy for environment mapping with mobile robots, Robotics and Autonomous Systems, Volume 58, Issue 5, 2010, Pages 684-699, ISSN 0921-8890, https://doi.org/10.1016/j.robot.2009.11.005. (https://www.sciencedirect.com/science/article/pii/S0921889009002024)

- ↑ M. Betke and L. Gurvits, "Mobile robot localization using landmarks," in IEEE Transactions on Robotics and Automation, vol. 13, no. 2, pp. 251-263, April 1997, doi: 10.1109/70.563647.

- ↑ Paillat, Jean-Luc & Lucidarme, Philippe & Hardouin, Laurent. (2008). Variable Geometry Tracked Vehicle (VGTV) prototype: conception, capability and problems.

- ↑ Paillat, Jean-Luc & Lucidarme, Philippe & Hardouin, Laurent. (2008). Variable Geometry Tracked Vehicle (VGTV) prototype: conception, capability and problems.

- ↑ Wang, Z. and Gu, H. (2007), "A review of locomotion mechanisms of urban search and rescue robot", Industrial Robot, Vol. 34 No. 5, pp. 400-411. https://doi.org/10.1108/01439910710774403

- ↑ Design and basic experiments of a transformable wheel-track robot with self-adaptive mobile mechanism | IEEE Conference Publication | IEEE Xplore

- ↑ Design and basic experiments of a transformable wheel-track robot with self-adaptive mobile mechanism | IEEE Conference Publication | IEEE Xplore

- ↑ Design and basic experiments of a transformable wheel-track robot with self-adaptive mobile mechanism | IEEE Conference Publication | IEEE Xplore

- ↑ Rough Terrain Motion Planning for Actuated, Tracked Robots | SpringerLink

- ↑ IEEE Xplore Full-Text PDF:

- ↑ IEEE Xplore Full-Text PDF:

- ↑ IEEE Xplore Full-Text PDF:

- ↑ Analysis and optimization of geometry of 3D printer part cooling fan duct - ScienceDirect

- ↑ Analysis and optimization of geometry of 3D printer part cooling fan duct - ScienceDirect

- ↑ Analysis and optimization of geometry of 3D printer part cooling fan duct - ScienceDirect

- ↑ Gromicko, N. (n.d.). Crawlspace Hazards and Inspection. InterNACHI®. https://www.nachi.org/crawlspace-hazards-inspection.htm

- ↑ Cink, A. (2022, 5 April). Crawl Bots for Home Inspectors: Are they worth the investment? InspectorPro Insurance. https://www.inspectorproinsurance.com/technology/crawl-bots/

- ↑ Crawl Pros. (2021, 12 March). The Low Down: Crawl Spaces vs. Basements 2022. https://crawlpros.com/the-low-down-crawl-spaces-vs-basements/

- ↑ G. (2022, 19 December). Kruipruimte uitdiepen (10 - 70 cm). De Kruipruimte Specialist. https://de-kruipruimte-specialist.nl/kruipruimte-uitdiepen/

- ↑ https://www.inspectioncrawlers.com/

- ↑ https://www.superdroidrobots.com/store/industries/pest-control/product=2729

- ↑ https://www.superdroidrobots.com/store/industries/pest-control/product=2452