PRE2022 3 Group12: Difference between revisions


==Design Goal==

===Problem Statement===

A major demographic challenge is ageing population. By around 2040, it is expected that one-quarter of the population will be aged 65 years or older. Compared to today, the size of this group of people will have increased by about 1.2 million people, all while the number of people working (in the age group 20 to 64 years old) will stay roughly the same. <ref>[https://www.cbs.nl/en-gb/news/2020/51/forecast-population-growth-unabated-in-the-next-50-years Forecast: Population growth unabated in the next 50 years]</ref> This means that there will be relatively fewer healthcare workers available for the amount of elderly causing a dramatic decrease in the support ratio and a shortage of healthcare workers.<ref>Johnson, D.O., Cuijpers, R.H., Pollmann, K. ''et al.'' Exploring the Entertainment Value of Playing Games with a Humanoid Robot. ''Int J of Soc Robotics'' 8, 247–269 (2016). <nowiki>https://doi.org/10.1007/s12369-015-0331-x</nowiki></ref> The reduced support ratio not only results in higher pressure on healthcare workers, but also causes increasing loneliness among elderly people, especially those aged 75 years or older.<ref>[https://www.cbs.nl/en-gb/news/2020/13/nearly-1-in-10-dutch-people-frequently-lonely-in-2019 Nearly 1 in 10 Dutch people frequently lonely in 2019]</ref> One possible way to mitigate loneliness is to provide social support in the form of entertainment. However, with the increasingly larger group of elderly people, it will become harder for healthcare workers to provide entertainment activities for the elderly. It would therefore be interesting to consider the use of a social robot to entertain the elderly. Currently, socially assistive robots have already been applied to assist the elderly. The PARO robot (a pet robot with the appearance of a seal) is a perfect example, which is employed as a therapeutic device for older people, children, and people with disabilities.<ref>Šabanović Selma, & Chang, W.-L. (2016). 
Socializing robots: constructing robotic sociality in the design and use of the assistive robot paro. ''AI & Society: Journal of Knowledge, Culture and Communication'', ''31''(4), 537–551. <nowiki>https://doi.org/10.1007/s00146-015-0636-1</nowiki></ref> Games can also be a good method to entertain people and provide social interaction. Especially for elderly people, a low-tech card game would be most effective since it might be more familiar than a digital game.<ref>[https://doi.org/10.1145/1463160.1463205 Designing and Evaluating the Tabletop Game Experience for Senior Citizens]</ref> Thus, the design goal addresses how a social robot can be developed that is able to play card games for the purpose of entertaining elderly people and mitigating loneliness in an ageing society.

===User===

The purpose of the entertainment robot would be to provide the elderly with the opportunity to play physical card games without the need for other players. For example when they are unable to visit others, or unable to have visitors, they can still play with the robot and enjoy a game of cards. Even though such a robot could be beneficial for anyone who is for some reason unable or uninclined to play with others, this robot is specifically focused on elderly people since they are mostly faced with the major concern of increased loneliness due to ageing. Furthermore, providing social support to elderly people in the form of entertainment will have three main benefits.<ref>Johnson, D.O., Cuijpers, R.H., Pollmann, K. ''et al.'' Exploring the Entertainment Value of Playing Games with a Humanoid Robot. ''Int J of Soc Robotics'' 8, 247–269 (2016). <nowiki>https://doi.org/10.1007/s12369-015-0331-x</nowiki></ref> Firstly, it has been shown that a social robot can improve the well-being of the elderly. Secondly, the social robot could engage the elderly in other tasks such as taking medicine or measuring blood pressure, which reduces the workload of caregivers that would otherwise have to perform these tasks. Lastly, due to the physical embodiment of the robot, it will provide a richer interaction for elderly people in contrast to online games. Thus, the card-game playing robot will increase the Quality of Life (QoL) of the elderly by providing them with social support.<ref>[https://ieeexplore.ieee.org/document/7745165 Just follow the suit! Trust in Human-Robot Interactions during Card Game Playing] </ref> However, the elderly are generally assumed to have more difficulties with technology.<ref name=":0">[https://doi.org/10.1080/01449290601173499 How older people account for their experiences with interactive technology.]</ref> This could pose a challenge when the elderly have to learn how to use the robot. 
Nevertheless, this could be accounted for during the design process by incorporating an easy-to-use user interface. On top of that, it is expected that if elderly people are able to properly use and understand the product, the younger generations will be able to do so as well, which can potentially make the robot relevant to multiple stakeholders. | |||

===Related Work:=== | |||

Even though a physical card-game playing robot does not exist, there are papers that describe similar research. Firstly, the research by A. Al Mahmud et al. focuses on tabletop technology.<ref name=":2">[https://doi.org/10.1016/j.entcom.2010.09.001 Designing social games for children and older adults: Two related case studies]</ref> The research aimed to create a game for children and elderly people while combining digital and physical aspects of games. Because of the digital aspect, a new element of uncertainty was introduced into the game, which was very appreciated by the users. They also appreciated that there were physical objects to hold, such as gaming tiles and cards. Next, the research by D. Pasquali et al. focuses on whether a robot can detect important information about its human partner’s inner state, based on eye movement.<ref name=":3">[https://doi.org/10.1145/3434073.3444682 Magic iCub: A Humanoid Robot Autonomously Catching Your Lies in a Card Game]</ref> The robot also had a second goal, namely doing an entertaining activity with a human. The human is only lying about the secret card, and the robot has to then guess which card is the secret card out of six cards. Although the robot did not have 100% accuracy in guessing the secret card, their human partners still considered the game entertaining. Lastly, the research by F. Correia et al. investigated the trust levels between robots and humans such that it would be possible to create a social robot that would be able to entertain elderly people, who suffer from social isolation.<ref name=":4">[https://doi.org/10.1109/ROMAN.2016.7745165 Just follow the suit! Trust in Human-Robot Interactions during Card Game Playing]</ref> The paper describes an experiment in which only one robot with a card game was used. Furthermore, groups were formed where the robot could either be your partner with whom you needed to work together, or the robot could be your opponent. 
The authors of this paper only considered the trust levels of human partners and concluded that humans are able to trust robots. But to establish higher trust levels between the humans and the robot, the humans should interact more with the same robot. | |||

===Purpose:=== | |||

The previously discussed papers provide good insight into the engagement between robots and humans during games. For example, during the research by D. Pasquali et al. a human performed an entertaining activity with a robot.<ref name=":3" /> However, the game that was played was a game with tricks and guessing, instead of a game usually played between two people. The research by F. Correia et al. does have a robot player as an opponent, but the main focus of this project was on the trust levels between partners. <ref name=":4" /> So, the current research is still insufficient to design a card-game playing robot specifically aimed at elderly people. According to a study that focused on the motivational reasons for elderly people to play games, difficulty systems are important to engage more people.<ref name=":1">[https://www.researchgate.net/publication/277709317_Motivational_Factors_for_Mobile_Serious_Games_for_Elderly_Users Motivational Factors for Mobile Serious Games for Elderly Users] </ref> If the game is too easy it could become boring, and if the game is too hard it could cause anxiety. As has already been stated, the primary user also experiences difficulties with current technology.<ref name=":0" /> If the primary user is unable to figure out how to use the robot, or it takes too much effort they are less likely to engage with the robot. Therefore, it is important to figure out how to make the user interface of the robot easy and understandable for elderly people. | |||

The purpose of this research is to investigate how the user interface of a card-game playing robot can be designed so that elderly people can easily use it, and how a difficulty system can be implemented in the software of the robot such that the different levels of difficulty are appropriate for the elderly. The findings of the research will be implemented by means of software developed for a card-game playing robot. Due to limitations, the physical body of the robot will not be realized apart from a camera to detect the physical playing cards. Several steps will be taken to answer these research questions. Firstly, a user study with elderly people and caregivers will be conducted to explore important design aspects of a card-game playing robot. Next, both functional and technical specifications will be formulated according to the MoSCoW method. Then, user fun, object recognition and the gameplay strategy of the card-game playing robot will be discussed. Lastly, conclusions will be drawn about the design, and points for improvement will be listed.

==User study== | |||

===User interviews=== | |||

The aim of conducting user interviews is to receive feedback from the user in order to make design choices that are more centered around the user. The user interviews specifically focused on a few aspects that are important to the research questions. Firstly, even though the ambition is to have the robot play multiple card games in the future, it is desirable to know which card game should be focused on during this research for developing an appropriate card-game strategy and object recognition algorithm. Therefore, participants were asked how familiar they are with Uno, Crazy Eights or Memory. Secondly, for developing the difficulty model, it is useful to know how much the elderly value winning a card game and how winning relates to the fun experienced during the game.

====Method==== | |||

Interviews were conducted including both the elderly and caretakers, which are the primary and secondary users respectively. In total, six participants were interviewed of which three participants were elderly people and three participants were caretakers. Due to the differences between the two groups of participants, both groups were asked general questions and more specific questions depending on the group. For example, the questions for the elderly were more directed towards their experience with card games while the questions for the caregivers were more focused on the requirements that the robot should adhere to. The complete questionnaire can be found on the [[PRE2022 3 Group12/User interview|User Interview]] page, which can also be found in the [[PRE2022 3 Group12#Appendix|Appendix]]. | |||

====Results==== | |||

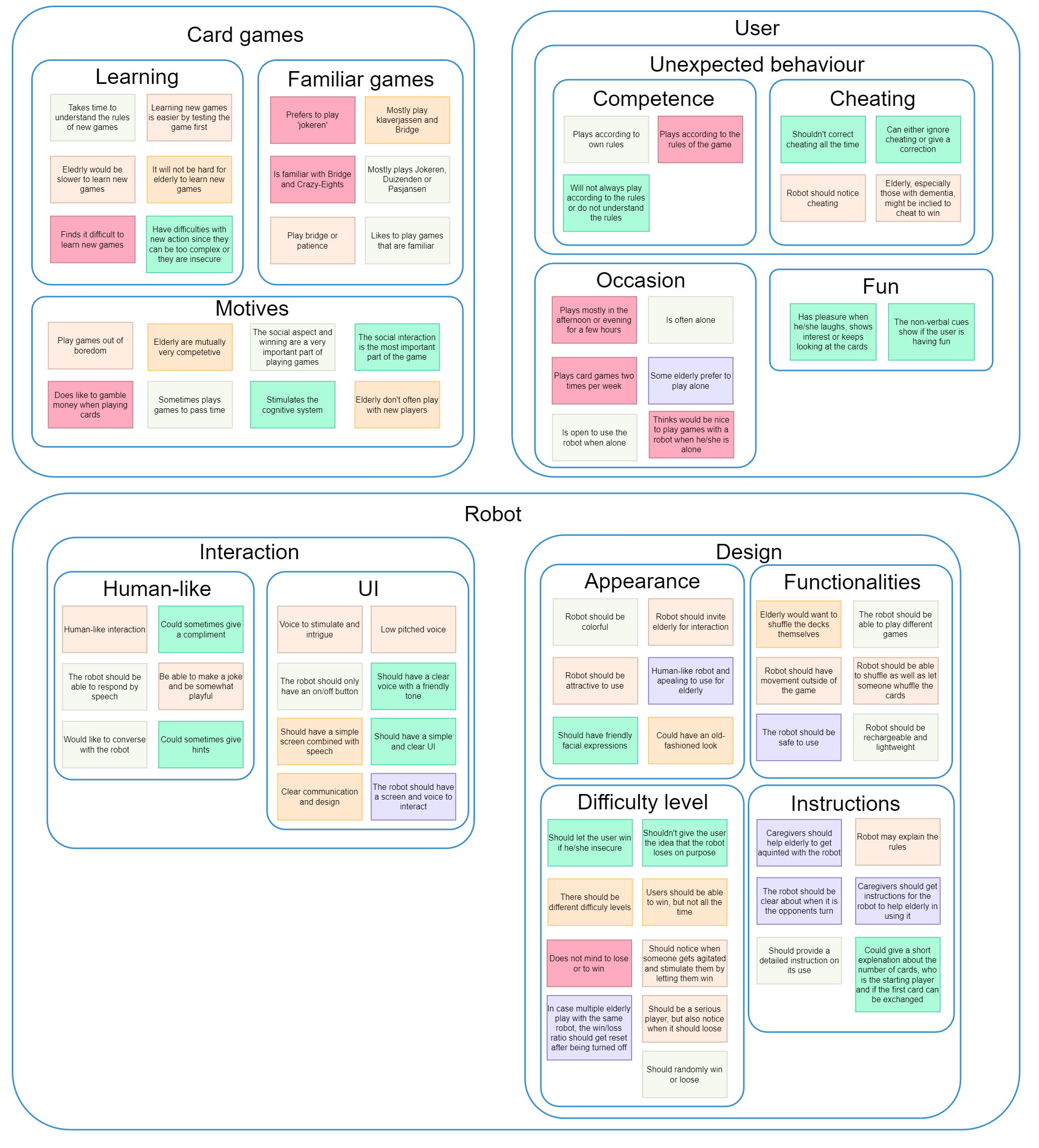

The answers of the participants were analyzed by means of an affinity diagram. First, transcriptions were made of every interview. From these, all key phrases or ideas containing relevant information were summarized on sticky notes. These sticky notes were then grouped by the relations between similar phrases, resulting in three groups: 'robot', 'user' and 'card games'. These groups were further subdivided into smaller categories. For example, 'learning', 'familiar games' and 'motives' are subgroups of the overarching 'card games' group. Finally, a coherent definition was assigned to each group. The resulting affinity diagram is shown below, providing an overview of the data collected from the interviews. Each color in the affinity diagram represents a different participant, and the boxes display the different (sub)groups.

The affinity diagram shows that elderly people mostly play the card games ‘Jokeren’ and ‘Bridge’. While these are the most frequently played games, the participants are also familiar with card games such as 'Crazy Eights', ‘Klaverjassen’, ‘Duizenden’ and Patience. They were especially familiar with the Dutch version of 'Crazy Eights', which is called 'Pesten'. Hence, the choice was made to focus on creating a card-game playing robot that can play 'Pesten'. It was also found that it can be hard for the elderly to learn new card games and that the elderly mainly play games for the social interaction. While social interaction was mentioned most often, competitiveness and passing time were also mentioned. Not all elderly people play according to the same rules, and some might even be inclined to cheat during the game. Most participants responded that the robot should interact much as a human would: it should be able to respond by speech and occasionally give, for example, a compliment or a hint, or make a joke. On top of that, most participants answered that the user interface could be realized by a screen or display in combination with a voice. However, it is very important that the UI is clear and simple. Several participants responded that the robot should also be appealing to the elderly, which could be realized by adding facial expressions, color and even an old-fashioned look. Furthermore, the desired functionalities of the robot include shuffling, being able to play multiple games, movement, and a lightweight design. It should be a serious player, but must also notice when it should let the elderly user win, since this can boost the user's confidence and therefore motivate them to keep playing. 
Lastly, detailed instructions must be given to the caretakers on the use of the robot and the robot should be clear about the number of cards each player needs, who starts the game and when it is the opponent's turn. [[File:Affinity diagram.png|1105x1105px|Affinity diagram|center|frameless]] | |||

<br /> | |||

===User requirements=== | |||

From the results of the user interviews, several concrete user requirements have been identified. These requirements are discussed below and supported by relevant literature. Firstly, due to their age, most elderly people have increased problems with their sight, hearing, or motor skills.<ref name=":1" /> Therefore, it is important that the card-game playing robot addresses these problems. The user interviews showed that the robot should be lightweight, so that the elderly can still carry it. Furthermore, to compensate for reduced hearing, a screen should be included with an easy-to-read font and text size. For the elderly with reduced sight, the robot should have a voice with clear and loud audio. This voice should specifically be low-pitched, as concluded from the affinity diagram. Thus, to address problems related to old age, the robot must be lightweight and have a clear screen as well as a low-pitched voice.

Secondly, the literature study has also shown that elderly people often experience more difficulties when learning something new. For this reason, it can be argued that the robot should be able to play games that the elderly are already familiar with, or games that are at least similar to those. As a result, the elderly will understand and learn these games more easily, which in turn makes the robot easier to use.<ref name=":0" /> This is further supported by the interview results, since many participants responded that they find it difficult to learn and understand the rules of new games. From the user interviews, it was found that the most familiar games are ‘Jokeren’, ‘Klaverjassen’, 'Duizenden', Bridge, Crazy Eights, Pesten and Patience. Since Pesten can be played by two people and is, compared to ‘Jokeren’ for example, a relatively simple game, this research will mostly focus on developing a game-playing strategy for Pesten. Note, however, that since the object recognition algorithm is trained on a regular deck of playing cards, the software could be expanded to include other games as well, such as ‘Jokeren’ or Bridge.

Next, multiple difficulty settings that balance the robot’s difficulty level against the ability of the user are an important requirement with respect to the user experience.<ref name=":1" /> The interview results showed that the robot should be a serious player and not let the user win all the time. To engage users more while playing, it is important that the robot has a competitive nature rather than being relationship-driven. This is further confirmed by the fact that one of the motives to play card games is competitiveness.<ref>[https://ieeexplore.ieee.org/document/9473499 Friends or Foes? Socioemotional Support and Gaze Behaviors in Mixed Groups of Humans and Robots]</ref> However, the robot could lose on purpose to encourage the user whenever the user is insecure or agitated.

Furthermore, another aspect that could improve the user experience is the implementation of motivational messages during the game.<ref name=":1" /> As indicated by the results of the interviews, the interaction with the robot should be human-like. Also, the social interaction of card games is one of the main motives of players. Therefore, the ability of the robot to converse with the user plays a role in the user experience. This could be realized by having the robot give compliments when the user makes a good move, or hints when the user is not playing very well. On top of that, the robot could make jokes to be somewhat playful.

Lastly, a card-game playing robot is required to deal with unexpected user behaviors, such as cheating or different game rules. This is evident from the user interview results where some participants have mentioned that they play according to different rules and it has even been mentioned that some elderly, especially those with dementia, might be inclined to cheat in order to win a game. To deal with such behavior, the robot should first be able to notice whether a move made by the opponent is valid. Then there are two options, the robot can either correct the user by pointing out that a move was not valid, for example by using speech, or completely ignore the invalid move and continue playing. However, dealing with unexpected user behavior is outside the scope of this research and hence not a specification. | |||

==Specifications== | |||

===Functional specifications=== | |||

Functional MoSCoW table for the project: | |||

{| class="wikitable"
!Must have<br />(project won’t function without these)
|Ability to classify regular playing cards (95%) in a live camera feed
|Have a valid strategy at playing the game
|Be understandable and interactable by elderly people
|Give general commands to a phantom robot to play the game
|
|-
!Should have<br />(improves the quality of the project)
|Ability to track moving/obscured/out-of-frame cards
|Strategy should optimize user fun
|Communicate intent with the user; display/audio
|
|
|-
!Could have<br />(non-priority nice to have features)
|Have a self-contained user interface window to play in
|Know strategies for multiple popular card games
|Ability to classify non-standard cards from other games
|A robot design ready to be built and integrated with the program
|Robust ability to keep playing after user (accidentally) cheats
|-
!Won’t have<br />(indicates maximum scope of the project)
|No physical device except for the camera
|
|
|
|
|}

===Technical specifications===

Technical MoSCoW table for the project: | |||

Concrete requirements for the functional entries above. | |||

{| class="wikitable"
!Must have<br />(project won’t function without these)
|At least 95% accuracy when classifying any playing card (suit and number)
|Must be able to decide on a valid move within 3 seconds
|The UI is designed with proven methods to ease interactivity for the elderly
|The code must be compatible with some robot movement interface
|
|-
!Should have<br />(improves the quality of the project)
|The project should remember all cards it was confident it saw (95%) during a match as if they are in play
|The project should have a notion of a ‘fun’ level and adjust its moves based on this (combined with the selected difficulty)
|The project should communicate clearly at any time (English & Dutch) what it is doing at the moment; thinking, moving, waiting for the user’s turn
|
|
|-
!Could have<br />(non-priority nice to have features)
|A game can be launched & played without the use of a terminal, inside a browser-tab or separate window
|Know valid strategies for at least 3 popular card games
|Ability to classify (95% accuracy) non-standard cards from other games (like Uno)
|A 3D robot CAD design ready to be built and integrated with the program without much extra work
|After noticing an (unexpected) state-change of the game the project should continue playing, not try to correct the user
|-
!Won’t have<br />(indicates maximum scope of the project)
|No physical device except for the camera
|
|
|
|
|}

<br /> | |||

==Object recognition== | |||

===Dataset & AI model===

In order for a digital system to play a physical card game, it must be able to read the environment and recognize the cards that are currently in play. Therefore, the designed system makes use of an object recognition model. The target set in the 'must have' specifications is that this AI should be able to recognize playing cards with an accuracy of at least 95%.

[[File:Annotated training image.png|thumb|Screenshot from Roboflow showing an annotated image of playing cards]] | |||

While there already exist many projects that can classify cards, as well as many different types of AI models, the implemented algorithm was constructed using the YOLOv8 model and the Roboflow image labelling software. The Roboflow software was used because of its easy tweaking and training interface, and because it allowed for easy collaboration on the dataset. The Roboflow project with the dataset for this project is also [https://universe.roboflow.com/0lauk0/playing-cards-muou8/ publicly available]. YOLOv8 is an object detection model which outputs a set of boxes around all detected objects, and attaches a confidence score to each of these predictions.

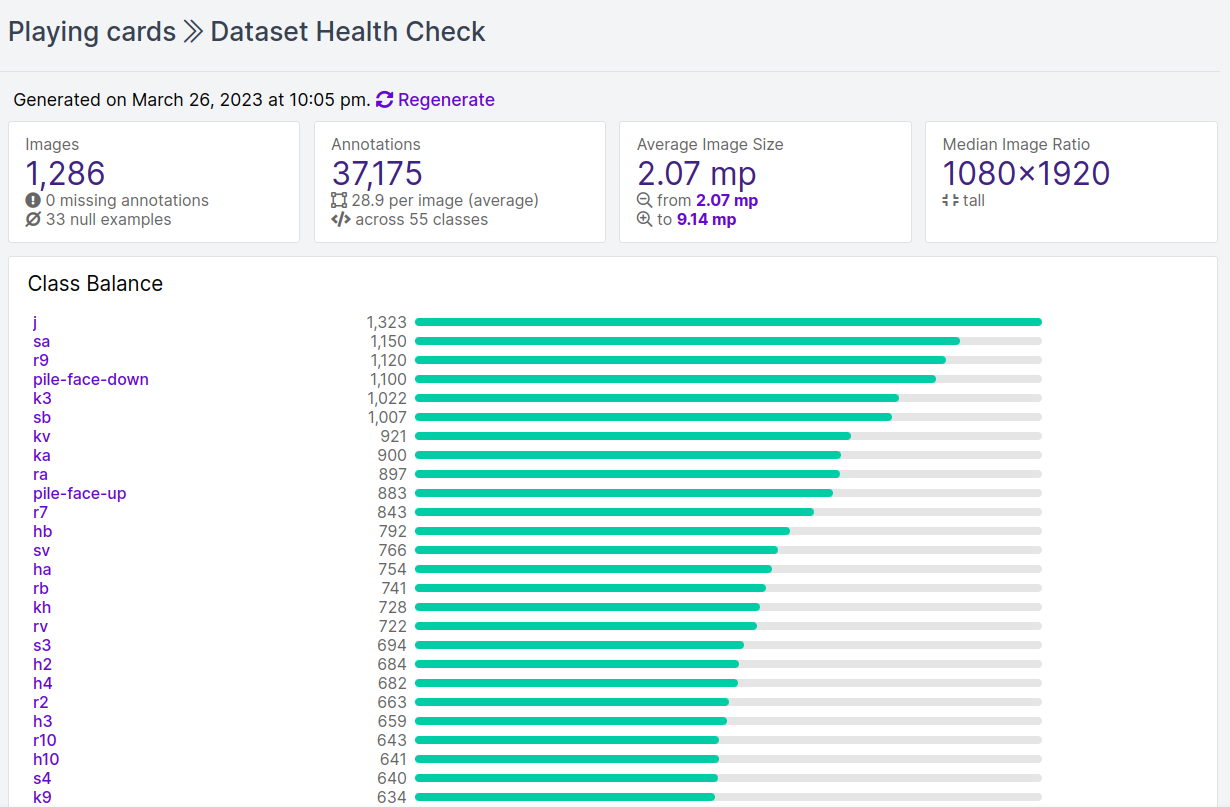

With the YOLOv8 object detection model as a base, the algorithm was trained on the dataset with labels as exported from Roboflow. The source video footage was split up into individual pictures and hand labeled in Roboflow. This labeling was done to create datasets in which objects were correctly ‘recognized’ (labeled), which could then be used by the model to learn from or to verify its own skills. Because a deck contains an ace, king, queen, jack and the ranks 10 down to 2 for each of the four suits, plus a joker, 53 different classes needed to be represented and labeled in the dataset. These classes were labeled based on their suit: diamonds, clubs, hearts or spades (‘r’, ‘k’, ‘h’ or ‘s’ respectively), followed by their value: 2-10 for the ranked cards or ‘b’, ‘v’, ‘h’ or ‘a’ for jack, queen, king and ace respectively. So, for example, the two of hearts would be classed as ‘h2’ and the ace of spades as ‘sa’. The only exception is the joker card, which was labeled ‘j’ as it does not have a suit. This time-consuming task could in later steps be partially automated as the AI model improved. Using the initial versions of the AI model, new data could be labeled by the AI and then manually corrected where necessary. However, the accuracy of the early versions was not always high, especially after introducing a different-looking deck of playing cards.
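The labeling scheme described above can be sketched in a few lines of Python. This is only an illustration of the naming convention, not the code used in the project: the names <code>SUITS</code>, <code>FACES</code>, <code>build_class_labels</code> and <code>describe</code> are hypothetical, and the order of the generated labels is an assumption.

```python
# Suit and face-card letters as given in the text above.
SUITS = {"r": "diamonds", "k": "clubs", "h": "hearts", "s": "spades"}
FACES = {"b": "jack", "v": "queen", "h": "king", "a": "ace"}


def build_class_labels():
    """Return all 53 class labels: 4 suits x 13 values, plus the joker."""
    values = [str(n) for n in range(2, 11)] + list(FACES)
    labels = [suit + value for suit in SUITS for value in values]
    labels.append("j")  # the joker has no suit
    return labels


def describe(label):
    """Translate a label such as 'h2' or 'sa' into a readable card name."""
    if label == "j":
        return "joker"
    suit, value = label[0], label[1:]
    return f"{FACES.get(value, value)} of {SUITS[suit]}"


CLASS_LABELS = build_class_labels()
```

Note that the letter ‘h’ occurs both as a suit (hearts) and as a value (king), so the king of hearts becomes ‘hh’. Because the suit is always the first character, the label can still be decoded unambiguously.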

[[File:Roboflow Dataset Health Check.png|thumb|Statistics of the dataset as shown by Roboflow]] | |||

The data in Roboflow is automatically divided into training, validation and test parts, using a 70%-20%-10% split. The training data is directly exposed to the model during the training process. The validation set is indirectly exposed to the model, and is used to automatically tweak the model's parameters in between the training cycles, which are also called epochs. The test set is not exposed to the model and is only used to assess its performance after it has been trained. The entire dataset consists of 1286 images, with 37175 annotations.
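To give an impression of the sizes involved, a 70%-20%-10% split of 1286 images can be sketched as follows. Roboflow performs this split itself; the function <code>split_dataset</code>, the fixed random seed and the rounding rule are assumptions for illustration only, so the exact counts in the Roboflow project may differ slightly.

```python
import random


def split_dataset(items, fractions=(0.7, 0.2, 0.1), seed=0):
    """Shuffle items and cut them into train/validation/test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * fractions[0])
    n_valid = round(len(shuffled) * fractions[1])
    train = shuffled[:n_train]
    valid = shuffled[n_train:n_train + n_valid]
    test = shuffled[n_train + n_valid:]  # the remainder goes to the test set
    return train, valid, test


# Image indices stand in for the 1286 images of the dataset.
train, valid, test = split_dataset(range(1286))
print(len(train), len(valid), len(test))
```

Under these assumptions the split contains roughly 900 training, 257 validation and 129 test images.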

The training process of the YOLOv8 model is very compute-intensive. On an RTX 3060 graphics card, a single epoch (training cycle) of the model took close to 1.5 minutes to complete. To fully train the model on the constructed dataset, around 500 epochs were required, which adds up to a total training time of more than 12 hours (500 × 1.5 minutes ≈ 12.5 hours). The final model achieves a mAP@50 of 97.1%. mAP stands for mean average precision, and the 50 indicates the minimum overlap, 50% intersection over union, that a box predicted by the model must have with the corresponding labeled box to count as a correct prediction. This is important to take into account: for this dataset, the boxes do not have to align perfectly with the labeled data. As long as they overlap sufficiently, a prediction is considered correct.
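The overlap criterion behind mAP@50 is usually expressed as intersection over union (IoU) with a threshold of 0.5. The sketch below shows how such an overlap check can be computed for two axis-aligned boxes. It is a generic illustration, not the evaluation code of YOLOv8; the function names and the <code>(x_min, y_min, x_max, y_max)</code> box format are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_match(predicted, labeled, threshold=0.5):
    """A prediction counts as correct when its IoU reaches the threshold."""
    return iou(predicted, labeled) >= threshold
```

For example, a predicted box that is shifted by 20% of its width relative to the labeled box has an IoU of about 0.67 and still counts as a match, whereas a shift of 50% gives an IoU of about 0.33 and does not.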

===Implementation=== | |||

While playing a game, the output of a webcam is fed into the model continuously. The predictions that the model makes are then fed into an averaging system that averages them over a time period of 0.5 seconds, so that short-lived incorrect predictions are ignored. A box detected by the model is only used if it is detected for at least 0.5 seconds with a high enough confidence (>0.8).
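One possible way to implement such temporal averaging is sketched below. It is a simplified illustration under stated assumptions, not the project's actual implementation: the class <code>TemporalFilter</code> is hypothetical, detections are reduced to one confidence per card label per frame, and a frame in which a label is missing counts as confidence 0 for that label.

```python
from collections import deque


class TemporalFilter:
    """Accept a label only if its confidence, averaged over the last
    `window` seconds, exceeds `min_confidence`."""

    def __init__(self, window=0.5, min_confidence=0.8):
        self.window = window
        self.min_confidence = min_confidence
        self.frames = deque()  # entries: (timestamp, {label: confidence})

    def update(self, timestamp, detections):
        """Add one frame and return the labels that pass the filter."""
        self.frames.append((timestamp, dict(detections)))
        # Drop frames that are older than needed to cover the window.
        while len(self.frames) > 1 and self.frames[1][0] <= timestamp - self.window:
            self.frames.popleft()
        # Not enough history yet to cover the full window.
        if timestamp - self.frames[0][0] < self.window:
            return {}
        labels = set().union(*(d for _, d in self.frames))
        averaged = {
            label: sum(d.get(label, 0.0) for _, d in self.frames) / len(self.frames)
            for label in labels
        }
        return {l: c for l, c in averaged.items() if c > self.min_confidence}
```

In this sketch, a card that flickers into view for a single frame is averaged with zeros from the surrounding frames and therefore never reaches the 0.8 threshold, while a card that is seen steadily for half a second does.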

After the detections have been averaged over time, the boxes of the same card type are combined to form a grouped confidence. It was observed during testing that the model could sometimes detect a single corner of a card incorrectly, while the other three corners were detected correctly. By grouping all detections of the same card type, combining their coverage, and also factoring in the distance between all grouped boxes, a very precise combined confidence score is computed. When a sufficiently high combined score is reached, the type of the detected card is passed on to the game logic to be handled in the desired way.

==Difficulty Model== | |||

===User fun=== | |||

In designing a card game robot, it is important that a user has fun while playing the game and also wants to continue playing it in the future, since it would not be sustainable if a user only uses the robot once and then gets bored. This makes it important to know what fun is, how to get someone to enjoy the game, and how to measure whether someone is actually having fun at that time.

According to the article “Assessment of fun in interactive systems”,<ref name=":9">[https://doi.org/10.1016/j.cogsys.2016.09.007 Assessment of fun in interactive systems: A survey]</ref> there are three different aspects of fun, namely interaction, immersion and emotion. The first aspect, interaction, is defined as the phenomenon of mutual influence. Humans interact with the environment through the six senses. However, they tend to limit their attention to the percepts that are most in line with their internal goals. Secondly, immersion describes the degree to which a person is involved with, or focuses attention on, the task at hand. Lastly, the subjective part of fun is related to human emotions, which are defined by current mood, preferences and past experiences. Human emotions are made up of three hierarchical levels, namely the visceral, behavioral and reflective level. Firstly, the visceral level is triggered by sensory perception, resulting in physiological responses such as a change in heart rate, sweating or facial expressions. Secondly, the behavioral level involves the unconscious execution of routines. The emotions experienced at this level are related to the satisfaction of overcoming challenges. Lastly, the reflective level allows for conscious considerations in an attempt to control physical and mental bodily changes. Emotions are often classified in two dimensions. The first dimension is valence, which describes the extent to which an emotion is positive or negative. For example, joy has a high positive valence while sadness has a very negative valence. The second dimension is often referred to as arousal, which refers to the intensity of the emotion or the energy felt. Anger is, for example, a state of high arousal while boredom is a state of low arousal.

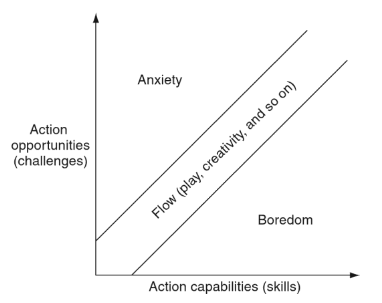

Consequently, from the three different aspects of fun, fun can be described as the feeling of being in control and overcoming challenges. Therefore, those three aspects of fun are related to the Flow Theory. This theory states that a person is in a flow when that person only needs to put in a limited effort to complete a task.<ref name=":9" /> In view of interaction, when a person is in a state of flow, it is effortless for that person to limit their attention to the task at hand, which makes the person experience enjoyment due to a feeling of control. At the same time, the task should be something which can actually be attained. Otherwise, the outcome cannot be controlled. Immersion relates to flow, since the degree to which someone is involved in a task depends on how much effort they need to put in. If someone needs to put in too much effort, they might become less immersed as they get stuck and cannot continue. Conversely, when something takes too little effort, the task will be finished very quickly and the person will not need to become very immersed. A difference between immersion and flow is that the initial stages of immersion do not guarantee enjoyment, but are still required to achieve flow.<ref name=":9" /> The last aspect of fun, namely emotions, is also related to the amount of effort needed for a task. If something is too difficult, people can start feeling frustrated or anxious, as is shown in the graph. But at the same time, if something does not require enough effort, they might get bored as it feels too easy and does not provide a challenge.

[[File:Flow Theory Graph.png|thumb|The flow state compared to skill and type of challenges.<ref name=":14">[https://books.google.nl/books?hl=nl&lr=&id=6IyqCNBD6oIC&oi=fnd&pg=PA195&dq=flow+theory+CSIKSZENTMIHALYI&ots=INJcTEW8sv&sig=gBNpVuZ7uECMlB0zsJTbFj7HHFc#v=onepage&q=flow%20theory%20CSIKSZENTMIHALYI&f=false Flow Theory And Research]</ref>]] | |||

As can also be seen in the graph, flow can vary among different people. If you have acquired more skills, a more difficult task is needed to demand the perfect amount of effort. In contrast, if you possess fewer skills, an easier task is required to limit the amount of effort. In other words, the level of the task at hand should match the person’s capabilities. This graph has therefore been linked to a difficulty system, which will be implemented in the game. Depending on the skills of the user, the difficulty of the game is increased or decreased to ensure that the user stays in their flow. As a result, the user keeps experiencing fun instead of anxiety or boredom. | |||

The study by Cutting et al.<ref>[https://doi.org/10.1098/rsos.220274 Difficulty-skill balance does not affect engagement and enjoyment: a pre-registered study using artificial intelligence-controlled difficulty]</ref> opposes the flow theory<ref name=":14" /> of the previous paragraphs. The authors state that they could not find a direct link between different difficulty-skill ratios and user enjoyment and engagement. They suggest that this might be because other factors influence the user’s enjoyment and engagement, such as relative improvement over time and, in this case, curiosity. Since the tests were performed with a new game, the authors suspect that the users’ curiosity about the new game may have masked any effect of the difficulty-skill ratio on enjoyment and engagement.

While it is important to know what user fun is and how it can be implemented in the game, it is equally important to be able to measure whether the user is actually having fun. In “The FaceReader: Measuring instant fun of use”,<ref name=":5">[https://doi.org/10.1145/1182475.1182536 The FaceReader: Measuring instant fun of use]</ref> two methods are described to measure emotions, namely non-verbal and verbal methods. Some of those methods can be automated as well, whereas others cannot or are harder to automate. | |||

Non-verbal methods focus on aspects for which “words” are not needed to predict how a person is feeling. For example, such methods can be implemented by using biometric sensors measuring heart rate or skin conductivity, detecting facial expressions and body movement or data hooking to capture game data such as progress measurements. On top of that, these methods can also be automated. The benefits of non-verbal methods are that there is generally no bias, as well as that there is no language barrier, and they usually do not bother the users during a task (with the exception of biometric sensors).<ref name=":5" /> | |||

Verbal methods require some form of input of the user. This can be done through rating scales, questionnaires, or interviews. Some of these methods can be automated, by using standard questions for example. But these methods can never be fully automated, as the users still have to give input themselves. An advantage of verbal methods is that they can be used to evaluate all emotions. Although, a disadvantage is that people actually provide feedback on how they felt after the task and not during the task.<ref name=":5" /> Thus, the difference between non-verbal and verbal methods is that non-verbal methods provide feedback on the experience of people during the process, whereas the verbal methods provide feedback on the way in which people experienced the end result. | |||

Given that non-verbal methods provide feedback on how people actually feel during a game and that these methods can be automated, therefore requiring no input from the user, non-verbal methods are preferred over verbal methods for creating a difficulty system for the card-game playing robot.

Non-verbal methods can be implemented by, for example, biometric sensors such as a heart rate monitor. However, these sensors are intrusive since they often need to be attached to the user’s body, which can influence the comfort of the user. It is also possible to detect facial expressions with a camera and machine learning. This method can provide an accurate assessment of the user’s emotional state. Nevertheless, it requires the implementation of complex algorithms. On top of that, this method can introduce biases due to bad quality training data and also threatens the privacy of the user since the user needs to be monitored with a camera during the game. | |||

In contrast, data hooking uses statistics of the game to base decisions on. For example, what is the win rate? How long does someone play? How many sets does someone play? How fast does someone make their decisions? Those statistics can all indicate whether someone is enjoying the game. If someone quits very quickly, this could be because they are bored, frustrated or because they have something else to do. If someone plays for a long time, however, they are probably enjoying the game. If someone is able to play their next move very fast, this is either because they are very focused and have thought ahead, or because they are playing at random. People also tend to view games as a challenge.<ref name=":1" /><ref>[https://dl.acm.org/doi/10.1145/49108.1045604 Fun]</ref> So, if people win games all the time, they will not be challenged anymore. On the other hand, people like to win as well. Therefore, one can implement a target win ratio to ensure that the game remains challenging whilst people still win regularly.

Data hooking has several benefits. Firstly, users will not be interrupted during a game to fill in rating scales or questionnaires. This will also improve the accessibility of the robot for the elderly, since the user interface does not need to include an option for rating scales. Another benefit of users not having to fill in questionnaires is that questionnaires tend to capture how people feel about the task afterwards and not how they felt during it.<ref name=":5" /> Data hooking, in contrast, only looks at the statistics of the game and therefore reflects how someone felt during the game. A final benefit is that data hooking is relatively easy to implement in the system, as it does not require extra devices or sensors such as a heart rate monitor or a camera to detect facial expressions.

Therefore, data hooking will be implemented to monitor various game statistics and adjust the difficulty accordingly. Both the win ratio of the user and the duration of each game will be measured to ensure that the “optimal” win/loss ratio is maintained. In further detail, if the user wins a game, the difficulty of the game will increase. On the other hand, if the user loses a game, the difficulty will decrease. Similarly, if the duration of a game is longer than the average duration, the difficulty will decrease, and vice versa.
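The two statistics mentioned above could be collected with a small helper like the one below. This is only a minimal sketch: the class name <code>SessionStats</code> and its methods are hypothetical and are not taken from the project's code.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class SessionStats:
    """Game statistics collected during one play session (hypothetical helper)."""
    results: list = field(default_factory=list)    # True when the user won a game
    durations: list = field(default_factory=list)  # game length in seconds

    def record_game(self, user_won: bool, duration_s: float) -> None:
        """Store the outcome and duration of one completed game."""
        self.results.append(user_won)
        self.durations.append(duration_s)

    def win_ratio(self) -> float:
        """User wins divided by completed games (0.0 before the first game)."""
        return sum(self.results) / len(self.results) if self.results else 0.0

    def mean_duration(self) -> float:
        """Average game length in seconds (0.0 before the first game)."""
        return mean(self.durations) if self.durations else 0.0


stats = SessionStats()
stats.record_game(user_won=True, duration_s=280.0)
stats.record_game(user_won=False, duration_s=340.0)
print(stats.win_ratio(), stats.mean_duration())
```

Because the helper only records what happens in the game itself, the user never has to answer a question for these values to be available.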

===Dynamic Difficulty=== | |||

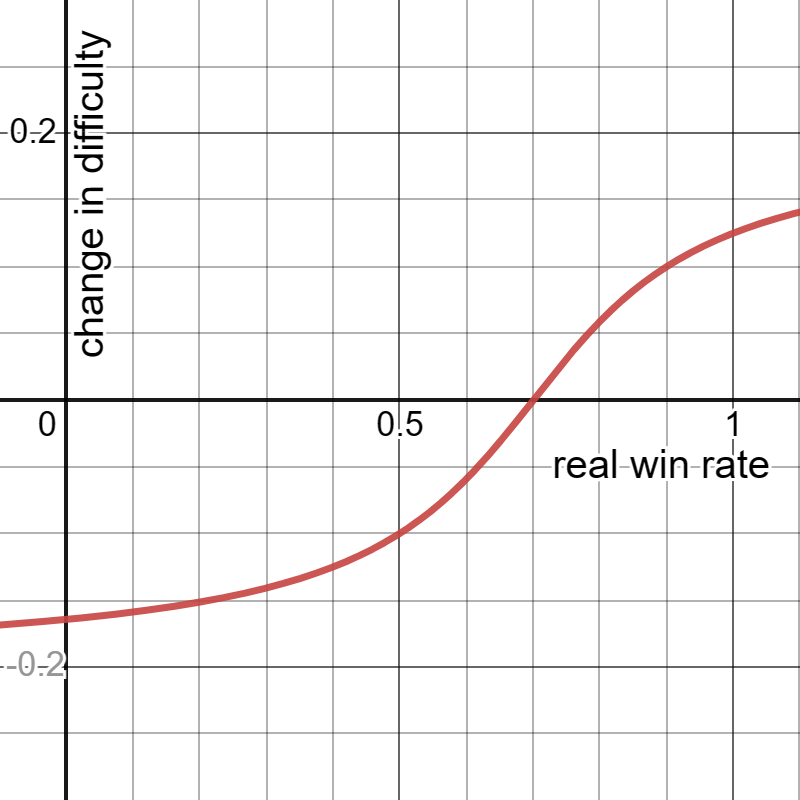

As stated before, the proxy variable used to optimise user fun is <code>difficulty</code>, implemented as a floating point number between 0.0 and 1.0. Using this proxy, the desired end-state after a small number of games (3 to 7) is that the <code>difficulty</code> converges to an ideal level where the player feels challenged but is not simply handed easy wins every time.

After completing every game in a play session the robot is designed to update its <code>difficulty</code> based on 2 metrics which were determined from the gameplay: | |||

[[File:Winrate atan.png|thumb|285x285px|The arctan function used to compute the difficulty change based on win rate.]] | |||

*The win ratio of the player in the play session. The total number of player wins divided by the total number of completed games. | |||

*The game time from start to finish, measured in seconds from drawing the first cards until the player wins or the robot wins the game. | |||

For the win rate, a target of 0.7 has been set (70% player wins), and the target mean game time is set to 5 minutes.

The robot's difficulty is updated using the following functions:

<code>winratio_deltadiff = 0.2 * arctan(5 * (real_win_ratio - 0.7)) * (2.0 / π)</code> | |||

<code>gametime_deltadiff = 0.1 * arctan((gametime / 60.0) - 5.0) * (-2.0 / π)</code> | |||

<code>difficulty += winratio_deltadiff + gametime_deltadiff</code> | |||

The arctan function is used for both metrics because it is continuous, it is symmetric about the origin (an odd function), and it has two horizontal asymptotes (at y=π/2 and y=-π/2), which bound its output. Additionally, if the derivative of the function should be increased for any specific input, the function can be scaled horizontally, which preserves the other mentioned properties.

In concrete terms, for the win ratio the arctan function is translated horizontally by +0.7 and then scaled horizontally by a factor of 5 (effectively compressing the function by a factor of 5). The output is multiplied by (2.0 / π), which normalises the function vertically to the range -1.0 to 1.0. Finally, it is multiplied by 0.2 so that the robot's difficulty changes by no more than 0.2 per game due to the win rate.

Similarly, the game time is divided by 60 to obtain minutes and the arctan function is translated horizontally by +5.0. The output is multiplied by (-2.0 / π), which normalises the function vertically to the range -1.0 to 1.0 and also inverts it (if the game time is lower than 5 minutes the difficulty increases, and vice versa). Finally, it is multiplied by 0.1, which is less than the factor for the win rate, since the difficulty should converge more strongly on the win-rate metric.
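The update rule described above can be written out as a short Python sketch. The two delta functions follow the formulas given earlier; the function <code>update_difficulty</code> and the clamping of the result to the range 0.0 to 1.0 are assumptions added here for illustration, since the formulas themselves do not keep the value inside that range.

```python
import math

TARGET_WIN_RATIO = 0.7     # target share of games won by the player
TARGET_GAME_MINUTES = 5.0  # target game length in minutes


def winratio_deltadiff(real_win_ratio: float) -> float:
    """Difficulty change due to the win ratio, bounded by -0.2 and +0.2."""
    return 0.2 * math.atan(5 * (real_win_ratio - TARGET_WIN_RATIO)) * (2.0 / math.pi)


def gametime_deltadiff(gametime_s: float) -> float:
    """Difficulty change due to the game time in seconds, bounded by -0.1 and +0.1."""
    return 0.1 * math.atan(gametime_s / 60.0 - TARGET_GAME_MINUTES) * (-2.0 / math.pi)


def update_difficulty(difficulty: float, real_win_ratio: float, gametime_s: float) -> float:
    """Apply both deltas after a completed game."""
    new_difficulty = (difficulty
                      + winratio_deltadiff(real_win_ratio)
                      + gametime_deltadiff(gametime_s))
    # Assumption: clamp so that the difficulty stays within 0.0 to 1.0.
    return min(1.0, max(0.0, new_difficulty))


# A player exactly on both targets leaves the difficulty unchanged.
print(update_difficulty(0.5, 0.7, 300.0))
```

Since both arctan terms are bounded, a single game can never shift the difficulty by more than 0.3 in total, even before clamping.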

====Target win ratio justification==== | |||

Martens and White<ref name=":6">[https://doi.org/10.1016/0022-1031(75)90015-3 Influence of win-loss ratio on performance, satisfaction and preference for opponents]</ref> researched the influence of the win ratio on performance, satisfaction and preference for opponents. The win ratio is the total number of wins divided by the total number of games played. They were able to confirm that players in an unfamiliar situation prefer to choose an opponent who has a low ability in playing the game, whereas players who are more familiar with the game are more likely to choose someone with a similar ability. They theorize that this is because players in a competitive situation are more concerned about protecting their self-esteem, as Wilson and Benner also found.<ref>[https://doi.org/10.2307/2786205 The effects of self-esteem and situation upon comparison choices during ability evaluation.]</ref> They found that performance is best at a 50% win ratio. They also found that task satisfaction increases up to a 50% win ratio, after which it stays the same.

Although Deci et al.<ref>[[doi:10.1177/014616728171012|When Trying to Win: Competition and Intrinsic Motivation]]</ref> concluded that a competitive environment makes winning a reward and therefore diminishes the intrinsic motivation of a player, Reeve et al.<ref name=":7">[https://doi.org/10.1007/BF00991833 Motivation and performance: Two consequences of winning and losing in competition]</ref> argue that winning does not necessarily have to be a reward that discounts intrinsic motivation. Instead, winning can be seen as having an informational aspect which should be salient for the intrinsic motivation. They assume that winners receive information that they are competent, whereas losers receive information that they are not. They concluded that this feeling of competence results in the winner having a higher intrinsic motivation than the loser. Tauer and Harackiewicz<ref name=":8">[https://doi.org/10.1006/jesp.1999.1383 Winning Isn't Everything: Competition, Achievement Orientation, and Intrinsic Motivation]</ref> came to the same conclusion. Intrinsic motivation is important because someone with a high intrinsic motivation for something might engage with it for a longer time, and preexisting interests might increase.<ref name=":7" />

Other studies mainly focused on the 50% win rate and its results, probably because 50% is best suited for achievement-motivated subjects.<ref name=":6" /> But since our goal is not achievement motivated, but happiness motivated, we will use 70% as the target win rate. As stated before, task satisfaction is higher when the total number of wins is at least as great as the total number of losses.<ref name=":6" /> In other words, when the win ratio drops below this point, task satisfaction will go down as well. If we aimed for 50%, there would always be a chance that the actual win rate ends up just below 50%, lowering the task satisfaction. If, on the other hand, the win rate ends up a bit lower than a 70% target, it will still be above 50% and task satisfaction will therefore still be high. Another reason why we prefer 70% over 50% is that it can increase the feeling of competence and the intrinsic motivation.<ref name=":7" /><ref name=":8" /> We decided not to use a win rate higher than 70%, since users might then start winning too easily and, as a result, might leave their flow and get bored, as they would not need to put enough effort into the game.<ref name=":14" /><br />

==Gameplay strategy== | |||

===Task environment=== | |||

In order to find an effective search strategy for choosing the next move in the game Pesten, let us first define the task environment of this specific game according to the properties mentioned in the book “Artificial Intelligence: A Modern Approach”. | |||

*The game is a multi-agent environment since both the robot and the user will be maximising their own performance measure. Therefore, the robot must take into account how the actions of the user affect the outcome of its own decisions. On top of that, games are by definition competitive multi-agent environments, meaning that the goals of the agents are essentially in conflict with each other since both agents want to win. It can also be argued that the environment is cooperative, since both the robot and the user want to maximise the user’s fun.

*The game Pesten is non-deterministic since the initial state of cards is randomly determined by the dealer. However, the actions of the players are deterministic. | |||

*The game is discrete since the cards are essentially discrete states. | |||

*Also, the game is static since the game does not continue while one of the players is taking a decision. | |||

*Previous actions taken determine the next state of the game, thus it can be classified as sequential. | |||

*The last important distinction is that the game is partially observable since the players do not know the cards of the other player at any given moment. In other words, there is private information that cannot be seen by the other player.<ref>Russell, Stuart J. (2010). Artificial Intelligence: A Modern Approach. Upper Saddle River, N.J.: Prentice Hall.</ref>

===Monte Carlo Tree Search=== | |||

The process of Monte Carlo Tree Search (MCTS) can be described by four steps, namely selection, expansion, simulation and backpropagation. It is also given a certain computational budget after which it should return the best action estimated at that point. The steps are defined as follows: | |||

#Selection: From the starting node, a child node in the game tree is selected based upon some policy. This policy tells what action or move should be done in some state and could therefore be based on the game’s rules. The selection is continued until a leaf node is reached. | |||

#Expansion: The selection process ended at the leaf node, so this is the node that will be expanded. Now, one possible action is evaluated and the resulting state of that action will be added to the game tree as a child node of the expanded node. | |||

#Simulation: From this new node, a simulation is performed using a default policy that lets all players choose random actions. This provides an estimate of the evaluation function. | |||

#Backpropagation: The simulation results are used to update the parent nodes. In this step, the algorithm backpropagates from the newly added leaf node to the root node.

After finishing backpropagation, the algorithm loops around to step one and reiterates all steps until the computational budget has been reached. The benefit of MCTS is that it is a heuristic algorithm, which means that the algorithm can be applied even when there is no knowledge of a particular domain, apart from the rules and conditions.<ref name=":10">Bax, F. (n.d.). ''Determinization with Monte Carlo Tree Search for the card game Hearts''.</ref> | |||
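The four steps can be illustrated with a compact sketch on a toy game. The game below (a pile of stones from which each player removes one to three stones, where the player who takes the last stone wins) is not Pesten and is chosen only because its rules fit in a few lines. The UCB1 selection policy and all names are assumptions made for this illustration and do not come from the project's code.

```python
import math
import random


class Node:
    def __init__(self, stones, player, parent=None, move=None):
        self.stones = stones    # stones left in the pile
        self.player = player    # player to move in this state (0 or 1)
        self.parent = parent
        self.move = move        # move that led from the parent to this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0         # wins for the player who moved into this node


def ucb1(child, parent_visits, c=1.4):
    """Selection policy (assumed here): average reward plus exploration bonus."""
    return child.wins / child.visits + c * math.sqrt(math.log(parent_visits) / child.visits)


def rollout(stones, player):
    """Default policy: play random moves to the end and return the winner."""
    if stones == 0:
        return 1 - player       # the previous player took the last stone
    while True:
        stones -= random.randint(1, min(3, stones))
        if stones == 0:
            return player
        player = 1 - player


def mcts(stones, player, iterations=2000):
    """Return the most visited move for a non-empty pile."""
    root = Node(stones, player)
    for _ in range(iterations):   # computational budget
        node = root
        # 1. Selection: descend while the node is fully expanded.
        while not node.untried and node.children:
            node = max(node.children, key=lambda ch: ucb1(ch, node.visits))
        # 2. Expansion: add one new child for an untried move.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, 1 - node.player, node, move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new node.
        winner = rollout(node.stones, node.player)
        # 4. Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            if node.parent is not None and winner == node.parent.player:
                node.wins += 1
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move


print(mcts(stones=5, player=0))
```

In this toy game the strongest move leaves the opponent with a multiple of four stones, so the search is expected to favour taking one stone from a pile of five once the budget is large enough.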

====Perfect Information Monte Carlo==== | |||

Monte Carlo Tree Search has been widely applied to perfect information games such as chess and Go. Nevertheless, its success depends on the fact that all information on the state of these games is always known to both players, and therefore also the actions other players can take. From the task environment, it can be concluded that Pesten is a partially observable game, meaning that the actions that other players can take are unknown, so a game tree cannot be created directly due to imperfect information. To solve this issue, the algorithm can be extended with a technique called determinization. This technique samples different states from the set of all possible states, providing sample states with perfect information that can be solved by perfect information algorithms such as MCTS. Thus, by using determinization, MCTS can be applied to find good moves for the sampled perfect information states. MCTS combined with determinization is often referred to as Perfect Information Monte Carlo, or PIMC for short. PIMC has already shown much success in the card game Bridge.<ref name=":10" />

====Implementation====

MCTS can be applied as a move picking algorithm for the robot. In code, this algorithm is implemented using the Python mcts library, which provides a function that can run MCTS. It requires that the current game state, the possible actions, the resulting state of each action and the goal state are specified. Furthermore, since Pesten is partially observable, a determinization function has been added as well. This function samples states by randomly assigning cards to the opponent's hand from all cards that could still be there in that state, that is, the cards of a standard card stack that are not in the robot’s hand or on the discard stack.
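A determinization step of this kind could look roughly as follows. This is a sketch under stated assumptions: the card representation, the function name <code>determinize</code> and the use of a 52-card deck without jokers are all hypothetical and may differ from the actual implementation.

```python
import random
from itertools import product

SUITS = ["hearts", "diamonds", "clubs", "spades"]
RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]
# Assumption: a 52-card deck without jokers, each card a (rank, suit) tuple.
FULL_DECK = list(product(RANKS, SUITS))


def determinize(robot_hand, discard_stack, opponent_hand_size, rng=random):
    """Sample one perfect-information state consistent with what the robot sees.

    Cards in the robot's hand or on the discard stack are known; all other
    cards are shuffled and split between the opponent's hand and the draw stack.
    """
    seen = set(robot_hand) | set(discard_stack)
    unseen = [card for card in FULL_DECK if card not in seen]
    shuffled = rng.sample(unseen, len(unseen))  # shuffled copy of the unseen cards
    opponent_hand = shuffled[:opponent_hand_size]
    draw_stack = shuffled[opponent_hand_size:]
    return opponent_hand, draw_stack


robot_hand = [("7", "hearts"), ("K", "spades")]
discard_stack = [("2", "clubs")]
opponent_hand, draw_stack = determinize(robot_hand, discard_stack, opponent_hand_size=5)
print(opponent_hand)
```

Each call yields a different sampled state, so a search can be run on several samples and the results combined.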

The MCTS function from the Python library has an input argument that makes it possible to control the time limit, in either milliseconds or the number of iterations, for which the algorithm will run. After reaching the time limit, the algorithm will return the most promising action at that moment. By providing more iterations, the algorithm can evaluate more simulation results and therefore search a larger part of the game tree, often resulting in better performance. It is for this reason that, in their article, Y. Hao et al. proposed to implement dynamic difficulty adjustment based on varying the simulation time of MCTS.<ref name=":15">Y. Hao, Suoju He, Junping Wang, Xiao Liu, jiajian Yang and Wan Huang, "Dynamic Difficulty Adjustment of Game AI by MCTS for the game Pac-Man," 2010 Sixth International Conference on Natural Computation, Yantai, 2010, pp. 3918-3922, doi: 10.1109/ICNC.2010.5584761.</ref> They applied this method to Pac-Man and concluded that by adjusting the simulation time of MCTS, it was possible to create dynamic difficulty adjustment to optimise the player’s satisfaction. Thus, MCTS can be used for the robot to pick the moves and to adjust the difficulty level by varying the simulation times. | |||
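The idea of coupling difficulty to the search budget can be sketched as a simple mapping from the <code>difficulty</code> value to a number of iterations. The function name and the budget limits of 50 and 5000 iterations are hypothetical values chosen for illustration; they are not taken from Hao et al. or from the project's code.

```python
def iterations_for_difficulty(difficulty, min_iterations=50, max_iterations=5000):
    """Map a difficulty in the range 0.0 to 1.0 linearly onto a search budget."""
    difficulty = min(1.0, max(0.0, difficulty))  # guard against out-of-range input
    return round(min_iterations + difficulty * (max_iterations - min_iterations))


for level in (0.0, 0.5, 1.0):
    print(level, iterations_for_difficulty(level))
```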

====Drawbacks==== | |||

Monte Carlo Tree Search has several drawbacks. Firstly, MCTS requires much computational power from the hardware.<ref name=":15" /> After running the algorithm it was found that it could take a few seconds for the computer to select a move with reasonable performance, but this is not much slower than the time it would take the average human player to select a move. Secondly, S. Demediuk et al. argue that MCTS is not a suitable algorithm for dynamic difficulty adjustment since it is prone to producing unbalanced behaviours.<ref>S. Demediuk, M. Tamassia, W. L. Raffe, F. Zambetta, X. Li and F. Mueller, "Monte Carlo tree search based algorithms for dynamic difficulty adjustment," 2017 IEEE Conference on Computational Intelligence and Games (CIG), New York, NY, USA, 2017, pp. 53-59, doi: 10.1109/CIG.2017.8080415.</ref> Under tight computational constraints, the algorithm may still find a strong action. On the other hand, provided with generous computational constraints, the algorithm may not always find a strong action. This is due to the fact that only a small part of the game tree is explored rather than the entire tree, so the performance depends on the selection step. Lastly, MCTS lacks transparency since it cannot provide an explanation of why a certain move is picked. This does not give much flexibility in terms of dynamic difficulty adjustment, because it is not possible to check how a certain move compares to other options. For these reasons, MCTS is not suitable as a move picking algorithm for the robot player. Thus, an alternative method for picking moves is used, which will be discussed in the next section.<br />

===Optimal strategy=== | |||

The gameplay strategy software was developed in parallel with the object recognition software. The two were merged only later in the project to obtain our final product, but the gameplay strategy software had to be tested earlier on. Hence, a Python environment was created in which all the different playing cards are defined and the rules of a game of “Pesten” are implemented. This code was used to construct the difficulty model, and it makes it possible to play a game against the robot using a keyboard, simulating a physical game of “Pesten”. When the highest difficulty level (1.0) is selected by the player, the robot should use an optimal strategy. As covered in the previous section, MCTS was found not to be a suitable algorithm for our gameplay strategy software. Hence, in order to decide which playing card to play, or whether to draw a new playing card, the robot makes use of the <code>move_score()</code> function, which assigns a score to each possible action the robot can perform during its turn. The function combines many different factors, which together decide whether the score should be high or low. The function, for instance, penalizes playing a “pestkaart” too early in the game and rewards actions after which the robot gets the next turn again. The function also uses another function, <code>chance_player_has_valid_card()</code>, which uses probability to estimate how likely it is that the opponent who gets the next turn will be able to play a valid playing card after the robot performs an action. By playing games against this robot, the <code>move_score()</code> function was tested and adjusted repeatedly until it made the best choice in each situation we encountered.
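How such a probability could be computed is sketched below. This is not the project's <code>chance_player_has_valid_card()</code> function, whose internals are not described here; it is a hypothetical stand-in that assumes the opponent's hand is a uniformly random subset of the cards the robot cannot see.

```python
from math import comb


def estimate_valid_card_chance(unseen_total, unseen_valid, opponent_hand_size):
    """Probability that the opponent holds at least one playable card.

    unseen_total:       number of cards the robot cannot see
    unseen_valid:       how many of those would be playable on the top card
    opponent_hand_size: number of cards in the opponent's hand
    """
    if not 0 <= unseen_valid <= unseen_total:
        raise ValueError("unseen_valid must be between 0 and unseen_total")
    if not 0 <= opponent_hand_size <= unseen_total:
        raise ValueError("opponent_hand_size must be between 0 and unseen_total")
    # Probability that every card in the hand comes from the non-playable cards.
    none_valid = (comb(unseen_total - unseen_valid, opponent_hand_size)
                  / comb(unseen_total, opponent_hand_size))
    return 1.0 - none_valid


# Example: 40 unseen cards, 12 of them playable, opponent holds 3 cards.
print(round(estimate_valid_card_chance(40, 12, 3), 3))
```

A scoring function could use this value to prefer moves after which the opponent is unlikely to be able to respond.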

<br /> | |||

==User Interface== | |||

===Research=== | |||

One of the research questions of this paper focuses on the User Interface (UI). We are trying to create a UI that encourages our users, the elderly, to use our robot. Since the elderly can experience problems when using a computer system,<ref name=":0" /> we aim to create a UI that is as easy and intuitive to use as possible by using UI design principles that decrease possible frustrations. Another reason for using design principles is that they improve performance and increase user acceptance.<ref>[https://doi.org/10.1002/aorn.12006 Assessing Use Errors Related to the Interface Design of Electrosurgical Units]</ref>

There exist many UI principles, and they may differ depending on who the user of the UI is going to be. For example, a person with poor eyesight might prefer bigger letters to improve readability, whereas someone with normal eyesight might prefer to see more of a text at the same time to have a better overview. Therefore, we looked at multiple papers that either had elderly as their target group or were looking for rules that are good in general rather than for specific people, and focused on the similarities.

Darejeh and Singh<ref name=":11">[https://doi.org/10.3844/jcssp.2013.1443.1450 A review on user interface design principles to increase software usability for users with less computer literacy]</ref> looked at three cases: elderly, children, and users with mental or physical limitations. They narrowed the mental or physical disorders down to two specific cases, namely people with visual impairments and people with cognitive or learning problems. They were able to conclude that for all the investigated groups the following principles were useful. | |||

*Reducing the number of features that are present at a given time | |||

*Tools should be easy to find | |||

*The icons and components for key functions should be bigger | |||

*Minimize the use of computer terms | |||

*Let users choose their own font, size, and color scheme. | |||

*Using descriptive texts | |||

*Using logical graphical objects to represent an action, like icons (as well as using a descriptive text) | |||

But the elderly specifically also consider user guides, simple and short navigation paths, and similar functions for different tasks helpful and useful. For some elderly with bad eyesight, reading text aloud, hearing a sound when buttons are clicked, and using distinct colors when they are close to each other can also make the use of the system easier. | |||

Salman et al.<ref name=":12">[https://doi.org/10.1007/s10209-021-00856-6 A design framework of a smartphone user interface for elderly users]</ref> created a framework for smartphones with a UI suitable for the elderly. They were able to conclude that the prototype based on their framework worked better than the original Android UI. For their framework, they identified some of the problems the elderly experienced with other designs, as well as possible solutions to those problems, written as design guidelines. Some of those problems, with their design guidelines, are the following.

*Too much information on the screen | |||

**Put the most important information on the homepage | |||

**Avoid having unwanted information on the screen | |||

*The absence of tooltips or instructions | |||

**Have easy-to-find help buttons or tool tips | |||

*Using an inappropriate layout of the UI elements | |||

**UI elements in more visible colors and noticeable sizes | |||

**Make the difference between ‘tappable’ and ‘untappable’ elements clear and provide the elements with text labels | |||

**Have a good contrast between the elements and the background | |||

*Similar icons for different tasks | |||

**Keep the icons consistent | |||

*Unfamiliar UI design | |||

**Avoid using uncommon and drastically new designs | |||

*Vague and unclear language | |||

**Don’t use ambiguous words | |||

*Using abbreviations, jargon terms, and/or technical terms | |||

**Avoid them all. If technical terms are really necessary provide an explanation for the terms. | |||

*Similar terms for operations that do unrelated or different actions | |||

**Use unique terms | |||

*Functions that require users to do unfamiliar actions | |||

**Use easy actions such as ‘tap’ and ‘swipe’ | |||

**Avoid tasks that are more difficult to execute such as ‘tap and hold’ or ‘drag and drop’ | |||

*No confirmation message when deleting something | |||

**Provide a message when something has been deleted successfully or has failed to delete

*No clear observable feedback | |||

**Provide immediate feedback when the user did an action | |||

**Provide a mixed mode of feedback, e.g. when the user pushed on an element, such as a button, make a sound | |||

*Different responses for the same action | |||

**Consistent responses | |||

It is important to note, though, that their comparison between the prototype and the Android design involved only 12 participants.

Ruiz et al.<ref name=":13">[https://doi.org/10.1080/10447318.2020.1805876 Unifying Functional User Interface Design Principles]</ref> also tried to find the coherence between all the principles. They identified the three most important authors who have written about and tried to create UI principles, and took the best 36 principles. From those 36 principles, they then took the five most commonly mentioned.

*Offer informative feedback | |||

*Strive for consistency | |||

*Prevent errors. So if there is a commonly made mistake among the users, change the UI in such a way that the users won’t make the mistake anymore. | |||

*Minimize the amount a user has to remember | |||

*Simple and natural dialog | |||

One of those most important authors, Hansen, also stated that to create a good UI design it is important to know the user,<ref>[https://doi.org/10.1145/1479064.1479159 User engineering principles for interactive systems] </ref> for example their education, age, prior experience, capabilities and limitations.

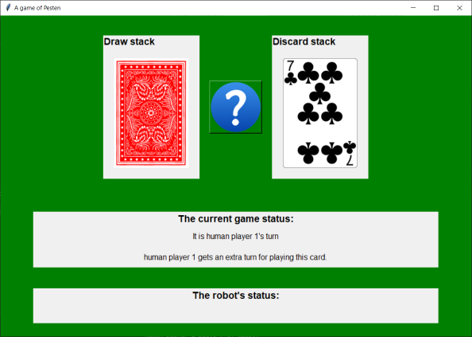

[[File:The graphical user interface.png|right]] | |||

Some of the common factors in the above-mentioned papers are consistency,<ref name=":12" /><ref name=":13" /> offering feedback,<ref name=":12" /><ref name=":13" /> tools that can offer help,<ref name=":11" /><ref name=":12" /> no technical terms,<ref name=":11" /><ref name=":12" /> reducing the number of features and elements,<ref name=":11" /><ref name=":12" /> and having a type of customization for the size, font, and color scheme.<ref name=":11" /><ref name=":12" /> According to multiple sources,<ref>[https://agefriendly.dc.gov/sites/default/files/dc/sites/agefriendly/publication/attachments/AgeFriendlyDC-Effective_Print-2.3.17-v2-PRINT.pdf Reaching adults age 50+ more effectively through print]</ref><ref>[https://health.gov/healthliteracyonline/display/section-3-3/ Display Content Clearly on the Page]</ref><ref>[https://doi.org/10.3389/fpsyg.2022.931646 How to design font size for older adults: A systematic literature review with a mobile device]</ref> a font size of 12 or larger in combination with a sans-serif font works best for the elderly. In our final design we used two types of tools that can offer help: the question mark in the UI and the paper user guide.

[[File:User guide.pdf|thumb]] | |||

===Implementation=== | |||

From our user interviews and research on the UI, the focus points of our design of the user interface became clear. In our design the robot has a digital screen on which the graphical user interface will be displayed. As already stated, it became clear that the UI should have a basic design and the information should appear consistently. Hence, our UI design consists of only a few parts. | |||

The user interface contains a digital representation of the game, showing both the draw stack and the discard stack, which are updated dynamically based on the image recognition software. Between these two stacks there is a question mark button that leads to the user guide and the game rules, which are available at all times in order to help the user. Additionally, two text widgets are present at the bottom of the screen. The top one informs the player about the current status of the game by displaying whose turn it is and what the effect of the last played card is, if it has any. The bottom one informs the player about the current status of the robot: it either states what the robot is doing with a phrase such as “Robot is drawing 2 cards, wait for the robot to finish.” or states what the robot expects the player to do by giving an instruction such as “Robot is waiting for you to draw another card”. Note, however, that this text widget has not yet been put to use in our implementation, as a physical robot (arm) is not currently part of the prototype and hence the user does not need to wait for the robot to finish performing an action.

<br /> | |||

1. | ==Flow Chart== | ||

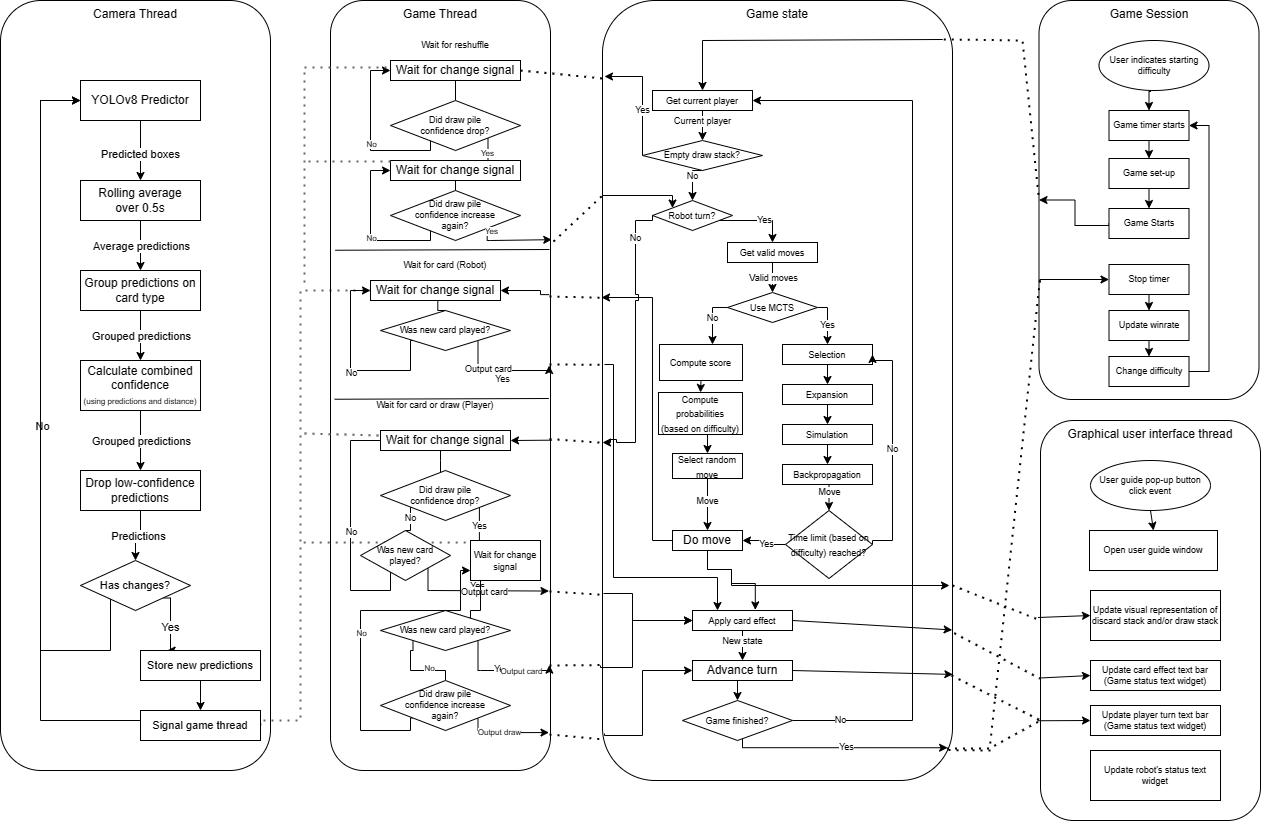

The flow chart provides a schematic overview of the code of our final product, which can be viewed from the github repository that is linked in the appendix. | |||

[[File:Camera diagram.drawio (1).png|thumb|1002x1002px|Flow chart of the code for a card-game playing robot|center]] | |||

==Test Plan==

To test whether the difficulty system worked as intended, five games were played per test, since it is assumed that people play approximately five games before they stop. By that time, the difficulty should already be close to the user’s skill level and the win rate close to 0.7.

The five games were executed in the shell without card recognition, as this made it easier to test the difficulty and win rate. The only difference between playing in the shell and playing with the camera is that the latter uses the card recognition system, in which case the results could be influenced by whether the cards and moves of the user were recognized correctly.

Since the game was executed in the terminal, the expected average game time was changed for this test from 5 to 15 minutes. A game in the terminal takes this much longer because it takes time to enter the correct cards, and because one person had to play both their own cards and the robot’s cards. As a result, the player had no thinking time during the robot’s turn, as they were busy playing the robot’s cards instead. The average time was therefore increased to ensure that the difficulty would not decrease merely because of the longer input and thinking time.

Lastly, three tests were executed with different initial difficulties: 0.5 in the first test, 0.1 in the second, and 0.9 in the third. The tests were executed by the developers.

<br />

6. | ===Test Results=== | ||

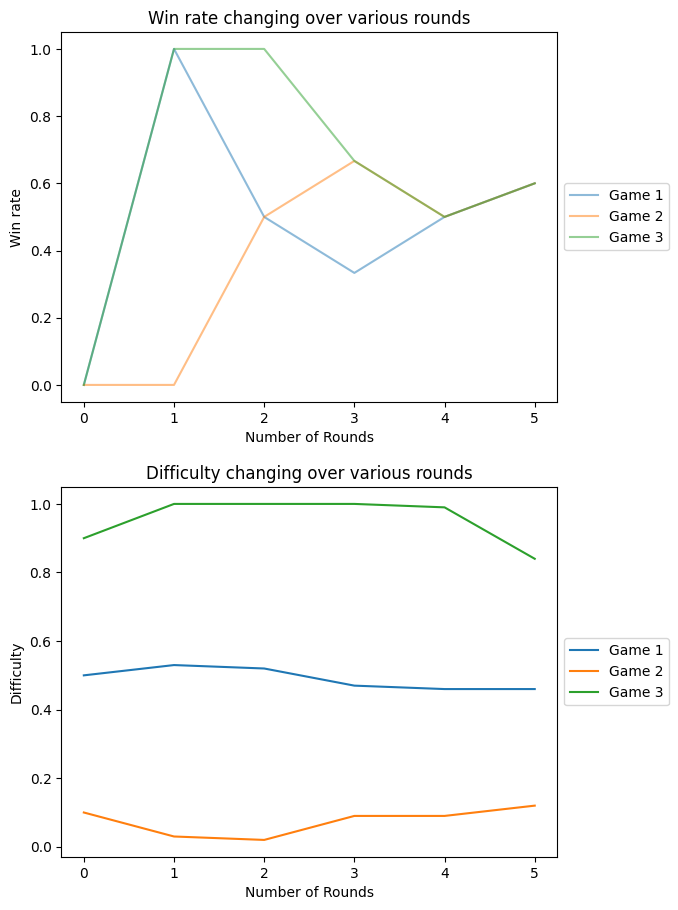

[[File:Win Rate and Difficulty.png|thumb|Graphs relating the number of rounds played to the win rate and difficulty of that round.]] | |||

It’s noticeable in the top graph that in all three tests, the win rate after five games is 0.6. The shapes of their graphs are all different. Although this might be a coincidence, the difficulty model seems to be working correctly. However, more tests will need to be performed to be able to conclude this with certainty. Despite that, it can be see that after one game it always goes to an extreme. Not long after that, the line starts to go more toward the middle of the diagram. This is good because very low or very high win rates are not desired. | |||
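The behaviour of the top graph follows directly from how a cumulative win rate is computed. The sketch below illustrates this; the function name <code>running_win_rate</code> and the sequence of outcomes are invented for illustration and are not the recorded test data.

```python
from typing import List, Sequence


def running_win_rate(outcomes: Sequence[bool]) -> List[float]:
    """Return the cumulative win rate after each game.

    `outcomes[i]` is True if the player won game i + 1.
    """
    rates: List[float] = []
    wins = 0
    for games_played, player_won in enumerate(outcomes, start=1):
        wins += int(player_won)
        rates.append(wins / games_played)
    return rates


# Hypothetical sequence of five games (win, loss, win, loss, win).
example_outcomes = [True, False, True, False, True]
print(running_win_rate(example_outcomes))
```

After the first game the rate is necessarily 0 or 1, and each later game moves it by a smaller amount, which is why the curves start at an extreme and then drift toward the middle.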

The bottom graph shows how the difficulty changes over the rounds. Game 1 (the first test, which starts with a difficulty of 0.5) shows a roughly horizontal line: the difficulty fluctuates a bit, but in general it is consistent. For game 2 and game 3, on the other hand, the difficulty moves toward the middle at the end. A possible reason is that the skill level of the person playing was closer to the middle. To confirm this, more rounds must be played.

Looking at game 3, from round 1 to round 3, it can be seen that the difficulty and win rate correlate as expected: when the user won the game and the win rate went from 0/0 to 1/1, the difficulty also increased. For game 2, from round 1 to round 3, the difficulty decreased because the user lost. Something else noticeable happens in game 3 from round 4 to round 5: despite the user winning, the difficulty decreased. This is because this game took 24 minutes instead of the expected average time of 15 minutes. The algorithm therefore decided that, despite the win, the user must have been struggling because the game took so long, and it decreased the difficulty.
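The interplay between win rate and game duration can be sketched as follows. This is an illustrative assumption, not the formula from the repository: the text only states that the win rate, the game duration, and an inverse tangent function are used, so the way the two signals are combined here, the function name <code>update_difficulty</code>, and the parameters <code>max_step</code> and <code>time_weight</code> are hypothetical.

```python
import math

TARGET_WIN_RATE = 0.7         # desired win rate mentioned in the text
EXPECTED_GAME_MINUTES = 15.0  # expected game time used for the terminal tests


def update_difficulty(difficulty: float, wins: int, games_played: int,
                      last_game_minutes: float,
                      expected_minutes: float = EXPECTED_GAME_MINUTES,
                      max_step: float = 0.2, time_weight: float = 1.0) -> float:
    """Return a new difficulty in [0, 1] (hypothetical update rule)."""
    if games_played <= 0:
        raise ValueError("games_played must be positive")
    win_rate = wins / games_played
    # Positive when the player wins more often than desired: make the game harder.
    win_signal = win_rate - TARGET_WIN_RATE
    # Positive when the last game took longer than expected: the player may be struggling.
    time_signal = (last_game_minutes - expected_minutes) / expected_minutes
    signal = win_signal - time_weight * time_signal
    # atan maps any signal into (-pi/2, pi/2), so each step is bounded by max_step.
    step = max_step * (2.0 / math.pi) * math.atan(signal)
    return min(1.0, max(0.0, difficulty + step))


print(update_difficulty(0.5, wins=1, games_played=1, last_game_minutes=15.0))  # harder
print(update_difficulty(0.5, wins=4, games_played=5, last_game_minutes=24.0))  # easier
```

Under these assumptions, a long game can outweigh a high win rate, which is the qualitative effect described for game 3 between round 4 and round 5.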

To be able to get more insights, more game rounds would have to be played to be certain that the win rate actually goes more towards 0.7 and that the difficulty actually converges to a person’s skill level. As well as that it would be good if multiple people tested it, since these tests were conducted by only a single person. | |||

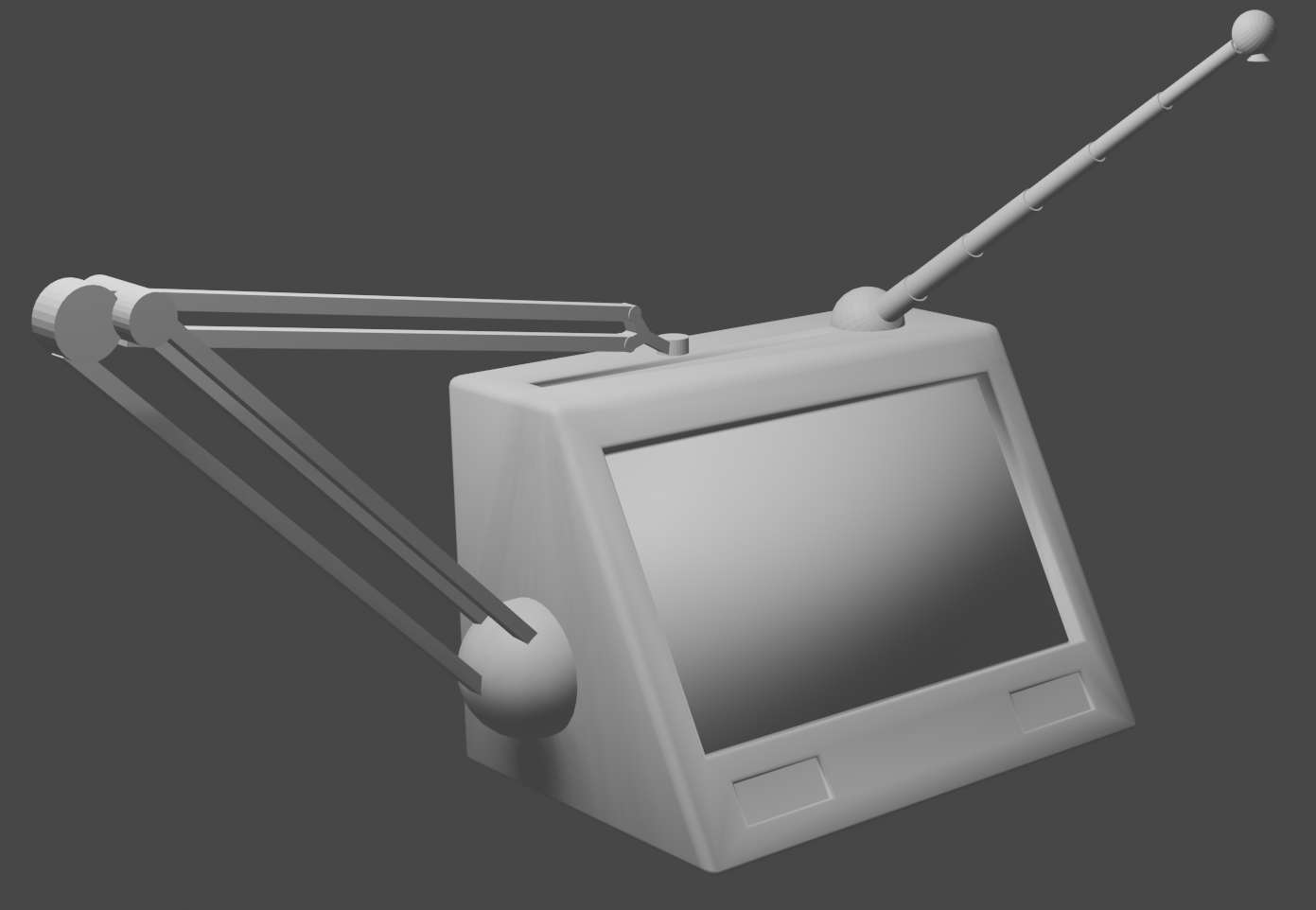

==Physical design==

Although a physical implementation of the system was not directly part of the planning, as is evident from the position of 3D design in the could-have section, a sample design was created using Blender. This design had to meet some technical requirements, namely housing the electronics and allowing for card movement, as well as some design-oriented requirements, such as an appealing rounded look.

The main components required are:

*Body of the robot: This houses the main electronics needed for the software as well as the display and speakers needed for user interaction.
*Robotic arm: To allow the robot to move cards and interact with its environment.
*Camera + arm: To create the top-down view needed for the object recognition software.

[[File:Cardrobot place.png|thumb|A design of the cardplaying robot]]

As can be seen in the image, the robot has a simple shape and contains few buttons or other objects, so as not to confuse its users. The two arms that can be seen are, as previously mentioned, required to move the cards and to get a top-down view of the playing field. The first arm, located on top of the robot, can be moved from the base and extended in a similar way to an old radio antenna. This allows the camera to be set up easily while also allowing it to fold up compactly when not in use. The second arm, located at the side of the robot, is used to move the cards and interact with the environment. This arm consists of two main segments that are moved with a ball-and-socket joint and a hinge joint. This allows the robot to reach a large portion of the table as well as its own 'head': the top of the robot holds a small tray that stores the robot's 'on-hand' cards.

As many existing robotic arm designs can already pick up cards and move them around using mechanisms such as grippers or suction cups, not much time was put into the actual gripper arm design. This part would either incorporate an existing arm or be left for future work.

While the top and the side of the robot are connected to the two arms, the front of the robot is reserved for its interface. Here a display is placed along with two speakers, which supports the design explained in the [[Pre2022 3 group12#User%20Interface|User Interface]] section. As already mentioned, the design uses the display as its user interface and does not incorporate any other buttons, so as not to confuse its users.

<br />

==Ideas for future work / Extension==

While it was possible to design and create many aspects of this product, not everything could be achieved within the time span of this project. In addition, the scope of the project was narrowed to fit a more specific goal, which leaves several opportunities to extend it. These extensions could, for example, include:

*more research and improvements on specific parts of the design.
*a further specified and implementable physical design.
*multiple card games that are playable by the robot.
*multiple card sets that can be recognized by the robot (Uno cards, resource cards for other games).
*more research on the measurement of user enjoyment.
*research and solutions on possible pitfalls and errors that could be encountered.
*extended and more robust software.

<br />

==Conclusion==

All in all, this research has investigated the development of a card-game playing robot for the purpose of entertainment. The aim is to mitigate loneliness among elderly people, caused by a decreased support ratio due to ageing. More specifically, the research focused on two main research questions. Firstly, how can the user interface of a card-game playing robot be designed to make the robot easy to use for the elderly? And secondly, how can a dynamic difficulty adjustment system be implemented into the robot, such that the difficulty matches the user's skill level? To answer these research questions, a user study was conducted first, followed by the development of a software program for the card-game playing robot.

The user study showed that the robot should be suited for elderly people who have problems with hearing, sight and motor skills, that it should play games the elderly are familiar with, that it should support multiple difficulty levels, that the interaction should be human-like, and lastly that it should be able to deal with unexpected behavior. The user study also showed that the elderly were highly familiar with the card game 'Pesten', and hence it was decided that 'Pesten' would be the card game the robot is capable of playing at the end of our project.

The software consists of several components, namely card recognition, dynamic difficulty adjustment, gameplay strategy and user interface. The card recognition was implemented with the YOLOv8 model and achieved a mAP@50 of 97.1%. User fun can be measured by data hooking, which relies on measuring game statistics. The win rate and duration of each game were therefore measured to adjust the difficulty level of the robot. It was concluded that a win rate of 70% maximises user fun and that the average game time is five minutes. These values were used to implement a function, based on the inverse tangent, that maps the statistics to a difficulty level. This dynamic difficulty adjustment was combined with a gameplay strategy for the robot. This strategy was implemented by a score function that scores valid actions based on the expected utility of the state resulting from each action. The algorithm was tested by playing five game rounds consecutively. At first, the win rate and difficulty correlated correctly. However, during the last rounds the opposite was observed due to the relatively long game duration. To find out whether the win rate converges to the optimal win rate of 0.7, more tests should be conducted.

Lastly, the user interface will be realized by a digital screen mounted on the robot. To ensure that the graphical UI was user-friendly, we tried to use as few elements as possible and used a sans-serif font with a font size of 12. The graphical UI only includes a graphical representation of the draw stack and the discard stack, a question mark button leading to the user guide and game rules, one text widget which informs the player about the game status and one text widget which informs the player about the robot's status.
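The score-based move selection summarised above can be illustrated with a small sketch. It is not the implementation from the repository: the function name <code>choose_action</code>, the utility values, and in particular the rule that the difficulty selects a position in the ranked list of actions are assumptions made for illustration.

```python
from typing import Callable, Sequence, TypeVar

Action = TypeVar("Action")


def choose_action(valid_actions: Sequence[Action],
                  expected_utility: Callable[[Action], float],
                  difficulty: float) -> Action:
    """Pick an action whose rank matches the difficulty (hypothetical rule).

    Difficulty 1.0 picks the highest-scoring action, 0.0 the lowest-scoring one.
    """
    if not valid_actions:
        raise ValueError("there must be at least one valid action")
    clamped = min(1.0, max(0.0, difficulty))
    # Sort from worst to best according to the score function.
    ranked = sorted(valid_actions, key=expected_utility)
    index = round(clamped * (len(ranked) - 1))
    return ranked[index]


# Hypothetical utilities for three valid actions in one turn.
utilities = {"draw a card": -1.0, "play 7 of hearts": 1.0, "play joker": 3.0}
actions = list(utilities)

print(choose_action(actions, utilities.get, difficulty=1.0))
print(choose_action(actions, utilities.get, difficulty=0.0))
```

A deterministic rule like this is easy to test; a real strategy might instead add randomness so that the robot's play is less predictable.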

In conclusion, a dynamic difficulty system can be implemented by combining data hooking, to measure user fun, with a score function to select the moves. However, the current method should be tested more extensively to find out whether it converges to the desired win rate. Furthermore, it was found that, in order for the robot to be easy to use for the elderly, its user interface should be consistent, offer feedback and help, reduce the number of features and elements, exclude technical terms and allow the size, font and colors to be customized. Nevertheless, this research mainly focused on the software for a card-game playing robot. To further investigate this idea, future research should focus on building the physical design for the robot as well. On top of that, more research should be conducted on the dynamic difficulty adjustment system by performing more tests.

==Appendix==

===Code Repository===

[https://github.com/tom-90/0LAUK0-card-robot GitHub repository]

===Task Division & Logbook===

The logbook with the tasks and time spent per member per week can be found on the [[PRE2022 3 Group12/Logbook|Logbook page]].

The tasks were divided weekly among the group members during the team meetings. The task division for each week is also shown on the [[PRE2022 3 Group12/Logbook|Logbook page]].

===Literature Study===

The articles read for the literature study, together with their summaries, can be found on the [[PRE2022 3 Group12/Literature|Literature page]].

===Links===

*[[PRE2022 3 Group12/User interview|User interview]]
*[[PRE2022 3 Group12/Literature|Literature study articles]]
*[[Logbook Group12|Logbook of group12]]
*[[Deliverables and milestones of group 12|Deliverables and milestones]]

<br />

*[https://github.com/tom-90/0LAUK0-card-robot GitHub repository]

=== User guide ===

[https://cstwiki.wtb.tue.nl/images/User_guide.pdf User_guide.pdf]

<br />

==Members==

{|
!
!
!
|-
|Abel Brasse
| - 1509128
| - a.m.brasse@student.tue.nl
|-
|Linda Geraets
| - 1565834
| - l.j.m.geraets@student.tue.nl
|-
|Sander van der Leek
| - 1564226
| - s.j.m.v.d.leek@student.tue.nl
|-
|Tom van Liempd
| - 1544098
| - t.g.c.v.liempd@student.tue.nl
|-
|Luke van Dongen
| - 1535242
| - l.h.m.v.dongen@student.tue.nl
|-
|Tom van Eemeren
| - 1755595
| - t.v.eemeren@student.tue.nl
|}

<br />

==References==