PRE2019 3 Group15

Group Members

| Name | Study | Student ID |
|---|---|---|
| Mats Erdkamp | Industrial Design | 1342665 |
| Sjoerd Leemrijse | Psychology & Technology | 1009082 |
| Daan Versteeg | Electrical Engineering | 1325213 |
| Yvonne Vullers | Electrical Engineering | 1304577 |
| Teun Wittenbols | Industrial Design | 1300148 |

Problem Statement and Objectives

DJ-ing is a relatively new profession. It has been around for less than a century but has become increasingly widespread in the last few decades. This activity has for the most part been carried out by human beings. Meanwhile, technology in the music industry has become better and better at generating playlists or 'recommended songs', as Spotify does, for example. Can we integrate a form of this technology into the world of DJs and create a 'robot DJ'? A robot DJ would autonomously create playlists and mix songs, based on algorithms and real-life feedback, in order to entertain an audience.

How can we develop an autonomous system/robot DJ that the user can easily use as a substitute for a human DJ?

Users

The identification of the primary and secondary users and their needs is based on extensive literature research on the interaction between the DJ and the audience in a party setting. The reader is referred to the references section for the full articles. This section is written based on (Gates, Subramanian & Gutwin, 2006), (Gates & Subramanian, 2006) and (Berkers & Michael, 2017).

Primary users

- Dance industry: this is the overarching organization that will possess most of the robots.

- Organizer of a music event: this is the user that will rent or buy the robot to play at their event.

- Owner of a discotheque or club: the robot can be an artificial alternative for hiring a DJ every night.

Primary user needs

- The DJ-robot is more valued than a human performer.

- The system's user interface is easy to understand, no experts needed.

- The DJ-robot system provides something extraordinary and special.

- The system is better than a human DJ in gathering information regarding audience appreciation.

Secondary users

- Attenders of a music event: these people enjoy the music and lighting show that the robot makes.

- Human DJs: likely to "cooperate" with a DJ-robot to make their show more attractive.

Secondary user needs

- The system selects popular tracks that are valued by the audience.

- The system selects appropriate tracks regarding genre.

- The music set played is structured and progressive.

- The DJ-robot takes the audience reaction into account in track selection.

- The dancers are taken on a cohesive and dynamic music journey.

- Keeping the audience engaged.

- The musical presentation should reflect the audience energy level.

- Track selection should fit the audience background.

- There is a balance between playing familiar tracks and providing rare, new music.

- Similar tracks to what the audience is into are played.

- The audience members want to express themselves.

- The audience wants to hear their favourite music.

- The audience doesn't want a predictable set of music.

- The audience desires control over the music being played, to a certain extent.

- A desire to create a collective experience.

Approach, Milestones, and Deliverables

Approach

The goal of the project is to create a robot that functions as a DJ and provides entertainment to a crowd. In order to reach the goal, first a literature study will be executed to find out the current state of the art regarding the problem. After enough information has been collected, an objective will be defined.

Then, the USE and technical aspects of the problem will be researched. The results of the technical research will be incorporated into a design for the robot. Based on this design, a prototype will be built and programmed that meets the requirements of the goal.

Milestones

In order to complete the project and meet the objective, milestones have been determined. These milestones include:

- A clear problem and goal have been determined

- The literature research is finished; this includes research about

  - Users (attenders of music events, DJs, club owners)

  - The state of the art in the music (AI) industry

- The research on how to create the DJ-software is finished

  - Ways of feedback from a crowd

  - Spotify API

- The DJ-software is created

- Depending on what method of feedback is chosen, a sensor is also built

- A test is executed in which the environment the software will be used in is simulated

- The wiki is finished and contains all information about the project

Other milestones, which are probably not attainable within the scope of 8 weeks, are:

- A test in a larger environment is executed (bar, festival)

- A full scale robot is constructed to improve the crowd’s experience

- A light show is added in order to improve the crowd’s experience

Deliverables

The deliverables for this project are:

- The DJ-software, which is able to use feed-forward and incorporate user feedback in order to create the most entertaining DJ-set

- Depending on what type of user feedback is chosen, a prototype/sensor also needs to be delivered

- The wiki-page containing all information on the project

- The final presentation in week 8

Who's Doing What?

Personal Goals

The following section describes the main roles of the teammates within the design process. Each team member has chosen an objective that fits their personal development goals.

| Name | Personal Goal |
|---|---|
| Mats Erdkamp | Play a role in the development of the artificial intelligence systems. |
| Sjoerd Leemrijse | Gain knowledge in recommender systems and pattern recognition algorithms in music. |
| Daan Versteeg | |
| Yvonne Vullers | Play a role in creating the prototype/artificial intelligence. |
| Teun Wittenbols | Combine all separate parts into one good concept, with a focus on user interaction. |

Weekly Log

Based on the approach and the milestones, a schedule has been made. This schedule is not final and will be updated regularly, but it will serve as a guideline for the coming weeks.

Week 2

Goal: Do literature research, define problem, make a plan, define users, start research into design and prototype

| Date | Group | Mats Erdkamp | Sjoerd Leemrijse | Daan Versteeg | Yvonne Vullers | Teun Wittenbols |
|---|---|---|---|---|---|---|
| Monday 10-02 | We formed a group and discussed the first possibilities within the project, chose a general theme and started doing research. We attended the tutor session. | Work on SotA and evaluate design options | Attended meeting with tutors: 30 minutes. Elaborated the notes of the meeting: 30 minutes. Reading and summarizing scientific literature on the interaction between DJ and crowd: 3 hours | Work on relevant literature research. | Attended tutor meeting | Started doing literature research and summarized Pasick (2015) & Johnson |
| Tuesday 11-02 | Searching scientific literature on user requirements of the public and the DJ at a party (Gates, Subramanian & Gutwin, 2006), (Gates & Subramanian, 2006): 1 hour | | | | | |
| Wednesday 12-02 | Summarizing scientific literature on user requirements: 3 hours | Started looking for papers about user interaction and user feedback | | | | |
| Thursday 13-02 | We had a meeting in which we discussed the feedback from the tutor session, discussed the research and formed a more detailed and specific plan for the project. | Meeting with group members, discussing who will be doing what the coming week: 1.5 hours | Meeting with group members, discussing who will be doing what the coming week. Emailed Effenaar. | Did some more research on audience interaction and summarized it (Hödl, Fitzpatrick, Kayali & Holland, 2017; Zhang, Wu & Barthet, in press). Also updated the wiki, made the planning clearer and divided the references of the SotA. | | |
| Friday 14-02 | Developed access to Spotify API: 4 hours | Researched different forms of audience interaction and added it to the SotA (section "Receiving feedback from the audience via technology") | | | | |
| Saturday 15-02 | Created data set from Spotify API: 8 hours | Writing the "Users" section based on my prior literature research: 2 hours. Updating the section on state of the art based on my prior literature research: 2 hours | | | | |
| Sunday 16-02 | Updating the lay-out of the wiki: 1 hour | Updating the milestones and deliverables. Continued looking for papers on incorporating user feedback and user feedback for music events. Summarized papers (Barkhuus & Jørgensen, 2008) & (Atherton, Becker, McLean, Merkin & Rhoades, 2008) | | | | |

Week 3

Goal: Continue research, start on design

| Date | Group | Mats Erdkamp | Sjoerd Leemrijse | Daan Versteeg | Yvonne Vullers | Teun Wittenbols |
|---|---|---|---|---|---|---|
| Monday 17-02 | Group meeting and general discussion: 45 minutes | Attended tutor meeting: 30 minutes. Discussion with group members: 15 minutes. | | | | |
| Tuesday 18-02 | | | | | | |
| Wednesday 19-02 | Worked on a first concept model of our designed system (section "A first model"): 4 hours | Elaborated the notes of the meeting: 30 minutes | | | | |
| Thursday 20-02 | Group meeting, discussing plans and everyone's contributions: 1 hour | Attended group meeting: 1 hour. Worked on how the user needs lead to a first model: 2 hours | Attended group meeting: 1 hour | | | |
| Friday 21-02 | | | | | | |
| Saturday 22-02 | | | | | | |
| Sunday 23-02 | | | | | | |

| Date | Group | Mats Erdkamp | Sjoerd Leemrijse | Daan Versteeg | Yvonne Vullers | Teun Wittenbols |
|---|---|---|---|---|---|---|
| Monday 24-02 | | | | | | |
| Tuesday 25-02 | | | | | | |
| Wednesday 26-02 | | | | | | |
| Thursday 27-02 | | | | | | |
| Friday 28-02 | Worked on getting to know JavaScript, Node.js, and Visual Studio: 6 hours. | | | | | |
| Saturday 29-02 | | | | | | |
| Sunday 1-03 | Continued getting to know JavaScript and Node.js. Also started getting to know Express.js: 4 hours. | | | | | |

Week 4

Goal: Finish first design, start working on software.

Week 5

Goal: Work on software

Week 6

Goal: Finish software, do testing

Week 7

Goal: Finish up the last bits of the software

Week 8

Goal: Finish wiki, presentation

State of the Art

The dance industry is a booming business that is very receptive to technological innovation. A lot of research has already been conducted on the interaction between DJs and the audience, and on automating certain cognitively demanding tasks of the DJ. Therefore, it is necessary to give a clear description of the technologies currently available in this domain. In this section, the state of the art on the topics of interest for designing a DJ-robot is described by means of recent literature.

Defining and influencing characteristics of the music

When the system receives feedback from the audience, it must also be able to do something useful with that feedback and convert it into changes in the provided musical arrangement. The most important aspects of this arrangement are chaos, energy and tempo; more features may be added during the project.

Chaos is the opposite of order. Dance tracks with order can be assumed to have a repetitive rhythmic structure, to contain only a few low-pitched vocals and to display a pattern in arpeggiated lines and melodies. Chaos can be created by undermining these rules. Examples are playing random notes, changing the rhythmic elements at random time-points, or increasing the number of voices present and altering their pitch. Such procedures create tension in the audience. This is necessary because without differential tension, there is no sense of progression (Feldmeier, 2003).

The factors energy and tempo are inherently linked to each other. When the tempo increases, so does the perceived energy level of the track. In general, the music's energy level can be intensified by introducing high-pitched, complex vocals and a strong occurrence of the beat. Related to that is the activity of the public. An increase in activity of the audience can signal that they are enjoying the current music, or that they desire to move on to the next energy level (Feldmeier, 2003). In general, people enjoy the procedure of tempo activation, in which they dance to music that leads their current pace (Feldmeier & Paradiso, 2004).

Algorithms for track selection

One of the most important tasks of a DJ-robot - if not the most important - is track selection. In (Cliff, 2006) a system is described that takes as input a set of selected tracks and a qualitative tempo trajectory (QTT). A QTT is a graph of the desired tempo of the music during the set with time on the x-axis and tempo on the y-axis. Based on these two inputs the presented algorithm basically finds the sequence of tracks that fit the QTT best and makes sure that the tracks construct a cohesive set of music. The following order is taken in this process: track mapping, trajectory specification, sequencing, overlapping, time-stretching and beat-mapping, crossfading.
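The idea of fitting tracks to a QTT can be illustrated with a minimal sketch. It is not the algorithm from (Cliff, 2006): it assumes the QTT is given as a few (time, tempo) breakpoints with distinct times, interpolates linearly between them, and greedily picks the unused track whose tempo is closest to the desired tempo in each slot. The names `Track`, `qtt_tempo` and `sequence_tracks`, and the fixed slot length, are hypothetical choices made for this example only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    bpm: float


def qtt_tempo(qtt, t):
    """Linearly interpolate the desired tempo (BPM) at time t.

    qtt is a list of (time, bpm) breakpoints with distinct times (assumed format).
    """
    points = sorted(qtt)
    if t <= points[0][0]:
        return points[0][1]
    for (t0, b0), (t1, b1) in zip(points, points[1:]):
        if t0 <= t <= t1:
            return b0 + (b1 - b0) * (t - t0) / (t1 - t0)
    return points[-1][1]  # after the last breakpoint, hold the final tempo


def sequence_tracks(tracks, qtt, slot_minutes, n_slots):
    """Greedy sketch: for each time slot, take the unused track closest to the QTT."""
    remaining = list(tracks)
    playlist = []
    for slot in range(n_slots):
        if not remaining:
            break
        target = qtt_tempo(qtt, slot * slot_minutes)
        best = min(remaining, key=lambda track: abs(track.bpm - target))
        playlist.append(best)
        remaining.remove(best)
    return playlist


if __name__ == "__main__":
    library = [Track("a", 120), Track("b", 128), Track("c", 124), Track("d", 132)]
    rising = [(0, 120), (15, 132)]  # tempo rises from 120 to 132 BPM in 15 minutes
    for track in sequence_tracks(library, rising, slot_minutes=5, n_slots=4):
        print(track.title, track.bpm)
```

A greedy choice is the simplest option; it ignores cohesion between neighbouring tracks, which the system described in the article does take into account.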

In the same article, genetic algorithms are used to determine the fitness of each song for a certain situation. The article sketches how to encode a multi-track specification of a song as the genes of the individuals in a genetic algorithm (Cliff, 2006).

Receiving feedback from the audience via technology

Audiences attending musical events generally like the idea of being able to influence the performance (Hödl et al., 2017). The important question is how the audience should interact with the performers: what works well, and what do users prefer?

Hödl et al. (2017) describe multiple ways of interacting, namely mobile devices, as in the article of McAllister et al. (2004), smartphones, and other sensory mechanisms, such as the light sticks discussed in the paper of Freeman (2005).

The system presented by Cliff (2006) already proposes some technologies that enable the crowd to give feedback to a DJ-robot. The article discusses under-floor pressure sensors that can sense how the audience is distributed over the party area. It also discusses video surveillance that can read the crowd's activity and valence towards the music. Based on this information, the system determines whether the assemblage of the music should be changed or not. In principle, the system tries to stick with the provided QTT; however, it reacts dynamically to the crowd's feedback and may deviate from the QTT if that is what the public wants.

Another option for crowd feedback discussed by Cliff is a portable technology, more in the spirit of a wristband. One option is a wristband that is more quantitative in nature and transmits location, dancing movements, body temperature, perspiration rates and heart rate to the system. Another option is a much simpler and therefore cheaper solution. This second solution is a simple wristband with two buttons on it; one "positive" button and one "negative" button. In that sense, the public lets the system know whether they like the current music or not, and the system can react upon it.
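As a rough illustration of the two-button wristband, the sketch below aggregates button presses into a single approval score between -1 and 1. The event format (pairs of a wristband ID and a "+" or "-" button) and the rule that only the latest press per wristband counts are assumptions made for this example; the source does not specify them.

```python
from collections import Counter


def approval_score(presses):
    """Aggregate two-button wristband presses into a score in [-1, 1].

    presses: iterable of (wristband_id, button) with button "+" or "-"
    (hypothetical event format). Only the most recent press of each
    wristband is counted, so nobody can vote more than once.
    """
    latest = {}
    for wristband_id, button in presses:
        if button not in ("+", "-"):
            raise ValueError(f"unknown button: {button!r}")
        latest[wristband_id] = button
    if not latest:
        return 0.0  # no votes yet: treat as neutral
    counts = Counter(latest.values())
    return (counts["+"] - counts["-"]) / len(latest)


if __name__ == "__main__":
    events = [(1, "+"), (2, "-"), (3, "+"), (4, "+")]
    print(approval_score(events))
```

A score near 1 would suggest keeping the current direction of the set, while a score near -1 would suggest changing it; where exactly to put such thresholds is left open here.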

Summary of Related Research

This patent describes a system, something like a personal computer or an MP3 player, which incorporates user feedback in its music selection. The player has access to a database and chooses music to play based on user preferences. While a song is playing, the user can rate it. This rating is taken into account when choosing the next song. (Atherton, Becker, McLean, Merkin & Rhoades, 2008)

This article describes how rap-battles incorporate user feedback. By using a cheering meter, the magnitude of enjoyment of the audience can be determined. This cheering meter was made by using Java's Sound API. (Barkhuus & Jorgensen, 2008)
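The article's cheering meter was built with Java's Sound API and its exact computation is not described here. As a language-neutral illustration of the underlying idea, the Python sketch below measures loudness as the RMS level of a block of audio samples, expressed in dB relative to full scale. It assumes the samples are already available as floats between -1 and 1; capturing microphone audio is out of scope, and the function names are hypothetical.

```python
import math


def rms_dbfs(samples):
    """Return the RMS level of the samples in dB relative to full scale.

    samples: non-empty sequence of floats in [-1, 1] (assumed format).
    Digital silence yields -inf.
    """
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 10 * math.log10(mean_square)


def loudest_round(rounds):
    """Return the name of the round with the loudest cheering.

    rounds: dict mapping a round name to its recorded samples.
    """
    return max(rounds, key=lambda name: rms_dbfs(rounds[name]))


if __name__ == "__main__":
    recordings = {"performer A": [0.1, -0.2, 0.15], "performer B": [0.6, -0.7, 0.65]}
    print(loudest_round(recordings))
```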

This paper describes a system that transcribes the drums in a song. This could be used as input for the DJ-robot (light controls, for example). (Choi & Cho, 2019)

This paper is meant for beginners in the field of deep learning for MIR (Music Information Retrieval). This is a very useful technique in our project to let the robot gain musical knowledge and insight in order to play an enjoyable set of music. (Choi, Fazekas, Cho & Sandler, 2017)

This article describes different ways to automatically detect patterns in music from which the genre of the music can be determined. Knowing the genre of the music that is being played makes it easier to judge whether a track will fit the previously played music. (De León & Inesta, 2007)

This describes "Glimmer", an audience interaction tool consisting of light sticks which influence live performers. (Freeman, 2005)

Describes the creation of a data set to be used by artificial intelligence systems in an effort to learn instrument recognition. (Humphrey, Durand & McFee, 2018)

This describes methods to learn features of music by using deep belief networks. It extracts low-level acoustic features for music information retrieval (MIR), which can then be used to find out, for example, the genre of the musical piece. The goal of the article is to find a system that can do this automatically. (Hamel & Eck, 2010)

This article communicates the results of a survey among musicians and attenders of musical concerts. The questions were about audience interaction. "... most spectators tend to agree more on influencing elements of sound (e.g. volume) or dramaturgy (e.g. song selection) in a live concert. Most musicians tend to agree on letting the audience participate in (e.g. lights) or dramaturgy as well, but strongly disagree on an influence of sound." (Hödl, Fitzpatrick, Kayali & Holland, 2017)

This article explains the workings of the musical robot Shimon. Shimon is a robot that plays the marimba and chooses what to play based on an analysis of musical input (beat, pitch, etc.). The creating of pieces is not necessarily relevant for our problem, however choosing the next piece of music is of importance. Also, Shimon has a social-interactive component, by which it can play together with humans. (Hoffman & Weinberg, 2010)

This article introduces Humdrum, which is software with a variety of applications in music. One can also look at humdrum.org. Humdrum is a set of command-line tools that facilitates musical analysis. It is often used in, for example, Python or C++ scripts to build programs with applications in music. Therefore, this program might be of interest to our project. (Huron, 2002)

This article focuses on next-track recommendation. While most systems base this recommendation only on the previously listened songs, this paper takes a multi-dimensional (for example long-term user preferences) approach in order to make a better recommendation for the next track to be played. (Jannach, Kamehkhosh & Lerche, 2017)

In this interview with a developer of the robot DJ system POTPAL, some interesting possibilities for a robot system are mentioned. For example, the use of existing top 40 lists, 'beat matching' and 'key matching' techniques, monitoring of the crowd to improve the music choice and to influence people's beverage consumption and more. Also, a humanoid robot is mentioned which would simulate a human DJ. (Johnson, n.a.)

In this paper a music scene analysis system is developed that can recognize rhythm, chords and source-separated musical notes from incoming music using a Bayesian probability network. Even though 1995 is not particularly state-of-the-art, these kinds of technology could be used in our robot to work with music. (Kashino, Nakadai, Kinoshita, & Tanaka, 1995)

This paper discusses audience interaction by use of hand-held technological devices. (McAllister, Alcorn & Strain, 2004)

This article discusses the method by which Spotify generates popular personalized playlists. The method consists of comparing your playlists with other people's playlists as well as creating a 'personal taste profile'. Our robot DJ could use these techniques by, for example, creating a playlist based on what kind of music people collectively listen to the most. It would be interesting to see whether connecting people's Spotify accounts to the DJ would increase performance. (Pasick, 2015)

This paper takes a mathematical approach to recommending new songs to a person, based on similarity with previously listened and rated songs. These kinds of algorithms are very common in music systems like Spotify and very useful in a DJ-robot. The DJ-robot has to know which songs fit its current set and therefore needs these algorithms for track selection. (Pérez-Marcos & Batista, 2017)
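To make the idea of similarity-based recommendation concrete, the sketch below predicts a rating for each candidate track as the similarity-weighted average of the ratings of tracks that were already rated, and recommends the candidate with the highest prediction. This is a generic illustration, not the method of the cited paper; the feature vectors and the names `cosine_similarity`, `predicted_rating` and `recommend` are assumptions made for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equally long feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def predicted_rating(candidate, rated, features):
    """Similarity-weighted average of the known ratings."""
    weighted = [
        (cosine_similarity(features[candidate], features[track]), rating)
        for track, rating in rated.items()
    ]
    total_weight = sum(abs(weight) for weight, _ in weighted)
    if total_weight == 0:
        return 0.0
    return sum(weight * rating for weight, rating in weighted) / total_weight


def recommend(rated, candidates, features):
    """Return the candidate track with the highest predicted rating."""
    return max(candidates, key=lambda c: predicted_rating(c, rated, features))


if __name__ == "__main__":
    # Hypothetical two-dimensional features, e.g. (energy, acousticness).
    features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.9, 0.1], "d": [0.1, 0.9]}
    rated = {"a": 5, "b": 1}  # the listener liked "a" and disliked "b"
    print(recommend(rated, ["c", "d"], features))
```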

This paper describes the difficulty of matching two musical pieces because of the complexity of rhythm patterns. It then determines a procedure for minimizing the error in the matching of the rhythm. This article is not very recent, but it is very relevant to our problem. (Shmulevich & Povel, 1998)

In this article, the author states that the main melody in a piece of music is a significant feature for music style analysis. It proposes an algorithm that can be used to extract the melody from a piece and the post-processing that is needed to extract the music style. (Wen, Chen, Xu, Zhang & Wu, 2019)

This research presents a robot that is able to move according to the beat of the music and is also able to predict the beats in real time. The results show that the robot can adjust its steps in time with the beat times as the tempo changes. (Yoshii, Nakadai, Torii, Hasegawa, Tsujino, Komatani, Ogata & Okuno, 2007)

This paper describes Open Symphony, a web application that enables audience members to influence musical performances. They can indicate a preference for different elements of the musical composition in order to influence the performers. Users were generally satisfied and interested in this way of enjoying the musical performance and indicated a higher degree of engagement. (Zhang, Wu & Barthet, in press)

A first model

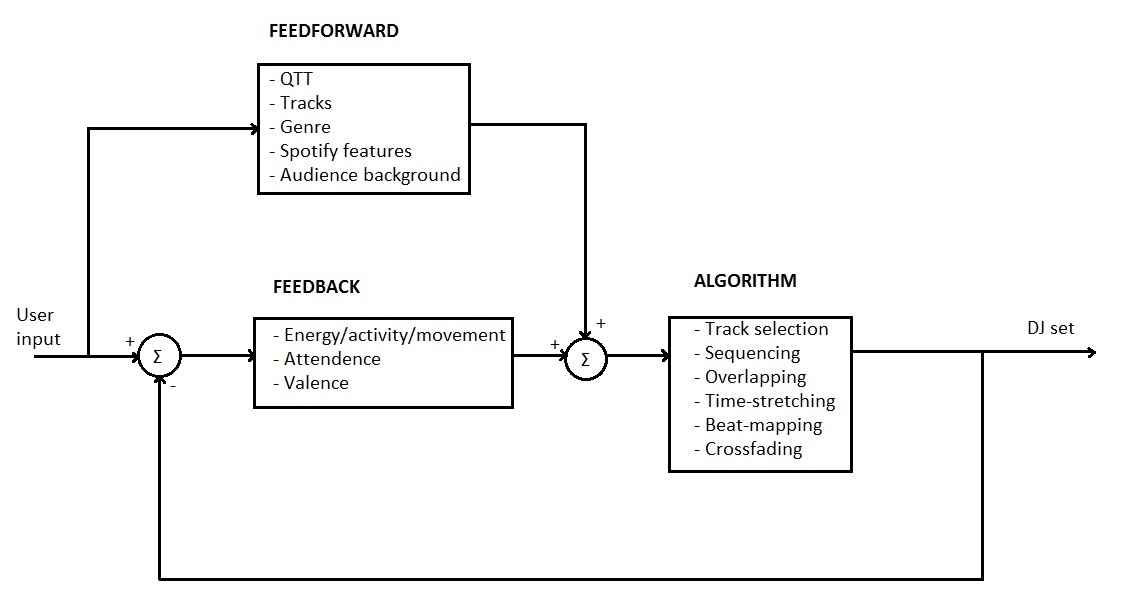

Based on the state of the art and the user needs a first model of our automated DJ system is made. We chose to depict it in a block diagram with separate blocks for the feedforward, the feedback and the algorithm itself.

Feedforward

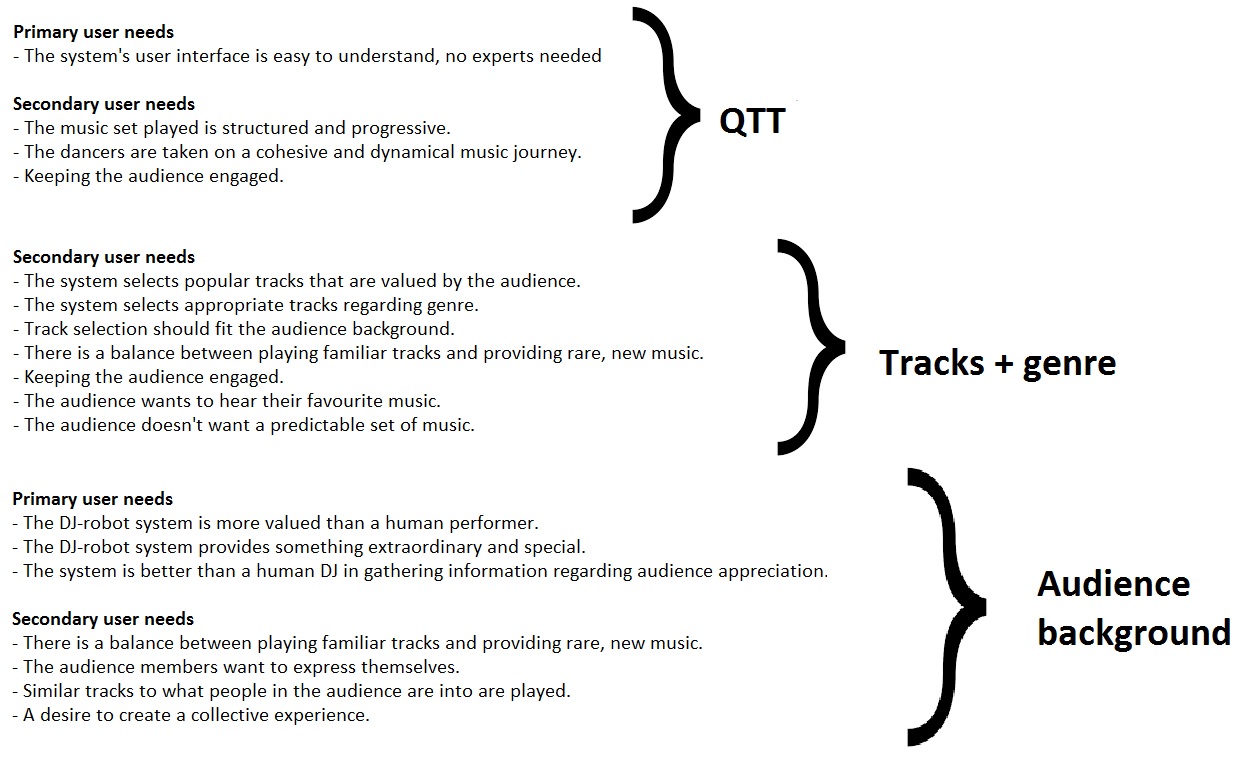

The feedforward part of our system is completely based on the user input. The user has a lot of knowledge about the desired DJ set to be played beforehand. This information is fed to the system to control it. Because this feedforward block is based on user input, it has to answer to the user needs. Below, a scheme is presented to show how the user needs relate to the feedforward parameters.

The first part of the feedforward is the desired QTT of the set. This answers to the secondary user needs of a structured, progressive musical journey over which a certain extent of control is possible. It will also keep the audience engaged. To fulfill the desired QTT, certain tracks are delivered to the system to pick from as feedforward. These tracks are delivered via a large database with all kinds of music in it, so that the system has enough options to pick from in order to form the best set. This also answers to the user need of a non-predictable music set.

Another parameter of the feedforward that will help in this selection is genre of the music. This will limit the algorithm's options for track selection, making the system more stable. The feedforward of tracks and genre answer to the secondary user needs of popular, valued tracks and appropriate selection regarding genre. Also, it keeps the audience engaged and makes it more fun for them to dance to their favourite music.

TODO (Mats): describe the most important Spotify features that we will use as feedforward.

In order to satisfy the audience, we have to feed background information about the audience members forward to the system. In that way, the system has knowledge about what the current audience is into. What someone is into could include their preferences regarding Spotify features and their favourite or most hated tracks. This answers to the primary user need of making the system something extraordinary and more valued than a human DJ. It also answers to the secondary user needs of keeping a balance between familiar and new music and of audience members wanting to express themselves. It also values the DJ's desire to play tracks similar to what the audience is into and the desire to create a collective experience by means of a music set.

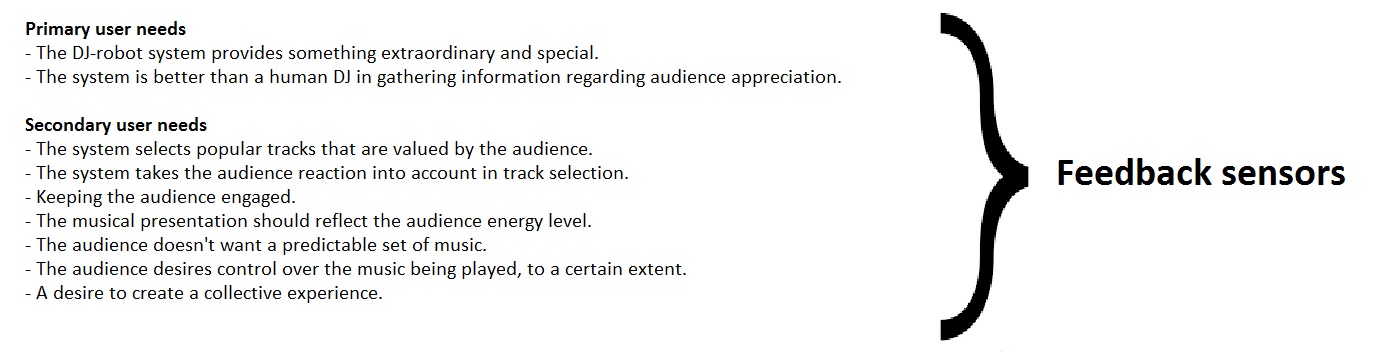

Feedback

The feedback of the system consists of sensor output. This is the part where the audience takes more control. The feedback sensor system detects how many persons are present on the dance floor, relative to the rest of the event area. This can be done by means of pressure sensors in the floor. This information cues whether the current music is appreciated or not. Another cue for appreciation of the music can be generated via active feedback of the crowd. For example, valence can be assessed by the public by means of technologies described in the state of the art section. This answers the secondary user need of having partial control over the music being played and that the track selection procedure takes the audience reaction into account such that it comes up with tracks that are valued by the audience members.
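The occupancy cue described above can be sketched as a simple ratio. The example assumes that each floor zone reports a total load in kilograms and that the load is roughly proportional to the number of people standing on it; the zone names and the function `dance_floor_share` are hypothetical.

```python
def dance_floor_share(zone_loads_kg, dance_zones):
    """Estimate the fraction of the crowd that is on the dance floor.

    zone_loads_kg: dict mapping zone name to measured load in kg (assumed format).
    dance_zones: set of zone names that belong to the dance floor.
    Because load is assumed proportional to head count, the average body
    weight cancels out in the ratio.
    """
    total = sum(zone_loads_kg.values())
    if total <= 0:
        return 0.0  # empty venue
    on_floor = sum(load for zone, load in zone_loads_kg.items() if zone in dance_zones)
    return on_floor / total


if __name__ == "__main__":
    loads = {"floor_a": 600.0, "floor_b": 300.0, "bar": 450.0, "lounge": 150.0}
    print(dance_floor_share(loads, {"floor_a", "floor_b"}))
```

Tracking how this share changes after a track change would say more about appreciation than a single reading.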

The other part of sensory feedback is the audience energy level. This energy level may include the activity or movement of the audience members. This can be measured passively by means of a wristband with different options for sensing activity or energy by means of heart rate, accelerometer data, sweat response or other options described in the state of the art section. The incorporation of audience energy level feedback answers to the primary user need of gathering information about the audience appreciation in a better way than a human DJ can. It also answers to the secondary user need of making the musical presentation reflect the audience energy level.
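One possible way to turn wristband accelerometer data into an energy level is sketched below. It assumes each wristband delivers (x, y, z) accelerations in m/s² and uses the mean deviation of the acceleration magnitude from gravity as a crude movement measure, averaged over all wristbands. Both the data format and the measure are assumptions for this example; heart rate or sweat response would need different processing.

```python
import math

GRAVITY = 9.81  # m/s^2, magnitude measured by a wristband at rest


def movement_energy(samples):
    """Mean absolute deviation of the acceleration magnitude from gravity.

    samples: list of (x, y, z) accelerations in m/s^2 (assumed format).
    A wristband at rest scores about 0; vigorous movement scores higher.
    """
    if not samples:
        return 0.0
    deviations = [abs(math.sqrt(x * x + y * y + z * z) - GRAVITY) for x, y, z in samples]
    return sum(deviations) / len(deviations)


def crowd_energy(samples_per_wristband):
    """Average movement energy over all wristbands."""
    levels = [movement_energy(samples) for samples in samples_per_wristband.values()]
    return sum(levels) / len(levels) if levels else 0.0


if __name__ == "__main__":
    data = {
        "resting": [(0.0, 0.0, 9.81)] * 3,
        "dancing": [(0.0, 0.0, 14.81), (0.0, 0.0, 4.81)],
    }
    print(crowd_energy(data))
```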

Below, a scheme is presented with the user needs that call for feedback sensors.

The algorithm

Based on the feedforward and feedback of the crowd, the tracks to be played and their sequence are selected. How we are going to do this is still an open question; we do not have a complete idea yet, because this is roughly the end goal of the project.

The next step in the algorithm is overlapping the tracks in the right way. Properly working algorithms that handle this task already exist, for example the algorithm described in (Cliff, 2006). We will describe the working principles of that algorithm in this section. The overlap section is meant to move seamlessly from one track to the next. In the described technology, the time set for overlap is proportional to the specified duration of the set and the number of tracks, making it a static time interval. Alterations to the duration of this interval are made when the tempo maps of the overlapping tracks produce a beat breakdown or when the overlap interval leads to an exceedance of the set duration.
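The relation between set duration, number of tracks and a static overlap interval can be illustrated with simple arithmetic. The sketch below is one possible reading, not the formula from (Cliff, 2006): it assumes every pair of consecutive tracks overlaps by the same amount, so that n tracks played back to back with n - 1 overlaps exactly fill the set. The function name `uniform_overlap` is hypothetical.

```python
def uniform_overlap(track_durations, set_duration):
    """Overlap (in seconds) between consecutive tracks so the set fits exactly.

    With n tracks and a constant overlap o, the set lasts
        sum(track_durations) - (n - 1) * o
    Solving for o gives the value returned here (assumed model).
    """
    n = len(track_durations)
    if n < 2:
        raise ValueError("need at least two tracks to overlap")
    overlap = (sum(track_durations) - set_duration) / (n - 1)
    if overlap < 0:
        raise ValueError("tracks are too short to fill the set")
    return overlap


if __name__ == "__main__":
    # Four tracks of five minutes each in an 18-minute set.
    print(uniform_overlap([300, 300, 300, 300], 1080))
```

In a real system this static value would only be a starting point, since the text above notes that the interval is altered when the tempo maps clash or the set duration would be exceeded.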

References

Atherton, W. E., Becker, D. O., McLean, J. G., Merkin, A. E., & Rhoades, D. B. (2008). U.S. Patent Application No. 11/466,176.

Barkhuus, L., & Jørgensen, T. (2008). Engaging the crowd: studies of audience-performer interaction. In CHI'08 extended abstracts on Human factors in computing systems (pp. 2925-2930).

Berkers, P., & Michael, J. (2017). Just what makes today’s music festivals so appealing?

Choi, K., & Cho, K. (2019). Deep unsupervised drum transcription. In Proceedings of the 20th International Society for Music Information Retrieval Conference, Delft, The Netherlands.

Choi, K., Fazekas, G., Cho, K., & Sandler, M. (2017). A tutorial on deep learning for music information retrieval. arXiv preprint arXiv:1709.04396.

Cliff, D. (2006). hpDJ: An automated DJ with floorshow feedback. In Consuming Music Together (pp. 241-264). Springer, Dordrecht.

De León, P. J. P., & Inesta, J. M. (2007). Pattern recognition approach for music style identification using shallow statistical descriptors. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 37(2), 248-257.

Feldmeier, M. C. (2003). Large group musical interaction using disposable wireless motion sensors (Doctoral dissertation, Massachusetts Institute of Technology).

Feldmeier, M., & Paradiso, J. A. (2004, April). Giveaway wireless sensors for large-group interaction. In CHI'04 Extended Abstracts on Human Factors in Computing Systems (pp. 1291-1292).

Freeman, J. (2005). Large audience participation, technology, and orchestral performance. In Proceedings of the International Computer Music Conference (pp. 757–760).

Gates, C., & Subramanian, S. (2006). A Lens on Technology’s Potential Roles for Facilitating Interactivity and Awareness in Nightclub. University of Saskatchewan: Saskatoon, Canada.

Gates, C., Subramanian, S., & Gutwin, C. (2006, June). DJs' perspectives on interaction and awareness in nightclubs. In Proceedings of the 6th conference on Designing Interactive systems (pp. 70-79).

Greasley, A. E. (2017). Commentary on: Solberg and Jensenius (2016) Investigation of intersubjectively embodied experience in a controlled electronic dance music setting. Empirical Musicology Review, 11(3-4), 319-323.

Humphrey, E. J., Durand, S., & McFee, B. (2018). OpenMIC-2018: An open dataset for multiple instrument recognition. In Proceedings of the 19th International Society for Music Information Retrieval Conference, Paris, France.

Hamel, P., & Eck, D. (2010, August). Learning features from music audio with deep belief networks. In ISMIR (Vol. 10, pp. 339-344).

Hödl, O., Fitzpatrick, G., Kayali, F., & Holland, S. (2017). Design implications for technology-mediated audience participation in live music. In Proceedings of the 14th Sound and Music Computing Conference, July 5-8 2017, Aalto University, Espoo, Finland (pp. 28–34).

Hoffman, G., & Weinberg, G. (2010). Interactive Jamming with Shimon: A Social Robotic Musician. Proceedings of the 28th of the International Conference Extended Abstracts on Human Factors in Computing Systems, 3097–3102.

Huron, D. (2002). Music information processing using the Humdrum toolkit: Concepts, examples, and lessons. Computer Music Journal, 26(2), 11-26.

Jannach, D., Kamehkhosh, I., & Lerche, L. (2017, April). Leveraging multi-dimensional user models for personalized next-track music recommendation. In Proceedings of the Symposium on Applied Computing (pp. 1635-1642).

Johnson, D. (n.a.) Robot DJ Used By Nightclub Replaces Resident DJs. Retrieved on 09-02-2020 from http://www.edmnightlife.com/robot-dj-used-by-nightclub-replaces-resident-djs/

Kashino, K., Nakadai, K., Kinoshita, T., & Tanaka, H. (1995). Application of Bayesian probability network to music scene analysis. Computational auditory scene analysis, 1(998), 1-15.

McAllister, G., Alcorn, M., & Strain, P. (2004). Interactive performance with wireless PDAs. In Proceedings of the International Computer Music Conference (pp. 1–4).

Pasick, A. (21 December 2015) The magic that makes Spotify's Discover Weekly playlists so damn good. Retrieved on 09-02-2020 from https://qz.com/571007/the-magic-that-makes-spotifys-discover-weekly-playlists-so-damn-good/

Pérez-Marcos, J., & Batista, V. L. (2017, June). Recommender system based on collaborative filtering for Spotify’s users. In International Conference on Practical Applications of Agents and Multi-Agent Systems (pp. 214-220). Springer, Cham.

Shmulevich, I., & Povel, D. J. (1998, December). Rhythm complexity measures for music pattern recognition. In 1998 IEEE Second Workshop on Multimedia Signal Processing (Cat. No. 98EX175) (pp. 167-172). IEEE.

Wen, R., Chen, K., Xu, K., Zhang, Y., & Wu, J. (2019, July). Music Main Melody Extraction by An Interval Pattern Recognition Algorithm. In 2019 Chinese Control Conference (CCC) (pp. 7728-7733). IEEE.

Yoshii, K., Nakadai, K., Torii, T., Hasegawa, Y., Tsujino, H., Komatani, K., Ogata, T. & Okuno, H. G. (2007, October). A biped robot that keeps steps in time with musical beats while listening to music with its own ears. In 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems (pp. 1743-1750). IEEE.

Zhang, L., Wu, Y., & Barthet, M. (in press). A Web Application for Audience Participation in Live Music Performance: The Open Symphony Use Case. NIME. Retrieved from https://core.ac.uk/reader/77040676