PRE2017 4 Groep7: Difference between revisions

m (→Potential Improvements for the Future: Fix typos and small improvements) |


| (74 intermediate revisions by 6 users not shown) | |||

| Line 3: | Line 3: | ||

Group Members | Group Members | ||

- Bas Voermans | | - Bas Voermans | 0957153 | ||

- Julian Smits | 0995642 | - Julian Smits | 0995642 | ||

| Line 14: | Line 14: | ||

- Emre Aydogan | 0902742 | - Emre Aydogan | 0902742 | ||

== Planning == | |||

[[File:PAG7Planning.JPG|center|upright=6.0|Planning]] | |||

== Problem Statement == | == Problem Statement == | ||

| Line 22: | Line 25: | ||

== Users == | == Users == | ||

=== Who are the users? === | === Who are the users? === | ||

This research is meant for users who have to weed through countless notifications while deciding what is important to them and what is not. Hence users who deal with many of these notifications are | This research is meant for users who have to weed through countless notifications while deciding what is important to them and what is not. Hence users who deal with many of these notifications are the main target group. | ||

This research will focus mainly on the student user group. This makes it easier to define the needs and requirements of this group, since the researchers are familiar with it. | This research will focus mainly on the student user group. This makes it easier to define the needs and requirements of this group, since the researchers are familiar with it. | ||

=== Requirements of the users === | === Requirements of the users === | ||

* The system should run on pre-owned devices | * The system should run on pre-owned devices | ||

* The system should filter important information out of incoming messages | * The system should communicate with existing university infrastructure | ||

* The system should tune its intrusiveness based on the user's feedback | * The system should manage the agenda of a user (e.g. notifications of upcoming deadlines, lectures and exams) | ||

* The system should filter important information out of incoming messages | |||

* The system should, when desired by the user, correspond on behalf of the student | |||

* The system should tune its intrusiveness based on the user's feedback | |||

== USE Aspects == | == USE Aspects == | ||

This chapter takes a look at the potential impact of the product of the research. If the product fully works and solves the problem described in the problem statement, it can have a great impact on its users and on society as a whole. Below, it is described what impact the product can have on the users, society, possibly relevant enterprises and the economy. Lastly, it is described whether or not certain features are desirable. | |||

=== Users === | === Users === | ||

The users of the product will, as described above, primarily be students, but it can also be extended to anybody with a smartphone who receives more messages than desired but does not want to miss out on any potentially important messages. | The users of the product will, as described above, primarily be students, but it can also be extended to anybody with a smartphone who receives more messages than desired but does not want to miss out on any potentially important messages. | ||

When a person no longer has to spend time reading all seemingly unimportant messages or scanning through them for important ones, they will have more time for the things they want to spend their time on. This is a positive effect of | When a person no longer has to spend time reading all seemingly unimportant messages or scanning through them for important ones, they will have more time for the things they want to spend their time on. This is a positive effect of the product, as it allows the user to focus on their core business. | ||

However, | However, the product might also have other effects on the user. Scanning text messages, or text in general, for relevant information can be a valuable skill to have, as it also has applications in other scenarios, such as scanning scientific articles or reports for important information. When an AI takes care of this task, users might lose this skill. This might hinder them in the other scenarios described above, where the AI possibly cannot help them find the important information. | ||

Another negative consequence might occur when the AI does not work perfectly, but the user trusts it to do so. In this scenario the user might miss an important message, which can have considerable consequences. In a work environment this can mean that the user is not informed about a (changed) deadline or meeting. In a social environment this can lead to irritation or even a quarrel. | Another negative consequence might occur when the AI does not work perfectly, but the user trusts it to do so. In this scenario the user might miss an important message, which can have considerable consequences. In a work environment this can mean that the user is not informed about a (changed) deadline or meeting. In a social environment this can lead to irritation or even a quarrel. | ||

=== Society === | === Society === | ||

When | When talking about society, this means all people, users and non-users of the product combined, and everything that comes with that. To look at what impact the product might have on society, it is researched how relations between individuals change, as well as how society as a whole behaves. The consequences for users as described above can be extended to a society level. If people become more productive as described above, it certainly would benefit society, as more can be accomplished. | ||

The fact that people might lose the ability to quickly scan text to find important information can also have an impact on society. If an entire generation grows up like this, there will also be nobody to teach it to younger generations, meaning that society as a whole will lose this skill. It can be questioned how relevant such a skill will still be in future society, but it is a loss nonetheless. | The fact that people might lose the ability to quickly scan text to find important information can also have an impact on society. If an entire generation grows up like this, there will also be nobody to teach it to younger generations, meaning that society as a whole will lose this skill. It can be questioned how relevant such a skill will still be in future society, but it is a loss nonetheless. | ||

Another thing that might occur when a large public uses | Another thing that might occur when a large public uses the product is that nobody reads all the seemingly unimportant messages any longer. If nobody reads them anymore, those who write those messages will probably stop doing so, removing the purpose of the product. | ||

=== Enterprise === | === Enterprise === | ||

Possibly relevant enterprises might be those that are interested in buying | Possibly relevant enterprises might be those that are interested in buying the product. This could be either a company like WhatsApp itself, which wants to integrate it into its own application, or a third party that wants to publish it as a standalone application. The companies, especially a third party, would want to make a profit from such an application. Companies like WhatsApp could offer it as a free service to make sure users keep using their application and possibly attract new users. Third-party companies cannot do this and would need to find another way to make a profit from the application. An easy solution seems to be to charge for the application. | ||

=== Economy === | === Economy === | ||

The product will reduce costs for users. A lot of people do not have time or do not want to filter the most important information themselves. For this they can hire a personal assistant to take over this task. However, the agent will be less expensive than a personal assistant, which saves money. | |||

A disadvantage of this is that personal assistants will have less work. If people use | A disadvantage of this is that personal assistants will have less work. If people use the product of this research instead of a personal assistant for this particular task, personal assistants are no longer needed for it. | ||

=== Desirability of possible features of the agent === | |||

In this section it is researched how desirable certain possible features of the agent are. These features would probably improve the performance of the agent, but might have negative ethical consequences. | |||

First it is analysed what the effect is of the agent having access to the university infrastructure or to certain other applications the user uses, such as their agenda. When the agent is able to use the information available on those platforms, such as which courses the user currently follows or when the next meeting is scheduled, the agent can make a better decision on whether or not a message is relevant at that moment in time. But is it desirable that the agent has access to these types of information? It could be seen as an infringement of the user's privacy. This argument can be countered by the fact that the user would have to give consent before the agent can access the information, as well as the fact that no human other than the user would have access to the information when the agent uses it, as it operates locally on the user's smartphone. The agent could spread personal information to third parties if it automatically responded to some messages. When these responses contain personal information, the privacy of the user could be lost. However, it is planned to add such features to the agent, and therefore the privacy of the user will be guaranteed. | |||

Next it is analysed whether or not the agent could be seen as a form of censorship. By hiding certain messages, the agent could influence the user's opinion and behaviour. If the algorithm of the agent could be manipulated by third parties to always block or show certain messages, it could be seen as a form of censorship. This would certainly not be desirable. Therefore it should be impossible for third parties to influence the agent's algorithm. When the application works locally on the user's phone, this should be the case. Furthermore, the agent only blocks or shows the notification about a message, not the message itself. When the user opens the chat application, such as WhatsApp, they can still read all the messages they received, including those for which they did not receive a notification. Therefore, in the event that a third party could abuse the application to censor certain messages, it would only be partial censorship. Thus it can be concluded that the application will not lead to censorship. | |||

== Approach == | == Approach == | ||

To start off, research into the state of the art will be done to acquire the knowledge needed for a good study of what the desired product should be. Next, an analysis will be made of the User, Society and Enterprise (USE) aspects, with the associated advantages and disadvantages. At this point the description of the prototype will be worked out in detail and construction of the prototype will start. At the same time, research will be done to analyse the different approaches to filtering the incoming messages and the impact they have. The results of the research will be implemented in the prototype. When the prototype is complete, the goal of the project will be reflected upon and some further improvements to the prototype can be made. | To start off, research into the state of the art will be done to acquire the knowledge needed for a good study of what the desired product should be. Next, an analysis will be made of the User, Society and Enterprise (USE) aspects, with the associated advantages and disadvantages. At this point the description of the prototype will be worked out in detail and construction of the prototype will start. At the same time, research will be done to analyse the different approaches to filtering the incoming messages and the impact they have. The results of the research will be implemented in the prototype. When the prototype is complete, the goal of the project will be reflected upon and some further improvements to the prototype can be made. | ||

== State of the art == | == State of the art == | ||

| Line 90: | Line 99: | ||

In the field of text categorization and classification, much research has already been done. First, naive Bayes can be used in different variants to classify text messages. Adding preprocessing or incorporating additional features did not increase or decrease the efficiency drastically. However, it does reduce the feature space of the classification algorithm, which is beneficial when working with limited resources. Discriminative or generative recurrent neural networks can also be used for text classification. Both have their own uses, and which one is better depends on the scenario. The generative model is especially effective for so-called zero-shot learning, which is about applying knowledge from different tasks to tasks that the model did not see before. The discriminative model, however, is more effective on larger datasets. These kinds of text classification can also be used to find recommendations for users, or to filter messages on their relevance. A learning personal agent can be used to find new relevant information. The agent both learns from the user what they deem relevant, and classifies text to find whether or not it is indeed relevant to said user. A different approach to text classification is a keyword-based approach. By filtering text messages on relevant keywords, the number of notifications that need to be sent to the user can be greatly reduced, sending only notifications of those messages that are marked urgent or important. This method is also quite effective. Lastly, to ease the work of the text classification algorithms, preprocessing can be done. Removing words that are seemingly irrelevant for determining the classification makes the classification faster and reduces the feature space. Different techniques can be used to remove the irrelevant words, all with their pros and cons. | In the field of text categorization and classification, much research has already been done. 
First, naive Bayes can be used in different variants to classify text messages. Adding preprocessing or incorporating additional features did not increase or decrease the efficiency drastically. However, it does reduce the feature space of the classification algorithm, which is beneficial when working with limited resources. Discriminative or generative recurrent neural networks can also be used for text classification. Both have their own uses, and which one is better depends on the scenario. The generative model is especially effective for so-called zero-shot learning, which is about applying knowledge from different tasks to tasks that the model did not see before. The discriminative model, however, is more effective on larger datasets. These kinds of text classification can also be used to find recommendations for users, or to filter messages on their relevance. A learning personal agent can be used to find new relevant information. The agent both learns from the user what they deem relevant, and classifies text to find whether or not it is indeed relevant to said user. A different approach to text classification is a keyword-based approach. By filtering text messages on relevant keywords, the number of notifications that need to be sent to the user can be greatly reduced, sending only notifications of those messages that are marked urgent or important. This method is also quite effective. Lastly, to ease the work of the text classification algorithms, preprocessing can be done. Removing words that are seemingly irrelevant for determining the classification makes the classification faster and reduces the feature space. Different techniques can be used to remove the irrelevant words, all with their pros and cons. | ||
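As an illustration of the naive Bayes approach mentioned above, the following is a minimal sketch of a multinomial naive Bayes classifier with add-one smoothing. It is not the implementation used in any of the cited papers or in this project; the class name, the two labels and the training messages are all hypothetical and invented for this example.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of word frequencies
        self.doc_counts = Counter()  # label -> number of training messages
        self.vocabulary = set()

    def train(self, examples):
        for text, label in examples:
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for word in tokenize(text):
                counts[word] += 1
                self.vocabulary.add(word)

    def log_score(self, text, label):
        # log P(label) + sum over words of log P(word | label)
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        counts = self.word_counts[label]
        denominator = sum(counts.values()) + len(self.vocabulary)
        for word in tokenize(text):
            score += math.log((counts[word] + 1) / denominator)
        return score

    def classify(self, text):
        return max(self.doc_counts, key=lambda label: self.log_score(text, label))


# Hypothetical training data, invented for illustration only.
TRAINING = [
    ("deadline for the assignment moved to friday", "important"),
    ("exam room changed please check the schedule", "important"),
    ("lecture cancelled tomorrow morning", "important"),
    ("lol did you see that video", "unimportant"),
    ("anyone want pizza tonight", "unimportant"),
    ("haha nice one", "unimportant"),
]

model = NaiveBayes()
model.train(TRAINING)
print(model.classify("the exam deadline changed"))
print(model.classify("haha pizza video"))
```

With so little training data the result only shows the mechanics; a usable classifier would need a far larger labelled set of messages.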

== State of the art sources == | |||

=== Sources - Personal assistant/email filtering === | |||

'''Understanding adoption of intelligent personal assistants: A parasocial relationship perspective'''<ref>https://www.emeraldinsight.com/doi/full/10.1108/IMDS-05-2017-0214</ref> | |||

The article is about intelligent personal assistants (IPAs). IPAs help, for example, with sending text messages, setting alarms, planning schedules, and ordering food. The article gives a review of the existing literature on intelligent home assistants. The writers say that they do not know of a study that analyzes factors affecting intentions to use IPAs. They only know of a few studies that have investigated user satisfaction with IPAs. Furthermore, the parasocial relationship (PSR) theory is presented. According to the article, this theory says that a person responds to a character “similarly to how they feel, think and behave in real-life encounters” even though the character appears only on TV. Lastly, the article describes the study itself in detail. The hypotheses of this study are: | |||

H1. Task attraction perceived by a user of an IPA will have a positive influence on his or her PSR with the IPA. | |||

H2. Task attraction perceived by a user of an IPA will have a positive influence on his or her satisfaction with the IPA. | |||

H3. Social attraction perceived by a user of an IPA will have a positive influence on his or her PSR with the IPA. | |||

H4. Physical attraction perceived by a user of an IPA will have a positive influence on his or her PSR with the IPA. | |||

H5. Security/privacy risk perceived by a user of an IPA will have a negative influence on his or her PSR with the IPA. | |||

H6. A person’s PSR with an IPA will have a positive influence on his or her satisfaction with the IPA. | |||

H7. A person’s satisfaction with an IPA will have a positive influence on his or her continuance intention toward the IPA. | |||

'''Personal assistant for your emails streamlines your life'''<ref>https://www.sciencedirect.com/science/article/pii/S0262407913600925</ref> | |||

This article is about GmailValet, which is a personal assistant for emails. Normally, a personal assistant who turns an overflowing inbox into a to-do list is a luxury reserved for the corporate elite. The developers of GmailValet wanted to make this affordable as well, at less than $2 a day. | |||

'''Everyone's Assistant'''<ref>https://search.proquest.com/docview/1704945627/50DE051B4E904379PQ/4?accountid=27128</ref> | |||

This article is about “Everyone’s Assistant”, a California-based company offering personal assistant services in Los Angeles and surrounding areas. The company makes personal assistant services affordable and accessible for everyone. The personal assistants cost $25 an hour and can be booked for the same day or for future services. | |||

'''Experience With a Learning Personal Assistant'''<ref>https://www.ri.cmu.edu/pub_files/pub1/mitchell_tom_1994_2/mitchell_tom_1994_2.pdf</ref> | |||

This article is about the potential of machine learning for personal software assistants, that is, the automatic creation and maintenance of customized knowledge. One particular learning assistant is a calendar manager called Calendar APprentice (CAP). This assistant learns from experience what the user's scheduling preferences are. | |||

'''SwiftFile: An Intelligent Assistant for Organizing E-Mail'''<ref>http://www.aaai.org/Papers/Symposia/Spring/2000/SS-00-01/SS00-01-023.pdf</ref> | |||

This article is about SwiftFile, an intelligent assistant for organizing e-mail. It helps classify e-mail by predicting the three folders that are most likely to be correct. It also provides shortcut buttons that make selecting between folders faster. | |||

'''An intelligent personal assistant robot: BoBi secretary'''<ref>https://ieeexplore.ieee.org/document/8273196/</ref> | |||

This article is about an intelligent robot named BoBi secretary. When closed, it is a box the size of a smartphone, but it can be transformed into a movable robot. The robot can entertain, but it can also do all the work a secretary does. The three main functions are intelligent meeting recording, multilingual interpretation and reading papers. | |||

'''RADAR: A Personal Assistant that Learns to Reduce Email Overload'''<ref>http://www.aaai.org/Papers/Workshops/2008/WS-08-04/WS08-04-004.pdf</ref> | |||

This article discusses artificial learning agents that manage an email system. The problem described in the article is that email overload causes stress and discomfort. A big open question is whether or not users will accept an agent managing their email. Nevertheless, the agent improved quickly and increased the productivity of the user. | |||

'''Intelligent Personal Assistant — Implementation'''<ref>https://link.springer.com/chapter/10.1007/978-1-85233-842-8_10</ref> | |||

This article investigates the best and most promising agents currently offered by major companies such as Apple and Microsoft. The paper concludes that Cortana is currently the best-working agent in assisting the user. | |||

'''Intelligent Personal Assistant'''<ref>http://ijifr.com/pdfsave/10-05-2017475IJIFR-V4-E8-060.pdf</ref> | |||

This article is about current speech-driven agents that perform tasks for the user. In the paper this communication becomes bi-directional, so the agent also responds to the user. It also stores user preferences to improve its learning capacity. | |||

'''Voice mail system with personal assistant provisioning'''<ref>https://patents.google.com/patent/US6792082B1/en</ref> | |||

A patent that describes a PA that can be used to keep track of address books and to make predictions about what the user wants to do. The patent also suggests text-to-speech, so that the user can listen to the response rather than read it. The PA should also remember previous commands and respond accordingly to related follow-up commands. | |||

'''USER MODEL OF A PERSONAL ASSISTANT IN COLLABORATIVE DESIGN ENVIRONMENTS'''<ref>http://papers.cumincad.org/data/works/att/d5b5.content.06921.pdf</ref> | |||

The article is about creating models of the users of PAs and of the different domains associated with the user and the PA. The article suggests four different user models: user interest model, user behavior model, inference component and collaboration component. According to the article the user should have the right to change the user model, since ‘the user model can be more accurate with the aid of the user.’ Two approaches are through periodically promoted dialogs or by giving the user the final word. | |||

'''A Personal Email Assistant'''<ref>https://s3.amazonaws.com/academia.edu.documents/39232090/0c9605226714eeedad000000.pdf?AWSAccessKeyId=AKIAIWOWYYGZ2Y53UL3A&Expires=1524910399&Signature=BjYwD6z0FRQMoazG%2BSJHjHc8nZQ%3D&response-content-disposition=inline%3B%20filename%3DA_personal_email_assistant.pdf</ref> | |||

The paper is about Personal Email Assistants (PEA) that have the ability of processing emails with the help of machine-learning. The assistant can be used in multiple different email systems. Some key features of the PEA described in the paper are: smart vacation responder, junk mail filter and prioritization. The team members of the paper found the PEA good enough to be used in daily life. | |||

'''Rapid development of virtual personal assistant applications'''<ref>https://patents.google.com/patent/US20140337814A1/en</ref> | |||

This patent is about creating a platform for development of a virtual personal assistant (VPA). The patent works by having three ‘layers’, first the user interface that interacts with the user. Next is the VPA engine that analyses the user intent and also generates outputs. The last layer is the domain layer that contains domain specific components like grammar or language. | |||

'''A Softbot-Based Interface to the Internet'''<ref>https://homes.cs.washington.edu/~weld/papers/cacm.pdf</ref> | |||

The article describes an early version of a PA that is able to interact with files, search databases and interact with other programs. The interface for the Softbot is built on four ideas: Goal oriented, Charitable, Balanced and Integrated. Furthermore, different modules could be created to communicate with the softbot in different ways, like speech or writing. | |||

'''Socially-Aware Animated Intelligent Personal Assistant Agent'''<ref>http://www.aclweb.org/anthology/W16-3628</ref> | |||

The article describes a Socially-Aware Robot Assistant (SARA) that is able to analyse the user in other ways than normal input, for example the visual, vocal and verbal behaviours. By analysing these behaviours SARA is able to have its own visual, vocal and verbal behaviours. The goal of SARA is to create a personalized PA that, in case of the article, can make recommendations to the visitors of an event. | |||

'''JarPi: A low-cost raspberry pi based personal assistant for small-scale fishermen'''<ref>https://ieeexplore.ieee.org/document/8279618/</ref> | |||

This article describes how fishermen can also have a form of personal assistant that keeps track of the weather and the current position at sea. Normally such systems are very expensive and not available to small-scale fishermen, but using cheap technology such as the Raspberry Pi a good alternative can be created. | |||

'''Solution to abbreviated words in text messaging for personal assistant application'''<ref>https://ieeexplore.ieee.org/document/8251876/</ref> | |||

This article describes how a personal assistant that reads incoming text messages such as SMS-messages can handle abbreviations, which are commonly used in text based messaging. The study was performed with abbreviations common in the Indonesian language, based on a survey. | |||

'''A voice-controlled personal assistant robot'''<ref>https://ieeexplore.ieee.org/document/7150798/</ref> | |||

This article describes the design and testing of a voice-controlled physical personal assistant robot. Commands can be given via a smartphone to the robot, which can perform various tasks. | |||

'''Management Information Systems in Knowledge Economy'''<ref>https://books.google.nl/books?id=sRqlLLOboagC&lpg=PA246&dq=artificial%20intelligence%20personal%20assistant%20administrative%20tasks&hl=nl&pg=PR2#v=onepage&q=artificial%20intelligence%20personal%20assistant%20administrative%20tasks&f=false</ref> | |||

'''AI Personal Assistants: How will they change our lives?'''<ref>https://www.fungglobalretailtech.com/research/ai-personal-assistants-will-change-lives/</ref> | |||

'''How artificial intelligence will redefine management'''<ref>https://hbr.org/2016/11/how-artificial-intelligence-will-redefine-management</ref> | |||

'''How can AI transform public administration?'''<ref>http://www.icdk.us/aai/public_administration</ref> | |||

=== Sources - Spam Filters/Machine Learning === | |||

'''Intellert: a novel approach for content-priority based message filtering'''<ref>https://ieeexplore.ieee.org/document/7940206/ </ref> | |||

This article describes how filtering text based on its content and keywords leads to a great reduction in the number of notifications that have to be sent, by only sending notifications for those messages that are marked urgent or important. The results look promising. | |||
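The keyword-based idea summarized above can be sketched as follows. This is only a minimal illustration, not the method of the cited paper: the keyword lists, the three priority levels and the function names are assumptions made for this example.

```python
import re

# Hypothetical keyword lists; a real deployment would tune these per user.
URGENT_KEYWORDS = {"urgent", "asap", "deadline", "exam"}
IMPORTANT_KEYWORDS = {"meeting", "lecture", "assignment", "schedule"}


def priority(message):
    """Return 'urgent', 'important' or 'normal' based on keyword hits."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    if words & URGENT_KEYWORDS:
        return "urgent"
    if words & IMPORTANT_KEYWORDS:
        return "important"
    return "normal"


def should_notify(message):
    """Only messages marked urgent or important produce a notification."""
    return priority(message) != "normal"


# Hypothetical incoming messages.
messages = [
    "Reminder: the DEADLINE for the report is tonight!",
    "The meeting has been moved to room 4.",
    "haha that is so funny",
]
notified = [m for m in messages if should_notify(m)]
print(len(notified), "of", len(messages), "messages trigger a notification")
```

Matching on whole words rather than substrings avoids false hits such as "exam" inside "example", at the cost of missing inflected forms like "deadlines".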

'''Content-based SMS spam filtering based on the Scaled Conjugate Gradient backpropagation algorithm'''<ref>https://ieeexplore.ieee.org/document/7382023/ </ref> | |||

'''Classification of english phrases and SMS text messages using Bayes and Support Vector Machine classifiers'''<ref>https://ieeexplore.ieee.org/document/5090166/ </ref> | |||

'''Generative and Discriminative Text Classification with Recurrent Neural Networks'''<ref>https://arxiv.org/abs/1703.01898 </ref> | |||

This article analyses the difference between discriminative and generative Recurrent Neural Networks (RNN) for text classification. The authors find that the generative model is more effective most of the time, while it does have a higher error rate. The generative model is especially effective for zero-shot learning, which is about applying knowledge from different tasks to tasks that the model did not see before. The discriminative model is more effective on larger datasets. The datasets that are tested range from two to fourteen classifications. | |||

'''SMS spam filtering and thread identification using bi-level text classification and clustering techniques'''<ref>http://journals.sagepub.com/doi/pdf/10.1177/0165551515616310 </ref> | |||

The problem that this article addresses is the large number of SMS messages that are sent, and the difficulty of identifying spam or threads in these messages. First the spam is classified, which can be done with one of four popular text classifiers: NB, SVM, LDA and NMF. These are all binary classification algorithms that work with either hyperplanes, matrices or probabilities to split up the classes. Next, clustering is applied to construct the SMS threads, which is done by either the K-means algorithm or NMF. The article finds that the choice of algorithms is very important. The algorithms used in the experiment are SVM classification and NMF clustering, which give good results. | |||

'''Spam filtering using integrated distribution-based balancing approach and regularized deep neural networks'''<ref>https://link.springer.com/content/pdf/10.1007/s10489-018-1161-y.pdf</ref> | |||

This article is about creating a spam filter with the help of a Recurrent Neural Network. The spam filter is intended for both SMS and email. The network is tested on four spam datasets: Enron, SpamAssassin, SMS and Social Networking. The experiment starts by pre-processing the datasets such that there are only lowercase letters, no special characters and no stop words, since these carry no semantic information. The results of the experiment are compared with the following spam filters: Minimum description length, Factorial design analysis using SVM and NB, Incremental Learning, Random Forest, Voting and CNN. The model outperforms the others on three of the four datasets by a small margin; the accuracy is around 98% for those three and 92% for the last one. | |||
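The pre-processing steps named above (lowercasing, removing special characters, removing stop words) can be sketched as below. The stop-word list is a small hypothetical sample chosen for this example, not the list used in the cited article.

```python
import re

# A small, hypothetical stop-word list; real lists contain far more words.
STOP_WORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "in", "on", "for", "at"}


def preprocess(text):
    """Lowercase, strip special characters and drop stop words."""
    text = text.lower()
    # Replace everything except letters, digits and whitespace with a space.
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return [token for token in text.split() if token not in STOP_WORDS]


print(preprocess("WIN a FREE prize!!! Reply to the number 12345, NOW."))
# prints ['win', 'free', 'prize', 'reply', 'number', '12345', 'now']
```

Every token removed here is one fewer feature for the classifier, which is the reduction of the feature space the text refers to.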

'''A Comparative Study on Feature Selection in Text Categorization'''<ref> http://www.surdeanu.info/mihai/teaching/ista555-spring15/readings/yang97comparative.pdf </ref> | |||

This article researches five different techniques to categorize text. | |||

'''A Learning Personal Agent for Text Filtering and Notification'''<ref>http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=B2FB1F51D66DC0D90FA785BB9D087172?doi=10.1.1.44.562&rep=rep1&type=pdf </ref> | |||

This article is about an agent that is used for managing notifications. This agent acts as a personal assistant. This agent learns the model of the user preferences in order to notify a user when relevant information becomes available. | |||

'''Combining Collaborative Filtering with Personal Agents for Better Recommendations'''<ref>http://www.aaai.org/Papers/AAAI/1999/AAAI99-063.pdf </ref> | |||

This article is about information filtering agents that identify which item a user finds worthwhile. This paper shows that Collaborative filtering can be used to combine personal Information filtering agents to produce better recommendations. | |||

'''Spam filtering using integrated distribution-based balancing approach and regularized deep neural networks. '''<ref> https://search.proquest.com/docview/2017545987/B4CAA5405B794CA8PQ/1?accountid=27128 </ref> | |||

This article is about anti-spam filters that use machine learning and the calculation of word weights to categorize messages as spam or non-spam. This categorization is becoming more and more difficult because spammers increasingly use legitimate words. | |||

'''Robust personalizable spam filtering via local and global discrimination modeling'''<ref>https://search.proquest.com/docview/1270351132/B4CAA5405B794CA8PQ/3?accountid=27128 </ref>

There are two options for filtering: a single global filter for all users or a personalized filter for each user. This article presents a personalized filter and the challenges that come with it. The authors also present a strategy to personalize a global filter.

'''Mail server probability spam filter'''<ref>https://patents.google.com/patent/US7320020B2/en </ref>

This article is about a spam filter that uses a white list, a black list, a probability filter and a keyword filter. The probability filter uses a general mail corpus and a general spam corpus to calculate the probability that an email is spam.

'''The Art and Science of how spam filters work'''<ref>https://securityintelligence.com/the-art-and-science-of-how-spam-filters-work/</ref>

This article explains the principle of blacklists, which analyze the header of a message to determine whether it is spam. In addition, messages that contain statistically dangerous files, such as .exe files, are often automatically blocked by content filters. The article ends with a section on machine learning in spam filters: the algorithms used in these filters try to find characteristics that are common in spam.

'''The Effects of Different Bayesian Poison Methods on the Quality of the Bayesian Spam Filter ‘SpamBayes’'''<ref>https://www.cs.ru.nl/bachelorscripties/2009/Martijn_Sprengers___0513288___The_Effects_of_Different_Bayesian_Poison_Methods_on_the_Quality_of_the_Bayesian_Spam_Filter_SpamBayes.pdf </ref>

This article discusses how spammers try to elude spam filters. The principle works as follows: a few words that are more likely to appear in non-spam messages are added in order to trick spam filters into believing the message is legitimate. This article illustrates that spam evolves, and that filters have to evolve with it as a result.

'''A review of machine learning approaches to Spam filtering'''<ref>https://www.sciencedirect.com/science/article/pii/S095741740900181X</ref>

This paper presents a review of existing approaches to spam filtering and describes how the researchers believe certain methods could be improved.

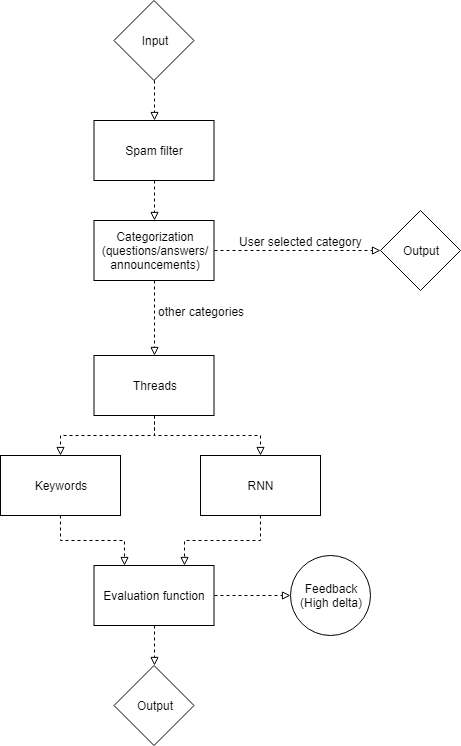

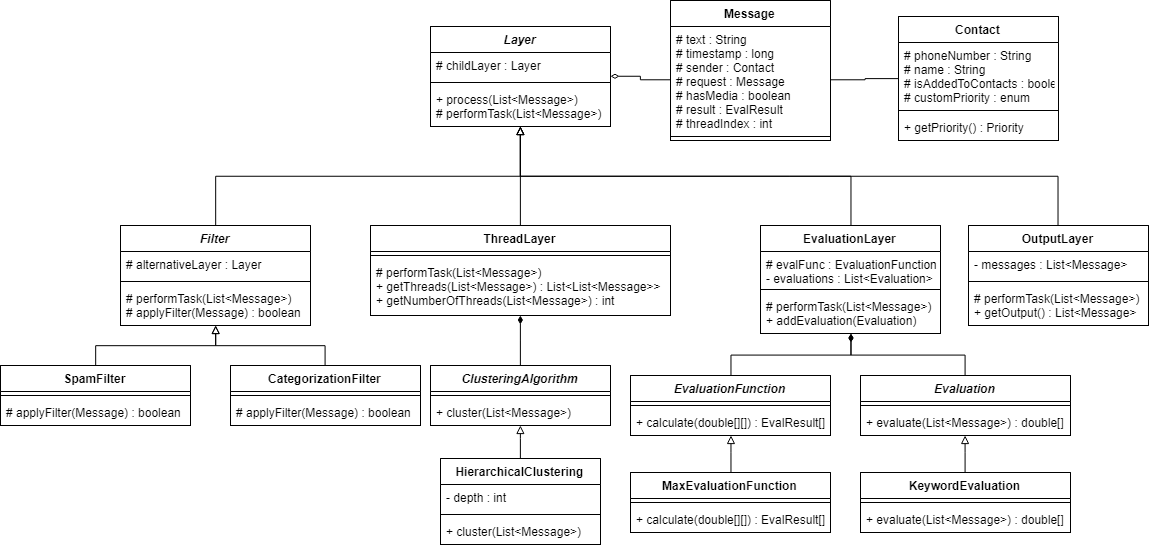

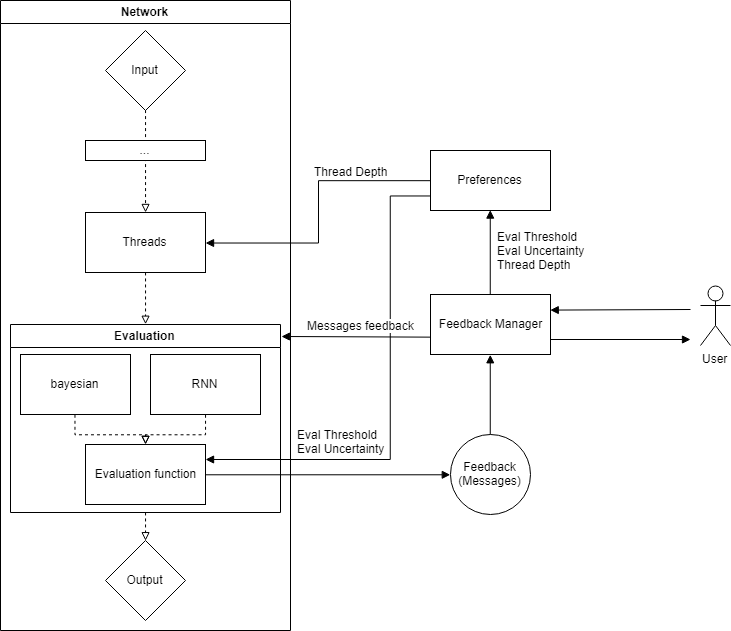

== Prototype description ==

| Line 112: | Line 269: | ||

==== Spam filter ====

The first step in analyzing the messages is to filter out the spam. The purpose of this is to remove the messages that do not really have an influence on the context. For example, smileys are mostly not important, so a message that consists only of smileys can be categorized as spam and filtered out. In this step the program looks only at the literal text of a message. For example, a message with a strange combination of letters would be filtered out. Thus the program does not pay attention to the meaning of a message but to its literal content. Filtering out the spam before the analysis is important because the program then does not have to analyze messages that have no influence in the first place.

==== Categorization ====

| Line 132: | Line 289: | ||

===== Recurrent neural networks =====

The second method is recurrent neural networks. This method learns to categorize messages and therefore needs training data. There are two ways of obtaining this data. The first is to label messages by hand and use these to train the neural network. The second is to give a set of messages to the user of the product and let the user categorize them. This creates personalized training data for each user, so a neural network trained on it is also personalized to that user. Combining these two sources of training data is the best option, because the neural network then has more training data and is not fully personalized. The fact that it is not fully personalized is a good thing, because the program would otherwise rely fully on the user's categorization: if the user were not able to categorize the messages well, the program would perform badly. Using both sources of training data avoids this. A neural network categorizes a certain percentage of messages correctly. One option is to let the user specify a target percentage and to keep training until this percentage is reached.

Recurrent neural networks have a simple structure with a built-in feedback loop, which allows them to act as a forecasting engine. They are extremely versatile in their applications. In feedforward neural networks signals flow in only one direction, from input to output, one layer at a time. In a recurrent net, the output of a layer is added to the next input and fed back into the same layer, which is typically the only layer in the network. A recurrent net can receive a sequence as input and can also produce a sequence as output; this ability makes recurrent neural networks more versatile than feedforward networks.

An RNN is typically an extremely difficult net to train. Since the network is trained with backpropagation, one runs into the problem of the vanishing gradient. The vanishing gradient is exponentially worse for an RNN, because each time step is the equivalent of an entire layer in a feedforward network, i.e. training an RNN for 100 time steps is like training a feedforward net with one hundred layers. This leads to exponentially small gradients and a decay of information through time. There are several ways to address this problem, the most popular of which is gating. Gating is a technique with which the network decides when to forget the current input and when to remember it for future time steps.
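The vanishing gradient described above can be illustrated with a small sketch. It assumes a scalar RNN with a tanh activation and hand-picked weights (<code>weight</code> and <code>input_weight</code> are illustrative values, not taken from the prototype) and tracks how much the final state still depends on the initial state after each time step.

```python
import math


def gradient_through_time(weight, inputs, input_weight=0.5):
    """Return |d h_t / d h_0| after every time step of a scalar tanh RNN.

    The recurrence is h_t = tanh(weight * h_{t-1} + input_weight * x_t).
    All parameter values are illustrative assumptions.
    """
    h = 0.0
    grad = 1.0
    history = []
    for x in inputs:
        h = math.tanh(weight * h + input_weight * x)
        # Chain rule: d h_t / d h_{t-1} = weight * (1 - h_t ** 2)
        grad *= weight * (1.0 - h * h)
        history.append(abs(grad))
    return history


steps = gradient_through_time(weight=0.9, inputs=[1.0] * 100)
print(steps[0], steps[9], steps[99])
```

Every time step multiplies the gradient by a factor smaller than one, so after 100 steps the contribution of the first input has practically disappeared. Gated architectures are designed to counter this effect.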

Both options of filtering the messages can be used separately or combined. An analysis will be performed when both filters are finished, and based on that analysis the evaluation function will be created, which is explained in the next section.

| Line 138: | Line 298: | ||

==== Evaluation function ====

The evaluation subsystem evaluates incoming messages using the results of the different filtering options. Depending on those results, a different evaluation function can be chosen. Some ideas for the evaluation function are: using the result of only one of the filters, taking the average, taking the maximum or minimum, or looking at the magnitude of the difference. The evaluation subsystem also allows for personalization, since users can indicate how many messages should be filtered out; this can be transformed into a threshold that is compared to the result of the evaluation function. Furthermore, personalization can be applied by asking for feedback.
Users will most likely not want to give feedback on every message that is filtered, so the results of the two filtering options could be used to estimate how certain the program is about filtering that message. If, for example, the difference between the two filtering options exceeds a value that can be indirectly set by the user, the program can show the message and ask whether it is useful.
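A minimal sketch of such an evaluation function is given below. It assumes that every filter returns an importance score between 0 and 1; the function names, the <code>threshold</code> and the <code>feedback_margin</code> are hypothetical and only serve to illustrate the idea.

```python
def combine(scores, mode="average"):
    """Combine the scores of the individual filters into one value."""
    if mode == "average":
        return sum(scores) / len(scores)
    if mode == "max":
        return max(scores)
    if mode == "min":
        return min(scores)
    if mode == "difference":
        return max(scores) - min(scores)
    raise ValueError(f"unknown mode: {mode}")


def evaluate(scores, threshold, feedback_margin, mode="average"):
    """Return (show_message, ask_feedback) for one incoming message.

    threshold is derived from how strictly the user wants to filter;
    feedback_margin is the disagreement above which feedback is requested.
    """
    show_message = combine(scores, mode) >= threshold
    ask_feedback = combine(scores, "difference") > feedback_margin
    return show_message, ask_feedback


print(evaluate([0.9, 0.7], threshold=0.5, feedback_margin=0.3))
print(evaluate([0.9, 0.2], threshold=0.6, feedback_margin=0.3))
```

In the second call the two filters disagree strongly, so the sketch hides the message but marks it as a candidate for asking the user for feedback.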

== Preprocessing ==

The input the program gets is usually very raw. When analyzing emails, the input is mostly well-formed text without typos or strange, unimportant messages in between. The program being built, on the other hand, has to take into account that in WhatsApp many typos are made and many messages are sent that do not seem to mean anything at first sight. A user who is more used to WhatsApp language gets to know abbreviations that do not exist in normal spoken language. Preprocessing is therefore necessary to make it much easier for the program to “read” and interpret the messages.

Removing words such as “the”, “is”, “was” and “where” might be a good idea. These words, called stopwords, are generally not important to the meaning of a message. This would be implemented with a list of stopwords, the stoplist: every message is scanned for these stopwords, which are then removed from the message.

Removing the stopwords would benefit the program because it would have less clutter and fewer unimportant words to analyze. However, it would make it harder to identify questions, since it removes one of the most important parts of a message that identify it as a question.

This preprocessing step would therefore suit the program better if it is done after the messages are categorized, as a processing step somewhere in the middle of the program.
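The stoplist idea can be sketched as follows. The stoplist shown here is a small hypothetical example rather than the list used in the prototype, and the tokenization with a regular expression is an assumption as well.

```python
import re

# Hypothetical stoplist; a real one would contain many more words.
STOPLIST = {"the", "is", "was", "where", "a", "an", "of", "to"}


def remove_stopwords(message, stoplist=STOPLIST):
    """Lowercase the message, split it into words and drop the stopwords."""
    tokens = re.findall(r"[a-z0-9']+", message.lower())
    return [token for token in tokens if token not in stoplist]


print(remove_stopwords("Where is the meeting?"))  # ['meeting']
```

The example also shows the drawback mentioned above: after removal, nothing in the remaining words indicates that the message was a question.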

An addition to the preprocessing that other research papers suggest is to reduce all verbs to their root form. This is called stemming. For example, in a sentence containing the word “talking”, it would be replaced with “talk”, and all other forms of the verb “talk” would be replaced with “talk” as well. The set of words that have to be checked then becomes much smaller, because the list only needs one word instead of five different forms of that verb.
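As a rough illustration of stemming, the sketch below strips a few common English suffixes. This is a deliberately naive, hypothetical stemmer and not an established algorithm such as the Porter stemmer; it only shows how different forms of a verb collapse into one token.

```python
# Suffixes are tried in this order; this is a naive assumption, not a full rule set.
SUFFIXES = ("ing", "ed", "s")


def naive_stem(word):
    """Strip one common suffix if at least three characters remain."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


forms = ["talk", "talks", "talked", "talking"]
print({naive_stem(form) for form in forms})  # {'talk'}
```

A stemmer this simple makes mistakes on words such as “running”, which becomes “runn”, so a real implementation would probably rely on an existing stemming library.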

== Threads ==

To get more out of single messages, multiple messages can be coupled into threads. This lets the program analyze a conversation instead of a single message. Within a conversation the topic does not change much, so a single message may address a topic that is not literally stated in that message. Looking at the context, i.e. the other messages, usually reveals the topic that the message addresses. This is why threads are a great tool for analyzing messages.

The next question is when messages should be coupled into a thread. The most important property that the program takes into account is time: messages that fall in the same time interval are coupled. This is a very basic but good implementation. The second property is the sender of a message. When the same person sends several messages right after each other, the chances are high that those messages address the same topic, so they should be put into one thread.
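The two coupling rules can be sketched as a single pass over the messages in time order. The <code>Message</code> type and the two gap values are assumptions made for this illustration; the prototype may use different fields and limits.

```python
from dataclasses import dataclass


@dataclass
class Message:
    timestamp: float  # seconds since the start of the chat
    sender: str
    text: str


def build_threads(messages, max_gap=300.0, same_sender_gap=900.0):
    """Group messages into threads based on time and sender.

    A message joins the current thread when it arrives within max_gap seconds
    of the previous message, or within the larger same_sender_gap when it
    comes from the same sender. Both limits are hypothetical values.
    """
    threads = []
    for message in sorted(messages, key=lambda m: m.timestamp):
        if threads:
            last = threads[-1][-1]
            gap = message.timestamp - last.timestamp
            limit = same_sender_gap if message.sender == last.sender else max_gap
            if gap <= limit:
                threads[-1].append(message)
                continue
        threads.append([message])
    return threads


chat = [
    Message(0, "A", "Shall we meet tomorrow?"),
    Message(60, "B", "Sure"),
    Message(700, "B", "At ten?"),
    Message(2000, "A", "Did anyone finish the report?"),
]
print([len(thread) for thread in build_threads(chat)])  # [3, 1]
```

The third message arrives more than five minutes after the second, but it is still added to the thread because it comes from the same sender.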

The paper suggests the use of K-means or NMF to cluster the messages. K-means works well when the clusters are hyper-spherical, which is not the case for the clustering of messages in this research. In addition, for both K-means and NMF the number of clusters has to be predefined, whereas the number of message clusters is not known before clustering.

A third clustering algorithm is hierarchical clustering. This algorithm starts by giving every instance its own cluster and then keeps combining clusters until it converges.

This works well for this implementation because hierarchical clustering does not need a predefined number of clusters. However, a depth limit has to be specified, which could be a disadvantage for this implementation.

Clustering based on time is fairly easy because every timestamp is a number, so the program can cluster on the distance between messages using the Euclidean distance or another distance function, where the distance between two clusters is the difference between their mean times. Clustering based on who sent the message is harder. Contacts cannot be clustered with the Euclidean or a similar distance measure, so a different method was needed that works with categories instead of numbers. This method is described below.
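A minimal sketch of hierarchical clustering on timestamps is shown below. It uses the difference between cluster means as the distance, as described above, and stops merging once the closest pair of clusters is further apart than <code>max_distance</code>. This stopping threshold is an assumption that stands in for the depth limit; the prototype may limit the merging differently.

```python
def cluster_by_time(timestamps, max_distance):
    """Agglomerative clustering of timestamps using the distance between means."""
    clusters = [[t] for t in sorted(timestamps)]

    def mean(cluster):
        return sum(cluster) / len(cluster)

    while len(clusters) > 1:
        # The clusters stay ordered in time, so the closest pair is always adjacent.
        gaps = [
            mean(clusters[i + 1]) - mean(clusters[i])
            for i in range(len(clusters) - 1)
        ]
        closest = min(range(len(gaps)), key=gaps.__getitem__)
        if gaps[closest] > max_distance:
            break
        # Merge the two closest clusters into one.
        clusters[closest:closest + 2] = [clusters[closest] + clusters[closest + 1]]
    return clusters


print(cluster_by_time([0, 10, 20, 1000, 1010, 5000], max_distance=100))
# [[0, 10, 20], [1000, 1010], [5000]]
```

The number of clusters is not given in advance; it follows from the data and the chosen threshold.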

=== Distance function for clustered categories ===

To compute a reasonable distance measure for categories that are uniformly distributed, meaning that the individual differences are equal, inspiration was taken from the Levenshtein distance. The Levenshtein distance itself is not quite what is desired for categories such as the sender, because it also takes order and length into account. If the order or length differs between two clusters containing the same two senders, their distance could be greater than the distance between two clusters of equal length with different senders.

The next step was to sketch multiple clusters with different lengths and sender configurations. Some general rules were established to get an idea of which cluster pairs should receive a higher distance than others. For example, two equal clusters should have a distance of 0 and two completely different clusters should have a distance of 1. The cluster ABB was then compared with the clusters AB, AC and C, with the desired order of increasing distance being AB, AC, C. The distance is a fraction whose denominator is the total number of messages in the two clusters and whose numerator is the number of messages, counted over both clusters, whose sender does not occur in the other cluster. As can be seen in the table below, this gives the desired result. To balance this distance function with the other distance functions, a multiplication factor has been added that scales the distance depending on the importance of the clustering category.

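<pre>
clusterDistance(c1, c2):
    # messages in each cluster whose sender does not occur in the other cluster
    diffNumber = #{m in c1 : sender(m) not in c2} + #{m in c2 : sender(m) not in c1}
    # total number of messages in both clusters
    totalNumber = #{c1} + #{c2}
    return diffNumber / totalNumber
</pre>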

{| class="wikitable" border="1" style="border-collapse:collapse"
|-
!
! A
! B
! C
! AB
! AC
! ABB
|-
| A || 0 || 1 || 1 || 1/3 || 1/3 || 2/4
|-
| B || x || 0 || 1 || 1/3 || 1 || 1/4
|-
| C || x || x || 0 || 1 || 1/3 || 4/4
|-
| AB || x || x || x || 0 || 2/4 || 0/5
|-
| AC || x || x || x || x || 0 || 3/5
|-
| ABB || x || x || x || x || x || 0
|}
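The distance function can be written out in Python as a sketch. It interprets a cluster as the sequence of senders of its messages and counts the messages whose sender does not occur in the other cluster. This interpretation is inferred from the description and the pairwise values, and the <code>weight</code> parameter stands for the multiplication factor mentioned above.

```python
def cluster_distance(c1, c2, weight=1.0):
    """Distance between two non-empty clusters given as sequences of senders."""
    senders1, senders2 = set(c1), set(c2)
    # Messages whose sender does not occur in the other cluster.
    diff_number = sum(1 for sender in c1 if sender not in senders2)
    diff_number += sum(1 for sender in c2 if sender not in senders1)
    total_number = len(c1) + len(c2)
    return weight * diff_number / total_number


abb = ["A", "B", "B"]
for other in (["A", "B"], ["A", "C"], ["C"]):
    print(other, cluster_distance(abb, other))
```

For the cluster ABB this gives distances of 0, 3/5 and 1 to AB, AC and C respectively, which is the desired order of increasing distance.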

== Classic Naive Bayes ==

In order to design a new technique to classify the relevance of messages, it is necessary to first look at established techniques that approximate the goal of a WhatsApp spam filter. The first technique that comes to mind is the use of Bayes classifiers.

Naïve Bayes classifiers are a popular technique for e-mail filtering. Typically spam is filtered using a bag-of-words technique, where words are used as tokens to calculate, according to Bayes' theorem, the probability that an e-mail is spam or not spam (ham).

[[File:Bayes_rule.png|thumb|right|800px|Bayes Rule. source(https://www.analyticsvidhya.com/blog/2017/09/naive-bayes-explained/)]]

To demonstrate how a naïve Bayes spam filter might work, consider a database of X spam messages and 2X ham messages. The task is to classify new e-mails as they arrive, based on the existing messages.

Since there are twice as many ham messages as spam messages, a new (still unobserved) message is twice as likely to be ham as spam. In Bayesian terms, this probability is known as the prior probability. Priors are based solely on previous observations.

With the priors formulated, the program is ready to classify a new message. The message is broken up into words and each word is run through the conditional probability table of all words in the database. Through this process the likelihood of the message being spam or ham is calculated.

Finally, the posterior probability of the message belonging to either class is calculated, and the message is assigned to the class with the higher posterior.

Words have a certain probability of occurring in either spam or ham. The filter does not know these probabilities in advance; it needs to be trained first so that they can be built up. For instance, the spam probability of words like “Sex” or “Nigerian” is generally higher than that of the names of family members and friends.

Once the agent is trained, the likelihood functions are used to compute the probability that an e-mail with a particular set of words belongs to either the spam or the ham class.
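The steps above (priors, word likelihoods and posterior) can be sketched as a small classifier. The class and method names are illustrative, Laplace smoothing is added as an assumption so that unseen words do not produce a probability of zero, and the training messages are made up for the example.

```python
import math
from collections import Counter


class NaiveBayes:
    """Minimal bag-of-words naive Bayes classifier for spam and ham."""

    LABELS = ("spam", "ham")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.message_counts = Counter()

    def train(self, words, label):
        self.message_counts[label] += 1
        self.word_counts[label].update(words)

    def log_posterior(self, words, label):
        """Unnormalized log posterior: log prior plus log likelihood of each word."""
        total_messages = sum(self.message_counts.values())
        score = math.log(self.message_counts[label] / total_messages)
        vocabulary = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_words = sum(self.word_counts[label].values())
        for word in words:
            # Laplace smoothing (an assumption of this sketch).
            count = self.word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        return score

    def classify(self, words):
        return max(self.LABELS, key=lambda label: self.log_posterior(words, label))


classifier = NaiveBayes()
classifier.train(["win", "money", "now"], "spam")
classifier.train(["meeting", "tomorrow", "at", "noon"], "ham")
classifier.train(["lunch", "tomorrow"], "ham")
print(classifier.classify(["win", "money"]))         # spam
print(classifier.classify(["meeting", "tomorrow"]))  # ham
```

With twice as many ham as spam messages in the training data, a message that consists only of unknown words is classified as ham, which reflects the role of the prior. The sketch assumes that at least one message of each class has been seen during training.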

One of the biggest advantages of Bayesian spam filtering is that the filter can be trained for each user, creating a personal spam filter. This training is possible because the spam a user receives correlates with that user's activities. Eventually a Bayesian spam filter will assign higher probabilities based on the user's patterns. This property makes a Bayesian classifier particularly attractive for WhatsApp spam filtering, as the types of messages received vary widely between users of the app. Bayesian classifiers might also assign accurate probabilities to messages received from different groups, as the group name can be used as a token as well.

== Research questions ==

=== Feedback before using the prototype ===

The easiest and probably most obvious way to get personal data from the user is to give them a form to fill in. This form would consist of some general questions such as: “Are you a student?”, “If so, where are you studying?”, “Do you consider positively reinforcing messages important? (think of a confirmation or a compliment)” and so on. The advantage of such a form is that the program would already be personalized when it starts doing its job. The program would also need less feedback from the user while it improves during use, because it has already received a lot. The disadvantage of such a form is that the program makes an assumption based on each answer. For example, when the user says that football is one of his or her hobbies, the program takes a list of keywords for football and gives the user a notification when one of those keywords is sent. But it might be the case that the user plays football as a hobby and is not interested in what happens in the Champions League at all. Another disadvantage of using a form might be that the user does not want to take the time to fill it in.

Furthermore, when the user fills in that he or she likes everything in the form, the program will not do anything. Considering this, the choice has been made not to include a form at the beginning.

=== Contact biases ===

Considering the contacts of the user is a very important aspect of making the program more personal. This can be done in a couple of different ways.

The first option is to label a contact with a tag, for example “Peer”, “Teacher” or “Brother”. In reality there would be a lot of different tags. When a contact does not fit any of the existing tags, the user would be able to create a new tag and give that tag an importance rating. The user would assign a tag to a sender only once, the first time a message from that sender is received. The advantage of this is that the program can use the information from the tag to get a better view of the importance of a message. The biggest disadvantage is that the user would have to do a lot of tagging in the beginning.

The other option is to consider whether the user has the contact in his or her phone and to assign a priority to the contact accordingly. There are three priorities for a contact: low, medium and high. When the number of the contact is already in the phone of the user, the contact is set to medium priority. When the contact's number is not in the phone of the user, the contact is set to low. The user is able to manually adjust the priority of a contact in the user interface. This solution would be good because the user does not have to do a lot of work in the beginning. In addition, the program would be able to consider the contact when determining the importance of a message, and the user would be able to customize the priorities when desired.

Taking both options into consideration, the choice has been made to implement the second option, because the advantages of being able to customize when desired and not having to do a lot of work are decisive.

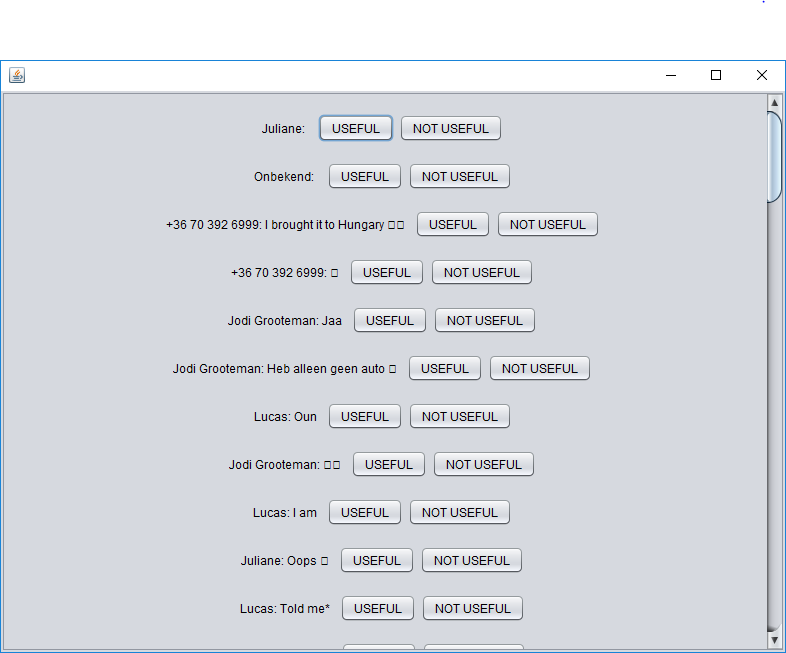

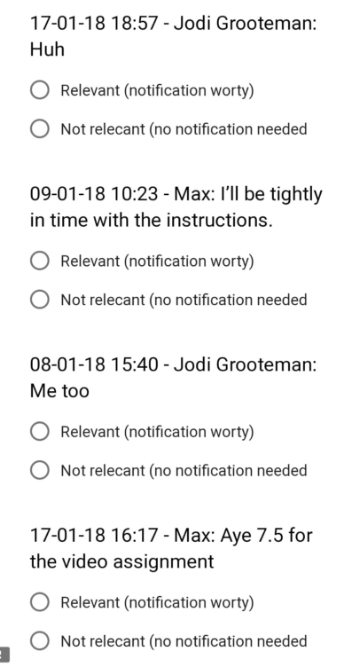

=== Feedback while using prototype ===

Getting feedback from the user is always important to consider. Most users are more satisfied when something or someone cares about their opinion. From a use perspective, a feature that takes the opinion of the user into account would be a great addition. The question then arises how this can best be done.

The easiest thing to do would be to give the user the option, after every message, to indicate whether or not the message was useful. This option would be easier to implement but rather annoying for the user. The program would get a lot of information to learn from and would probably be able to filter better.

On the other hand, the user would have to give a lot of feedback. Imagine getting 100 messages in an hour in a group chat. The user would then be asked 100 times whether or not the program did well. This is a huge disadvantage that outweighs the advantages of this solution.

The next solution to this problem requires significantly less feedback from the user. The program uses different algorithms to classify the messages. When the different algorithms do not give a matching answer, the user is asked for feedback. In this way the program can learn from the cases that are unclear to it, and the user does not have to give a lot of feedback. Because the program improves itself, it would also ask for less feedback over time. This solution should in theory work much better than the first one. Therefore the choice has been made to implement this solution. It fits the use aspect really well, as the user gives feedback without being annoyed by the amount of feedback that has to be given.

=== Communicate with the university infrastructure ===

In the designed prototype, the only action of the agent that impacts the user is showing or hiding messages. However, it could prove advantageous to have the agent operate in more ways than just that. If, for example, the user receives a lot of messages about an upcoming group meeting and the agent has access to the user's timetable, the agent could easily filter these messages into one category. The other way around, if a few group members schedule an appointment and invite the user over WhatsApp, the agent could add an event to the user's calendar. This would be a very useful tool for people who tend to forget to actually schedule planned meetings. These new ways to act could also have a downside because they introduce complexity into the agent. For example, each course a user takes would have different relevant keywords, and the dataset would then have to contain keywords for each course, which could slow the agent down.

This challenge will not be tackled in this study, but research into it could be useful for future studies.

=== Respond on the user's behalf ===

An important message does not necessarily require a very complex action. If such actions could be handed over to a robotic PA, the user would not need to spend as much time replying with simple “yes” and “no” answers. If, for example, a question is asked to the user, the PA would recognize it as such and respond appropriately. Simply put, the user is saved the time of having to respond to these messages. Furthermore, when the PA is synchronized with the agenda of the user, automatic ‘do not disturb’ or ‘unavailable right now’ messages could be sent whenever the user is prompted to reply to a message. Of course, being able to disable these features is part of the system of the PA.

Having described these features, there is something to be said about their disadvantages. A PA letting someone know that you are unavailable might lead to sharing information you never wanted to share. Perhaps an extreme example of this is someone with malicious intentions ‘pinging’ your PA to find out whether you are in a meeting, which could signal that you are not home.

Another flaw of a PA is that it is not personal enough. A PA responding to messages might make the sender feel like he or she is talking to a robot instead of having a personal conversation with the person the message was intended for.

Finally, and this might be more on the user than on the PA itself, your agenda is not always fully up to date. The PA might think that you are in a meeting right now and respond with a do-not-disturb message, while in reality that meeting was cancelled yesterday and you just forgot to remove it from your agenda.

Since the overall feeling was that the disadvantages outweigh the advantages, the decision was made not to include this kind of functionality in the PA.

== User Survey ==

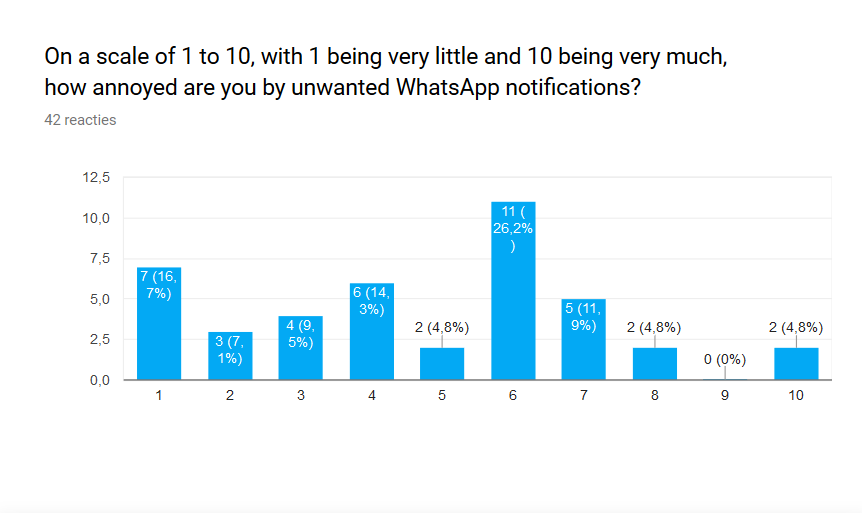

Since the team members themselves are potential users of the product, they already have a general idea of what the user wants. However, the team consists of only 6 people, and opinions may vary greatly. To get a better idea of what other users would want from the product, a survey was created. The survey was sent to other students of TU/e, so almost all responses are from other students. This can influence the results, but since the product is also targeted at these students, the responses still seem to be representative of the potential users. 42 people filled in the survey. The survey itself can be found here: [[Survey Regarding WhatsApp Spam Filtering]]

The goal of the first question was to see how big the problem that the team tries to solve actually is. The number of people who are not very annoyed by WhatsApp notifications is quite large: 20 out of the 42 people (47.6%) answered with a 4 or lower. This is the same number of people that answered with a 6 or higher. Overall it can be concluded that the problem, although smaller than initially thought, is indeed present to a certain degree among students of the TU/e. The full result can be seen below:

[[File:Result1.png|thumb|center|upright=3.0|The results of question 1]]

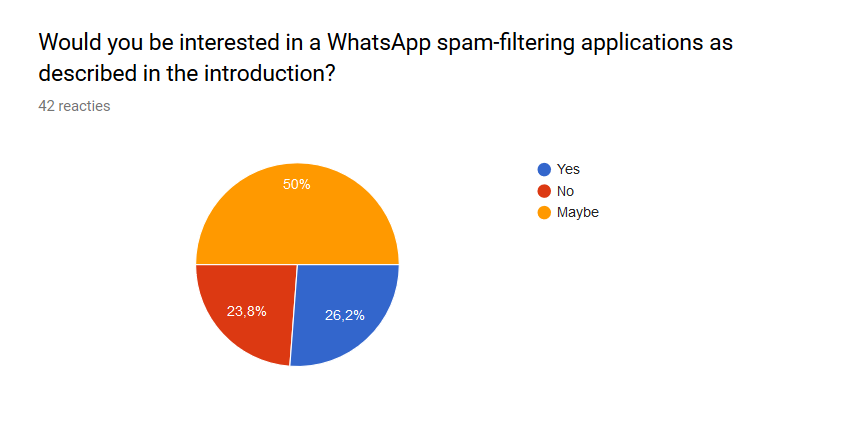

Next, respondents were asked how interested they were in the presented solution to the problem. Most people (21 out of the 42 people, 50%) said that they would maybe use the application. 11 people (26.2%) answered yes and 10 people (23.8%) answered no. If half of the people who answered maybe and all of the people who answered yes ended up using the product, roughly half of the respondents would use the product. Therefore it can be concluded that there is a market for the product.

[[File:Result2.png|thumb|center|upright=3.0|The results of question 2]]

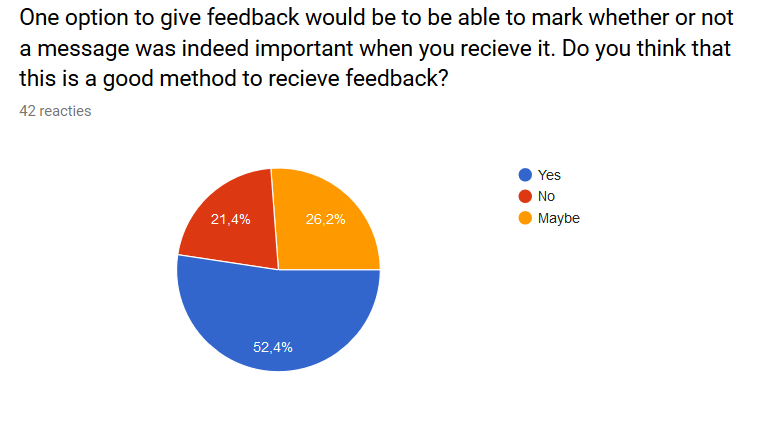

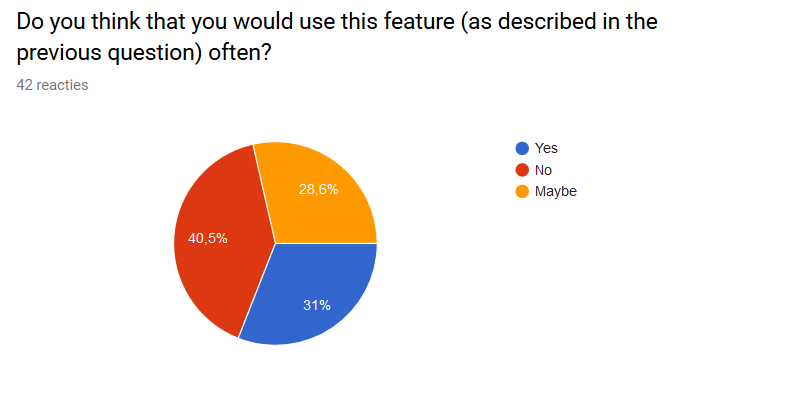

In questions 3 and 4 the respondents were asked what they thought about the presented idea for receiving feedback from the user, which is required to personalize the application. Many people (22 people, 52.4%) thought that letting the user give explicit feedback to the application, by marking whether or not a message was indeed important, was a good idea. 11 people (26.2%) answered maybe and 9 people (21.4%) answered no. Surprisingly, far fewer people answered yes to the follow-up question whether they would actually use the feedback function. Here only 13 people (31%) answered yes, while the number of people who said they would probably not use the feature grew to 17 (40.5%). The remaining 12 people (28.6%) answered maybe. So although many people liked the idea, it is questionable whether it will generate enough feedback to fully personalize the application, as is desirable.

[[File:Result3.png|thumb|center|upright=3.0|The results of question 3]]

[[File:Result4.png|thumb|center|upright=3.0|The results of question 4]]

To end the survey, an open question was presented to the respondents, where they could write feedback, tips or other general remarks regarding the problem. Many people mentioned that WhatsApp already have functions to manage notifications. Although this is indeed true, it does not fully solve this problem, since when you mute a chat, you will receive no notifications of the chat at all, even when an important message is send in that chat. Others mentioned that other programs have options were the sender of the message can mark a message as important. This solution however imposes 2 problems: first of it lays the work of marking a message as important not by the receiver, but by the sender. Secondly, what one finds important differs from person to person. When a sender marks a message as important, the receiver might not find it important at all, or he might want to see a message that was not marked as important by the sender. | |||

Some responders also came with other useful feedback. First of it was suggested to not only let the users give feedback whether or not a message marked as important was indeed important, but also whether or not a message marked as unimportant was indeed unimportant. This way the application can also learn when it misses something important. It was also suggested to use a scale to give feedback instead of a yes or no question, to get a better understanding of how important a message was. Next up was the suggestion to use implicit feedback instead of explicit. This can be done by checking for example how long it took the user to read the message or if the user ignored the notification, and whether or not the user responded to the message. | |||

Many people also mentioned privacy in their response. They were concerned about WhatsApp (or the application) filtering messages for them, as a form of censorship. They also mentioned that they did not want other people or WhatsApp to know what they found important. This is a legitimate concern, and it should be clearly documented how the application uses this information and who can access it.

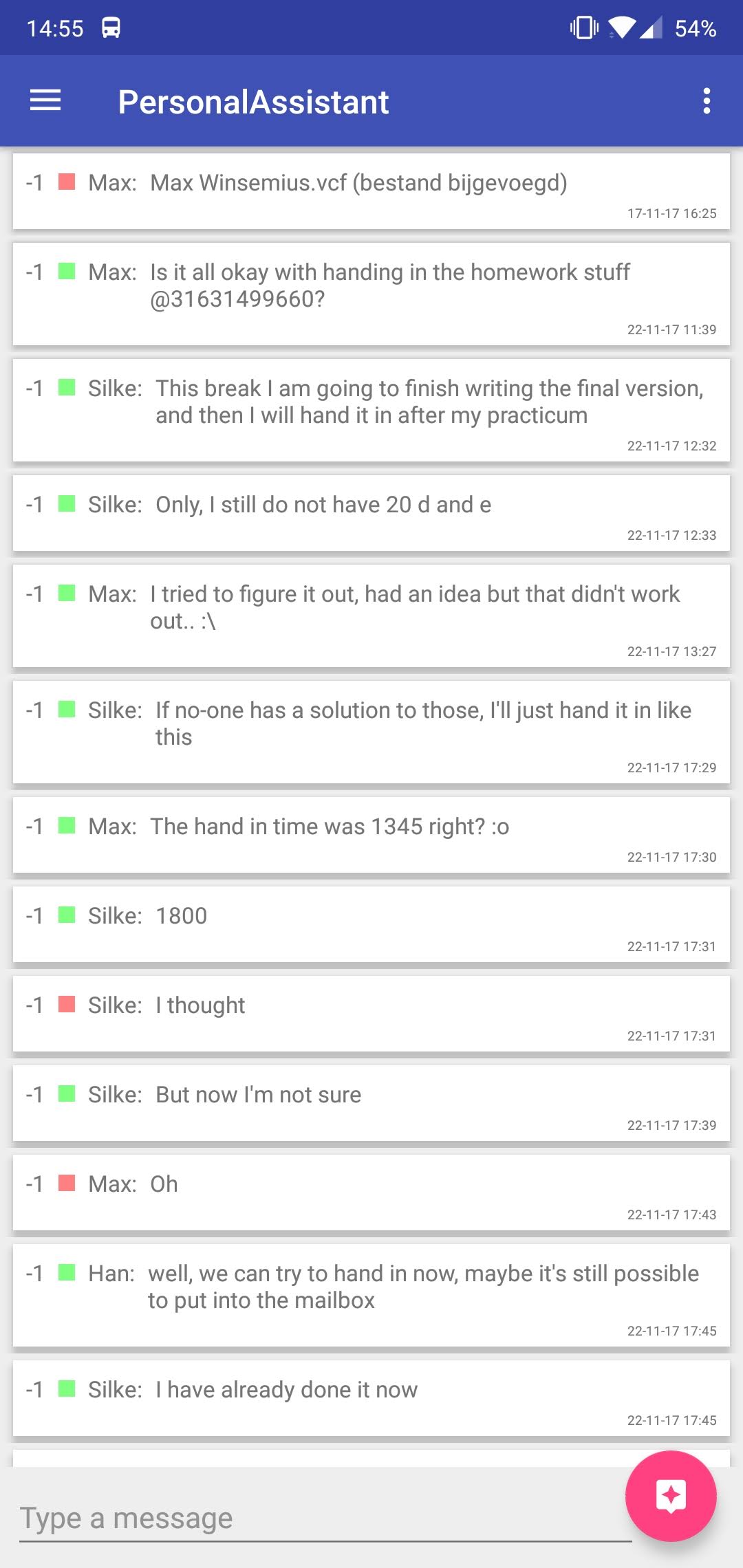

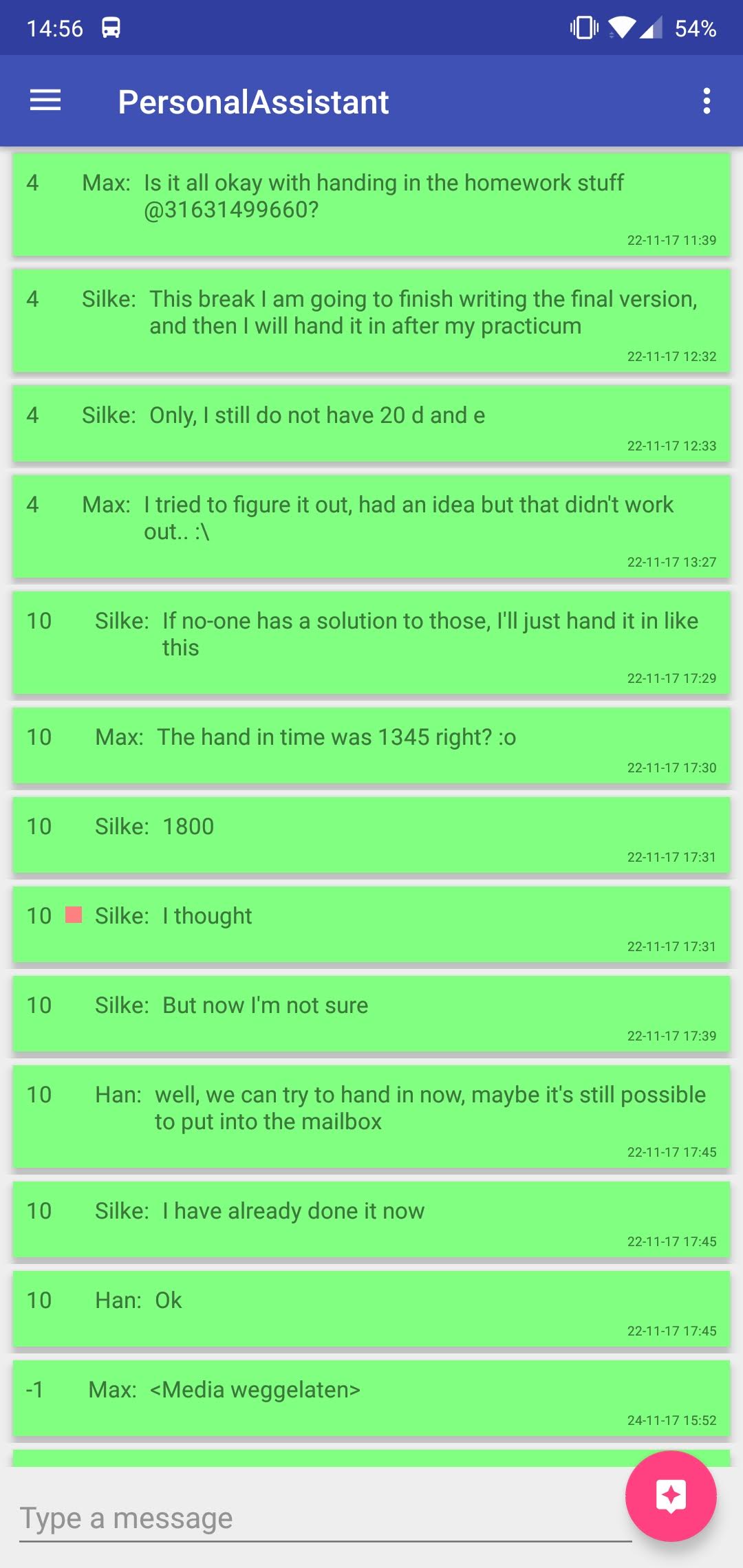

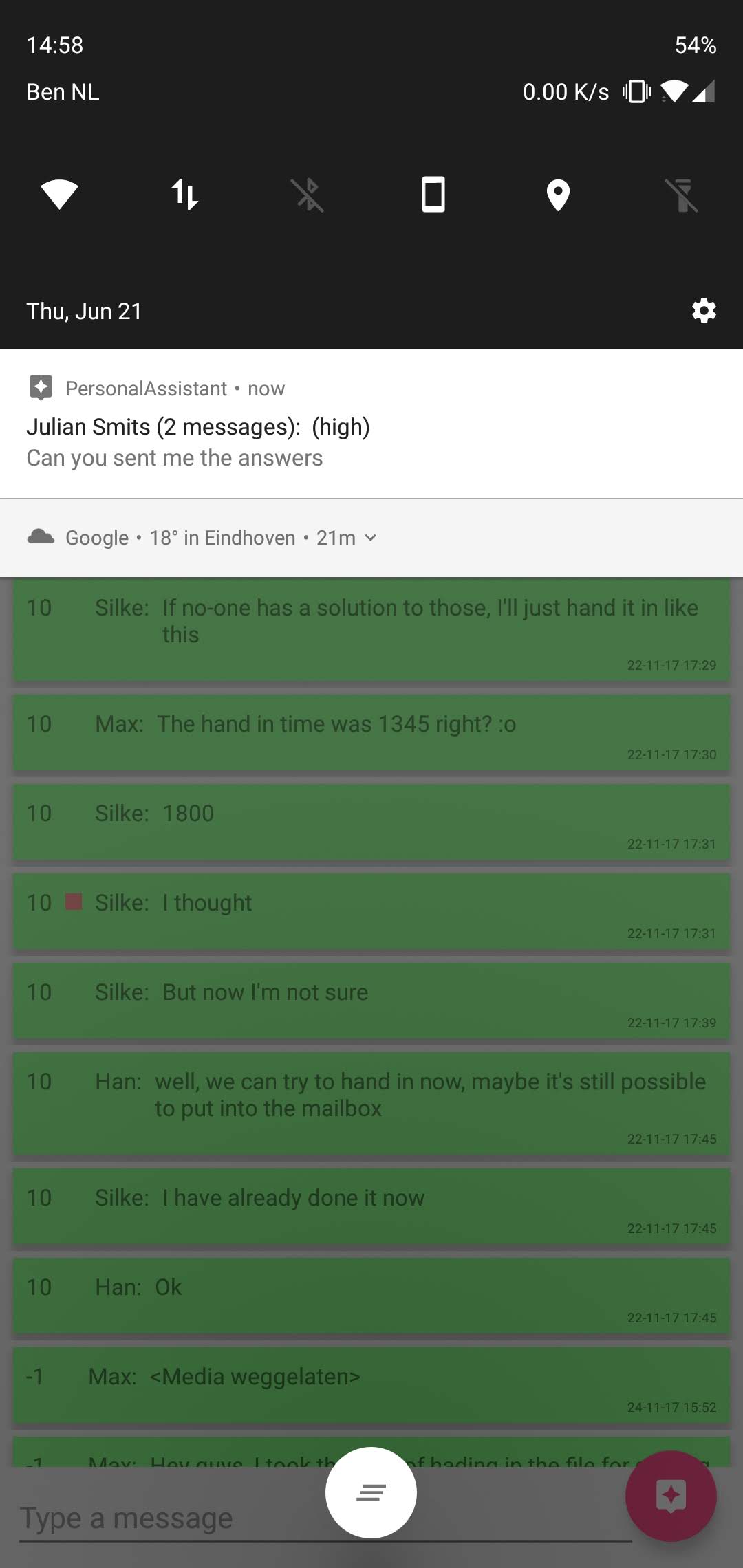

== Prototype progress ==


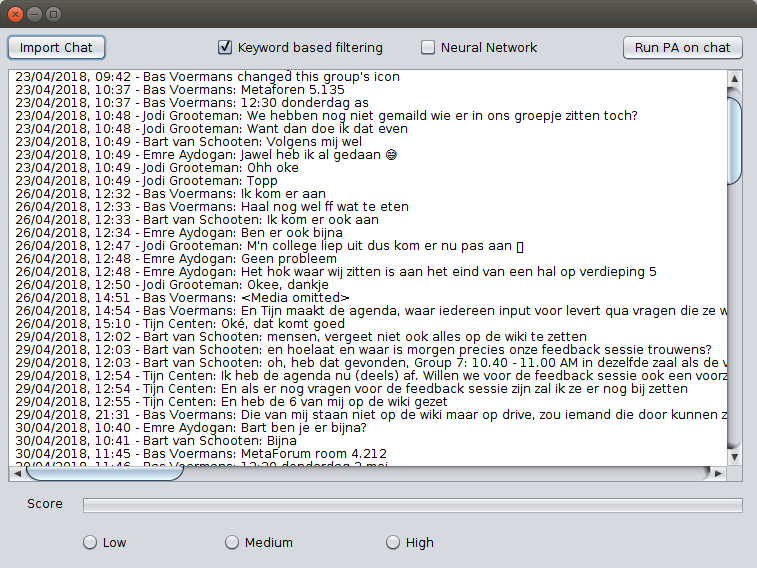

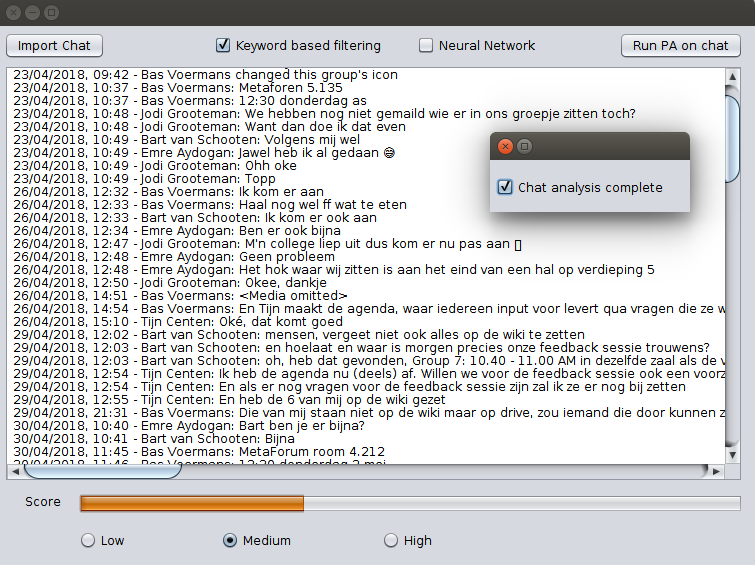

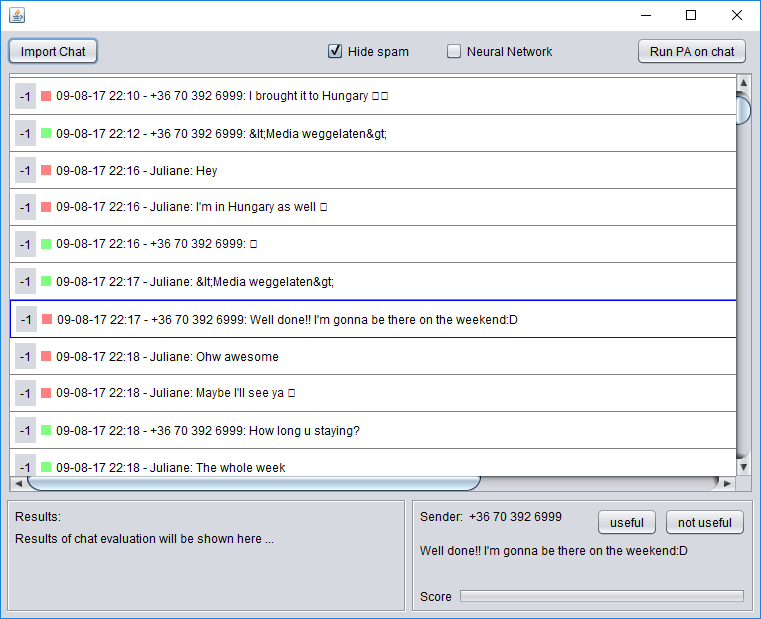

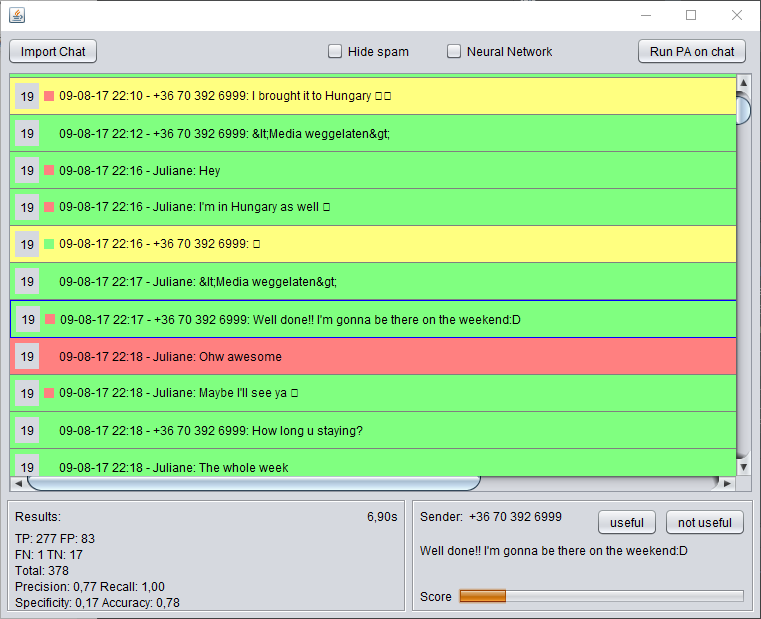

'''GUI Design'''

To provide a pleasant way of interacting with the PA prototype, a GUI was designed in parallel with the actual implementation of the PA.

{|
| [[File:GUI-1.png|thumb|upright=3|center|The GUI when it has started up and imported some text file]]
| [[File:GUI-2.png|thumb|upright=3|center|After the user has pressed the 'Run PA on chat' button]]
|}

For the first iteration the functionality of the GUI was kept fairly limited. The user can import chats as .txt files through the file manager of the OS, and the GUI then shows them in a text area. The user can then choose which filters to apply to the chat. The last bit of interactivity the GUI offers is the button that runs the PA on the imported chat with the selected filters. The analysis behind this button has yet to be implemented in a future version of the prototype.

What follows is a pop-up dialog that notifies the user that the analysis has completed successfully. Furthermore, a random score is generated and shown to the user to give an idea of how the GUI should function.

=== Iteration 2 ===

* Start on user preferences
* Preprocessing and normalization
* Bayesian network evaluation
* Recurrent Neural Network evaluation
* Prototype structure improvements
* Create results summary
* Reading of chat data

'''User preferences'''

To let users express their own preferences regarding the degree of notification blocking, thread creation and feedback, a user preferences object is created that stores all preferences of the user. These preferences and settings can either be set by the user or be adjusted by learning from feedback. The thread depth factor is an example of the latter, since the depth itself means nothing to the user. The user can, however, indicate that messages are coupled wrongly, in the sense that too few or too many messages are coupled. With this answer the depth factor can be fine-tuned. Furthermore, the preferences of the categorization layer are already present. These indicate whether, for example, a question should always be blocked, always be allowed, or be processed automatically by the evaluation layer. This preference can be useful when many questions are asked in a group that are not aimed at the user. The user preferences object can also keep track of preferences that other layers introduce in the future.
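The preferences object described above could look roughly like the following sketch. All names here (<code>UserPreferences</code>, <code>Policy</code>, <code>Category</code>, <code>ThreadFeedback</code>) are hypothetical and not taken from the prototype. The starting value of the depth factor, the 10% adjustment step and the assumption that a larger factor couples more messages are arbitrary choices for illustration.

```java
import java.util.EnumMap;
import java.util.Map;

/** Hypothetical sketch of a user preferences object; all names are illustrative. */
class UserPreferences {
    /** What the categorization layer should do with a message category. */
    enum Policy { ALWAYS_BLOCK, ALWAYS_ALLOW, EVALUATE }

    /** Example categories; the prototype's real categories may differ. */
    enum Category { QUESTION, STATEMENT }

    /** Feedback a user can give about how messages were coupled into threads. */
    enum ThreadFeedback { TOO_FEW_COUPLED, TOO_MANY_COUPLED, FINE }

    private final Map<Category, Policy> policies = new EnumMap<>(Category.class);
    private double threadDepthFactor = 1.0; // assumed starting value

    /** Categories without an explicit preference go to the evaluation layer. */
    public Policy policyFor(Category category) {
        return policies.getOrDefault(category, Policy.EVALUATE);
    }

    public void setPolicy(Category category, Policy policy) {
        policies.put(category, policy);
    }

    public double threadDepthFactor() {
        return threadDepthFactor;
    }

    /** Nudges the depth factor based on feedback; the 10% step is an assumption. */
    public void applyThreadFeedback(ThreadFeedback feedback) {
        switch (feedback) {
            case TOO_FEW_COUPLED:
                threadDepthFactor *= 1.1; // assumed: larger factor couples more messages
                break;
            case TOO_MANY_COUPLED:
                threadDepthFactor *= 0.9;
                break;
            default:
                break; // FINE: leave the factor unchanged
        }
    }
}
```

The depth factor is only changed through feedback and never set directly, which matches the idea that the user does not need to understand the value itself.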

'''Preprocessing and normalization'''

The preprocessing done in the program consists of several parts, each described below. Some parts have to be done after classifying what kind of sentence a message is. The removal of punctuation is an example, since punctuation is important for question classification. Because of this the preprocessing is split into two layers: the preprocessing layer and the normalization layer. The normalization layer is executed after the classification layer. As a result, all preprocessing is still done before the importance of a message is determined, but normalization happens after the sentence type has been classified.

Messages contain a lot of meaningless words. They give the sentence structure but do not influence the meaning of the message. These words can thus be removed from the sentence before the importance of the message is analyzed. Examples are “the”, “a”, “an” and “to”. All these words are put into an array, and the sentence is checked against this array. Any of these words found in the sentence are deleted, and the remainder of the sentence is propagated to the next step of the preprocessing.
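A minimal sketch of this stop-word step is given below. The class name <code>StopWordFilter</code> is hypothetical, the word list contains only the four examples from the text, and matching whole words case-insensitively is an assumption about the intended behaviour.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical sketch of stop-word removal; not the prototype's actual class. */
class StopWordFilter {
    // Illustrative list only: the four examples named in the text.
    private static final Set<String> STOP_WORDS =
            new HashSet<>(Arrays.asList("the", "a", "an", "to"));

    /** Returns the sentence without stop words (whole words, case-insensitive). */
    public static String removeStopWords(String sentence) {
        List<String> kept = new ArrayList<>();
        for (String word : sentence.trim().split("\\s+")) {
            if (!word.isEmpty() && !STOP_WORDS.contains(word.toLowerCase())) {
                kept.add(word);
            }
        }
        return String.join(" ", kept);
    }
}
```

For example, <code>StopWordFilter.removeStopWords("shall we go to the beach")</code> yields <code>"shall we go beach"</code>.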

Translating verbs to their base form is part of the normalization and makes evaluation a lot easier. The sentence is first split into words, each word is then checked for being a verb, and each verb is put in its base form. A library named JAWS is used for this. In combination with the WordNet dictionary, JAWS can convert a verb to its base form. The next question is how to find the verbs in a sentence. This could be done with the Stanford POS tagger, which tags every word in a sentence with its word type, for example noun or verb. However, the tagger needs a lot of computation time because its database is very big, so it is not implemented in the prototype. The alternative turned out to be simple: when every word in a sentence is processed with the JAWS library, only the words that are actually verbs are changed, and the other words are left untouched. After processing, the only thing left to do is to join the words back into a sentence so that they form a message again.
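The "process every word and rejoin" approach can be sketched as follows. This sketch does not use the JAWS API. The dictionary lookup is replaced by a toy map (<code>toyLookup</code>) so that the example stays self-contained, and the class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.UnaryOperator;

/** Hypothetical sketch: apply a base-form lookup to every word, then rejoin. */
class VerbNormalizer {
    /** Words that the lookup does not recognise are passed through unchanged. */
    public static String normalize(String sentence, UnaryOperator<String> baseForm) {
        String[] words = sentence.trim().split("\\s+");
        for (int i = 0; i < words.length; i++) {
            words[i] = baseForm.apply(words[i]);
        }
        return String.join(" ", words);
    }

    /** Toy stand-in for a dictionary-backed lookup; NOT the JAWS library. */
    public static UnaryOperator<String> toyLookup() {
        Map<String, String> verbs = new HashMap<>();
        verbs.put("went", "go");
        verbs.put("attending", "attend");
        verbs.put("was", "be");
        return word -> verbs.getOrDefault(word.toLowerCase(), word);
    }
}
```

Because unknown words are returned as they are, no separate verb detection is needed, which is the point made in the paragraph above.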

Translating numbers to words is also part of the preprocessing and is done with an existing class that translates numbers to words. What remains is to detect where the numbers are in a sentence and to replace them by calling the function in the existing class. The numbers are found with a regular expression in Java, which makes it easy to find digits or special characters. Once the numbers are replaced, the sentence is returned and passed to the next step of the preprocessing.
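The detect-and-replace step could be sketched as below. The existing number-to-words class is not shown in the text, so <code>toWords</code> is a minimal stand-in that only covers 0 to 99. Longer digit sequences are left untouched here, which is a simplification of this sketch and not necessarily how the prototype behaves.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical sketch of replacing digit sequences by words using a regex. */
class NumberReplacer {
    private static final Pattern DIGITS = Pattern.compile("\\d+");
    private static final String[] SMALL = {
        "zero", "one", "two", "three", "four", "five", "six", "seven", "eight", "nine",
        "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen", "sixteen",
        "seventeen", "eighteen", "nineteen"};
    private static final String[] TENS = {
        "", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy", "eighty", "ninety"};

    /** Minimal stand-in for the existing number-to-words class; valid for 0..99 only. */
    static String toWords(int n) {
        if (n < 20) {
            return SMALL[n];
        }
        String tens = TENS[n / 10];
        return n % 10 == 0 ? tens : tens + " " + SMALL[n % 10];
    }

    /** Replaces every run of one or two digits by its spelled-out form. */
    public static String replaceNumbers(String sentence) {
        Matcher matcher = DIGITS.matcher(sentence);
        StringBuffer out = new StringBuffer();
        while (matcher.find()) {
            String digits = matcher.group();
            // Numbers outside the stand-in's range are kept as digits.
            String replacement = digits.length() <= 2
                    ? toWords(Integer.parseInt(digits))
                    : digits;
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }
}
```

With this sketch, "Any of you know the answer to question 5?" becomes "Any of you know the answer to question five?".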

Most punctuation in a sentence does not indicate the importance of a message, so it is removed before the message is evaluated. Punctuation is, however, important for determining whether a message is a question, for example. Therefore this step is done after classifying the message but before evaluating its importance. Removing the punctuation is a simple task, because a regular expression in Java can remove all punctuation at once. When this is done the sentence is propagated to the next step of the program.
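As an illustration of why the order matters, the sketch below checks for a question before stripping punctuation. The class name and the very simple <code>looksLikeQuestion</code> check are hypothetical. The regular expression <code>\p{Punct}</code> matches ASCII punctuation only, so other punctuation characters would need a different pattern.

```java
/** Hypothetical sketch of the punctuation step of the normalization layer. */
class PunctuationRemover {
    /** Naive question check that must run BEFORE punctuation is removed. */
    public static boolean looksLikeQuestion(String sentence) {
        return sentence.trim().endsWith("?");
    }

    /** Removes ASCII punctuation and collapses the whitespace that is left over. */
    public static String removePunctuation(String sentence) {
        return sentence.replaceAll("\\p{Punct}", "")
                       .replaceAll("\\s+", " ")
                       .trim();
    }
}
```

A message such as "???" is recognised as a question first and becomes an empty string after normalization, so the classification result has to be stored before this step runs.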

'''Bayesian network evaluation'''

The first evaluation method that has been created is the naïve Bayesian network evaluation. It is built with the Classifier4j library and is implemented as follows. The evaluation class consists of a Bayesian classifier and a word data source. During training, the text of every message is taught to the classifier together with whether the message is spam or not. The classifier processes the text and keeps track, for each word, of the number of occurrences in spam and in non-spam messages. A message is evaluated by computing the probability that it is spam from these stored occurrence counts. Storing and loading the word data source is not supported by the library and was therefore implemented separately. The storing, loading and training functionality is elaborated on below. The results of the Bayesian network are shown in the table below. They seem very promising, since all but one sentence are classified correctly. This is, however, still on dummy messaging data. In the next iteration real data from a WhatsApp group, classified by hand, will be used. The results are generated by a network with the following structure: first a preprocessing layer, followed by a thread layer, a categorization filter and a normalization layer, and then the evaluation layer with the Bayesian evaluation method. The sentence that is wrongly classified as spam is “Is this good?”, which is similar to the sentence “real good message”. More training data would resolve the issue, but could also make the network perform worse, since WhatsApp messages are generally very short and lack good grammatical structure.
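The idea of counting word occurrences per class and turning them into a spam probability can be sketched with a generic naïve Bayes classifier. This is not the Classifier4j implementation, whose internal formula may differ. The class <code>TinyNaiveBayes</code>, the add-one smoothing and the tokenizer are assumptions made to keep the example self-contained.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Generic word-count naive Bayes sketch; NOT the Classifier4j implementation. */
class TinyNaiveBayes {
    private final Map<String, Integer> spamCounts = new HashMap<>();
    private final Map<String, Integer> goodCounts = new HashMap<>();
    private final Set<String> vocabulary = new HashSet<>();
    private int spamWords = 0;
    private int goodWords = 0;
    private int spamMessages = 0;
    private int goodMessages = 0;

    /** Updates the per-class word counts with one labelled message. */
    public void train(String message, boolean isSpam) {
        if (isSpam) { spamMessages++; } else { goodMessages++; }
        for (String word : tokenize(message)) {
            vocabulary.add(word);
            if (isSpam) {
                spamCounts.merge(word, 1, Integer::sum);
                spamWords++;
            } else {
                goodCounts.merge(word, 1, Integer::sum);
                goodWords++;
            }
        }
    }

    /** Probability that the message is spam, computed in log space with add-one smoothing. */
    public double spamProbability(String message) {
        int total = spamMessages + goodMessages;
        // Smoothed priors avoid log(0) when a class has not been trained yet.
        double logSpam = Math.log((spamMessages + 1.0) / (total + 2.0));
        double logGood = Math.log((goodMessages + 1.0) / (total + 2.0));
        int vocabSize = Math.max(vocabulary.size(), 1);
        for (String word : tokenize(message)) {
            logSpam += Math.log((spamCounts.getOrDefault(word, 0) + 1.0) / (spamWords + vocabSize));
            logGood += Math.log((goodCounts.getOrDefault(word, 0) + 1.0) / (goodWords + vocabSize));
        }
        // P(spam | message) = 1 / (1 + exp(logGood - logSpam))
        return 1.0 / (1.0 + Math.exp(logGood - logSpam));
    }

    private static String[] tokenize(String message) {
        String cleaned = message.toLowerCase().replaceAll("[^a-z0-9\\s]", " ").trim();
        return cleaned.isEmpty() ? new String[0] : cleaned.split("\\s+");
    }
}
```

A message would then be treated as spam when <code>spamProbability</code> exceeds some threshold, for instance 0.5. With very short messages, a single shared word such as "good" can dominate the result, which is consistent with the misclassification discussed above.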

{| class="wikitable" border="1" style="border-collapse:collapse"
|-
! Time
! Sender
! Message
! Classified answer
! Expected answer
|-
| 0 || John || test || spam || spam
|-
| 1 || John || spam || spam || spam
|-
| 2 || Jane || this is spam || spam || spam
|-
| 3 || Jan || real message || spam || spam
|-
| 4 || Henk || real good message || spam || spam
|-
| 5 || John || Is this good? || spam || good
|-
| 6 || Henk || hello! shall we go to the beach? || spam || spam
|-
| 10 || John || Are you attending the lecture? || good || good
|-
| 11 || Jane || Yes, I am! || good || good
|-
| 12 || Henk || Yes, I am too! || good || good
|-
| 14 || Jan || No, I am on holiday || good || good
|-
| 16 || John || When will you be back? || good || good
|-
| 19 || Jan || I will be back tomorrow || good || good
|-
| 21 || Jane || Any of you know the answer to question 5? || good || good
|-
| 30 || Jane || ??? || spam || spam
|}

{| class="wikitable" border="1" style="border-collapse:collapse" cellpadding="4"
|-
| TP || 7 || FP || 0
|-
| FN || 1 || TN || 7
|-
| Total || 15
|-
| Precision || 1.0
|-
| Recall || 0.88
|-
| Specificity || 1.0
|-
| Accuracy || 0.93
|}
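The summary values follow directly from the four confusion counts. The sketch below recomputes them, assuming that "good" is treated as the positive class, which is consistent with the single false negative being the "good" message that was classified as spam. The class name <code>ConfusionMetrics</code> is hypothetical.

```java
/** Hypothetical helper that derives the summary metrics from confusion counts. */
class ConfusionMetrics {
    /** Fraction of messages classified as positive that really are positive. */
    public static double precision(int tp, int fp) {
        return tp / (double) (tp + fp);
    }

    /** Fraction of truly positive messages that were classified as positive. */
    public static double recall(int tp, int fn) {
        return tp / (double) (tp + fn);
    }

    /** Fraction of truly negative messages that were classified as negative. */
    public static double specificity(int tn, int fp) {
        return tn / (double) (tn + fp);
    }

    /** Fraction of all messages that were classified correctly. */
    public static double accuracy(int tp, int tn, int fp, int fn) {
        return (tp + tn) / (double) (tp + tn + fp + fn);
    }
}
```

With TP = 7, FP = 0, FN = 1 and TN = 7, recall is 7/8 = 0.875, which is reported as 0.88, and accuracy is 14/15 ≈ 0.93.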

'''Recurrent Neural Network evaluation'''

Furthermore, Recurrent Neural Networks (RNN) have been examined for their viability as the second evaluation method. While training might be time-consuming, neural networks have proven able to analyze sequences of text or music very well. For this iteration a neural network library has been chosen and a partial implementation has been made. The chosen library is DeepLearning4j, which supports a wide variety of neural networks for the Java programming language. While the library has a steep learning curve, there are some good examples, including one that implements an RNN that categorizes reviews as positive or negative. The library works by setting up a network that expects so-called word vectors. From these vectors the network can be trained and can evaluate whether a message is spam or not. To feed messages into the network, they first need to be transformed into word vectors and then mapped onto the data structure that the library expects. The example uses a database of word vectors pre-trained by Google, the Google News dataset, which ‘contains 300-dimensional vectors for 3 million words and phrases.’ This file is however 1.5 GB in size and requires a lot of memory to run the prototype. During testing, 3 GB of memory gave an out-of-memory exception, and since it is desirable that the prototype can run locally on mobile devices, this option is not viable. The next option was to create a custom word-to-vector database aimed at the grammatical structure of messages. Since the structure is simpler and the vocabulary much smaller in these messages, this custom database can be much smaller. For now the database is trained on a piece of text called ‘warpiece’, since the reading and classifying of chats is not done yet.
With this custom dataset the network is able to run, and only one of the fifteen messages could not be mapped to vectors, probably the message consisting only of question marks. The network can now also be trained and evaluated, but further work is needed to get results out of the network.
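A rough estimate shows why the pre-trained vectors do not fit in 3 GB. The sketch below assumes that all vectors are held in memory uncompressed as 32-bit floats, which is an assumption about how the vectors are loaded and ignores any overhead for the words themselves. The class name <code>EmbeddingMemory</code> is hypothetical.

```java
/** Hypothetical back-of-the-envelope estimate for a dense word-vector table. */
class EmbeddingMemory {
    /** Bytes needed for `words` vectors of `dims` 32-bit floats each. */
    public static long denseFloatBytes(long words, int dims) {
        return words * dims * Float.BYTES;
    }
}
```

For 3 million words with 300 dimensions this gives 3,000,000 × 300 × 4 = 3,600,000,000 bytes, or about 3.6 GB, before any other memory use of the prototype is counted.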

'''Prototype structure improvements'''