Mobile Robot Control 2024 Ultron:Solution 1: Difference between revisions

No edit summary |

|||

| (23 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

==='''Exercise 1: the art of not crashing'''=== | |||

Hao | [[File:Execise1 Hao.png|thumb|350px|right|Solution of Hao]] | ||

====Solution of Hao==== | |||

#Boolean Flag: | #Boolean Flag: | ||

#*A boolean flag named 'move ' is used to control whether the robot should continue moving or stop. | #*A boolean flag named 'move ' is used to control whether the robot should continue moving or stop. | ||

#* It is initialized to 'true', indicating that the robot is initially allowed to move. | #*It is initialized to 'true', indicating that the robot is initially allowed to move. | ||

# Obstacle Detection: | #Obstacle Detection: | ||

#*The program continuously reads laser sensor data inside the control loop. | #* The program continuously reads laser sensor data inside the control loop. | ||

#*If any distance measurement from the laser scan is less than 0.2, an obstacle is detected. | #*If any distance measurement from the laser scan is less than 0.2, an obstacle is detected. | ||

# Stopping Action: | #Stopping Action: | ||

#*When an obstacle is detected, the 'move ' flag is set to 'false'. | #*When an obstacle is detected, the 'move ' flag is set to 'false'. | ||

#*Setting 'move ' to 'false' indicates that the robot should stop moving. | #*Setting 'move ' to 'false' indicates that the robot should stop moving. | ||

| Line 15: | Line 15: | ||

#Control Loop Condition: | #Control Loop Condition: | ||

#*The control loop continues executing as long as the robot is properly connected 'io.ok()' and the 'move ' flag is 'true'. | #*The control loop continues executing as long as the robot is properly connected 'io.ok()' and the 'move ' flag is 'true'. | ||

#*Once the 'move' flag is set to 'false' , the control loop stops executing, effectively halting the robot's motion.[[File: | #*Once the 'move' flag is set to 'false' , the control loop stops executing, effectively halting the robot's motion. | ||

====Solution of Chuyu==== | |||

# '''Initialization''': | |||

#* [[File:Exercise1 chuyu.png|thumb]]It initializes the IO object to interact with the robot's hardware. | |||

#* It creates a Rate object to control the loop frequency. | |||

# '''Flag Initialization''': | |||

#* It initializes a boolean flag named 'move' to 'true', indicating that the robot is initially allowed to move. | |||

# '''Control Loop''': | |||

#* The loop runs as long as two conditions are met: | |||

#** The robot is properly connected (io.ok() returns true). | |||

#** The 'move' flag is true. | |||

#* Within the loop, it reads laser sensor data. | |||

#* It checks for obstacles by iterating through the laser scan data and determining if any distance measurement is less than 0.5. | |||

#* If an obstacle is detected, it adjusts the robot's speed based on the closest obstacle distance: | |||

#** If the closest obstacle is further than 0.5 meters away, it moves at normal speed (0.2 units). | |||

#** If the closest obstacle is between 0.4 and 0.5 meters away, it decreases speed slightly. | |||

#** If the closest obstacle is between 0.3 and 0.4 meters away, it decreases speed further. | |||

#** If the closest obstacle is between 0.2 and 0.3 meters away, it decreases speed even more. | |||

#** If the closest obstacle is less than 0.2 meters away, it stops the robot and sets the 'move' flag to false to exit the loop. | |||

#* If no obstacles are detected, it continues moving forward at normal speed. | |||

#* The loop sleeps for the remaining time to maintain a fixed loop frequency. | |||

[[File:Exercise1 Nan.png|350px|thumb|Solution of Nan]] | |||

====Solution of Nan==== | |||

I defined a vector whose elements represent the distance of a "'''safe outer contour'''" from the LiDAR sensor in all directions that the sensor can detect. The robot stops immediately when this safe outer contour hits an object, i.e., when any element of the <code>emc::LaserData::rangs</code> vector is smaller than the corresponding element of the safe outer contour vector. | |||

The "safe outer contour" is defined as the actual outer contour of the robot plus the safe braking distance of the robot in all directions. In the current implementation, this outer contour is simply set as a circle and it is assumed that the safe braking distance is the same in all directions and independent of speed, i.e., a segment of a circular arc centered on the sensor with a fixed value of radius. And after experimentation, the radius was set to 0.15 meters. | |||

However, theoretically, the real outer contour data can be determined and a mapping model of speed and safe braking distance can be constructed. Thus, the accurate safe outer contour can be calculated at runtime. | |||

==== Solution of Yidan ==== | |||

Using LiDAR data in each loop to determine and adjust the robot's behavioural strategy. We iteratively check if any obstacle distance is below the set safe distance (0.5 metres) by iterating over these distance values. If this is found, a stop command is sent to the basic controller, otherwise the robot will keep going. | |||

* Robot needs to collect distance measurements from LIDAR for real time; | |||

* The robot will need to respond when any detected obstacle distance is below this threshold; | |||

* Based on the results of the analysis, the robot adjusts its travel speed or modifies its behaviour in order to avoid obstacles. | |||

==== Solution of Liz ==== | |||

Set the safe distance to 0.5 meters, initialize a flag parameter 'obstacle' to false. In every control loop, read the LiDAR data to detect the distance between robot and obstacle. If this distances is less than safe distance, then assume the robot is close to the obstacle, set the 'obstacle' to ture. | |||

Based on the value of 'obstacle', determine the next movement of the robot. If 'obstacle' equals ture, send stop commond to the robot to avoid crashing. Else send move commond to make robot move forward in the speed of 0.2m/s. | |||

==== Solution of Aori ==== | |||

The main idea behind this 'don't crash' system is to ensure that robots operating at different speeds can navigate safely in their environment. The velocity of the robots should be taken into account, as the time needed to decelerate and stop varies depending on their velocity. Here's how the method works: | |||

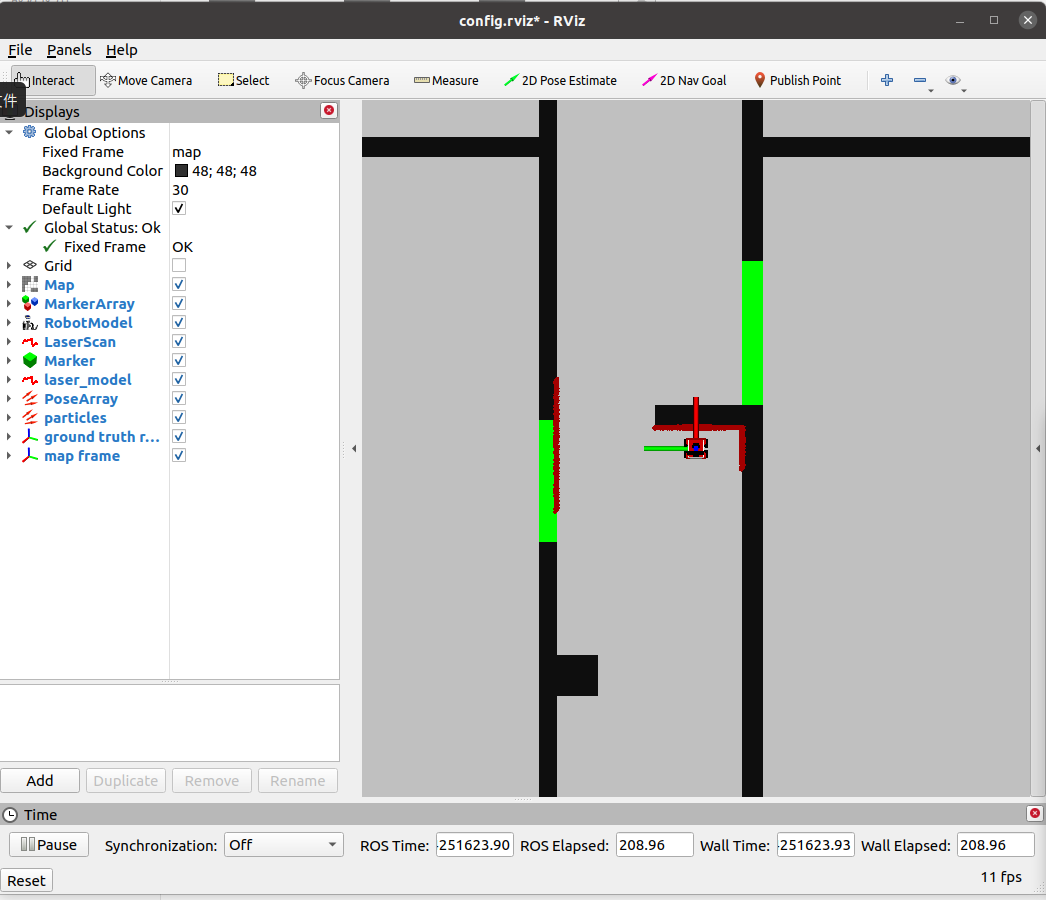

[[File:Screenshot from 2024-05-17 01-05-14.png|thumb|386x386px|Solution of Aori]] | |||

1. Laser Data Processing: | |||

The Laser data processing part are written in the `checkForObstaclesTooClose` function. The function iterates through the ranges of the laser data. | |||

For each range: | |||

* If the distance to an obstacle is less than a predefined threshold (`safe_near_obstacle`), the function sets a flag to indicate that an obstacle is near. | |||

* If the distance is even shorter, less than another predefined threshold (`safe_collision`), it sets the flag to indicate a stop. | |||

* If no obstacle is found within the near obstacle threshold, the function return its initial flag to indicate no obstacle is near. | |||

2. State Machine: | |||

The method uses a state machine to manage the robot's behavior based on the detected obstacles. The state machine defines three states: `NORMAL`, `NEAR_OBSTACLE`, and `COLLISION`. | |||

The robot's state is updated based on the flag returned by the `checkForObstaclesTooClose` function and the current velocity of the robot: | |||

* The robot initializes in the `NORMAL` state. If it detects a near obstacle and its velocity is higher than certain value, it transitions to the `NEAR_OBSTACLE` state. | |||

* If the robot is in the `NEAR_OBSTACLE` state and obstacles are cleared, it transitions back to the `NORMAL` state. If the stop flag raised, the robot transitions to the `COLLISION` state. | |||

* If the robot in the 'COLLISION' state finds its surroundings clear, it transitions back to the 'NORMAL' state for navigation. | |||

3. Actions Based on State: | |||

Depending on the current state of the robot, different actions are taken: | |||

* In the `NORMAL` state, the robot moves forward at a regular velocity to continue its navigation. | |||

* In the `NEAR_OBSTACLE` state, the robot decelerates to a lower velocity to safely navigate around the detected obstacle. | |||

* In the `COLLISION` state, the robot stops completely to prevent crashing into the obstacle. | |||

The details of functions, velocity calculation, and control flow can be found in the "dontcrash.cpp" file. | |||

---- | |||

=== '''Exercise 2: Testing your don't crash''' === | |||

==== Solution of Hao ==== | |||

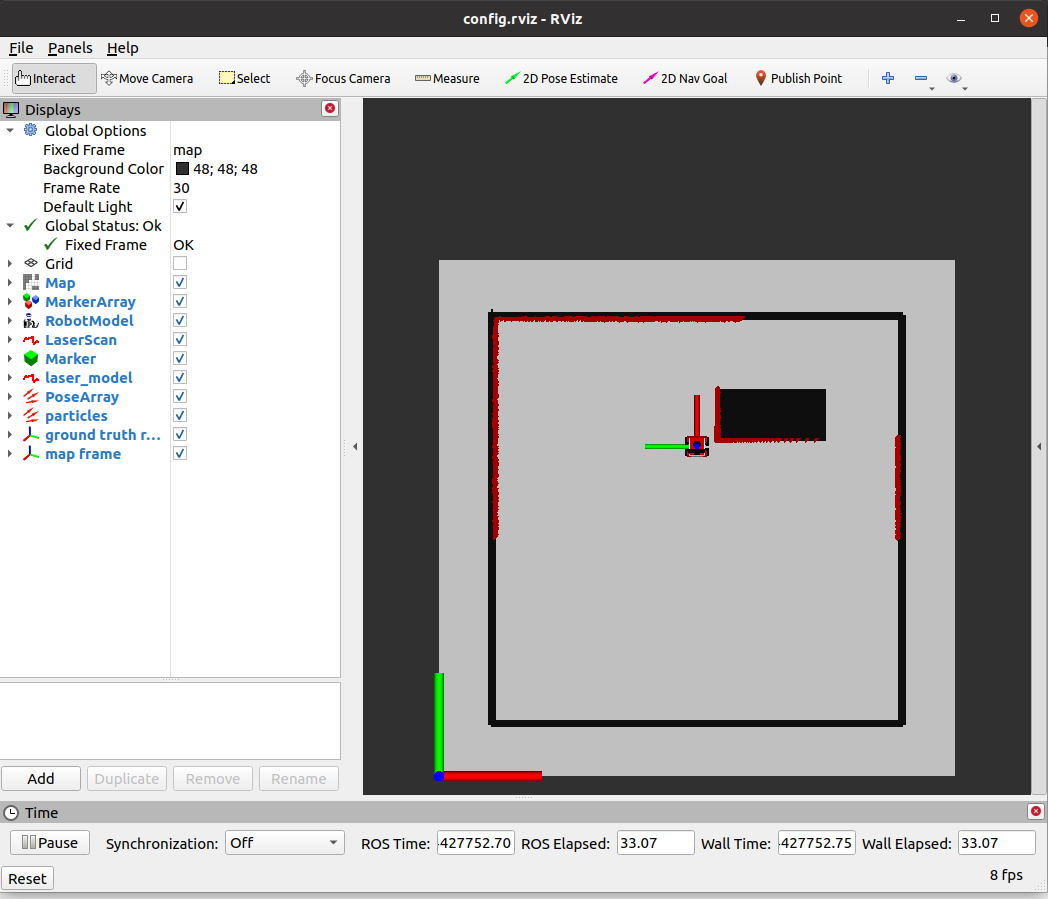

#[[File:Exercise2-1 Hao.png|thumb]]In map1 the robot can stop as the designed purpose. | |||

#[[File:Exercise2-2 Hao.png|thumb]]In map2 the robot stopped when detected the wall on the right side with distance<=0.2 | |||

[[File:Exercise2 2chuyu.png|thumb]] | |||

==== Solution of Chuyu: ==== | |||

#In map 1, robot stops when it is 0.2 meters from an obstacle | |||

#In map 2, robot stops when it is 0.2 meters from an obstacle | |||

[[File:Exercise2 1chuyu.png|thumb]] | |||

==== Solution of Nan ==== | |||

As shown in [https://gitlab.tue.nl/mobile-robot-control/mrc-2024/ultron/-/blob/7209bfb49a3fe39dc93b37dd9090f75895f165ed/1_introduction/test/map1.mp4 map1.mp4]and [https://gitlab.tue.nl/mobile-robot-control/mrc-2024/ultron/-/blob/7209bfb49a3fe39dc93b37dd9090f75895f165ed/1_introduction/test/map2.mp4 map2.mp4], the robot correctly detected obstacles in front and to the side. It stopped in time before the collision and was not disturbed by the side obstacles. | |||

---- | |||

=== '''Comparison among Different Approaches''' === | |||

We found several overlaps among our approaches so we tested three representative approaches to see if they works well. During the tests on robot HERO, we found: | |||

* The appraoches of Hao and Nan work well. | |||

Between the working solutions, | |||

Hao | * Hao,s approach is filled with clear logic and low computation, which is easy to understand and simple conditional judgment is needed. | ||

* Nan's approach is filled with more precise control, which defines a safe outer contour for more precise control of the robot's obstacle avoidance behavior in different directions. | |||

Overall, Hao's approach is more suitable for simple, fast-response scenarios, while Nan's approach is more suitable for complex scenarios requiring precise control and dynamic adjustment. To make the algorithm more flexible and more predictive, we finally choose '''Nan's approach''' as a basis for follow-up. | |||

Latest revision as of 12:31, 17 May 2024

Exercise 1: the art of not crashing

Solution of Hao

- Boolean Flag:

- A boolean flag named 'move ' is used to control whether the robot should continue moving or stop.

- It is initialized to 'true', indicating that the robot is initially allowed to move.

- Obstacle Detection:

- The program continuously reads laser sensor data inside the control loop.

- If any distance measurement from the laser scan is less than 0.2, an obstacle is detected.

- Stopping Action:

- When an obstacle is detected, the 'move ' flag is set to 'false'.

- Setting 'move ' to 'false' indicates that the robot should stop moving.

- Additionally, a stop command 'io.sendBaseReference(0, 0, 0)' is sent to the base controller immediately after detecting the obstacle.

- Control Loop Condition:

- The control loop continues executing as long as the robot is properly connected 'io.ok()' and the 'move ' flag is 'true'.

- Once the 'move' flag is set to 'false' , the control loop stops executing, effectively halting the robot's motion.

Solution of Chuyu

- Initialization:

- It initializes the IO object to interact with the robot's hardware.

- It creates a Rate object to control the loop frequency.

- Flag Initialization:

- It initializes a boolean flag named 'move' to 'true', indicating that the robot is initially allowed to move.

- Control Loop:

- The loop runs as long as two conditions are met:

- The robot is properly connected (io.ok() returns true).

- The 'move' flag is true.

- Within the loop, it reads laser sensor data.

- It checks for obstacles by iterating through the laser scan data and determining if any distance measurement is less than 0.5.

- If an obstacle is detected, it adjusts the robot's speed based on the closest obstacle distance:

- If the closest obstacle is further than 0.5 meters away, it moves at normal speed (0.2 units).

- If the closest obstacle is between 0.4 and 0.5 meters away, it decreases speed slightly.

- If the closest obstacle is between 0.3 and 0.4 meters away, it decreases speed further.

- If the closest obstacle is between 0.2 and 0.3 meters away, it decreases speed even more.

- If the closest obstacle is less than 0.2 meters away, it stops the robot and sets the 'move' flag to false to exit the loop.

- If no obstacles are detected, it continues moving forward at normal speed.

- The loop sleeps for the remaining time to maintain a fixed loop frequency.

- The loop runs as long as two conditions are met:

Solution of Nan

I defined a vector whose elements represent the distance of a "safe outer contour" from the LiDAR sensor in all directions that the sensor can detect. The robot stops immediately when this safe outer contour hits an object, i.e., when any element of the emc::LaserData::rangs vector is smaller than the corresponding element of the safe outer contour vector.

The "safe outer contour" is defined as the actual outer contour of the robot plus the safe braking distance of the robot in all directions. In the current implementation, this outer contour is simply set as a circle and it is assumed that the safe braking distance is the same in all directions and independent of speed, i.e., a segment of a circular arc centered on the sensor with a fixed value of radius. And after experimentation, the radius was set to 0.15 meters.

However, theoretically, the real outer contour data can be determined and a mapping model of speed and safe braking distance can be constructed. Thus, the accurate safe outer contour can be calculated at runtime.

Solution of Yidan

Using LiDAR data in each loop to determine and adjust the robot's behavioural strategy. We iteratively check if any obstacle distance is below the set safe distance (0.5 metres) by iterating over these distance values. If this is found, a stop command is sent to the basic controller, otherwise the robot will keep going.

- Robot needs to collect distance measurements from LIDAR for real time;

- The robot will need to respond when any detected obstacle distance is below this threshold;

- Based on the results of the analysis, the robot adjusts its travel speed or modifies its behaviour in order to avoid obstacles.

Solution of Liz

Set the safe distance to 0.5 meters, initialize a flag parameter 'obstacle' to false. In every control loop, read the LiDAR data to detect the distance between robot and obstacle. If this distances is less than safe distance, then assume the robot is close to the obstacle, set the 'obstacle' to ture.

Based on the value of 'obstacle', determine the next movement of the robot. If 'obstacle' equals ture, send stop commond to the robot to avoid crashing. Else send move commond to make robot move forward in the speed of 0.2m/s.

Solution of Aori

The main idea behind this 'don't crash' system is to ensure that robots operating at different speeds can navigate safely in their environment. The velocity of the robots should be taken into account, as the time needed to decelerate and stop varies depending on their velocity. Here's how the method works:

1. Laser Data Processing:

The Laser data processing part are written in the `checkForObstaclesTooClose` function. The function iterates through the ranges of the laser data.

For each range:

- If the distance to an obstacle is less than a predefined threshold (`safe_near_obstacle`), the function sets a flag to indicate that an obstacle is near.

- If the distance is even shorter, less than another predefined threshold (`safe_collision`), it sets the flag to indicate a stop.

- If no obstacle is found within the near obstacle threshold, the function return its initial flag to indicate no obstacle is near.

2. State Machine:

The method uses a state machine to manage the robot's behavior based on the detected obstacles. The state machine defines three states: `NORMAL`, `NEAR_OBSTACLE`, and `COLLISION`.

The robot's state is updated based on the flag returned by the `checkForObstaclesTooClose` function and the current velocity of the robot:

- The robot initializes in the `NORMAL` state. If it detects a near obstacle and its velocity is higher than certain value, it transitions to the `NEAR_OBSTACLE` state.

- If the robot is in the `NEAR_OBSTACLE` state and obstacles are cleared, it transitions back to the `NORMAL` state. If the stop flag raised, the robot transitions to the `COLLISION` state.

- If the robot in the 'COLLISION' state finds its surroundings clear, it transitions back to the 'NORMAL' state for navigation.

3. Actions Based on State:

Depending on the current state of the robot, different actions are taken:

- In the `NORMAL` state, the robot moves forward at a regular velocity to continue its navigation.

- In the `NEAR_OBSTACLE` state, the robot decelerates to a lower velocity to safely navigate around the detected obstacle.

- In the `COLLISION` state, the robot stops completely to prevent crashing into the obstacle.

The details of functions, velocity calculation, and control flow can be found in the "dontcrash.cpp" file.

Exercise 2: Testing your don't crash

Solution of Hao

- In map1 the robot can stop as the designed purpose.

- In map2 the robot stopped when detected the wall on the right side with distance<=0.2

Solution of Chuyu:

- In map 1, robot stops when it is 0.2 meters from an obstacle

- In map 2, robot stops when it is 0.2 meters from an obstacle

Solution of Nan

As shown in map1.mp4and map2.mp4, the robot correctly detected obstacles in front and to the side. It stopped in time before the collision and was not disturbed by the side obstacles.

Comparison among Different Approaches

We found several overlaps among our approaches so we tested three representative approaches to see if they works well. During the tests on robot HERO, we found:

- The appraoches of Hao and Nan work well.

Between the working solutions,

- Hao,s approach is filled with clear logic and low computation, which is easy to understand and simple conditional judgment is needed.

- Nan's approach is filled with more precise control, which defines a safe outer contour for more precise control of the robot's obstacle avoidance behavior in different directions.

Overall, Hao's approach is more suitable for simple, fast-response scenarios, while Nan's approach is more suitable for complex scenarios requiring precise control and dynamic adjustment. To make the algorithm more flexible and more predictive, we finally choose Nan's approach as a basis for follow-up.