Mobile Robot Control 2023 Ultron

| Line 58: | Line 58: | ||

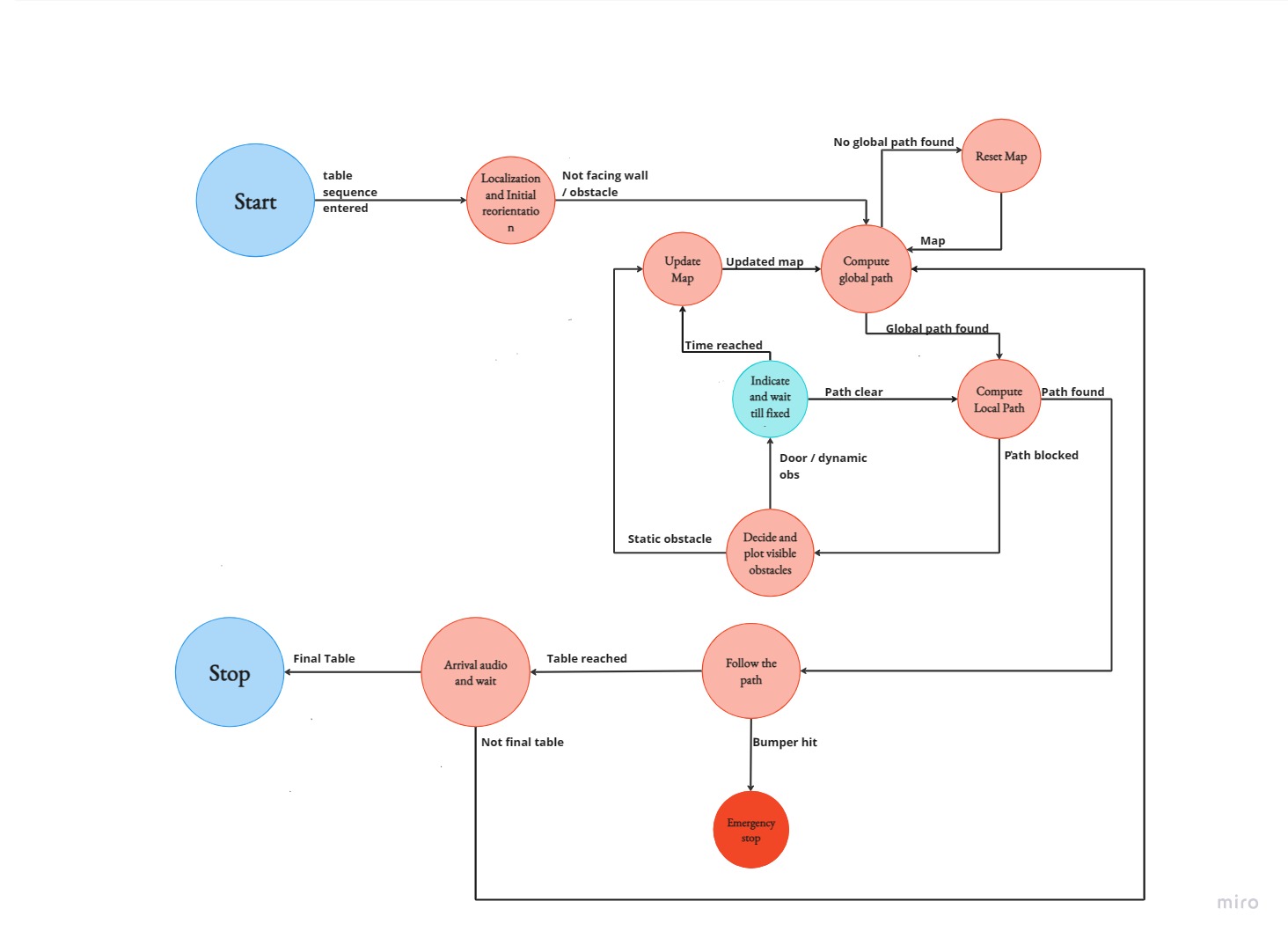

State Diagram | |||

<br /> | <br /> | ||

[[File:StateFlowDiagram1.jpg|thumb|500x500px|State Diagram|border]] | [[File:StateFlowDiagram1.jpg|thumb|500x500px|State Diagram|border]] | ||

Revision as of 12:02, 4 July 2023

Group members:

| Name | Student ID |
|---|---|
| Sarthak Shirke | 1658581 |
| Idan Grady | 1912976 |
| Ram Balaji Ramachandran | 1896067 |
| Anagha Nadig | 1830961 |
| Dharshan Bashkaran Latha | 1868950 |
| Nisha Grace Joy | 1810502 |

Design Presentation Link

Introduction

In an increasingly autonomous world, robotics plays a vital role in performing complex tasks. One such task is enabling a robot to autonomously localize itself and navigate in a restaurant environment to serve customers. While this may seem straightforward for humans, it poses several challenges for a robot. One of the main challenges is planning the best route for the robot to follow. Global path planning algorithms such as A* or Dijkstra's algorithm, which compute a lowest-cost route on a predefined map, are two examples of techniques that can be used to address this issue.
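
To make the idea of global path planning concrete, here is a minimal A* sketch on a small occupancy grid. It is an illustration only and is not taken from our code base: the grid representation, the 4-connected moves, the unit step cost, and the function name `astar` are all assumptions made for this example.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    Hypothetical helper for illustration; returns a list of (row, col) cells
    from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f, g, cell)
    came_from = {start: None}
    cost = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < cost.get(nxt, float("inf")):
                    cost[nxt] = new_g
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None

# Example: a wall with a single gap between the top and bottom rows.
demo_grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
demo_path = astar(demo_grid, (0, 0), (2, 0))
```

With the heuristic set to zero, the same loop reduces to Dijkstra's algorithm; the heuristic only changes the order in which cells are expanded.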

For local navigation, techniques like the artificial potential field method or the dynamic window approach can be used to avoid obstacles in real time and adapt to moving objects, which addresses the challenges posed by unknown and dynamic scenes.
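
As a rough illustration of the artificial potential field idea, the sketch below computes one motion step from an attractive force toward the goal and repulsive forces from nearby obstacles. It is a textbook-style example under stated assumptions, not our implementation: the point-obstacle model, the gains `k_att` and `k_rep`, the `influence` radius, and the fixed `step` length are all hypothetical.

```python
import math

def potential_field_step(pose, goal, obstacles,
                         k_att=1.0, k_rep=0.5, influence=1.0, step=0.1):
    """Move one fixed-length step along the combined force direction.

    pose, goal: (x, y) in metres; obstacles: iterable of (x, y) points.
    All gains and the influence radius are illustrative values.
    """
    x, y = pose
    # Attractive force pulls linearly toward the goal.
    fx = k_att * (goal[0] - x)
    fy = k_att * (goal[1] - y)
    for ox, oy in obstacles:
        dx, dy = x - ox, y - oy
        d = math.hypot(dx, dy)
        if 1e-9 < d < influence:
            # Repulsive force grows sharply as the obstacle gets closer
            # and vanishes at the edge of the influence radius.
            magnitude = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            fx += magnitude * dx / d
            fy += magnitude * dy / d
    norm = math.hypot(fx, fy)
    if norm < 1e-9:
        return pose  # forces cancel: the robot is stuck in a local minimum
    return (x + step * fx / norm, y + step * fy / norm)
```

The early return highlights a known weakness of this method: attractive and repulsive forces can cancel, leaving the robot stuck in a local minimum, which is one reason it is usually paired with a global planner.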

Another difficulty is localization, which an autonomous robot needs in order to determine its position and orientation. Particle filtering and Kalman filtering are two techniques that can be used to combine sensor measurements with a predetermined map, compensating for imperfections in both and adapting to real-world scenarios. The final challenge is combining localization and navigation. With the right software, a robot can identify its location on a map, plan an optimal path, and complete its task by maneuvering precisely to the designated table.

This wiki walks through our attempt to make a robot autonomously localize itself and navigate in a restaurant setting. We describe our journey from drawing up the requirements to the design decisions made along the way, and we discuss what went well and what could have been better, giving a thorough overview of the project.

The challenge and deliverables

The basic map for the challenge contains the tables with their corresponding numbers, as well as the walls and doors. During the challenge, the robot starts within an area of 1x1 meters in an arbitrary orientation. The robot then has to make its way to each of the tables, orient itself, and announce that it has arrived at the respective table before moving on to the next table in the sequence. To make things more challenging, a few unknown static and dynamic obstacles were also added to the environment. The robot does not know about these obstacles before the challenge starts, and it has to navigate around them safely without bumping into any of them.

With regard to deliverables, we presented an overview of the system, including the construction of requirements, data flow, and state diagrams to the class. Through weekly meetings with our supervisor and usage of the lab, we built towards the final assignment week by week. The culmination of our efforts was a presentation in a semi-real setting led by the teaching assistants.

The code can be found on our GitLab page: https://gitlab.tue.nl/mobile-robot-control/2023/ultron

General System Overview

- Our approach to this challenge involved carefully considering the various requirements that had to be met in order to complete it. These requirements were divided into three categories: stakeholder requirements, border requirements, and system requirements. The stakeholder (or environmental) requirements of the challenge were safety, time, and customer intimacy. The robot had to avoid obstacles and humans without bumping into them, take minimal time to complete all deliveries, and announce its arrival at the respective table.

- The border requirements were derived from the stakeholder requirements and encompassed the different strategies and considerations taken by our team to cater to each of the overarching stakeholder requirements. The system requirements/specifications gave the constraints of the robot and the environment in which it was operated. These were taken into account when trying to implement the border requirements.

The first step in tackling the Restaurant Challenge was to build the requirements based on the system specifications. This entailed carefully considering the robot and the environment in which it was used, as well as the overarching stakeholder requirements of safety, time, and customer intimacy. We were able to develop a set of requirements that would guide our efforts toward successfully completing the challenge by taking these factors into consideration.

Game plan and approach - Midterm

Requirements

Requirements are a crucial part of the design process as they define the goals and constraints of a project. By establishing clear requirements, a team can ensure that its efforts are focused on achieving the desired outcome and that all necessary considerations are taken into account. In the case of the Restaurant Challenge, we took both the system setting described in the previous section and the challenge itself into account when designing the specifications for our requirements. This allowed us to develop a set of requirements tailored to the specific needs of the challenge.

Data Flow

The data flow diagram was created based on the data available to the robot from its environment through its sensors, namely the bumper data, laser data, and odometry data, as well as the known data from the map and the input table sequence. Each of these inputs is used in different segments of the code to generate intermediate data. The odometry and laser data are used to perform localization, which in turn gives the pose (position and orientation) of the robot. The laser data is also used to detect obstacles and doors. These results are used further down the line in other processes such as local and global path planning. Overall, the smooth transfer and interaction of data between the individual parts of the code ensures that the robot moves in a predictable manner.
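
The data flow described above can be sketched as a single control cycle that turns raw sensor inputs into the intermediate data used by the planners. This is a simplified illustration, not our actual code: the type and function names (`SensorData`, `Pose`, `localize`, `obstacle_ahead`, `control_cycle`) and the 0.4 m threshold are hypothetical, and `localize` only integrates odometry as a placeholder, whereas the real localization also uses laser data and the map.

```python
import math
from dataclasses import dataclass

@dataclass
class SensorData:
    bumper_pressed: bool   # bumper data
    laser_ranges: list     # laser data: one range in metres per beam
    odom_delta: tuple      # odometry: (dx, dy, dtheta) in the robot frame

@dataclass
class Pose:
    x: float
    y: float
    theta: float

def localize(prev, sensors):
    """Placeholder pose update: rotate the odometry increment into the map frame."""
    dx, dy, dtheta = sensors.odom_delta
    c, s = math.cos(prev.theta), math.sin(prev.theta)
    return Pose(prev.x + c * dx - s * dy,
                prev.y + s * dx + c * dy,
                prev.theta + dtheta)

def obstacle_ahead(sensors, threshold=0.4):
    """Flag an obstacle when any laser beam reports a range below the threshold."""
    return any(r < threshold for r in sensors.laser_ranges)

def control_cycle(prev_pose, sensors):
    """One pass through the pipeline: sensors -> pose and obstacle flag."""
    pose = localize(prev_pose, sensors)
    blocked = obstacle_ahead(sensors)
    # In a full system, pose and blocked would feed the global and local planners.
    return pose, blocked
```

Keeping each stage as a function with explicit inputs and outputs mirrors the arrows in a data flow diagram and makes every stage testable on its own.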

State Diagram

Milestones and achievements

Simulator vs Real world

Discussion and future scope

//On what we saw and why

Conclusion