Mobile Robot Control 2023 Rosey: Difference between revisions

| (64 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

Welcome to the group page of team Rosey! | |||

==Project organisation== | |||

'''Group members table and work division''' | |||

The group identified three major components of the assignment. These are the localisation, the global and local navigation of the robot. The group additionally saw use for an overseeing role, which should ensure that the interfaces between the functions are clear. The work is divided as shown in the table below. | |||

{| class="wikitable" | {| class="wikitable" | ||

|+ | |+Group members of team Rosey | ||

!Name!! | !Name!!Student ID | ||

!Main task | |||

|- | |- | ||

|Eline Wisse||1335162 | |Eline Wisse||1335162 | ||

|Local navigation | |||

|- | |- | ||

|Lotte Rassaerts | |Lotte Rassaerts | ||

|1330004 | |1330004 | ||

|Localisation | |||

|- | |- | ||

|Marijn Minkenberg | |Marijn Minkenberg | ||

|1357751 | |1357751 | ||

|System architecture & overview | |||

|- | |- | ||

|Rainier Heijne | |Rainier Heijne | ||

|1227575 | |1227575 | ||

|Local navigation | |||

|- | |- | ||

|Tom Minten | |Tom Minten | ||

|1372300 | |1372300 | ||

|Global navigation | |||

|- | |- | ||

|Lowe Blom | |Lowe Blom | ||

|1266020 | |1266020 | ||

|Localisation | |||

|} | |||

'''Midterm presentation''' | |||

During the midterm presentation, team Rosey presented their plan of action to make the robot deliver orders in a restaurant. The slides are available here for comparison to the final product: [[File:Midterm presentation Rosey.pdf|thumb|Midterm Presentation|none]] | |||

==Introduction== | |||

The Mobile Robot Control 2023 course is centered around a project known as the Restaurant challenge. It required a combination of technical skills, teamwork, and effective communication to design and implement a robotic system capable of autonomously navigating a restaurant environment and serving customers. During the build up to the challenge, the team gained valuable experience in various areas related to robotics and computer science. | |||

Throughout the course, different algorithms and techniques for robot control were explored, such as localization, mapping, path planning, and object detection. Hands-on experience was obtained by working with the Toyota HSR robot, known as Hero, which was equipped with sensors and actuators for perception and driving tasks. This practical engagement enhanced the understanding of the fundamental principles and challenges associated with robotic systems and nearly as importantly, enabled the team to test developed algorithms for the restaurant challenge. | |||

One of the significant obstacles encountered was the presence of dynamic obstacles. Developing a robust and efficient system to detect and avoid these obstacles while successfully delivering orders to the correct tables posed a significant challenge. Additionally, making sure all the parameters were tuned correctly within the limited amount of experiment time became quite the challenge. | |||

On this wiki page, group Rosey's final restaurant challenge algorithm will be explained in detail, including shortcomings of the algorithm, and possible solutions to these problems. First, the general system architecture will be discussed, after which the three identified main components of the assignment are discussed in detail. After that, the testing hours and final challenge day are reflected upon. | |||

==System architecture and overview== | |||

This section describes how the Rosey program functions on a supervisory level. | |||

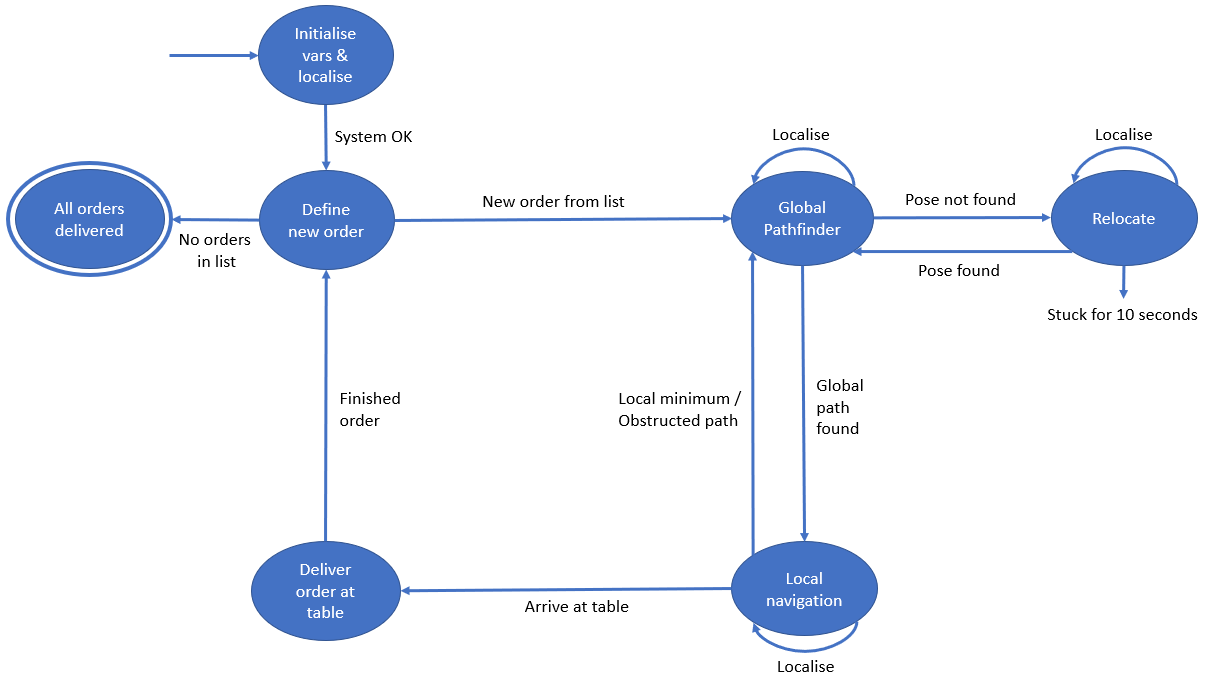

'''State diagram''' | |||

Taking the lecture on system architecture to heart, the state space diagram is used as a guideline to structure the main file. This gives a clear overview of what code is run and when, and it allows everybody to work separately on their part of the code. The state diagram that was presented in the midterm presentation has been iterated on multiple times. The aim was to simplify the states while capturing all necessary behaviours and transitions in them. See below for a second version.[[File:StateDiagramRosey.png|thumb|Intermediate version of state diagram (17-06-2023)|none]] | |||

After consideration with the team, the decision was made to go for a hierarchical design. The 'drive according to plan' state was internally becoming quite complex. Additionally, interfacing with other states like the request open door state would be easier if that state was part of the internal states in the 'drive according to plan' state. That is why two separate supervisors are made. The high-level state diagram describing the robot behaviour as a whole can be viewed below. The sub-level state diagram, describing the robot behaviour while in the 'local navigation' state, is further described under Local navigation. | |||

[[File:StateDiagram2.png|thumb|Final state diagram, used to construct the main supervisor.|none]]'''Supervisor implementation''' | |||

In the supervisor, supervisor states and transitions are defined as magic numbers. The main file contains a while loop which runs the correct state, based on the previous transition. A switch statement is employed for this. The simplest example of this is the 'define new order' state, given below: | |||

#<code>case DEFINE_NEW_ORDER:</code> | |||

#<code>{</code> | |||

#<code> ''transition'' = getOrderFromList(&orderList, &tableID);</code> | |||

#<code> if (''transition'' == NEW_ORDER)</code> | |||

#<code> {</code> | |||

#<code> state = GLOBAL_PATHFINDER;</code> | |||

#<code> std::cout << "[To state:] Global pathfinder" << std::endl;</code> | |||

#<code> }</code> | |||

#<code> else if (''transition'' == NO_ORDERS)</code> | |||

#<code> {</code> | |||

#<code> state = ALL_ORDERS_DELIVERED;</code> | |||

#<code> std::cout << "[To state:] All orders delivered" << std::endl;</code> | |||

#<code> }</code> | |||

#<code> else</code> | |||

#<code> {</code> | |||

#<code> state = ERROR_STATE;</code> | |||

#<code> error = "[LOG:] Error in DefineNewOrder";</code> | |||

#<code> }</code> | |||

#<code> break;</code> | |||

#<code>}</code> | |||

The function that is being run is the <code>getOrderFromList()</code> function, which expects a pointer to the <code>orderList</code> and to the <code>tableID</code>. The output of this function is the desired ''transition'': either a new order is defined, or no order is defined. In the former case, the next state is the global pathfinder, in the latter case, the next state is the final state, when all orders have been delivered. In case of any errors in <code>getOrderFromList()</code>, the function may return a different transition, in which case the program goes into the error state. | |||

This supervisory approach helped greatly to keep the overview. Additionally, state transitions very clearly indicated which part of the code is being run. The communication between functions was also quite smooth using this approach. The functions interacted with each other using pointers to variables declared in the main file. As an example, the localisation function updates the current estimate of the robot <code>Pose</code> on a global level. Every function using the <code>Pose</code> is then certain to have the latest updated version, and every function is certain to be accessing the same information. The same goes for variables such as the <code>pathNodeIDs</code>, <code>currentPose</code>, <code>tableID</code>, <code>laserData</code>, etc. | |||

The approach was also limiting at times. For example, it becomes slightly complicated to let a function try something 5 times before switching to a different method, without adjusting the structure of the main file. For this reason, most states have been slightly adjusted near the end of the project just to fit the needs of the functions. | |||

'''General variables''' | |||

In this section, a few general variables have been discussed. The table below explains the general variables in a bit more detail. | |||

{| class="wikitable" | |||

|+Shared variables | |||

!Variable | |||

!Type | |||

!Used by | |||

!Explanation | |||

|- | |||

|grid | |||

|Grid class | |||

|Global navigation | |||

|Class containing all node IDs, positions and the connections between them. | |||

|- | |||

|pathNodeIDs | |||

|std::vector<int> | |||

|Global navigation | |||

|List of all nodes from the currect robot position to the goal node (corresponding to a position in front of a table). | |||

|- | |||

|orderList | |||

|std::vector<int> | |||

|defineOrder, deliverOrder | |||

|List of all tables to be served. | |||

|- | |||

|tableID | |||

|int | |||

|defineOrder, deliverOrder, Global navigation | |||

|Table ID which is currently being served, taken from the orderList variable. | |||

|- | |||

|laserData | |||

|emc::LaserData | |||

|Localisation, local navigation | |||

|Is constantly updated with the most recent data from Rosey's LiDAR sensor. | |||

|- | |||

|odomData | |||

|emc::OdometryData | |||

|Localisation | |||

|Is constantly updated with the most recent data from Rosey's odometry. | |||

|- | |||

|currentPose | |||

|Pose struct | |||

|Localisation, Global navigation, local navigation | |||

|Contains the current estimate of the robot pose (x, y, angle) in map frame. | |||

|- | |||

|referencePose | |||

|Pose struct | |||

|Localisation | |||

|Contains the last certain robot pose (x, y, angle) in map frame. | |||

|} | |} | ||

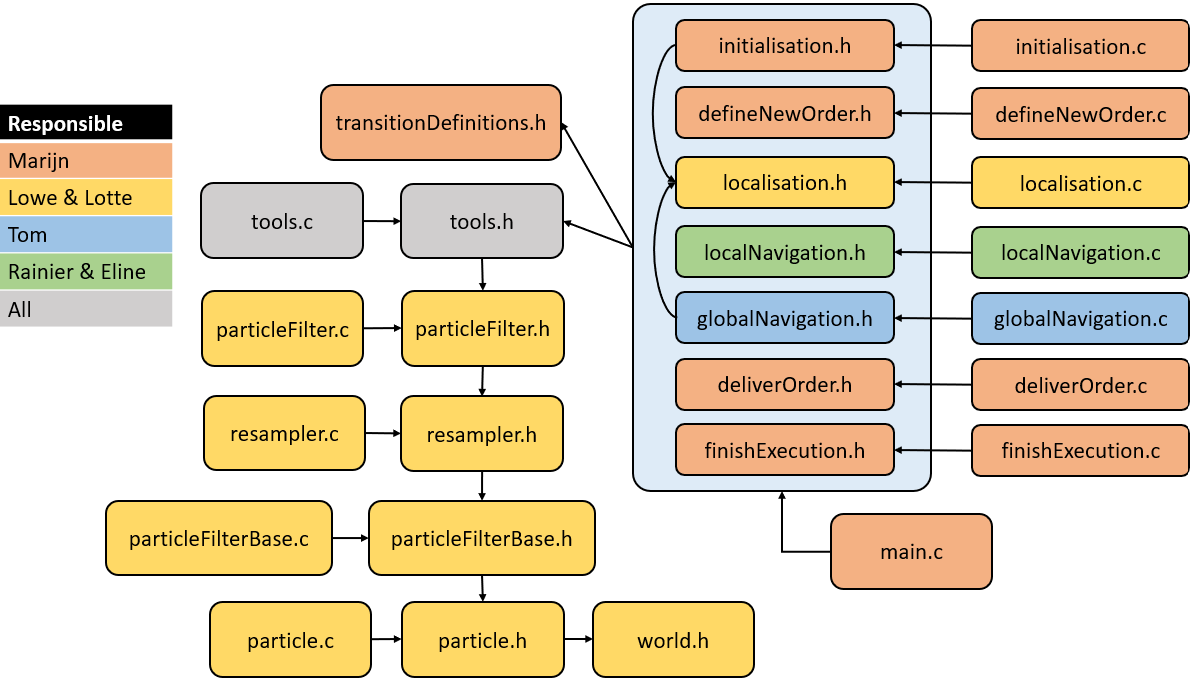

'''Include diagram''' | |||

To ensure the team could work well together, a file structure was set up early where the include tree was already in place. Apart from a few adjustments, this stayed consistent throughout the project. General functions could be added to the shared tools.c, and general classes, structs or magic numbers could be added to tools.h. Special care was taken to prevent self-looping includes, as these can cause hard-to-track errors. | |||

[[File:FileDiagram.png|none|thumb|Tree structure of files in the Rosey project. Arrows indicate which files include which files.]] | |||

==Global Navigation== | |||

The global navigation uses the A* algorithm to find the shortest path to from a starting node to a goal node. The A* algorithm uses a predefined grid of nodes. This grid is applied by a JSON file, generated with Matlab. With Matlab, the grid nodes with their connections are manually chooses in the known map. On top of that, we were inspired by the lecture about Safe navigation in a hospital environment to introduce some context-aware navigation into our model, by means of semantics. Each node contains some semantic information about its environment, described by a integer. The following semantic integers are included: | |||

*0 = table 0 | |||

*1= table 1, | |||

*..., | |||

*49 = table 49, | |||

*50 = door, | |||

*99 = empty (meaning no semantic information present). | |||

This information is also included in the JSON file. The global path finder can find a table by looking for the node with the correct semantic integer. | |||

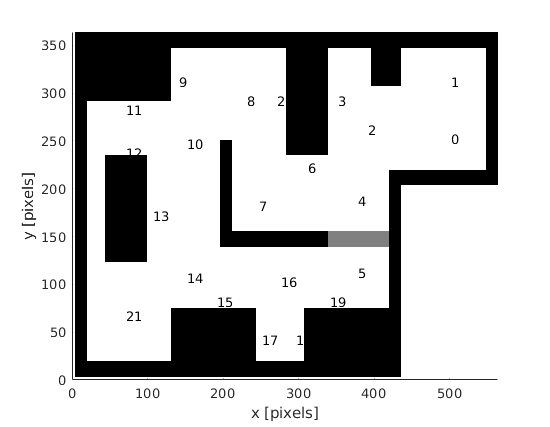

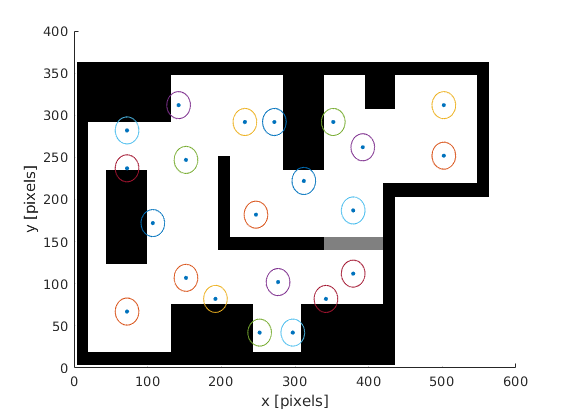

'''Preparation phase''' | |||

A JSON file, containing the grid information, can be created with the Matlab script below. The script has a visualization tool for plotting the position of all nodes. In the next graphs, the locations of the grid points in the final challenge are visualised. The circle has a radius of 20 centimeters. When the robot is in this circle, the local navigation considered this as being arrived at the node. The local navigation assumed that the robot has reached the node when it is 20 centimeters from the node. | |||

[[File:Matlab_grid_numbers.png]] | |||

[[File:Matlab_grid_circles.png]] | |||

<div class="mw-collapsible mw-collapsed" style="max-width:700px; overflow:auto;"> | |||

The corresponding Matlab script to generate the graphs and the JSON grid file: (click 'Expand'): | |||

<div class="mw-collapsible-content"> | |||

<pre> | |||

%% Data | |||

clear all; | |||

% Points in pixels, personally used pinta for that | |||

x = [500,500,390,350,377,377,310,245,230,140,150,70,70,105,150,190,275,250,295,340,270,70]; | |||

y = [110,50,100,70,175,250,140,180,70,50,115,80,125,190,255,280,260,320,320,280,70,295]; | |||

% move zero point for matching worldmodel | |||

x = x+2; | |||

y = (y*-1+362); | |||

% scale to meter | |||

res= 1/80; % 80 pixels per meter | |||

x_m = x*res; | |||

y_m = y*res; | |||

%% semantics | |||

% add semantics to certain nodes | |||

% 0: table 0, 1: table 1, ... 49: table 49, 50: door, 99: empty | |||

% also add coordinates of the semantic objects when necessary | |||

sem(1:length(x)) = 99; % fill array with 99 (empties) | |||

semx(1:length(x)) = 0; % fill array with 99 (empties) | |||

semy(1:length(x)) = 0; % fill array with 99 (empties) | |||

sem(10) = 0; | |||

semx(10) = 0.9375; | |||

semy(10) = 4.0625; | |||

sem(12) = 0; | |||

semx(12) = 0.9375; | |||

semy(12) = 4.0625; | |||

sem(4) = 1; | |||

semx(4) = 3.8750; | |||

semy(4) = 3.7500; | |||

sem(7) = 1; | |||

semx(7) = 3.8750; | |||

semy(7) = 3.7500; | |||

sem(21) = 1; | |||

semx(21) = 3.8750; | |||

semy(21) = 3.7500; | |||

sem(13) = 2; | |||

semx(13) = 0.8750; | |||

semy(13) = 2.2500; | |||

sem(14) = 2; | |||

semx(14) = 0.8750; | |||

semy(14) = 2.2500; | |||

sem(16) = 3; | |||

semx(16) = 2.3750; | |||

semy(16) = 0.5625; | |||

sem(18) = 3; | |||

semx(18) = 2.3750; | |||

semy(18) = 0.5625; | |||

sem(20) = 4; | |||

semx(20) = 4.6250; | |||

semy(20) = 0.5625; | |||

sem(19) = 4; | |||

semx(19) = 4.6250; | |||

semy(19) = 0.5625; | |||

doorNodes = [5,6] % door nodes | |||

for i =1:length(doorNodes) | |||

sem(doorNodes(i)) = 50; | |||

end | |||

%% connections | |||

% define connections for each node, using input mode of matlab | |||

conn_challenge_restaurant = zeros(length(x),10); | |||

for i = 1:length(x) | |||

i | |||

prompt="type Connections, in form [a,b] when more connections exists: " | |||

output = input(prompt) | |||

for j = 1:length(output) | |||

conn_challenge_restaurant(i,j) = output(j) | |||

end | |||

end | |||

%% Plot | |||

close all; | |||

figure() | |||

text(x, y, string((1:numel(x))-1)); % python numbering (index 0:..) | |||

%text(x, y, string((1:numel(x))-0)); % matlab numbering (index 1:...) | |||

axis([0, 564, 0, 364]) | |||

hold on | |||

% visualise bitmap with points | |||

I = imread('RestaurantChallengeMapRotatedFlipH.png'); | |||

h = image(2,2,I); | |||

uistack(h,'bottom') | |||

% size of points, which forms the circle around node | |||

sm = 10; | |||

% radius of circle about node | |||

R = 16; % 0.2*80, 20 centimeter radius | |||

th = linspace(0,2*pi) ; | |||

figure() | |||

scatter(x,y,sm,"filled") | |||

hold on | |||

for i = 1:length(x) | |||

x_c = R*cos(th)+x(i) ; | |||

y_c = R*sin(th)+y(i) ; | |||

scatter(x_c,y_c,1,"filled") | |||

end | |||

I = imread('RestaurantChallengeMapRotatedFlipH.png'); | |||

h = image(2,2,I); | |||

uistack(h,'bottom') | |||

%% generate JSON | |||

S.nodes = struct("x", num2cell(x_m), "y", num2cell(y_m), "semantic",num2cell(sem),"semanticX",num2cell(semx),"semanticY",num2cell(semy)) | |||

S.connections = conn_challenge_restaurant | |||

s = jsonencode(S,PrettyPrint=true) | |||

if exist('grid.json', 'file')==2 | |||

prompt="do you want to remove old grid.json file? (y=1/n=0) " | |||

output = input(prompt); | |||

if output == 1 | |||

delete('grid.json'); | |||

disp("Caution, old grid.json file is deleted...") | |||

end | |||

end | |||

fid=fopen('grid.json','w'); | |||

fprintf(fid, s); | |||

disp("new grid.json is succesfully saved") | |||

</pre> | |||

</div> | |||

</div> | |||

'''Initiation phase (run once)''' | |||

The json file is imported with the <code>loadConfigFile()</code>and the grid is constructed with<code>constructGrid()</code>. The grid information is placed in the global variable <code>grid</code>. | |||

'''Global path finder phase''' | |||

In <code>globalNavigation(),</code> there are a few steps which has to be taken for determining the best path as node sequence. | |||

#First, the robot determines its own position in its map and the closest node is found. One assumed that there is no complete blocking object as a wall in between the robot and the closest node, i.e. sufficient nodes has to be properly placed. | |||

#The goal node is found by finding the corresponding semantic number of the destination table | |||

#The <code>aStar()</code>is executed to find the best path - In case the output is [-1], there is no path available with the provided information. Possible causes: connections are not correctly defined or essential connections are removed. On the end, the output with the sequence of nodes to (and including) the goal node is written to the global<code>pathNodeIDs</code>variable. | |||

#The main function returns whether a path is found. | |||

'''Cutting connections in case of blocking objects or close doors (updating the grid)''' | |||

Note that the code for updating the grid is present, but not implemented in local navigation due to time constraints. So, it is not used. However, the plan for updating the grid will be explained below: | |||

In case a node cannot be reached according to a certain trajectory, the grid information has to be revised by removing the connection between the last node and the not reachable node. Then, a new trajectory has to be determined while taking into account the removed connection. This should result in another trajectory to the goal, providing that the removed connection is not crucial. When defining the grid, one should take into account that a connection can be removed and that another trajectory still has to be possible. To remove a connection, the function <code>cutConnections()</code> can be used. | |||

Note that a connection can be unavailable for a limited time. To handle that, the connections list has to be reset when a goal (e.g. table) is reached or when there are no other trajectories possible, due to all removed connections. In the grid, there are two connections list: one will be updated, while the other saves the initial connections list. To reset the connections list, the updated list is overwritten with the initial connections list. This can be done by using the <code>resetConnections()</code> function. | |||

'''Known bug while selecting the table node''' | |||

On the day before the demo day, we found out that the goal node selection is not perfect. Now, the node list is looped to find a node with the corresponding semantic integer for a certain table. So, the first node with this semantic integer is chosen directly. There are multiple nodes around the table which has the semantic integer for the specific table, but the lowest node number is always taken. Then, this node is used as finish node for the A* algorithm. For the demo, it was decided to only assign one node to each table. However during the demo, the specific node for the first table was giving problems. In case the selection of the goal node is able to take the closest table node, this issue was avoided by going to the closest table node on the side of the table. | |||

The way to solve this bug is to generate different paths to all table nodes, check the cost to come for all available table nodes, and select the path with the lowest cost to come. Due to lack of time, this bug fix could not be implemented. In addition, when the <code>cutConnections()</code>is used, the robot can cut the connection when the table node is not reachable, which was the case during the demo. Then, another table node can be used to reach the table. | |||

==Local Navigation== | |||

[[File:Local nav state diagram.png|thumb|State diagram of the local navigation algorithm.]]The local navigation is implemented based on the state diagram provided in the figure on the right, following the same structure as the main code. It acts like a finite state machine, within a finite state machine. Each state in the state diagram corresponds to a case in a switch function. After the one pass through switch-case function, the algorithm goes back to the main code to do localization and to get new scan data. When a global path is determined in the main model, this path, represented as a list of nodes called ''pathNodeList'', serves as an input for the sub state diagram. Other important inputs are the current pose that comes from the localization algorithm, and the scan data, that comes from the main code. | |||

<br /> | <br /> | ||

====INIT_LOCAL_NAV==== | |||

In the INIT_LOCAL_NAV state, the goal point is initialized. The goal point comprises the x-coordinate, y-coordinate, and a semantic label that indicates its type, such as table, door, or path node. The initialization process relies on two key components: the ''nodeIndex'' and the ''pathNodeList''. The ''nodeIndex'' keeps track of the current node in the list that the robot should navigate towards. Initially, the nodeIndex is set to zero to begin the navigation process at the first node of ''pathNodeList''. Upon reaching a goal point, the robot incrementally increases the ''nodeIndex''. This allows the system to retrieve the next node from the ''pathNodeList'', consequently guiding the robot towards the subsequent location. This iterative process continues until the final node of the path is reached, signifying the completion of the navigation task. When the goal is defined, the robot proceeds to transition into the subsequent state “TURN_TO_GOAL”. | |||

====TURN_TO_GOAL==== | |||

In the TURN_TO_GOAL state, the robot undertakes a turning action towards the designated goal before transitioning to the DRIVE_TO_GOAL state. First the angle to new goal is calculated. If this angle is bigger than one radian, the robot will stop its forward movement and only turn. If it is smaller than one radian, the robot will keep a small forward velocity while it is turning towards its goal. Once the angleToGoal is smaller than the TINY_ANGLE threshold, the state machine will go to next state. In the absence of any obstructions, the robot can drive a straight-line trajectory towards the goal point. | |||

====DRIVE_TO_GOAL==== | |||

In the DRIVE_TO_GOAL state, the robot's driving behavior is determined based on several conditions. Initially, the presence of an object within a 50-centimeter range in front of the robot is detected. If no object is detected, the robot proceeds to drive directly towards the goal node, guided by the angle to the goal. Specifically, if the angle to the goal falls within a certain range[ -TINY_ANGLE, TINY_ANGLE], the robot moves straight ahead. However, if the angle exceeds this range, the robot adjusts its motion by simultaneously turning towards the goal and driving at a slightly reduced speed. | |||

In the event that an object is detected within the specified range, the robot employs an artificial potential field approach for navigation. This method involves calculating an attractive force towards the goal, with the direction of the force aligned towards the goal node, while the magnitude is determined by the distance to the goal node. The attractive force is given by the following formulas: | |||

#F<sub>attractive_y</sub> = k<sub>attractive</sub> · d<sub>goal</sub><sup>2</sup> · sin(angle<sub>goal</sub>) | |||

#F<sub>attractive_x</sub> = k<sub>attractive</sub> · d<sub>goal</sub><sup>2</sup> · cos(angle<sub>goal</sub>) | |||

Additionally, the objects detected by the robot's laser scanner exert repulsive forces, which magnitude and direction again depend on the direction and distance of the detected object. This force is given by the following formula: | |||

#F<sub>repulsive_y</sub>= Σ(k<sub>repulsive</sub> · 1/d<sub>scan</sub><sup>2</sup> · sin(angle<sub>scan</sub>)) | |||

#F<sub>repulsive_x</sub>= Σ(k<sub>repulsive</sub> · 1/d<sub>scan</sub><sup>2</sup> · cos(angle<sub>scan</sub>)) | |||

In the case of the respulsive force, there are two different force gains depending on the distance to the obstacle. If an obstacle is within the HORIZON_MIN range, the repulsive gain k<sub>repulsive</sub> is almost 3 times higher than when the obstacle is between HORIZON_MIN and HORIZON_MAX. | |||

By adding up the attractive and repulsive forces, the robot derives the total force, which in turn determines the translational and rotational velocities. The rotational velocity depends on the direction of the total force vector, while the translational velocity is influenced by the magnitude of the total force. This artificial potential field enables the robot to maneuver around obstacles that may partially obstruct its path. | |||

In the DRIVE_TO_GOAL state, assuming a successful navigation process, the robot reaches the goal node. In each cycle within this state, it is checked whether the distanceToGoal is within a certain threshold. If this is the case, the state machine will go to the next state based on the semantic of the node. There exist three distinct types of goal nodes: door nodes, path nodes, and table nodes. Each type of goal node needs specific subsequent actions in the navigation procedure. Consequently, based on the type of goal node reached, the robot can progress into three different states: AT_DOOR_NODE, AT_PATH_NODE, or AT_TABLE_NODE. | |||

====AT_DOOR_NODE==== | |||

In the “AT_DOOR_NODE” state, the robot has arrived at a door node and the robot is stopped. The objective of this state is to navigate the robot through the door. Regrettably, we encountered challenges during the implementation of this functionality which will be explained further below. Nevertheless, the underlying idea was as follows: Firstly, the subsequent goal node for the robot is determined based on the ''pathNodeLis''t. If the next goal node is also a door node, the robot will orient itself towards this node and check the laserdata to see whether the door is open or closed. | |||

In the event that the door is open, the robot will proceed directly to the subsequent node. However, if the door is closed, the robot will request if the door could be opened and then patiently wait for a period of about 10 seconds. If the door is successfully opened within this timeframe, the robot will pass through it. For this, the localization algorithm would switch to a map with an open door and relocalize. This did not work however, as the robot would misinterpret the corner and the heading would be off by about 90 degrees. If The door would not open, the algorithm would go into the OBSTRUCTED_PATH state, where it would cut the link between the two door nodes and go back to the global path planner to find a new path. | |||

Conversely, if the subsequent goal node is not a door node, the model will transition to the TURN_TO_GOAL state and continue following the predefined path. | |||

====AT_PATH_NODE==== | |||

In the AT_PATH_NODE state, which represents reaching a node situated between the start node and the table node where an order is to be delivered, the robots velocity first is reduced. The ''nodeIndex'' is then incremented by one. After updating the ''nodeIndex'', the navigation system proceeds to the state INIT_LOCAL_NAV, where the new goal node will be initialized. | |||

====AT_TABLE_NODE==== | |||

In the AT_TABLE_NODE state, a similar process as with a table node would happen, where it first checks if the node is the final node in the ''pathNodeList, indicating it is at the table''. Upon confirmation of arriving at the correct table node, the robot proceeds with orienting itself towards the table. This orientation is done by adding an extra semantic to each table node, which states the position of the middle of the relevant table. The robot uses this to turn towards the table, similar to the TURN_TO_GOAL state. Furthermore, the ''nodeIndex'' is reset to zero since the current path, as indicated in the ''pathNodeList'', has been successfully completed. In the event that this is the final order, the robot's tasks are concluded; however, if there are additional orders, a new order needs to be specified, and thus the code initializes the global path planner again. | |||

====STOPPED==== | |||

The robot enters the STOPPED state whenever it detects an object within its close distance range, referred to as ''closeByDistance''. In such situations, the robot is instructed to remain stationary until no object is detected within this range. Currently, the implemented behavior involves the robot remaining stationary for a few seconds and then politely requesting the removal of the object. If, however, the object cannot be or isn't removed, such as in the case of a wall, the robot initiates a turning motion. The direction of rotation depends on the angle towards its current goal. Once the robot has a clear view, it will resume driving. However, there may be instances where the robot encounters the same object again, such as when it needs to pass through it. In such cases, the robot will consider the next goal point as the new target and attempt to reach it. This approach is taken because there might be an object obstructing the original node the robot was trying to reach. If the robot encounters further obstacles and needs to stop again, the robot will go into the OBSTRUCTED_PATH state, which is explained below. | |||

====OBSTRUCTED_PATH==== | |||

''Unfortunately, we were unable to successfully test this functionality in our code, mainly due to time concerns.'' | |||

The robot gets into this state if the robot entered the STOPPED state for the second time after trying to move away from the obstacle. Subsequently, the link between the current node from which the robot is moving and the destination node should be removed. If such a scenario occurs, it would necessary to find a new global path, accounting for the deletion of the node link caused by the presence of an object or an obstructed path. Thus, a transition to the main code, or more specifically, the “GLOBAL_PATH” state, needs to be executed. The deleted node link would be reset once the robot does get to the table. | |||

====LOCAL_MINIMUM==== | |||

''Unfortunately, we were unable to successfully test this functionality in our code, mainly due to time concerns.'' | |||

During navigation using an artificial potential field, it is possible for the robot to become stuck due to the repulsive forces and the attractive forces canceling each other out. One example would be an straight object right before or on top of the goal node. In this scenario, the robot could never get to its goal node. This behavoir is detected by a function that calculates the average traveled amount over a period of about 10 samples. If this average travelled distance is smaller then a certain threshold, a ''localMinimum'' Boolean would be set to true and the robot would cut the node link like in the OBSTRUCTED_PATH state. | |||

====STOPPED_BUMPER==== | |||

''Unfortunately, we were unable to successfully test this functionality in our code, mainly due to time concerns.'' | |||

This is a distinct intermediate state that occurs when the robot's bumper is pressed. In this state, the robot initially moves backward for a duration of half a second before transitioning to the “STOPPED” state. | |||

===Improvements=== | |||

It is important to note that each time the local navigation is executed by the main function, the first step is to check the laser's view angle of 90 degrees in front of the robot for any objects detected within a range of 0.2 meters, known as ''closeByDistance''. Additionally, the function verifies if the robot's bumper has been pressed. | |||

In hindsight, it may have been wiser to exclude the stop and bumper detection processes when the robot reaches a table node. Since the robot is programmed to turn towards the designated table upon reaching the corresponding node, it is conceivable that the table itself falls within the ''closeByDistance'' range, causing the robot to stop rotating and enter the STOPPED state. Ideally, the robot should not stop for a table but rather proceed to deliver the order as intended. | |||

This behavior did come up in simulation and more crucially during the final challenge. A solution that was not implemented is to change the ''closeByDistance'' based on the semantic of the next node. This ''closeByDistance'' can be decreased once the robot is near a table node. Also, the robot should only go into the STOPPED state from TURN_TO_GOAL or DRIVE_TO_GOAL states, and not from any state other. | |||

Since the robot did drive relatively well during testing, but failed straight away during the final challenge, it is hard to say what other changes to the current code would be made. | |||

==Localisation== | |||

====Used algorithm and predefined knowledge==== | |||

The localisation uses the particle filter approach, as introduced in 'global navigation 2' during this course. In short, the particle filter consists of a collection of samples centred around the initial guess or estimate of the robot's position. Each particle represents a possible pose of the robot. It then assigns weights to each particle based on how well the position aligns with the observed laser data. This weights represent the likelihood of the robot to coincide with that particular particle. If the laser data of the robot coincides well with the estimate of that particle, the likelihood of that particle is high, to represent the actual pose of the robot. The particle filter uses a world map, giving an expectation of where objects are present within the world. Comparing this expectation with the received laser data results in the weighting for each particle. | |||

The world map is applied by a JSON file. This JSON file also includes information on the maximum range of the laser range finder, the initial guess, the number of particles, the propagation noise, the measurement model and the resampling scheme. Then, the likelihoods are calculated after which the weighted average of this array can be taken to find the estimated pose of the robot. The more particles are used the higher the likelihood of a successful pose, but also the longer the algorithm takes to estimate the pose. Since the robot cannot check for collisions or perform changes in the local navigation state while it is performing the localisation algorithm, it is important for the robot to not exceed a certain amount of time per calculation. This limits the amount of particles that can be used during driving and there is thus an optimum for the amount of particles that are used. | |||

Another problem that arises is that if the samples are propagated the particle filter will converge to a weight approaching 1 for a single particle, while the other particles converge to a weight of 0. This means that computational effort is given to many useless particles, while only one particle is effective. This effect is called the degeneracy problem and can be counteracted by resampling the particles. How often the algorithm resamples is a design choice and can be optimised for, as resampling comes at the cost of computational expense. For more information about particle filters, their implementation and their use the following source can be consulted https://www.mdpi.com/1424-8220/21/2/438 | |||

====Initiation phase==== | |||

Within the <code>main.cpp</code> the particle filter is initiated. First, the JSON is loaded through <code>load_config_file()</code>. The loaded configuration file is passed to <code>constructWorldModel()</code>, where the map and maximum range of the laser range finder are loaded. Then the particle filter is constructed through <code>constructParticleFilter()</code>, where the initial guess, the number of particles, the propagation noise and the measurement model are loaded. On top of that the particle cloud is initialized. Originally, this could be done with either a Gaussian distribution around a given mean, which is the initial guess, or with a uniform distribution over the whole map. The uniform distribution function is adjusted to allow for specifying the size of the area in which the particles are initiated. This enables starting with a uniform distribution in the initial area, as the robot has an equal likelihood of starting at any position within the area and with any heading. To this extent, a variable for the spread in x- and y-direction has been added as input to the uniform distribution function, together with the initial guess loaded when loading the program configuration. After the robot is started in the starting area, the robot performs a few iterations of localisation to find a certain pose. In this state the robot is not moving and the calculation time of the particle filter is of less importance. This means that during this initial localisation the amount of particles can be implemented without decreasing the robot performance. The spread of these particles are picked to fill the entire starting area. After 10 iterations or if the found pose is certain (explanation in section: Localisation phase) the robot can continue to the global pathfinder. | |||

====Localisation phase==== | |||

Within localisation, first, the driven distance is computed with <code>computeDrivenDistance()</code>. Then the particle filter is updated using this driven distance, the laser data and the odometry data. This includes propagating the samples, computing the likelihood, setting the weights and normalising the weights of the particles. Then, in case it is required or requested, the particles are resampled. It is chosen to use the effective particle threshold strategy for resampling. This approach is chosen to avoid resampling at every timestep, thereby increasing the speed of the particle filter. Additionally, it maintains a slightly higher spread of particles, which helps reducing sample impoverishment. However, achieving the right balance is important, as excessive spread can lead to wrong pose estimates due to similarities between multiple points on the map. On the other hand, insufficient spread can make it challenging to track the pose when differences arise between the propagated samples and the actual pose of the robot, due to errornous odometry data. This threshold is mainly tuned with testing in simulation. | |||

Finally, the particle filter estimate is acquired with the <code>get_average_state()</code>. The resulting pose estimate is compared to the dead reckoned pose of the robot that is obtained using <code>deadreckonPose()</code>. This is done to verify if the new estimate deviates significantly from the previous estimate (including the driven distance). If the difference is substantial, it suggests that the estimate might be inaccurate. This performance variable is only used after a few iterations of the particle filter. Using this boolean right from the start is not possible, since the only previous position to compare the estimate to is the initial guess, but this guess is random within the starting area. Furthermore, the weighted variance of the particles is calculated using the average state, particle poses and particle weights. If the particles are widely spread with low weights, this weighted variance is high, indicating that the pose estimate is less certain. Both these properties are assessed with a threshold value, that is tuned by testing in the simulator and real-life environment. If the thresholds are not met, the particle filter returns false for <code>isCertain</code>. | |||

The following code is used to check the two thresholds: | |||

#<code>positionCondition = (diffX > THRESHHOLD_DIF_XY) || (diffY > THRESHHOLD_DIF_XY) || (diffTheta > THRESHHOLD_DIF_TH);</code> | |||

#<code>varianceThreshold = (sigma_p > likelihoodThreshold);</code> | |||

#<code></code> | |||

#<code>if ( positionCondition || varianceThreshold )</code> | |||

#<code>{</code> | |||

#<code> isCertain = false;</code> | |||

#<code>}</code> | |||

In case the particle filter indeed becomes uncertain, it is re-initialized with <code>constructParticleFilter()</code>. However, now the deadreckoned pose with respect to the previously known certain estimate is used as the initial guess. Moreover, the number of particles and uniform spread is increased in an attempt to accurately estimate the pose of the robot again. If the thresholds for the distance between the previous and current estimate and the weighted variance are met, the particle filter is certain. When a switch is made from uncertain to certain the particle filter is re-initialized again but with fewer particles over a smaller uniform spread, to speed up the particle filter. Also, the last particle estimate is used as an initial guess. Finally, if the particle filter is certain, the pose that is passed to local navigation is the pose estimate of the particle filter itself. If the filter is uncertain, the pose that is sent to the other functions is the deadreckoned pose. This re-initialisation of the particle filter might not be the most efficient option, but it is a relatively easy option to have some sort of adaptive particle filter functionalities. | |||

The total code structure of the localisation() function is given by the following pseudo-code: | |||

#<code>bool doLocalisation(inputs)</code> | |||

#<code>{</code> | |||

#<code> Define variables;</code> | |||

#<code></code> | |||

#<code> Compute driven distance;</code> | |||

#<code> Update particle filter;</code> | |||

#<code> Publish particle filter;</code> | |||

#<code> Pose = Get average state;</code> | |||

#<code></code> | |||

#<code> Calculate threshold booleans;</code> | |||

#<code> </code> | |||

#<code> Update particle filter based on booleans;</code> | |||

#<code> </code> | |||

#<code> if ( particle filter = updated )</code> | |||

#<code> {</code> | |||

#<code> reconstruct particle filter;</code> | |||

#<code> }</code> | |||

#<code> </code> | |||

#<code> if ( pose is certain) </code> | |||

#<code> {</code> | |||

#<code> referencePose = particle filter Pose;</code> | |||

#<code> } </code> | |||

#<code> else</code> | |||

#<code> {</code> | |||

#<code> referencePose = dead reckon Pose;</code> | |||

#<code> }</code> | |||

#<code> return certaintyBool;</code> | |||

#<code>} </code> | |||

As indicated in the state flow diagram, the localisation function is called at multiple states. First, localisation is called in the global navigation phase, in order to find a pose before global navigation is performed and a path is found. Then, localisation is called every timestep of local navigation to continuously update the pose of the robot. Finally, localisation is called in the relocate state, the functioning of this state is explained below. | |||

====Relocate phase==== | |||

When the robot loses its pose it will localise by deadreckoning its previously known certain pose. Since the odometry sensors of the HERO robot are quite accurate this is fine for a couple of seconds, but after a while, the odometry pose will deviate from the actual robot pose. A difference in the estimated pose and location of the robot can lead to a lot of problems, like finding a wrong path or colliding with walls/objects. The implemented approach involves transitioning into a relocate state, after the pose is uncertain for a number of timesteps. | |||

In this state the robot is halted and the particle filter is updated to increase the spread, switch to always resampling and increase the number of particles. The robot then tries to find its position by doing 30 localisation cycles or until a certain pose is found again. If no certain pose is found the robot will transition to the error state and cease operation. If a certain pose is found the particle filter is transitioned to the original settings and is reinitialized; after which the robot can again define a new path and continue operation. This state is thus the fail-safe to guarantee the robot can find its position or otherwise ensure no unsafe operation of the robot is done. | |||

==Testing the software== | |||

Every week from the very start, team Rosey made use of the full amount of testing hours that was available. During testing, parameters were tuned to what worked best on Rosey. Tested features include driving to a predetermined goal, avoiding obstacles and humans, and opening the door. | |||

====Practical sessions==== | |||

'''Testing global navigation''' | |||

The algorithm for determining the global path is evaluated in a simulation environment since it is independent of robot parameters. However, the validation of node placement is crucial and necessitates testing during the real-world practical sessions. These tests involve the robot following a predetermined plan composed of nodes, allowing us to assess the adequacy of their placement. Adjustments was required to ensure the robot maintains a safe distance from objects, avoiding both potential collisions and close proximity. Furthermore, we could also examine the optimal distance between the table and the table nodes to ensure that the robot can effectively serve the table when it reaches these table nodes. Moreover, the identification of potential bottlenecks or obstructions may necessitate the addition of extra nodes at specific locations along the path. This strategic inclusion of supplementary nodes aims to enable the robot to follow the path smoothly, minimizing the risk of getting stuck or encountering obstacles that impede its progress. | |||

'''Testing local navigation''' | |||

The local navigation code was also subjected to testing to verify its functionality. Initially, the robot was driven along predefined paths to assess its ability to follow the desired trajectory without colliding with walls or obstacles. Testing revealed that when no objects were present in the path, the robot successfully reached the designated tables with a quite smooth path. All segments of the code where investigated during these tests and fine-tuned: | |||

- TURN_TO_GOAL: The robot's behavior upon reaching a goal node was evident as it would promptly adjust its orientation towards the next goal before initiating forward movement. This action was fine-tuned by incorporating a slight forward velocity during rotation, but only when the angle to the new goal node fell within a specified range. As a result of this adjustment, the robot demonstrated improved path smoothness | |||

- DRIVE_TO_GOAL: In this state without objects, the robot would attempt to drive straight whenever possible, and correct its direction if it deviated significantly. This action is fine-tuned by implementing a strategy that involved reducing the forward velocity while simultaneously rotating, allowing the robot to smoothly realign itself and gradually resume forward movement in the correct direction. Consequently, this optimization facilitated the robot to follow a more smooth path. The implementation of the artificial potential field in the code effectively steered the robot away from walls when they slightly obstructed its path. The parameters of the artificial potential field were primarily fine-tuned within the simulation environment, and during testing, they demonstrated satisfactory performance for the real-world robot. Given additional time, it would have been valuable to further tune and test these parameters in a more extensive manner within the real-world setting. This could involve examining the robot's response to the presence of small objects in the corridors. Nevertheless, the crucial aspect was achieving successful navigation around the walls using the artificial potential field, which was accomplished as intended. | |||

- AT_PATH_NODE: Furthermore, this aspect underwent fine-tuning as well. Initially, the robot would come to a complete stop at the intermediate path nodes. However, this behavior was revised to reduce the speed significantly while maintaining forward movement, enabling the robot to smoothly execute a turn towards the subsequent node along the path. | |||

- AT_DOOR_NODE / AT_TABLE_NODE: At the designated door and table nodes, the robot executed the expected behavior of stopping its movement and rotating in respectively the door and table direction. This functionality operated smoothly without requiring any additional tuning. The placement of the door and table nodes, however, was subject to adjustment as elaborated upon in the global navigation part. | |||

So without objects the robot was perfectly able to deliver orders at predefined tables. Next is was time to test stopping for static and dynamic objects. However, due to time constraints, the full implementation of the "STOPPED" state was not done before the final practical session and the stop parameters were not tuned (magnitude of the closeByDistance). Consequently, difficulties arose when the robot attempted to stop for objects. Detection of objects within the closeByDistance occurred too late, leading to late braking and frequent collisions due to the robot's residual speed. Thus a larger closeByDistance was necessary in real-world setting compared to simulations. Unfortunately, it was not possible to do a lot of testing with the new implemented "STOPPED" state. (Ideally, we would have conducted additional testing to evaluate specific aspects such as the robot's stopping behavior, particularly to determine if it stops without bumping into something. Furthermore, we would have liked to test the robot's ability to detect and respond to dynamic objects by ensuring it accurately detects when an object is no longer present and resumes its intended path without unnecessary delays or deviations.) | |||

After the final practical session, the "STOPPED" state is tuned in the simulation environment. A notable observation in these simulations, consistent with the final challenge, was that if the robot needed to make a turn upon reaching a table, it would enter the "STOPPED" state and remain unable to transition out of it, as the code did not account for the robot recognizing the table as a non-obstacle. Nevertheless, as a group we made the choice to not make big changes in the code just before the final challenge. Nonetheless, the need for improvement in addressing this particular issue was acknowledged and explained in both the local navigation and reflection sections. | |||

'''Testing localisation''' | |||

During development of the particle filter a couple of tests were performed on the robot to verify the performance of a multitude of functions. | |||

As the particle filter had already started working on an existing code base it was important to thoroughly test the functioning of the code base and the functions that were implemented by the team. To this extent, the particle filter was tested in the simulation environment provided by the course. Some bugs were found leading to segmentation faults. The cause was found to be the resampling function that synthesises a new particle array with a new length. However, when this length was defined to be larger than the previous array size an index out of bounds was encountered. This was solved by implementing a threshold on the size of the array. After this fix the basic particle filter was proved to function and new functionality could be implemented. | |||

Futhermore, some threshold values used for the particle filter were tuned. This is partly done in simulation, but was slightly finetuned during physical testing. They are tuned by checking the actual pose and the estimated pose of the robot in combination with whether the particle filter gives a certain or uncertain boolean. Here it was important to make sure the particle filter does not become uncertain too quickly, when there is an unsignificant deviation in pose, but does become uncertain when the particle cloud suddenly jumps to a different, incorrect location on the map. | |||

Finally, during testing it was observed that when the door in the map is open, the localisation of the robot quickly diverged to a wrong pose estimate. This was due to the fact that the particle filter was using a map where the door was closed. On the other hand, using a map where the door was open also presented challenges. When the robot faced the door, expecting it to be absent while it was actually present, it led to an incorrect pose estimate as well. Due to time constraints, there was not enough time for further adjustments to the strategy of incorporating the door in the localization process. | |||

'''Videos''' | |||

During testing, Rosey was able to deliver to any table. We have a few videos of this: | |||

*[https://drive.google.com/file/d/1cSF7r_oi6pPeyEWX157hBaaLmqHDif6l/view?usp=drivesdk Here] is a video of Rosey delivering orders to tables 0 and 3. | |||

*[https://drive.google.com/file/d/1d-XPaVIDT-QRpaWo2Fi_yfogOCb2LUmE/view?usp=sharing Here] is a video of Rosey delivering an order to table 4, after opening the door. | |||

====Final challenge day==== | |||

During the final challenge day, Rosey clearly under-performed. The team estimated that Rosey would be able to serve up to table 3. However, she manoeuvred around table 1, arriving at the table only to see it as an object that is too close by. The second time, a slightly different version of the code was run, which featured an untested escape for this issue, however the same problem occurred. | |||

The main cause of this issue is the stop check, which is always active - even in states where there is no forward velocity, such as the 'turning to table' state. Besides this, the table was only approachable from one point, which made it very sensitive to errors. Below, each component of the robot software reflects on their performance during the challenge. | |||

'''Reflection system architecture and overview''' | |||

Having states shown as output helped to understand what the robot was doing at any time. However, it could have been made clearer ''why'' a certain transition was taken. Dividing up the code into states improves the code overview if handled carefully, but it can also be a limiting factor. This may have contributed to the oversight in the stop check. | |||

'''Reflection global navigation''' | |||

As discussed previously, the current software is not able to switch to another table node when a table node is blocked. During the demo, the table node of the first table was not reachable from the angle of the previous node. In case the software could remove a connection and switch to another table node, this issue was handled. During testing, the global path finder did its job well. Also, the manual grid construction with matlab and json was easily adaptable to test specific situations and update node connections and locations. | |||

'''Reflection local navigation''' | |||

As discussed previously, it may have been wiser to exclude the stop and bumper detection processes when the robot reaches a table node. Since the robot is programmed to turn towards the designated table upon reaching the corresponding node, it is conceivable that the table itself falls within the ''closeByDistance'' range, causing the robot to stop rotating and enter the STOPPED state. Ideally, the robot should not stop for a table but rather proceed to deliver the order as intended. A solution that was not implemented is to change the ''closeByDistance'' based on the semantic of the next node. This ''closeByDistance'' can be decreased once the robot is near a table node. Also, if the robot would go into the STOPPED state from AT_TABLE_NODE, the robot should go to DELIVER_ORDER instead of staying in the STOPPED state. | |||

Since the robot did drive relatively well during testing, but failed straight away during the final challenge, it is hard to say what other changes to the current code would be made (especially in the code of the artificial potential field). | |||

'''Reflection localisation''' | |||

*Particle spread: <br />It was noticed that during the final challenge the particle spread was quite high after a few timesteps before being resampled. It might have been better to decrease the particle spread parameters or the resampling threshold could have been tuned to resample more. | |||

*Amount of particles: <br />It is believed that the amount of particles during the final test was tuned quite well as the robot seemed to be able to keep it's localisation while driving to table 1. However, if the resampling threshold is changed it might be a good idea to retune the amount of particles to reoptimize for computational time. | |||

*Resampling threshold: <br />As suggested before it is believed that is is beneficial to increase the times resampling took place to counteract the large spread. This parameter was also not very well tuned during the test sessions. | |||

*diffXY & diffTh threshold: <br />The diffXY and diffTH threshold was based on the calculation of an average timestep and the velocity of the robot with a safety factor. The thresholds should be quite good, but a unit test was not performed on the robot where the robot speeds up until the particle filter breaks. It is recommended to test the particle filter to its limits after all parameters are tuned to see how robust the algorithm is. There was unfortunately insufficient time to perform these tests. Relocate(): The idea of this state was to increase robustness of the robot, but the implementation of the algorithm could have been better. IT was build in as an afterthought and did not easily align with the existing function. It was also quite hard to test easily (without defining a test\_main()). The functionality would improve when the robot would be driven with open space implementation instead of going to error state immediately, but there was no time to implement and test this. | |||

*Relocate: <br />The idea of this state was to increase robustness of the robot, but the implementation of the algorithm could have been better. It was build in as an afterthought and did not easily align with the existing function. It was also quite hard to test easily (without defining a test\_main()). The functionality would improve when the robot would be driven with open space implementation instead of going to error state immediately, but there was no time to implement and test this. | |||

*Test/improve localisation after opening door: <br />The proposed solution after opening the door was to update the map the particle filter used to reflect the opening of the door. This functionality was build in to the robot and tested in the simulation. There was not enough time to rigorously test the behaviour of the robot in this event. However, the tests that were performed showed that the robot had quite some trouble with finding an accurate pose as the corridor behind the door quite looked like the corridor next to table 1 and thus the particle filter had the possibility to converge to the wrong pose. The proposed solution to counteract this behaviour would be to drive on deadreckoning for a few seconds after the door is opened to finish the tasks behind the door while trying to find an accurate pose again. The pose should update when object are encountered that differentiate the two corridors from each other. | |||

Latest revision as of 15:03, 7 July 2023

Welcome to the group page of team Rosey!

Project organisation

Group members table and work division

The group identified three major components of the assignment. These are the localisation, the global and local navigation of the robot. The group additionally saw use for an overseeing role, which should ensure that the interfaces between the functions are clear. The work is divided as shown in the table below.

| Name | Student ID | Main task |

|---|---|---|

| Eline Wisse | 1335162 | Local navigation |

| Lotte Rassaerts | 1330004 | Localisation |

| Marijn Minkenberg | 1357751 | System architecture & overview |

| Rainier Heijne | 1227575 | Local navigation |

| Tom Minten | 1372300 | Global navigation |

| Lowe Blom | 1266020 | Localisation |

Midterm presentation

During the midterm presentation, team Rosey presented their plan of action to make the robot deliver orders in a restaurant. The slides are available here for comparison to the final product: File:Midterm presentation Rosey.pdf

Introduction

The Mobile Robot Control 2023 course is centered around a project known as the Restaurant challenge. It required a combination of technical skills, teamwork, and effective communication to design and implement a robotic system capable of autonomously navigating a restaurant environment and serving customers. During the build up to the challenge, the team gained valuable experience in various areas related to robotics and computer science.

Throughout the course, different algorithms and techniques for robot control were explored, such as localization, mapping, path planning, and object detection. Hands-on experience was obtained by working with the Toyota HSR robot, known as Hero, which was equipped with sensors and actuators for perception and driving tasks. This practical engagement enhanced the understanding of the fundamental principles and challenges associated with robotic systems and nearly as importantly, enabled the team to test developed algorithms for the restaurant challenge.

One of the significant obstacles encountered was the presence of dynamic obstacles. Developing a robust and efficient system to detect and avoid these obstacles while successfully delivering orders to the correct tables posed a significant challenge. Additionally, making sure all the parameters were tuned correctly within the limited amount of experiment time became quite the challenge.

On this wiki page, group Rosey's final restaurant challenge algorithm will be explained in detail, including shortcomings of the algorithm, and possible solutions to these problems. First, the general system architecture will be discussed, after which the three identified main components of the assignment are discussed in detail. After that, the testing hours and final challenge day are reflected upon.

System architecture and overview

This section describes how the Rosey program functions on a supervisory level.

State diagram

Taking the lecture on system architecture to heart, the state space diagram is used as a guideline to structure the main file. This gives a clear overview of what code is run and when, and it allows everybody to work separately on their part of the code. The state diagram that was presented in the midterm presentation has been iterated on multiple times. The aim was to simplify the states while capturing all necessary behaviours and transitions in them. See below for a second version.

After consideration with the team, the decision was made to go for a hierarchical design. The 'drive according to plan' state was internally becoming quite complex. Additionally, interfacing with other states like the request open door state would be easier if that state was part of the internal states in the 'drive according to plan' state. That is why two separate supervisors are made. The high-level state diagram describing the robot behaviour as a whole can be viewed below. The sub-level state diagram, describing the robot behaviour while in the 'local navigation' state, is further described under Local navigation.

Supervisor implementation

In the supervisor, supervisor states and transitions are defined as magic numbers. The main file contains a while loop which runs the correct state, based on the previous transition. A switch statement is employed for this. The simplest example of this is the 'define new order' state, given below:

case DEFINE_NEW_ORDER:{transition = getOrderFromList(&orderList, &tableID);if (transition == NEW_ORDER){state = GLOBAL_PATHFINDER;std::cout << "[To state:] Global pathfinder" << std::endl;}else if (transition == NO_ORDERS){state = ALL_ORDERS_DELIVERED;std::cout << "[To state:] All orders delivered" << std::endl;}else{state = ERROR_STATE;error = "[LOG:] Error in DefineNewOrder";}break;}

The function that is being run is the getOrderFromList() function, which expects a pointer to the orderList and to the tableID. The output of this function is the desired transition: either a new order is defined, or no order is defined. In the former case, the next state is the global pathfinder, in the latter case, the next state is the final state, when all orders have been delivered. In case of any errors in getOrderFromList(), the function may return a different transition, in which case the program goes into the error state.

This supervisory approach helped greatly to keep the overview. Additionally, state transitions very clearly indicated which part of the code is being run. The communication between functions was also quite smooth using this approach. The functions interacted with each other using pointers to variables declared in the main file. As an example, the localisation function updates the current estimate of the robot Pose on a global level. Every function using the Pose is then certain to have the latest updated version, and every function is certain to be accessing the same information. The same goes for variables such as the pathNodeIDs, currentPose, tableID, laserData, etc.

The approach was also limiting at times. For example, it becomes slightly complicated to let a function try something 5 times before switching to a different method, without adjusting the structure of the main file. For this reason, most states have been slightly adjusted near the end of the project just to fit the needs of the functions.

General variables

In this section, a few general variables have been discussed. The table below explains the general variables in a bit more detail.

| Variable | Type | Used by | Explanation |

|---|---|---|---|

| grid | Grid class | Global navigation | Class containing all node IDs, positions and the connections between them. |

| pathNodeIDs | std::vector<int> | Global navigation | List of all nodes from the currect robot position to the goal node (corresponding to a position in front of a table). |

| orderList | std::vector<int> | defineOrder, deliverOrder | List of all tables to be served. |

| tableID | int | defineOrder, deliverOrder, Global navigation | Table ID which is currently being served, taken from the orderList variable. |

| laserData | emc::LaserData | Localisation, local navigation | Is constantly updated with the most recent data from Rosey's LiDAR sensor. |

| odomData | emc::OdometryData | Localisation | Is constantly updated with the most recent data from Rosey's odometry. |

| currentPose | Pose struct | Localisation, Global navigation, local navigation | Contains the current estimate of the robot pose (x, y, angle) in map frame. |

| referencePose | Pose struct | Localisation | Contains the last certain robot pose (x, y, angle) in map frame. |

Include diagram

To ensure the team could work well together, a file structure was set up early where the include tree was already in place. Apart from a few adjustments, this stayed consistent throughout the project. General functions could be added to the shared tools.c, and general classes, structs or magic numbers could be added to tools.h. Special care was taken to prevent self-looping includes, as these can cause hard-to-track errors.

The global navigation uses the A* algorithm to find the shortest path to from a starting node to a goal node. The A* algorithm uses a predefined grid of nodes. This grid is applied by a JSON file, generated with Matlab. With Matlab, the grid nodes with their connections are manually chooses in the known map. On top of that, we were inspired by the lecture about Safe navigation in a hospital environment to introduce some context-aware navigation into our model, by means of semantics. Each node contains some semantic information about its environment, described by a integer. The following semantic integers are included:

- 0 = table 0

- 1= table 1,

- ...,

- 49 = table 49,

- 50 = door,

- 99 = empty (meaning no semantic information present).

This information is also included in the JSON file. The global path finder can find a table by looking for the node with the correct semantic integer.

Preparation phase

A JSON file, containing the grid information, can be created with the Matlab script below. The script has a visualization tool for plotting the position of all nodes. In the next graphs, the locations of the grid points in the final challenge are visualised. The circle has a radius of 20 centimeters. When the robot is in this circle, the local navigation considered this as being arrived at the node. The local navigation assumed that the robot has reached the node when it is 20 centimeters from the node.

The corresponding Matlab script to generate the graphs and the JSON grid file: (click 'Expand'):

%% Data

clear all;

% Points in pixels, personally used pinta for that

x = [500,500,390,350,377,377,310,245,230,140,150,70,70,105,150,190,275,250,295,340,270,70];

y = [110,50,100,70,175,250,140,180,70,50,115,80,125,190,255,280,260,320,320,280,70,295];

% move zero point for matching worldmodel

x = x+2;

y = (y*-1+362);

% scale to meter

res= 1/80; % 80 pixels per meter

x_m = x*res;

y_m = y*res;

%% semantics

% add semantics to certain nodes

% 0: table 0, 1: table 1, ... 49: table 49, 50: door, 99: empty

% also add coordinates of the semantic objects when necessary

sem(1:length(x)) = 99; % fill array with 99 (empties)

semx(1:length(x)) = 0; % fill array with 99 (empties)

semy(1:length(x)) = 0; % fill array with 99 (empties)

sem(10) = 0;

semx(10) = 0.9375;

semy(10) = 4.0625;

sem(12) = 0;

semx(12) = 0.9375;

semy(12) = 4.0625;

sem(4) = 1;

semx(4) = 3.8750;

semy(4) = 3.7500;

sem(7) = 1;

semx(7) = 3.8750;

semy(7) = 3.7500;

sem(21) = 1;

semx(21) = 3.8750;

semy(21) = 3.7500;

sem(13) = 2;

semx(13) = 0.8750;

semy(13) = 2.2500;

sem(14) = 2;

semx(14) = 0.8750;

semy(14) = 2.2500;

sem(16) = 3;

semx(16) = 2.3750;

semy(16) = 0.5625;

sem(18) = 3;

semx(18) = 2.3750;

semy(18) = 0.5625;

sem(20) = 4;

semx(20) = 4.6250;

semy(20) = 0.5625;

sem(19) = 4;

semx(19) = 4.6250;

semy(19) = 0.5625;

doorNodes = [5,6] % door nodes

for i =1:length(doorNodes)

sem(doorNodes(i)) = 50;

end

%% connections

% define connections for each node, using input mode of matlab

conn_challenge_restaurant = zeros(length(x),10);

for i = 1:length(x)

i

prompt="type Connections, in form [a,b] when more connections exists: "

output = input(prompt)

for j = 1:length(output)

conn_challenge_restaurant(i,j) = output(j)

end

end

%% Plot

close all;

figure()

text(x, y, string((1:numel(x))-1)); % python numbering (index 0:..)

%text(x, y, string((1:numel(x))-0)); % matlab numbering (index 1:...)

axis([0, 564, 0, 364])

hold on

% visualise bitmap with points

I = imread('RestaurantChallengeMapRotatedFlipH.png');

h = image(2,2,I);

uistack(h,'bottom')

% size of points, which forms the circle around node

sm = 10;

% radius of circle about node

R = 16; % 0.2*80, 20 centimeter radius

th = linspace(0,2*pi) ;

figure()

scatter(x,y,sm,"filled")

hold on

for i = 1:length(x)

x_c = R*cos(th)+x(i) ;

y_c = R*sin(th)+y(i) ;

scatter(x_c,y_c,1,"filled")

end

I = imread('RestaurantChallengeMapRotatedFlipH.png');

h = image(2,2,I);

uistack(h,'bottom')

%% generate JSON

S.nodes = struct("x", num2cell(x_m), "y", num2cell(y_m), "semantic",num2cell(sem),"semanticX",num2cell(semx),"semanticY",num2cell(semy))

S.connections = conn_challenge_restaurant

s = jsonencode(S,PrettyPrint=true)

if exist('grid.json', 'file')==2

prompt="do you want to remove old grid.json file? (y=1/n=0) "

output = input(prompt);

if output == 1

delete('grid.json');

disp("Caution, old grid.json file is deleted...")

end

end

fid=fopen('grid.json','w');

fprintf(fid, s);

disp("new grid.json is succesfully saved")

Initiation phase (run once)

The json file is imported with the loadConfigFile()and the grid is constructed withconstructGrid(). The grid information is placed in the global variable grid.

Global path finder phase

In globalNavigation(), there are a few steps which has to be taken for determining the best path as node sequence.

- First, the robot determines its own position in its map and the closest node is found. One assumed that there is no complete blocking object as a wall in between the robot and the closest node, i.e. sufficient nodes has to be properly placed.

- The goal node is found by finding the corresponding semantic number of the destination table

- The

aStar()is executed to find the best path - In case the output is [-1], there is no path available with the provided information. Possible causes: connections are not correctly defined or essential connections are removed. On the end, the output with the sequence of nodes to (and including) the goal node is written to the globalpathNodeIDsvariable. - The main function returns whether a path is found.

Cutting connections in case of blocking objects or close doors (updating the grid)

Note that the code for updating the grid is present, but not implemented in local navigation due to time constraints. So, it is not used. However, the plan for updating the grid will be explained below:

In case a node cannot be reached according to a certain trajectory, the grid information has to be revised by removing the connection between the last node and the not reachable node. Then, a new trajectory has to be determined while taking into account the removed connection. This should result in another trajectory to the goal, providing that the removed connection is not crucial. When defining the grid, one should take into account that a connection can be removed and that another trajectory still has to be possible. To remove a connection, the function cutConnections() can be used.

Note that a connection can be unavailable for a limited time. To handle that, the connections list has to be reset when a goal (e.g. table) is reached or when there are no other trajectories possible, due to all removed connections. In the grid, there are two connections list: one will be updated, while the other saves the initial connections list. To reset the connections list, the updated list is overwritten with the initial connections list. This can be done by using the resetConnections() function.

Known bug while selecting the table node

On the day before the demo day, we found out that the goal node selection is not perfect. Now, the node list is looped to find a node with the corresponding semantic integer for a certain table. So, the first node with this semantic integer is chosen directly. There are multiple nodes around the table which has the semantic integer for the specific table, but the lowest node number is always taken. Then, this node is used as finish node for the A* algorithm. For the demo, it was decided to only assign one node to each table. However during the demo, the specific node for the first table was giving problems. In case the selection of the goal node is able to take the closest table node, this issue was avoided by going to the closest table node on the side of the table.

The way to solve this bug is to generate different paths to all table nodes, check the cost to come for all available table nodes, and select the path with the lowest cost to come. Due to lack of time, this bug fix could not be implemented. In addition, when the cutConnections()is used, the robot can cut the connection when the table node is not reachable, which was the case during the demo. Then, another table node can be used to reach the table.

The local navigation is implemented based on the state diagram provided in the figure on the right, following the same structure as the main code. It acts like a finite state machine, within a finite state machine. Each state in the state diagram corresponds to a case in a switch function. After the one pass through switch-case function, the algorithm goes back to the main code to do localization and to get new scan data. When a global path is determined in the main model, this path, represented as a list of nodes called pathNodeList, serves as an input for the sub state diagram. Other important inputs are the current pose that comes from the localization algorithm, and the scan data, that comes from the main code.

INIT_LOCAL_NAV