Mobile Robot Control 2023 Group 3

Revision as of 18:32, 27 May 2023

Group members:

| Name | Student ID |
|---|---|
| Sjoerd van der Velden | 1375229 |
| Noortje Hagelaars | 1367846 |
| Merlijn van Duijn | 1323385 |

Exercise 1 (Don't crash)

Watch the implementation video here: https://drive.google.com/file/d/1HEQ291tJWxiCRycf9JcPCIs-tHaA3roN/view?usp=share_link

Main idea: The robot drives forward until it detects an object closer than a specified distance within its monitored range, and then it stops. This range is a cone extending 45 degrees to the left and 45 degrees to the right of the robot's forward direction.
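A minimal sketch of this stop check in Python, assuming the laser scan is available as a list of ranges together with the angle of the first beam (`angle_min`) and the angle between beams (`angle_increment`), in radians with 0 pointing straight ahead. The function names, the 0.3 m stop distance and the 0.3 m/s cruise speed are hypothetical placeholders, not values taken from the group's code.

```python
import math
from typing import Sequence


def obstacle_in_cone(ranges: Sequence[float], angle_min: float,
                     angle_increment: float, stop_distance: float,
                     half_width: float = math.pi / 4) -> bool:
    """True if a valid beam within +/- half_width of straight ahead (0 rad)
    measures less than stop_distance. Default half_width is 45 degrees."""
    for i, r in enumerate(ranges):
        angle = angle_min + i * angle_increment
        if abs(angle) > half_width:
            continue  # beam lies outside the monitored cone
        if not math.isfinite(r) or r <= 0.0:
            continue  # skip dropped or invalid readings (assumed convention)
        if r < stop_distance:
            return True
    return False


def forward_speed(ranges: Sequence[float], angle_min: float,
                  angle_increment: float, stop_distance: float = 0.3,
                  cruise_speed: float = 0.3) -> float:
    """Drive straight at cruise_speed; stop as soon as the cone is blocked.
    Both default values are hypothetical placeholders."""
    if obstacle_in_cone(ranges, angle_min, angle_increment, stop_distance):
        return 0.0
    return cruise_speed
```

Readings that are zero, negative or non-finite are skipped so that dropped beams do not trigger a false stop; whether that suits the real sensor is also an assumption.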

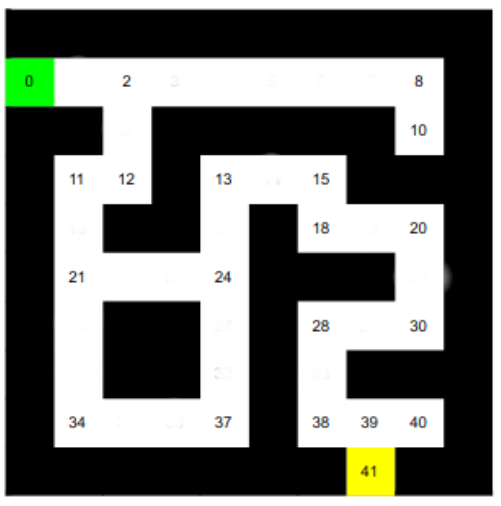

The code for the A* algorithm has been uploaded to the group's GitLab repository.

To optimize the A* algorithm, certain nodes can be discarded. These are nodes that have exactly two neighbors, located on opposite sides, so they are not the end of a path, a corner, or an intersection of paths. Such nodes add no information and can therefore be discarded. This leaves nodes only at intersections, corners, and path ends, and each straight path between them becomes a single step whose cost equals the length of that path, so the path costs that A* compares remain unchanged.
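The pruning step can be sketched as follows, assuming the map is a 4-connected occupancy grid given as a set of free `(row, col)` cells. A cell in the middle of a straight corridor has exactly two free neighbours on opposite sides and is dropped; every other cell (dead end, corner, junction) is kept. Each straight run between kept cells becomes one edge whose cost is its number of grid steps, and a textbook A* with a Manhattan heuristic then runs on the reduced graph. All names (`compress_grid`, `a_star`, `extra_keys`) are hypothetical; the group's actual implementation is the one on GitLab.

```python
import heapq
from typing import Dict, Iterable, List, Optional, Set, Tuple

Cell = Tuple[int, int]
DIRS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def free_neighbours(cell: Cell, free: Set[Cell]) -> List[Cell]:
    """Directions (dr, dc) in which the neighbouring cell is free."""
    r, c = cell
    return [(dr, dc) for dr, dc in DIRS if (r + dr, c + dc) in free]


def is_key_node(cell: Cell, free: Set[Cell]) -> bool:
    """Keep a cell unless it has exactly two free neighbours on opposite sides."""
    dirs = free_neighbours(cell, free)
    if len(dirs) != 2:
        return True  # dead end (1), junction (3 or 4) or isolated cell (0)
    (dr1, dc1), (dr2, dc2) = dirs
    return not (dr1 == -dr2 and dc1 == -dc2)  # adjacent sides means a corner


def compress_grid(free: Set[Cell],
                  extra_keys: Iterable[Cell] = ()) -> Dict[Cell, Dict[Cell, int]]:
    """Graph over key nodes only; extra_keys (e.g. start and goal) are forced in."""
    keys = {cell for cell in free if is_key_node(cell, free)} | set(extra_keys)
    graph: Dict[Cell, Dict[Cell, int]] = {k: {} for k in keys}
    for k in keys:
        for dr, dc in free_neighbours(k, free):
            r, c = k[0] + dr, k[1] + dc
            steps = 1
            while (r, c) not in keys:  # straight corridor cell: keep going
                r, c = r + dr, c + dc
                steps += 1
            graph[k][(r, c)] = steps  # one edge for the whole straight run
    return graph


def a_star(graph: Dict[Cell, Dict[Cell, int]], start: Cell,
           goal: Cell) -> Tuple[Optional[List[Cell]], float]:
    """Standard A* with a Manhattan-distance heuristic on the compressed graph."""
    def h(n: Cell) -> int:
        return abs(n[0] - goal[0]) + abs(n[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    g = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            cur: Optional[Cell] = node
            while cur is not None:  # walk back through the parents
                path.append(cur)
                cur = parent[cur]
            return path[::-1], cost
        if cost > g[node]:
            continue  # stale heap entry, a cheaper route was already found
        for nb, w in graph[node].items():
            new_cost = cost + w
            if new_cost < g.get(nb, float("inf")):
                g[nb] = new_cost
                parent[nb] = node
                heapq.heappush(open_heap, (new_cost + h(nb), new_cost, nb))
    return None, float("inf")
```

Start and goal cells are passed through `extra_keys` so they stay in the graph even if they lie in the middle of a corridor. Because every edge is a straight run of unit steps, the Manhattan distance never overestimates the remaining cost, so the heuristic stays admissible on the reduced graph.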

Exercise 3 (Corridor)

Implementation video here: https://1drv.ms/v/s!Ah8TJsKPiHVQgdpdH5tZMWa6fCyjDA?e=7TtBUS

Main idea: The algorithm uses the open-space approach. The robot finds the largest open space within its field of view and turns until its front points directly at it. It then drives towards that open space and adjusts its heading when new open spaces appear.
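One way to implement the open-space idea is sketched below, assuming the laser scan is a list of ranges plus the first beam angle `angle_min` and the beam spacing `angle_increment` (radians, 0 = straight ahead, positive = left). Here "open space" is taken to mean the longest run of consecutive beams whose range exceeds a threshold; that definition, the helper names and the parameter values (`open_threshold`, `k_turn`, `align_tolerance`, `max_speed`) are assumptions for illustration, not taken from the group's code.

```python
import math
from typing import Optional, Sequence, Tuple


def largest_open_gap(ranges: Sequence[float],
                     open_threshold: float) -> Optional[Tuple[int, int]]:
    """Return (first, last) beam index of the longest run of consecutive beams
    whose range exceeds open_threshold, or None if no beam is open."""
    best: Optional[Tuple[int, int]] = None
    start: Optional[int] = None
    # A trailing 0.0 sentinel closes a run that reaches the last beam.
    for i, r in enumerate(list(ranges) + [0.0]):
        if r > open_threshold:
            if start is None:
                start = i  # a new open run begins here
        elif start is not None:
            if best is None or (i - 1 - start) > (best[1] - best[0]):
                best = (start, i - 1)  # keep the widest run seen so far
            start = None
    return best


def steer_to_open_space(ranges: Sequence[float], angle_min: float,
                        angle_increment: float, open_threshold: float = 1.5,
                        k_turn: float = 1.0, align_tolerance: float = 0.1,
                        max_speed: float = 0.5) -> Tuple[float, float]:
    """Return (forward_speed, angular_speed) for one control step."""
    gap = largest_open_gap(ranges, open_threshold)
    if gap is None:
        return 0.0, 0.0  # nothing open in view: stand still
    centre_index = (gap[0] + gap[1]) / 2.0
    heading_error = angle_min + centre_index * angle_increment  # 0 = straight ahead
    if abs(heading_error) > align_tolerance:
        return 0.0, k_turn * heading_error  # rotate in place until facing the gap
    return max_speed, k_turn * heading_error  # drive on with small corrections
```

The command is recomputed from every new scan, so when a wider open space comes into view the target switches to it. Rotating in place before driving mirrors the turn-then-drive order described above; blending the two would be a possible alternative.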

Exercise 4 (Odometry data)

- Keep track of our location: A small piece of code was added that records the current position and the change in position since the previous reading.

- Observe the behaviour in simulation: With the default settings, the odometry is very reliable, but in uncertain mode it becomes very unreliable and the actual position of the robot ends up far from the measured position.

- Observe the behaviour in reality: As in uncertain mode, the odometry values measured on the real robot are not accurate.
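A minimal sketch of the location bookkeeping described in the first bullet, assuming each odometry reading provides a pose `(x, y, a)` with the heading `a` in radians. The `Pose`, `OdometryTracker` and `wrap_angle` names are hypothetical. The heading change is wrapped to [-pi, pi) so that crossing the +/-pi boundary does not show up as an almost full turn.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pose:
    x: float
    y: float
    a: float  # heading in radians


def wrap_angle(a: float) -> float:
    """Map an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


class OdometryTracker:
    """Remembers the last odometry pose and reports the change per update."""

    def __init__(self) -> None:
        self.previous: Optional[Pose] = None

    def update(self, current: Pose) -> Pose:
        """Store the new reading and return the change since the previous one."""
        if self.previous is None:
            delta = Pose(0.0, 0.0, 0.0)  # first reading: no change yet
        else:
            delta = Pose(current.x - self.previous.x,
                         current.y - self.previous.y,
                         wrap_angle(current.a - self.previous.a))
        self.previous = current
        return delta
```

Because each change is computed from raw odometry, any drift (as observed in uncertain mode and on the real robot) is passed on unchanged; the tracked position is only as good as the odometry itself.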