Mobile Robot Control 2023 Group 16

Group members:

| Name | Student ID |
|---|---|
| Marijn van Noije | 1436546 |
| Tim van Meijel | 1415352 |

Practical exercise week 1

1. On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? What do your own legs look like to the robot?

When rviz is opened, noise is visible in the laser data as small fluctuations in the positions of the laser points. Only objects at laser height are visible: a small cube in front of the robot that stays below the laser height, for example, is not observed. When we walk in front of the laser, our own legs look like two lines formed by laser points.

2. Go to your folder on the robot and pull your software.

Executed during practical session.

3. Take your example of dont crash and test it on the robot. Does it work like in simulation?

Executed during practical session. The code of dont crash worked immediately on the real robot.

4. Take a video of the working robot and post it on your wiki.

Link to video: https://youtu.be/4mXdJyXXidE

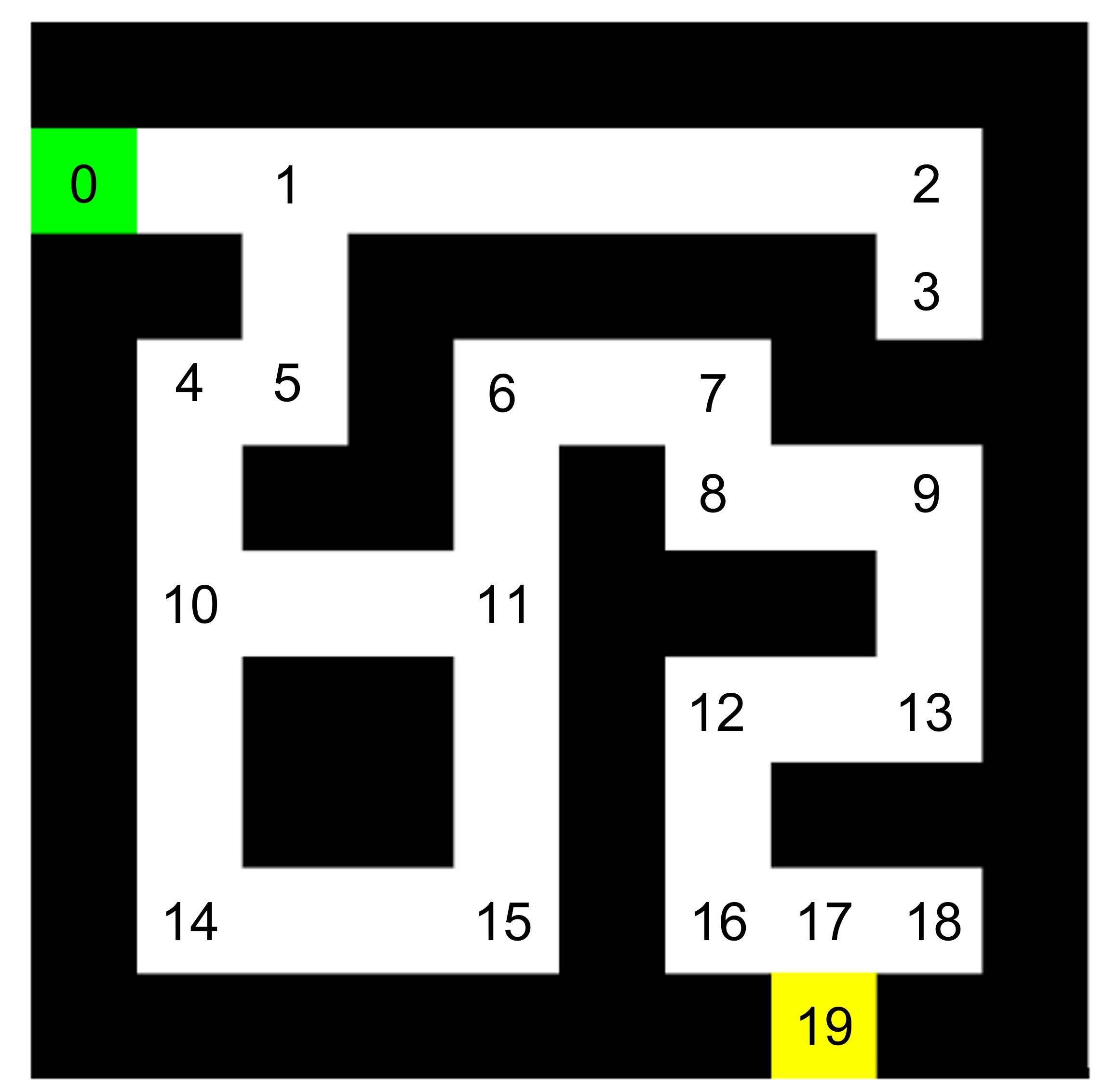

To make the A* algorithm more efficient, the number of nodes should be reduced. Only nodes where the route can change direction or where a path ends are kept: the corners, T-junctions and crossroads, and the end points of paths.

This is more efficient because the A* algorithm no longer has to consider the in-between nodes, which reduces computation time.
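The node selection described above can be sketched as follows. This is a minimal illustration, not the code used in the exercise: it assumes the map is a small occupancy grid encoded as strings (`.` for free, `#` for wall), and the names `key_nodes` and `EXAMPLE_GRID` are hypothetical. A free cell is kept unless it lies on a straight corridor segment, that is, unless it has exactly two free neighbours on opposite sides.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]  # (row, column)

# Hypothetical example map: '.' is free space, '#' is a wall.
EXAMPLE_GRID = [
    "#####",
    "#...#",
    "#.#.#",
    "#.#.#",
    "#####",
]


def key_nodes(grid: List[str]) -> Set[Cell]:
    """Return the free cells that are corners, junctions or path ends."""
    rows, cols = len(grid), len(grid[0])

    def free(r: int, c: int) -> bool:
        return 0 <= r < rows and 0 <= c < cols and grid[r][c] == "."

    nodes: Set[Cell] = set()
    for r in range(rows):
        for c in range(cols):
            if not free(r, c):
                continue
            up, down = free(r - 1, c), free(r + 1, c)
            left, right = free(r, c - 1), free(r, c + 1)
            neighbours = up + down + left + right
            # A straight corridor cell has exactly two free neighbours
            # that face each other; everything else is a decision point.
            straight = neighbours == 2 and ((up and down) or (left and right))
            if not straight:
                nodes.add((r, c))
    return nodes


if __name__ == "__main__":
    print(sorted(key_nodes(EXAMPLE_GRID)))
```

In the example map, 4 of the 7 free cells remain as nodes. For A* to still return correct path costs on the reduced graph, each edge between two kept nodes would need a cost equal to the length of the corridor segment it replaces.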

To move the robot through the corridor, the Artificial Potential Field algorithm is used. There are two types of forces in this algorithm: the attractive force and the repulsive force. The attractive force is calculated from the position of the goal relative to the position of the robot. The repulsive forces are calculated from the laser data of walls and obstacles. While the robot moves forward through the corridor, it uses the laser to scan where objects are positioned; only objects within a range of 1.5 meters are used in our approach. The repulsive forces result in a direction vector that points away from the obstacles. This vector is added to the direction vector towards the goal, and the sum is the final direction in which the robot wants to move. The angular speed is then set so that the robot turns towards this desired direction.
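The combination of the two force types can be sketched as below. This is a minimal sketch under stated assumptions, not the group's implementation: the gains `k_att` and `k_rep`, the classic inverse-distance repulsion formula, the proportional turn controller, and all function names are assumptions chosen for illustration. Only the 1.5 m influence range comes from the text. The goal and the laser beams are expressed in the robot frame, with angle 0 pointing straight ahead.

```python
import math
from typing import Sequence, Tuple


def potential_field_heading(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    goal_xy: Tuple[float, float],
    influence_radius: float = 1.5,  # range limit taken from the text
    k_att: float = 1.0,             # assumed attractive gain
    k_rep: float = 0.05,            # assumed repulsive gain
) -> float:
    """Return the desired heading in radians, in the robot frame."""
    goal_x, goal_y = goal_xy
    goal_dist = math.hypot(goal_x, goal_y)

    # Attractive part: unit vector towards the goal, scaled by k_att.
    fx = fy = 0.0
    if goal_dist > 0.0:
        fx = k_att * goal_x / goal_dist
        fy = k_att * goal_y / goal_dist

    # Repulsive part: every beam that hits something within the
    # influence radius pushes the robot away along that beam.
    for i, r in enumerate(ranges):
        if not (0.0 < r < influence_radius):
            continue  # also skips inf and NaN readings
        angle = angle_min + i * angle_increment
        magnitude = k_rep * (1.0 / r - 1.0 / influence_radius) / (r * r)
        fx -= magnitude * math.cos(angle)
        fy -= magnitude * math.sin(angle)

    return math.atan2(fy, fx)


def angular_velocity(heading: float, gain: float = 1.0, max_rate: float = 1.0) -> float:
    """Proportional turn command towards the heading, clamped to max_rate."""
    return max(-max_rate, min(max_rate, gain * heading))
```

With the goal straight ahead and a single close obstacle on the left, the returned heading is negative, so the robot turns right; with equally close walls on both sides the lateral components cancel and the heading stays near zero.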

Link to video of the simulation results: https://youtu.be/TG1GS70-G0A

Link to video of the real life experiment: https://youtu.be/_o3tM3mlx3E

Localisation assignment 1

Assignment 1: Keep Track of our location

The code was written and uploaded on GitLab. It prints the odometry data, including the difference between the last measurement and the new one.
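The uploaded code is not reproduced here. As a rough illustration of the idea, the difference between two consecutive odometry readings could be computed as in the sketch below. The `Pose` type and the function names are hypothetical, and wrapping the heading difference to the interval from -pi to pi is an assumption about how a jump across the angle boundary would be handled.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Pose:
    x: float      # meters
    y: float      # meters
    theta: float  # heading in radians


def wrap_angle(angle: float) -> float:
    """Map an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def pose_delta(prev: Pose, curr: Pose) -> Pose:
    """Difference between two odometry readings (current minus previous)."""
    return Pose(curr.x - prev.x, curr.y - prev.y, wrap_angle(curr.theta - prev.theta))


def report(readings: Iterable[Pose]) -> None:
    """Print each reading and, from the second one on, the change since the last."""
    prev = None
    for curr in readings:
        line = f"odom x={curr.x:.3f} y={curr.y:.3f} theta={curr.theta:.3f}"
        if prev is not None:
            d = pose_delta(prev, curr)
            line += f" | delta dx={d.x:.3f} dy={d.y:.3f} dtheta={d.theta:.3f}"
        print(line)
        prev = curr
```

Without the wrapping step, a robot turning through the angle boundary would report a heading change of almost a full revolution instead of a few hundredths of a radian.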

Assignment 2: Observe the Behavior in Simulation

1) The accuracy of the odometry data is evaluated by running a simulation in which the robot follows a square path, starting at the zero position. Along the path, the odometry data should continuously reflect the robot's actual position. Due to delays in the odometry data, the robot will probably never return exactly to its zero position. The accuracy is good as long as the odometry data match the actual position of the robot; when the odometry data report a position other than where the robot actually is, the accuracy is degraded.

2) With the regular odometry data, the path is followed accurately. After completing the path, the odometry data either return the robot's exact position or at least show that the robot is not exactly at the starting position. This can be seen in the video, where the robot stops slightly before the starting point, which is also reflected in the odometry data.

Using uncertain_odom results in a similar final position. The uncertainty is hardly visible, probably because the uncertainty level is low. In real life, however, the uncertainty will probably be larger.

3) No, we will not use this approach in the final challenge. In reality the same thing happens as with uncertain_odom, but the error also changes over time. The accuracy gets worse over time, which is not desirable for the final challenge.
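Why a small odometry error becomes a problem over time can be illustrated with a toy dead-reckoning model of the square path. This is a sketch under assumptions, not a model of uncertain_odom or of the real robot: it assumes the robot drives a perfect square while the odometry over-reports every 90 degree turn by a fixed `turn_error`, and the function names are hypothetical. The closure error is the distance between the odometry estimate and the start position after the robot has actually returned to the start.

```python
import math
from typing import Tuple


def drive_square(side: float, laps: int, turn_error: float = 0.0) -> Tuple[float, float, float]:
    """Integrate odometry over `laps` squares and return (x, y, theta).

    turn_error is an assumed constant error (radians) added to every turn.
    """
    x = y = theta = 0.0
    for _ in range(4 * laps):
        # Drive one side in the currently estimated direction.
        x += side * math.cos(theta)
        y += side * math.sin(theta)
        # Turn 90 degrees, plus the assumed odometry error.
        theta += math.pi / 2 + turn_error
    return x, y, theta


def closure_error(pose: Tuple[float, float, float]) -> float:
    """Distance of the estimated end position from the start position."""
    return math.hypot(pose[0], pose[1])


if __name__ == "__main__":
    for laps in (1, 2, 3):
        err = closure_error(drive_square(1.0, laps, turn_error=0.01))
        print(f"laps={laps} closure error={err:.4f} m")
```

In this model the estimate closes the square when `turn_error` is zero, while with a small nonzero error the closure error grows from lap to lap over the first laps. This matches the observation that the odometry accuracy gets worse the longer the robot drives.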

Video of simulation without uncertainty: https://youtu.be/DbtSd-Bld9s

Video of simulation with uncertainty: https://youtu.be/xS3cpYGw--8

Assignment 2: Observe the Behavior in Reality

1) In reality, the robot has more uncertainties, and when the experiment is run multiple times, the error is different each time. This means that the odometry data cannot be relied on. This can be seen in the video below: the robot ends up at the same position where it started, but according to the odometry data it is slightly in front of the starting position and turned slightly to the right. This is not the case in real life; the difference is due to wheel slip.

Video of real life experiment: https://youtu.be/e56ykR_f0xw