Mobile Robot Control 2020 Group 8

Group information

Group Members

| Name | Student Number |
|---|---|
| J.J.W. Bevers | 0904589 |
| J. Fölker | 0952554 |
| B.R. Herremans | 0970880 |
| L.D. Nijland | 0958546 |
| A.J.C. Tiemessen | 1026782 |
| A.H.J. Waldus | 0946642 |

Meeting Summaries

A logbook with a summary of the contents of each meeting is documented and can be found here

Course description

Introduction

As the COVID-19 pandemic has shown, our healthcare system is of high importance. However, there are shortcomings for which an efficient solution is required. At present, hospitals are occupied by many patients, so doctors and nurses have limited time for human-to-human contact with patients. By automating simple tasks, doctors and nurses gain more time for contact with patients. One option is to use an autonomous embedded robot to assist in everyday tasks such as getting medicine, moving blood bags or syringes, and getting food and drinks. During this course, software for such an autonomous robot is developed. The goal is to complete two different challenges in a simulated environment: the Escape Room Challenge and the Hospital Challenge.

PICO

The mobile robot used is called PICO and is shown in Figure 1. PICO consists of multiple components. This year's edition of the course is completely based on the PICO simulator (as developed by the project team) and thus does not include implementation of the software on the actual robot. Therefore, only the components relevant for simulations are briefly explained. For actuation purposes, PICO has three omni-wheels, which make for a holonomic base. A Laser Range Finder (LRF) is used by PICO to scan its surroundings, providing PICO with distance data. Furthermore, the wheel encoder data is used to keep track of PICO's translations and rotation (odometry).

Escape Room Challenge

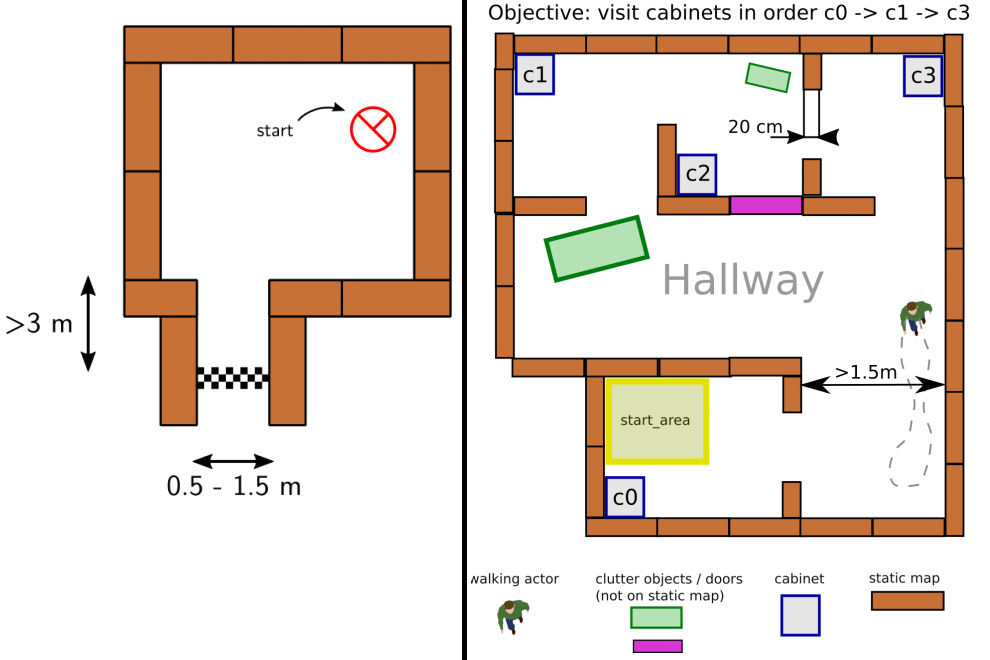

The goal of this challenge is to navigate PICO, through a corridor, out of a rectangular room of unknown dimensions without bumping into walls. An example of an environment map that might be encountered during this challenge is shown on the left side of Figure 2. PICO has an unknown initial position within this room. Thus, PICO first needs to detect and recognize the exit. When the exit is found, PICO has to exit the room as fast as possible by moving through the corridor. The challenge is finished when the rear wheels of PICO cross the finish line entirely. In the section Escape Room Challenge, the approach is explained in more detail. There, the algorithms used and their implementations are explained. Moreover, the results of the challenge are shown and discussed.

Hospital Challenge

This challenge represents an example of the application of an autonomous robot in a more realistic environment. In this challenge, PICO has to operate in a hospital environment. The environment will consist of multiple rooms, a hallway and an unknown number of static and dynamic objects. In the rooms, cabinets are present that contain medicine. PICO will first need to recognize its initial position in the known environment. Next, PICO has to plan a path to various cabinets in a predefined order, representing the collection and delivery of medicine. When executing this path, PICO needs to prevent collisions with walls and with static and dynamic objects. An example environment is shown on the right side of Figure 2. In Section Hospital Challenge, more information will be provided.

Design document

In this section, an adapted version of the design document is found which incorporates received feedback. The original design document can be found in Section Deliverables. The design document serves as a generic document applicable to both challenges. The functions listed are an initial guess of what is thought to be needed before actually working on the software. When producing the software, the actual functions arose. These functions are listed and explained in the Sections Escape Room Challenge and Hospital Challenge.

Requirements and Specifications

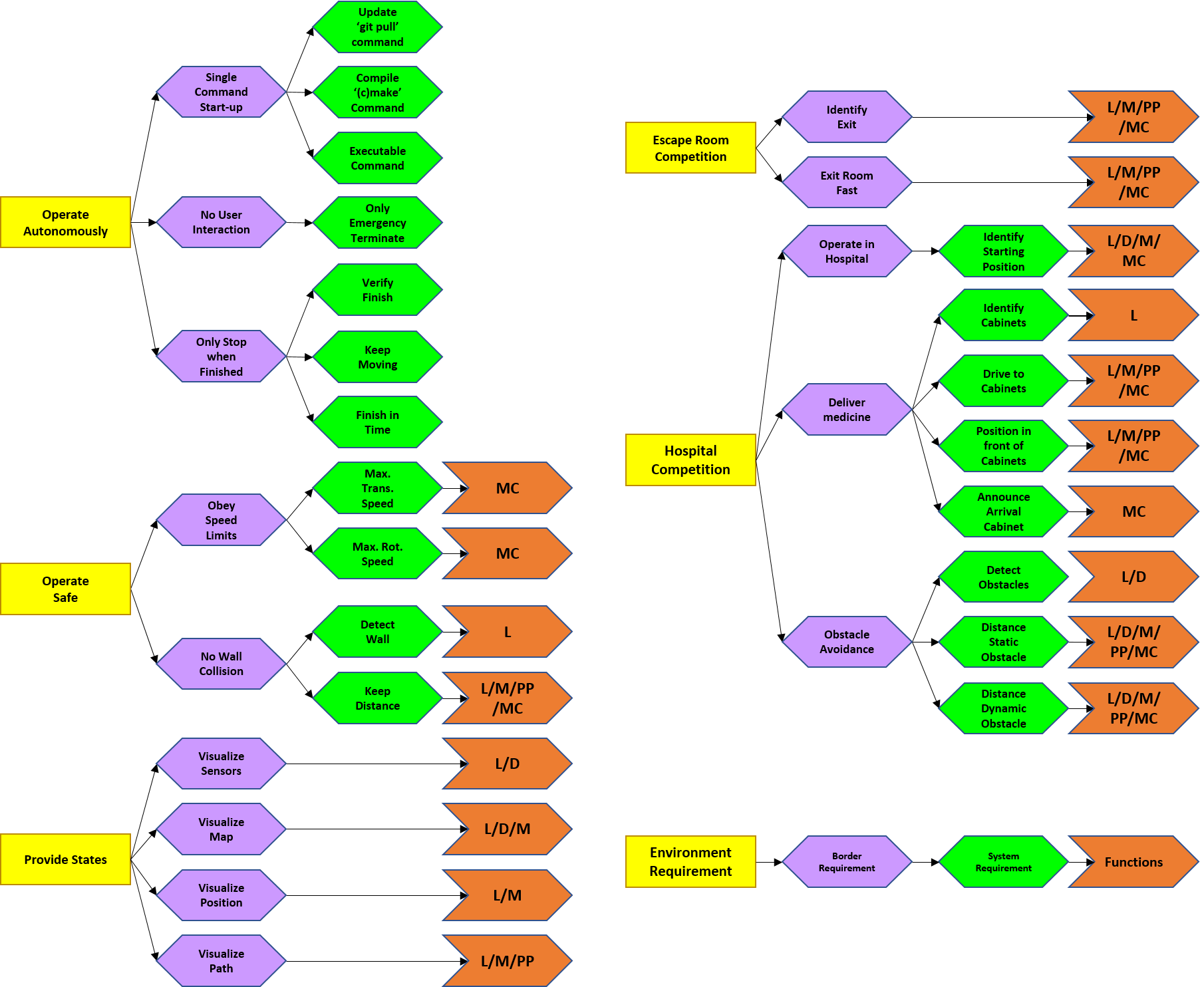

A distinction is made between general and competition-specific requirements. Three stakeholders are considered: the hospital employees, the patients and the software engineers. In ascending order, the requirements are subdivided into environment requirements, border requirements, system requirements and function types. Five function types are introduced: Localization (L), Detection (D), Mapping (M), Path Planning (PP) and Motion Control (MC). These are elaborated in the next section. The requirements are shown in Figure 3 and elaborated below. Note the legend located at the bottom right. First, the five environment requirements will be discussed in more detail. Even though the environment requirements are shown as separate parts, they do relate to each other. The first three environment requirements correspond to the functionality of PICO and are thus relevant for both challenges. The fourth and fifth environment requirements are specifically defined for the Escape Room Challenge and the Hospital Challenge, respectively.

- PICO should operate autonomously: No interaction is allowed, unless an emergency stop is needed. To start the software, the ’git pull’ command is used to update, the ’cmake’ and ’make’ commands are used for compiling and one executable is used to start execution.

- PICO can only stop executing when its task is finished: Therefore, it must be able to verify when it is finished. Also, PICO cannot be stationary for 30 consecutive seconds and the task must be finished in time. PICO should operate safely. In order to do so, it must obey speed limits. Maximum translational and rotational speeds of 0.5 m/s and 1.2 rad/s, respectively, are set.

- PICO cannot bump into walls: Therefore, PICO should be able to detect walls and keep a certain distance. PICO should provide information on its state. Therefore, PICO should visualize its sensor data, produce and visualize a map and its current position, and finally visualize its produced path.

- PICO should finish the Escape Room Competition: To succeed, PICO must be able to identify the exit and it should exit as fast as possible. A strategy is explained in the next section.

- PICO should finish the Hospital Competition: PICO should deliver medicine. Therefore, PICO must recognize cabinets, drive to them, position in front of them facing towards them and afterwards produce a sound signal. PICO should operate in the hospital environment. Therefore it must recognize its (initial) position in the provided map. Finally, PICO should not bump into static or dynamic objects. Thus PICO should detect an object within a 10 meter range and the distance between PICO and a static or dynamic object must be at least 0.2 m and 0.5 m respectively.

The hospital employees are involved in the first, second and fifth requirement, the patients in the second and fifth and the software engineers in the first, third and fourth.

Functions

The finite state machine displayed in Figure 4 depicts the designed behavior of PICO, allowing it to complete the two challenges. The states are:

- State I - Initializing: PICO performs an initial scan of its surroundings and maps this data to find its position on the map. It is possible that insufficient information has been gathered to do this when, for instance, all objects are out of reach of the laser range finder.

- State II - Exploring: An exploration algorithm, such as a potential field algorithm or a rapidly-exploring random tree algorithm, is used to explore the environment further. When PICO’s position within the map is known and the exploration algorithm has been executed for T seconds, the transition to State III occurs.

- State III - Planning: After PICO has localized itself on the map, enough information has been gathered to compute a path towards a target. An algorithm is used to compute a state trajectory, that is then translated into control actions.

- State IV - Executing: During this state a control loop is executed which controls PICO towards the reference coordinates (x,y) by measuring position and actuating velocities. When PICO runs into an unexpected close proximity object, the state is transferred to State V. When PICO runs into an unexpected distant proximity object, the state is transferred to State III, such that a new path can be planned before PICO arrives at this object.

- State V - Recover: During the recover state, PICO has encountered a close proximity object which it did not expect. PICO then immediately stops and, when completely stopped, it transitions to State II, such that the exploration algorithm can move PICO away from this unexpected object for T seconds. The loop (State II, State III, State IV, State V) can be executed repeatedly and can be counted, such that T increases when more loops are encountered.

- State VI - Reached: After the path execution has been finished and PICO has reached the end of the path, PICO stops moving as it has reached its target location.
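The transitions described in the list above can be sketched as a small transition function. This is only an illustrative sketch: the names `State` and `next_state` and all keyword flags are hypothetical, and the transition from State I to State II is an assumption, since the text does not state it explicitly.

```python
from enum import Enum, auto


class State(Enum):
    INITIALIZING = auto()  # State I
    EXPLORING = auto()     # State II
    PLANNING = auto()      # State III
    EXECUTING = auto()     # State IV
    RECOVER = auto()       # State V
    REACHED = auto()       # State VI


def next_state(state, *, localized=False, explored_long_enough=False,
               path_ready=False, close_object=False, distant_object=False,
               path_finished=False, stopped=False):
    """Return the successor state for one evaluation of the state machine."""
    if state is State.INITIALIZING:
        # Assumption: the initial scan always hands over to exploration.
        return State.EXPLORING
    if state is State.EXPLORING:
        # Leave exploration once localized and T seconds have elapsed.
        if localized and explored_long_enough:
            return State.PLANNING
        return state
    if state is State.PLANNING:
        return State.EXECUTING if path_ready else state
    if state is State.EXECUTING:
        if close_object:
            return State.RECOVER   # unexpected object nearby: stop first
        if distant_object:
            return State.PLANNING  # replan before arriving at the object
        if path_finished:
            return State.REACHED
        return state
    if state is State.RECOVER:
        # Only resume exploring once PICO has come to a complete stop.
        return State.EXPLORING if stopped else state
    return state  # REACHED is terminal
```

Checking the close-proximity flag before the distant one reflects the idea that an emergency stop takes priority over replanning; this ordering is a design choice of the sketch, not something the design document prescribes.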

| FUNCTION | DESCRIPTION |
|---|---|
| Localization | |
| InitialScan() | PICO makes a rotation of 190 [deg] to get a full 360 [deg] scan of the environment |
| GetMeasurement() | Read out the laser range finder sensor data |
| Detection | |
| ProximitySense() | Detects if an unexpected object is in close proximity |
| DistantSense() | Detects if an unexpected object is in distant proximity |
| Mapping | |
| UpdateMap() | Write measured data to the Cartesian 2D world map used by the path planner |
| UpdatePotField() | Write measured data into the potential field map used by the potential field algorithm |
| GetMap() | Retrieve the current 2D Cartesian world map and the (x, y) position of PICO |
| GetPotField() | Gets gradients from the potential field |
| Path Planning | |
| GetGoal() | Retrieve Cartesian coordinates of the goal (xg, yg) with respect to the map |
| PlanPath() | Path planner algorithm using the 2D world map to plan a state trajectory (x, y array) |
| ComputePotState() | Uses gradients from the potential field as input to compute a state reference ẋ, ẏ, θ̇ |
| ReadPath() | Get the current (x, y) goal of the path array |
| Motion Control | |
| Move() | Send position reference (x, y, θ) to PICO. Contains position control loop |
| StopMoving() | All movements of PICO are stopped: ẋ, ẏ, θ̇ = 0 |
| FullRotation() | PICO makes one full rotation |
| ExecutePath() | Translates the (x, y) path reference into controller actions by calling Move(). Loops over all (x, y) to achieve position reference tracking |

Software Architecture

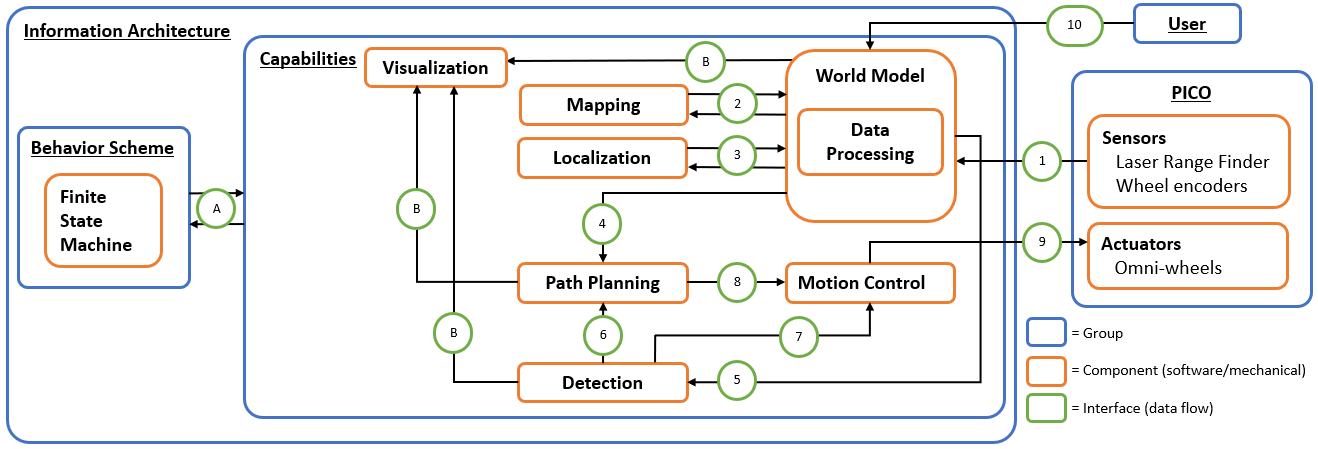

The proposed information architecture is depicted in Figure 5. It shows a generic approach, so note that a specific challenge may require additional functionalities and interfaces. Between the software and mechanical components, information is shared via interfaces marked in green and labeled with an arbitrary number or letter. These interfaces dictate what information is set by which component and what information is shared among which components. In the central section, this schematic overview visualizes what capabilities are intended to be embedded in the software. Firstly, interface 10 represents the data received from the user, which may include a JSON file with a map and markers, depending on the challenge. On the right, interface 1 between PICO (including the provided software layer) and the designed software shows the incoming sensor data, i.e. structs with distance data from the Laser Range Finder (LRF) and odometry data based on the omni-wheel encoders. This data is (possibly) processed and used to map PICO’s environment (mapping), to estimate PICO’s position and orientation (localization), and to detect static/dynamic obstacles (detection), via interfaces 2, 3 and 5. This knowledge is stored in the world model, serving as a central database. Via interface 4, a combination of the current map, PICO’s pose and the current (user-provided) destination is sent to the path planning component, to plan an adequate path. This path is sent over interface 8, in the form of reference data for the (x,y,θ)-coordinates. The motion control component will then ensure that PICO follows this path by sending velocity inputs over interface 9. Simultaneously, the detection component monitors the current path online for static/dynamic obstacles. If an obstacle is detected, interfaces 6 and 7 serve to feed this knowledge back to either the path planning component to adjust the path or directly to motion control to make a stop.

On the left, interface A between the behavior scheme and the capabilities shows that the high-level finite state machine manages what functionality is to be executed and when. Interfaces B represent the data that is chosen to be visualized to support the design process, i.e. the visualization serves as a form of feedback for the designer. The information that is visualized depends heavily on the design choices later on in the process and, therefore, this will not be explained further.

Implementation

The components described above are intended to be explicitly embedded in the code through the use of classes. The functionality that belongs to a certain component will then be implemented as a method of that specific class. At this stage of the design process, the specific algorithms/methods that will be used for the functionalities are not set, but some possibilities will be mentioned here. Simultaneous localization and mapping (SLAM) algorithms, based on Kalman filters or particle filters, could be used for the mapping and localization components. Moreover, for path planning, Dijkstra’s algorithm, the A* algorithm or the rapidly-exploring random tree algorithm can be used. For motion control, standard PI(D) control, possibly with feedforward, can be used to track the reference trajectory corresponding to the computed path. Additionally, for detection, artificial potential fields can be used to stay clear of obstacles.

Escape Room Challenge

In this section, the strategy for the Escape Room Challenge is discussed, as well as the algorithms that are used. The software for this challenge can be roughly divided into three main parts: the finite state machine, the potential field algorithm and the exit detection algorithm. These will be covered next.

Finite state machine

"describe strategy on highest abstraction level, i.e. initialization, exploring, executing, reaching"

Potential field algorithm

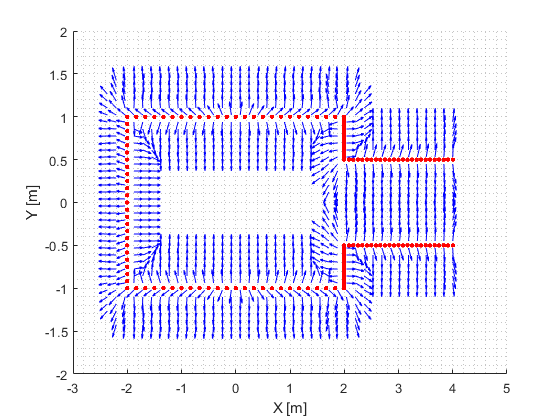

During the Escape Room Challenge, the direction and magnitude of PICO’s velocity are governed by the potential field algorithm. The potential field within a mapped environment consists of a repulsive field and an attractive field. An example of a gradient field of the repulsive field is shown in Figure 6. For the Escape Room Challenge, however, it is chosen not to use a world map, as this would unnecessarily complicate computations. Therefore, the repulsive field vector at PICO’s current position is computed from all current LRF data, i.e. the gradient is computed w.r.t. PICO’s local coordinate frame based on its current measurements. For the attractive field, the current goal relative to PICO’s local coordinate frame is required. This goal is provided by the exit detection algorithm. The vector corresponding to the gradient of the attractive field is computed by means of a convex parabola in the (X,Y) plane w.r.t. the local coordinate frame of PICO, which has its minimum at the goal relative to PICO’s local coordinate frame. Both the repulsive and the attractive field vectors are normalized to have a magnitude of 1. This prevents the repulsive field from becoming stronger merely because PICO sees more data points on a wall, which would distort the result.
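As an illustration of the two normalized field vectors, a minimal sketch is given here. The 1/d² weighting of each LRF point in `repulsive_vector` is an assumption, as the text does not specify how the repulsive contributions are weighted; all function names are hypothetical.

```python
import math


def normalize(vx, vy):
    """Scale a 2D vector to unit length; a zero vector stays zero."""
    norm = math.hypot(vx, vy)
    return (vx / norm, vy / norm) if norm > 0.0 else (0.0, 0.0)


def repulsive_vector(ranges, angles):
    """Sum contributions pointing away from every LRF point, then normalize.

    Assumption: each point is weighted by 1/d^2, so nearby points dominate.
    """
    rx = ry = 0.0
    for d, a in zip(ranges, angles):
        if d <= 0.0:
            continue  # skip invalid readings
        rx -= math.cos(a) / d ** 2
        ry -= math.sin(a) / d ** 2
    return normalize(rx, ry)


def attractive_vector(goal_x, goal_y):
    """Normalized negative gradient of the convex parabola
    U(x, y) = 0.5 * ((x - goal_x)^2 + (y - goal_y)^2),
    evaluated at the origin of the local frame (PICO's own position).
    """
    return normalize(goal_x, goal_y)
```

Because both results are normalized, the number of LRF points that hit a wall only influences the direction of the repulsive vector and not its magnitude.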

The normalized field vectors are then used in the motion control of PICO. In order for PICO to always be able to see the goal, and thus obtain an estimate of the goal relative to PICO’s coordinate frame, the orientation of PICO is controlled such that it always aligns with the direction of the attractive field vector. This control is a feedback loop, with the error equal to the angle of the attractive field vector w.r.t. PICO’s X-axis and a simple gain as controller.
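The orientation loop can be sketched as a proportional controller. The gain value and the function name `heading_rate` are hypothetical; clamping to 1.2 rad/s is added here only to respect the rotational speed limit from the requirements and is not described in the text above.

```python
import math


def heading_rate(att_x, att_y, gain=1.5, max_rate=1.2):
    """Proportional heading control towards the attractive field vector.

    The error is the angle of the attractive vector w.r.t. the local X-axis.
    gain is a hypothetical tuning value; max_rate is the 1.2 rad/s limit.
    """
    error = math.atan2(att_y, att_x)
    rate = gain * error
    return max(-max_rate, min(max_rate, rate))
```

When the attractive vector already points along the X-axis, the error and thus the commanded rotation are zero.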

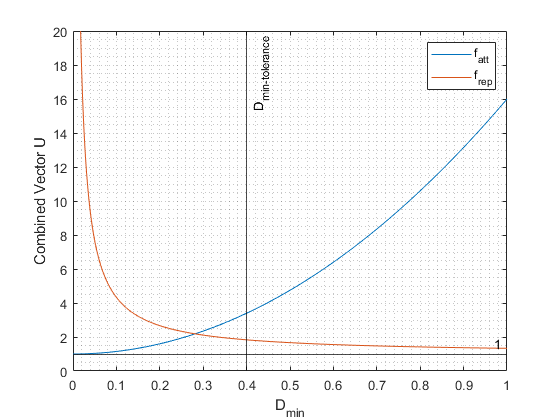

The velocity in X and Y w.r.t. PICO’s local coordinate frame is actuated using both the normalized attractive and repulsive field vectors. In order to combine these vectors, the minimum distance to an object “Dmin” is measured. This measurement is used to compute the contribution factors of the attractive and repulsive field as follows. When Dmin is smaller than a tolerance of 0.4, these factors are multiplied with the corresponding field vectors and then the average is used. This way, the repulsive field is stronger when PICO is near a wall. The 1/x function allows PICO to drive fairly close to a wall without colliding with it. Furthermore, when Dmin is larger than the tolerance of 0.4, the X-component of the resulting vector consists of the attractive and repulsive field vectors, each with a factor of 1, and in the Y-direction both factors are likewise equal to 1.

This yields a new vector relative to PICO’s local coordinate frame. This vector can still have a magnitude smaller or larger than 1. When its magnitude is larger than 1, it is limited to 1. The X and Y components of this vector can then be multiplied with PICO’s maximum velocity, such that the maximum velocity is never exceeded. PICO can still drive more slowly, for example when moving through a narrow corridor in which the repulsive field is very strong.
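The saturation step can be illustrated with a few lines of code. The function name `velocity_command` is hypothetical; the maximum speed of 0.5 m/s is taken from the requirements.

```python
import math

MAX_SPEED = 0.5  # m/s, translational speed limit from the requirements


def velocity_command(vx, vy, max_speed=MAX_SPEED):
    """Limit the combined field vector to unit length, then scale it.

    Vectors shorter than 1 are left untouched, so PICO can drive slower
    than max_speed, but never faster.
    """
    magnitude = math.hypot(vx, vy)
    if magnitude > 1.0:
        vx, vy = vx / magnitude, vy / magnitude
    return vx * max_speed, vy * max_speed
```

For example, the vector (3, 4) has magnitude 5 and is first reduced to (0.6, 0.8), which gives a command of approximately (0.3, 0.4) m/s, i.e. exactly the speed limit.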

During testing, it was noticed that the exit detection algorithm can sometimes rapidly switch between detected targets relative to PICO. When this rapid switching occurs, PICO should not rapidly adjust its direction. Therefore, when this rapid switching is detected, the vector corresponding to the gradient of the attractive field is filtered using a low-pass filter with a relatively low bandwidth. When no switching is detected, i.e. in the regular case, this vector is filtered using a low-pass filter with a relatively high bandwidth, allowing for faster adjustment of this vector.
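A possible form of such a filter is a discrete first-order low-pass filter with two smoothing factors. This is a sketch under assumptions: the class name `SwitchingLowPass`, the smoothing factors and the way switching is signalled (a boolean argument) are all hypothetical, since the text does not specify the filter type or how switching is detected.

```python
class SwitchingLowPass:
    """First-order low-pass filter for a 2D vector with two bandwidths."""

    def __init__(self, alpha_fast=0.8, alpha_slow=0.1):
        # alpha near 1 follows the input quickly (high bandwidth);
        # alpha near 0 smooths heavily (low bandwidth).
        self.alpha_fast = alpha_fast
        self.alpha_slow = alpha_slow
        self.state = None

    def update(self, vx, vy, switching):
        """Filter one sample; use the slow setting while targets switch."""
        if self.state is None:
            self.state = (vx, vy)  # initialize on the first sample
            return self.state
        alpha = self.alpha_slow if switching else self.alpha_fast
        sx, sy = self.state
        self.state = (sx + alpha * (vx - sx), sy + alpha * (vy - sy))
        return self.state
```

With `switching=True`, a sudden jump of the target only moves the filtered vector by a small fraction per sample, which prevents abrupt changes of direction.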

Of course, it is possible that at a certain time instance PICO obtains a relative goal, while at the next time instance the exit detection algorithm does not provide a goal. Therefore, each time the potential field algorithm obtains a goal, it captures the orientation of its local coordinate frame w.r.t. the world coordinate frame based on odometry. Furthermore, the distance to the goal relative to this local coordinate frame is logged. In the next iteration, when the potential field algorithm is called with a flag ‘0’, corresponding to the case that no relative goal is provided at this call, PICO uses the odometry data to move to the last known goal w.r.t. the last known coordinate frame. During this motion, PICO maintains the orientation of the last known attractive field vector from when a goal was still provided. Of course, when the distance to its last known coordinate frame becomes larger, the position error becomes larger. This way, when the exit detection algorithm initially provides a goal which lies 1 [m] in front of PICO, and then can no longer detect this relative goal, PICO will move forward and measure using the odometry until it has traveled the distance of 1 [m] w.r.t. PICO’s local coordinate frame at the time the goal was provided. When this distance has been traveled, PICO stops moving. This way, some additional redundancy has been built in, such that PICO can still move when no goal can be provided at a certain time instance.
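The fallback on odometry can be sketched as follows. The class `GoalMemory` and its methods are hypothetical names; the sketch assumes that odometry provides a pose (x, y, θ) in a fixed frame and it ignores odometry drift.

```python
import math


class GoalMemory:
    """Remember the last relative goal together with the odometry pose."""

    def __init__(self):
        self.anchor = None  # odometry pose (x, y, theta) when goal was seen
        self.goal = None    # goal (x, y) in the local frame at that moment

    def store(self, odom_pose, goal_local):
        """Call whenever exit detection provides a relative goal."""
        self.anchor = odom_pose
        self.goal = goal_local

    def remaining(self, odom_pose):
        """Return the stored goal expressed in the current local frame."""
        if self.anchor is None:
            return None  # no goal has ever been provided
        x0, y0, th0 = self.anchor
        gx, gy = self.goal
        # Goal in the odometry frame.
        wx = x0 + math.cos(th0) * gx - math.sin(th0) * gy
        wy = y0 + math.sin(th0) * gx + math.cos(th0) * gy
        # Express the remaining displacement in the current robot frame.
        x, y, th = odom_pose
        dx, dy = wx - x, wy - y
        return (math.cos(th) * dx + math.sin(th) * dy,
                -math.sin(th) * dx + math.cos(th) * dy)
```

In the example from the text, a goal 1 m ahead is stored; after driving 0.4 m straight ahead, `remaining` returns approximately (0.6, 0), and PICO stops once this vector reaches zero.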

Exit detection

For the Escape Room Challenge, it is not required to compute PICO's global location and orientation, i.e. its global pose, within the room. In fact, this is not even possible, because the dimensions of the room are not known and the room may also not be perfectly rectangular. Even if it were, it is unlikely that it would be possible to uniquely compute the robot pose from the LRF data. Since the sole goal of this challenge is to drive out of the room via the corridor, the exit detection algorithm must detect where the exit is relative to PICO's current position. This information then serves as an input to the potential field algorithm to compute the attractive field. Looking ahead, it was decided to already implement as much intelligence as possible that may later function as part of the localization component of the software for the final Hospital Challenge. For this reason, instead of simply detecting gaps in the LRF data, the exit detection algorithm incorporates data pre-processing, data segmentation, line fitting, corner and edge computations, and target computations. These parts of the exit detection algorithm will be further elaborated below.

Data pre-processing

Firstly, the LRF data is pre-processed by simply filtering out data that lies outside of the LRF range, i.e. below its smallest and above its largest measurable distances, 0.01 and 10 m, respectively. It is then converted to Cartesian coordinates: x_i = ρ_i*cos(θ_i) and y_i = ρ_i*sin(θ_i), where ρ_i is the measured distance and θ_i the corresponding angle.
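The pre-processing step can be sketched as follows. The function name `preprocess` is hypothetical, and the sketch assumes that the measurement angles are evenly spaced between the first and last beam (-2 rad and 2 rad by default).

```python
import math


def preprocess(ranges, angle_min=-2.0, angle_max=2.0, r_min=0.01, r_max=10.0):
    """Drop out-of-range LRF readings and convert the rest to Cartesian.

    Assumes evenly spaced beams between angle_min and angle_max [rad].
    Returns a list of (x, y) points in PICO's local coordinate frame.
    """
    n = len(ranges)
    step = (angle_max - angle_min) / (n - 1) if n > 1 else 0.0
    points = []
    for i, rho in enumerate(ranges):
        if r_min <= rho <= r_max:
            theta = angle_min + i * step
            points.append((rho * math.cos(theta), rho * math.sin(theta)))
    return points
```

The angle is computed from the original beam index, so removing invalid readings does not shift the angles of the remaining points.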

Data segmentation

The procedure that is applied to divide the LRF data into segments is inspired by the well-known image processing technique, split and merge segmentation. It is based on the criterion that, if all data points in a considered range lie within a threshold distance to a straight line from the first to the last data point of that range, a segment is found. This approach is especially suited in the context of the two challenges, because the walls of PICO's environment are (sufficiently) straight. Moreover, the static objects of the Hospital Challenge are (sufficiently) rectangular. The iterative procedure encompasses the following steps and computations:

1. Start with the first and last data points, corresponding to θ = -2 rad and θ = 2 rad, respectively (assuming these have not been filtered out during pre-processing). Set i_start and i_end, respectively, equal to the corresponding indices.

2. Compute the perpendicular distances, d_i, of all other data points to a straight line from data point i_start to i_end: $d_i = \frac{\left| (y_2 - y_1) x_i - (x_2 - x_1) y_i + x_2 y_1 - y_2 x_1 \right|}{\sqrt{(y_2 - y_1)^2 + (x_2 - x_1)^2}}$. Here, (x_1,y_1) and (x_2,y_2) are the Cartesian coordinates of data points i_start and i_end, respectively.

3a. Compute the maximum of all distances computed at step 2 and the corresponding index. If this distance is below a certain threshold (which accounts for measurement errors), go to step 4; otherwise, go to step 3b.

3b.

4. A new segment is found.
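Steps 2 and 3a can be sketched in code as shown here. Step 3b is deliberately not included, because it is not described above. The function names and the threshold value of 0.05 m are hypothetical.

```python
import math


def perpendicular_distance(p, p1, p2):
    """Distance from point p to the straight line through p1 and p2."""
    (x, y), (x1, y1), (x2, y2) = p, p1, p2
    numerator = abs((y2 - y1) * x - (x2 - x1) * y + x2 * y1 - y2 * x1)
    denominator = math.hypot(y2 - y1, x2 - x1)
    if denominator == 0.0:
        # Degenerate line (p1 == p2): fall back to point-to-point distance.
        return math.hypot(x - x1, y - y1)
    return numerator / denominator


def max_deviation(points, i_start, i_end):
    """Step 3a: largest distance and its index between i_start and i_end."""
    best_d, best_i = 0.0, i_start
    for i in range(i_start + 1, i_end):
        d = perpendicular_distance(points[i], points[i_start], points[i_end])
        if d > best_d:
            best_d, best_i = d, i
    return best_d, best_i


def is_segment(points, i_start, i_end, threshold=0.05):
    """True if all intermediate points lie within threshold of the line."""
    d_max, _ = max_deviation(points, i_start, i_end)
    return d_max < threshold
```

The index returned by `max_deviation` identifies the data point that deviates most from the line, which is the information step 3a passes on when the threshold is exceeded.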

Line fitting

Corners and edges computations

Target computations

Hospital Challenge

Deliverables

Design Document

In the design document, a generic approach for the Escape Room Competition and the Hospital Competition is introduced. Requirements are proposed, which are clarified by means of specifications. Based on these, a finite state machine is designed. In each state, groups of functions are proposed. This functionality is, in turn, structured in an information architecture which covers the components and the interfaces. Undoubtedly, this document will need to be updated throughout the remainder of the course; its current content mainly serves as a guideline. Since the Escape Room Competition is the first challenge, functional specifics may be more focused on this goal. Because software design is use-case dependent, the current approach will need to be adapted and improved for the Hospital Competition. The design document deliverable can be found here.