Embedded Motion Control 2019 Group 9

Group members

Merijn Floren | 0950600

Nicole Huizing | 0859610

Janick Janssen | 0756364

George Maleas | 1301527

Merijn Veerbeek | 0865670

Design Document

The Initial Design document can be found here: File:Initial Design Group9.pdf

The PowerPoint presentation about the design is in this file: File:Presentation initial design.pdf

Meetings

On 1 May the first meeting of the team took place, also attended by our tutor Marzieh. The purpose of the meeting was to discuss the content of the design document and to derive a first strategy for the motion of PICO. After some brainstorming and discussion, tasks were assigned to each team member:

- Nicole and George will focus on writing the design document

- Merijn F., Merijn V. and Janick will focus on writing some pieces of code to test during the first test session with PICO next week.

On the 8th of May, the second meeting took place. The meeting was divided into two parts. First, the PowerPoint presentation was reviewed, and the tutor suggested some changes. Second, different strategies for finding the exit were discussed. The two options are: 1) following the walls and 2) rotating 360 degrees, looking for the exit and driving straight to it. After the meeting, the first test session took place. Because of issues with GitLab, we could not obtain useful data from this test session.

On the 9th of May, the second test session was held. The code of Merijn F. was successfully tested on the robot: the robot drives towards a wall, turns 90 degrees and drives towards the next wall. However, after reaching this wall, it keeps rotating. The data was stored for later review. Unfortunately, the code of Merijn V. could not be tested on the robot within the available time. After the test session, an additional meeting without the tutor took place, in which the interfaces were divided among the team members. Every task should be finished before Monday so that bugs can be fixed on Monday. The division is as follows:

- Perception & Monitoring: George

- World model & responsible for code structure: Merijn V

- Control & drawing rooms: Nicole

- Planning wall-following-strategy: Merijn F

- Planning finding-exit-strategy: Janick

Meeting 21/05

Attendees: Nicole, Merijn F., Merijn V., Janick

During this meeting, a plan was drawn up to successfully complete the hospital challenge and to divide the workload evenly over time and among the group members.

Architecture components

Detection

- longest/smallest distance

- distance at specific angles

- temporal distance differences
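The first two detection quantities can be computed from a single laser scan. The sketch below assumes a hypothetical `Scan` struct in which beam `i` points at `angle_min + i * angle_increment` radians; this layout and the names `distanceAtAngle`, `Extremes` and `findExtremes` are illustrative assumptions, not the PICO framework's API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical scan layout (an assumption, not the PICO framework's API):
// beam i points at angle_min + i * angle_increment radians, ranges in metres.
struct Scan {
    std::vector<double> ranges;
    double angle_min;
    double angle_increment;
};

// Range of the beam closest to `angle`; NaN if the angle lies outside the scan.
double distanceAtAngle(const Scan& scan, double angle) {
    assert(scan.angle_increment > 0.0);
    const double idx = std::round((angle - scan.angle_min) / scan.angle_increment);
    if (idx < 0.0 || idx >= static_cast<double>(scan.ranges.size())) {
        return std::numeric_limits<double>::quiet_NaN();
    }
    return scan.ranges[static_cast<std::size_t>(idx)];
}

// Indices of the smallest and largest valid ranges (readings <= 0 count as invalid).
// Both indices equal ranges.size() when no reading is valid.
struct Extremes {
    std::size_t min_idx;
    std::size_t max_idx;
};

Extremes findExtremes(const Scan& scan) {
    const std::size_t none = scan.ranges.size();
    Extremes e{none, none};
    for (std::size_t i = 0; i < scan.ranges.size(); ++i) {
        const double r = scan.ranges[i];
        if (r <= 0.0) continue;  // skip invalid readings
        if (e.min_idx == none || r < scan.ranges[e.min_idx]) e.min_idx = i;
        if (e.max_idx == none || r > scan.ranges[e.max_idx]) e.max_idx = i;
    }
    return e;
}
```

The index of the longest reading, converted back to an angle with `angle_min + i * angle_increment`, could serve as a first guess for the direction of an opening such as the exit.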

Worldmodel

- Map

- cabinets to visit

- objects

- snapshots

Monitoring

- localization(?)

- moving object recognition

- static object recognition

- wall/corner recognition

- cabinet recognition
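Moving object recognition could start from the temporal distance differences listed under Detection: a beam whose range changes noticeably between two consecutive scans, while the robot stands still, may be pointing at something that moves. A minimal sketch, assuming equally sized scans and a stationary robot; `changedBeams` and its threshold are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns the indices of beams whose range changed by more than `threshold`
// metres between two consecutive scans. Assumes the robot did not move between
// the scans; otherwise its own motion would first have to be compensated.
std::vector<std::size_t> changedBeams(const std::vector<double>& previous,
                                      const std::vector<double>& current,
                                      double threshold) {
    assert(previous.size() == current.size());
    std::vector<std::size_t> changed;
    for (std::size_t i = 0; i < current.size(); ++i) {
        if (std::fabs(current[i] - previous[i]) > threshold) {
            changed.push_back(i);
        }
    }
    return changed;
}
```

Neighbouring flagged indices would still have to be grouped into one object; that step is not shown here.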

Finite State Machine

- path planning (method ideas: polygon grid/vector field)

- taking snapshots

- speaking
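For the vector field idea, one common option is an artificial potential field, in which the goal attracts the robot and nearby obstacle points repel it. The sketch below implements that technique with assumed names and placeholder gains (`potentialFieldDirection`, `k_att`, `k_rep`, `influence`); the team had not yet chosen a method, so this is an illustration rather than the planned implementation.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 {
    double x;
    double y;
};

// Artificial potential field: returns the (unnormalised) direction to move in.
// The goal pulls with k_att times the offset to the goal; every obstacle point
// closer than `influence` metres pushes the robot away along the negative
// gradient of U_rep = 0.5 * k_rep * (1/d - 1/influence)^2.
Vec2 potentialFieldDirection(Vec2 robot, Vec2 goal, const std::vector<Vec2>& obstacles,
                             double k_att, double k_rep, double influence) {
    assert(influence > 0.0);
    Vec2 f{k_att * (goal.x - robot.x), k_att * (goal.y - robot.y)};
    for (const Vec2& o : obstacles) {
        const double dx = robot.x - o.x;
        const double dy = robot.y - o.y;
        const double d = std::hypot(dx, dy);
        if (d > 1e-9 && d < influence) {
            const double magnitude = k_rep * (1.0 / d - 1.0 / influence) / (d * d);
            f.x += magnitude * dx / d;  // unit vector pointing away from the obstacle
            f.y += magnitude * dy / d;
        }
    }
    return f;
}
```

A known drawback of potential fields is that the robot can get stuck in a local minimum, for example in a dead end, which is one reason to compare this idea with the polygon grid approach.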

Functions

- 1. read the JSON map and convert it to a simulator-readable version

- 2. identify the starting location in the start room

- 3. identify (moving) objects

- 4. update them in the map

- 5. stop to prevent collisions

- 6. take snapshots

- 7. announce the current action audibly

Milestones

The milestones are based on the (expected) remaining test moments, the first of which is on 23/05 and the last shortly before the final challenge.

- 1. talking (audio announcement of activities), detecting and moving to the exit, recognizing/positioning at the front of the cabinet

- 2. cabinet detection and moving towards it, reading the map

- 3. global path planning

- 4. Recognize static objects and add them to the map

- 5. Identify moving objects

- 6. Testing with specific map snapshots

- 7. full functionality

M1

- Moving to the exit: Merijn V

- Recognition of the exit/obstacles: Janick

- Cleaning out the Git repository (at least the master branch) ... (?)

Questions

A few topics related to the setup remain:

- Are there doors, and are open doors swung open?

- Is the cabinet location/orientation known a priori?

- Can cabinets and static objects be assumed to be rectangular?

- What is a cabinet's front side, and how can it be identified?

Code architecture

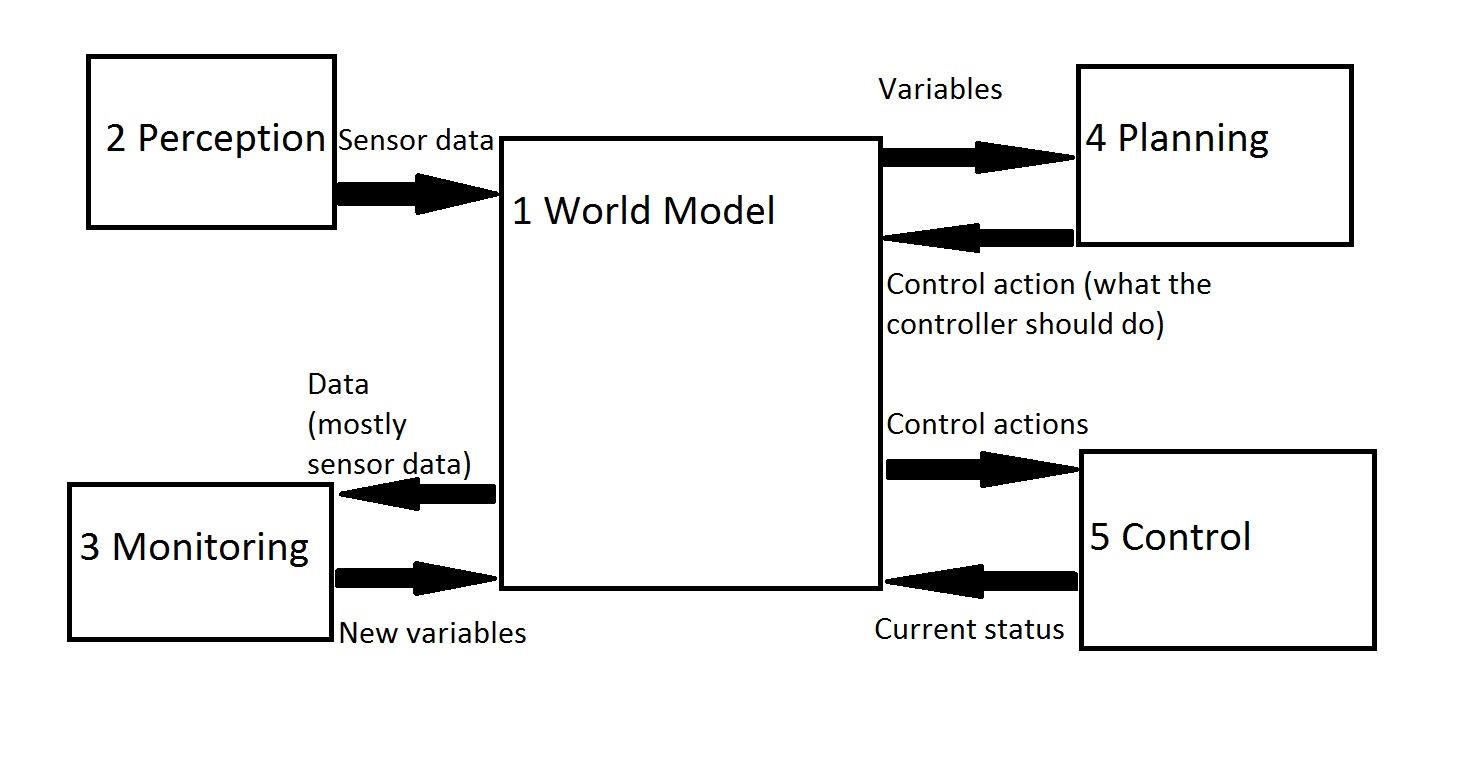

The code architecture can be visualized as in the following picture:

And the pseudocode is structured as follows:

- World model (Merijn V)

The world model contains all information about the world and stores all shared variables. All functions for reading or updating a variable are implemented in this part.

Pseudocode:

double getDistance():

return DISTANCE_VALUE

void updateDistance( double newvalue ):

DISTANCE_VALUE = newvalue
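In C++, the world model pseudocode could become a small class with one getter and one setter per variable. This is a sketch; the class name `WorldModel` and the extra angle variable are assumptions based on the monitoring pseudocode.

```cpp
#include <cassert>

// Minimal world model: stores the shared variables and offers one getter and one
// setter per variable, mirroring getDistance()/updateDistance() in the pseudocode.
class WorldModel {
public:
    double getDistance() const { return distance_; }
    void updateDistance(double new_value) {
        assert(new_value >= 0.0);  // a measured distance can never be negative
        distance_ = new_value;
    }

    double getAngle() const { return angle_; }
    void updateAngle(double new_value) { angle_ = new_value; }

private:
    double distance_ = 0.0;  // metres
    double angle_ = 0.0;     // degrees, as in the monitoring pseudocode
};
```

Modules that may only read the world model can receive a `const WorldModel&`; the compiler then rejects calls to the update functions, which enforces the ownership rules described for the perception and monitoring modules.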

- Perception (George)

Obtains sensor data and translates it into information the robot can use. For example, it obtains the sensor data from the LRF and calculates the distance value. It updates this value in the world model (using a function like worldmodel.updateDistance( NEWDISTANCE )). The perception module is the owner of the data variables it stores in the world model: ONLY the perception module can update these variables. All other modules can only read these values for their tasks.

Input: None

Output: Sensor data

Pseudocode:

While true:

worldmodel.updateDistance( Data_From_LRF )
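One perception step could look as follows. The sketch assumes that the middle beam of the LRF points straight ahead, and uses the hypothetical parameters `half_window` (number of beams on each side of the middle beam) and `min_valid` (readings below it are treated as noise). A stripped-down `WorldModel` stand-in keeps the example self-contained.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Stand-in for the world model (hypothetical); only the setter used here is shown.
struct WorldModel {
    double distance = 0.0;
    void updateDistance(double new_value) { distance = new_value; }
};

// One perception step: look at the beams within `half_window` of the middle beam,
// ignore readings below `min_valid` metres (and NaNs, which fail the comparison),
// and write the smallest remaining range to the world model. Writes infinity if
// no beam in the window is valid.
void perceptionStep(const std::vector<double>& ranges, std::size_t half_window,
                    double min_valid, WorldModel& wm) {
    assert(!ranges.empty());
    const std::size_t mid = ranges.size() / 2;
    const std::size_t first = mid > half_window ? mid - half_window : 0;
    const std::size_t last = std::min(ranges.size() - 1, mid + half_window);
    double front = std::numeric_limits<double>::infinity();
    for (std::size_t i = first; i <= last; ++i) {
        if (ranges[i] >= min_valid) {
            front = std::min(front, ranges[i]);
        }
    }
    wm.updateDistance(front);
}
```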

- Monitoring (George)

The monitoring module uses sensor data and performs calculations with it. For example, it determines whether the robot is close to a wall or has turned 90 degrees. It will use functions like worldmodel.getVariable() to obtain the data and worldmodel.updateVariable(newvalue) to communicate with the world model. The monitoring module is the owner of all of its output data and is the only part that can change these values. All other modules can only read the values produced by this module from the world model.

Input: Sensor data variables (read from the world model, which receives them from the perception module)

Output: Processed data

Pseudocode:

While true:

angle = worldmodel.getAngle()

If angle > 90:

worldmodel.setTurn90(true);

distance = worldmodel.getDistance()

If distance < threshold:

worldmodel.setCollisionDistance(true);
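A sketch of one monitoring step in C++. Unlike the pseudocode, it compares the current heading with the heading stored when the turn started (an assumption about how `getAngle()` is measured), wraps the difference so that crossing the ±180 degree boundary does not look like a large jump, and recomputes both flags every cycle so that they also return to `false`. The names `wrapDegrees`, `monitorStep` and `MonitorFlags` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Wraps an angle difference to (-180, 180] degrees, so that crossing the
// +/-180 degree boundary of the heading does not look like a 360 degree turn.
double wrapDegrees(double a) {
    a = std::fmod(a, 360.0);
    if (a <= -180.0) a += 360.0;
    if (a > 180.0) a -= 360.0;
    return a;
}

// Flags produced by one monitoring step (hypothetical names mirroring the pseudocode).
struct MonitorFlags {
    bool turned90;
    bool collisionDistance;
};

// Both flags are recomputed on every call, so they return to false as soon as
// the condition no longer holds.
MonitorFlags monitorStep(double start_angle_deg, double angle_deg,
                         double distance, double threshold) {
    assert(threshold > 0.0);
    const double turned = std::fabs(wrapDegrees(angle_deg - start_angle_deg));
    return MonitorFlags{turned >= 90.0, distance < threshold};
}
```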

- Planning (Merijn V & Janick)

This module determines what the motors should do, based on the variables in the world model. It is implemented as an FSM and produces control actions for the motor controllers.

Input: Sensor data and (mostly) data from the monitoring module.

Output: Control actions (the states the motors should be in). The planning module is the owner of the data it outputs.

Pseudocode:

typedef enum

{

turning = 1,

forward,

backward,

} state; // This part will be in a header file

// Maybe the state of the planning module doesn’t need to be in the worldmodel

While true:

Switch(worldmodel.getPlanningState())

case turning:

if worldmodel.getTurn90(): // has the robot turned 90 degrees?

worldmodel.updateMotorState( MOVEFORWARD )

worldmodel.updatePlanningState( forward )

break // without a break, execution falls through into the next case

case forward:

if WHATEVERCONDITION:

worldmodel.updateMotorState( SOMETHING )

worldmodel.updatePlanningState( something )

break
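The planning pseudocode maps naturally onto a C++ `switch` over an `enum class`. In this sketch every case returns, so no case can fall through into the next one. The transitions for `Forward` and `Backward` are assumptions standing in for the `WHATEVERCONDITION` placeholder, and `planningStep`, `MotorState` and `PlanningOutput` are hypothetical names.

```cpp
#include <cassert>

// States of the planning FSM, as in the pseudocode (enum class avoids name clashes).
enum class PlanningState { Turning = 1, Forward, Backward };

// Motor commands handed to the controller (hypothetical names).
enum class MotorState { Stop, MoveForward, MoveBackward, Turning };

struct PlanningOutput {
    PlanningState next;
    MotorState motor;
};

// One FSM step: given the current state and the monitoring flags, return the next
// state and the motor command. Every case returns, so nothing falls through.
PlanningOutput planningStep(PlanningState state, bool turned90, bool collision) {
    switch (state) {
        case PlanningState::Turning:
            if (turned90) return {PlanningState::Forward, MotorState::MoveForward};
            return {PlanningState::Turning, MotorState::Turning};
        case PlanningState::Forward:
            // Assumed transition: start turning when a wall is too close.
            if (collision) return {PlanningState::Turning, MotorState::Turning};
            return {PlanningState::Forward, MotorState::MoveForward};
        case PlanningState::Backward:
            // Assumed transition: back up until the wall is far enough, then turn.
            if (collision) return {PlanningState::Backward, MotorState::MoveBackward};
            return {PlanningState::Turning, MotorState::Turning};
    }
    assert(false && "invalid planning state");
    return {PlanningState::Turning, MotorState::Stop};
}
```

Keeping the step a pure function of state and flags makes it possible to test the FSM transitions without the robot or the world model.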

- Controller (Nicole)

This part activates the actuators. It executes the action determined by the planning module.

Input: Motor state

Output: None (optionally its current state, but this is probably not necessary)

Pseudocode:

While true:

Switch(worldmodel.getMotorState())

Case MOVEFORWARD:

Pico_drive.moveForward(Speed)

break

Case MOVEBACKWARD:

Pico_drive.moveBackward(Speed)

break

Case TURNING:

Pico_drive.turn(rotatespeed)

break
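The controller can similarly be a pure function from motor state to velocity command, which keeps it testable without the robot. The sketch assumes a holonomic base commanded with `vx`, `vy` (m/s) and `va` (rad/s); `controllerStep` and `VelocityCommand` are hypothetical, and the result would still have to be sent to the robot through the drive interface (`Pico_drive` in the pseudocode).

```cpp
#include <cassert>

enum class MotorState { Stop, MoveForward, MoveBackward, Turning };

// Velocity command: vx, vy in m/s, va in rad/s (assumed three-component interface).
struct VelocityCommand {
    double vx;
    double vy;
    double va;
};

// Maps the motor state chosen by the planner to a velocity command.
// `speed` is a magnitude; the direction follows from the state.
VelocityCommand controllerStep(MotorState state, double speed, double rotate_speed) {
    assert(speed >= 0.0);
    switch (state) {
        case MotorState::MoveForward:  return {speed, 0.0, 0.0};
        case MotorState::MoveBackward: return {-speed, 0.0, 0.0};
        case MotorState::Turning:      return {0.0, 0.0, rotate_speed};
        case MotorState::Stop:         break;
    }
    return {0.0, 0.0, 0.0};  // stand still for Stop (and any unexpected value)
}
```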

Initial Finite State Machine model

The system will make use of a Finite State Machine (FSM) model. The FSM executes the robot's plan and determines the high-level control.

Link to Wikipedia:Finite-state machine.

An image will be added when it is ready.