Embedded Motion Control 2017 Group 3 / Maze Design

This page is part of the Embedded Motion Control Group 3 of 2017.

Changes after Corridor challenge

This page is about the design for the Maze challenge. After the corridor challenge we were left with a number of issues:

1.) The turning was not very robust outside of the simulation and often failed.

2.) During the last few days of testing and coding before the corridor challenge we made rapid changes to the codebase without properly refactoring, so we had incurred some technical debt.

3.) Keeping the PICO aligned to the wall during navigation was a problem.

To solve these problems we decided to go with a new approach for the motion control. We discarded a big part of the code and refactored the rest to make the code easier to change and maintain.

World Interpreter

We removed the wall/path detection and replaced it with what we call the "world interpreter", whose function is to take all the sensor data and do all the processing required by the rest of the code. Examples are dead-end detection for the door supervisor and calculating the angle to the nearest obstacle point for the wall-following function in the motion supervisor. All the processed data is stored in the world model, a struct that is distributed to the supervisors in the main loop. This is clearly visible in the short (82 lines) and well-documented main.cpp[1].
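To illustrate the idea, the following is a minimal sketch of one such processing step: finding the nearest obstacle point in a laser scan and storing it in a world model struct. It is not the code from main.cpp[1]; the types `LaserScan` and `WorldModel`, their fields and the function `interpretWorld` are hypothetical names chosen for this example.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Simplified laser scan (hypothetical type): ranges[i] is measured at
// angleMin + i * angleIncrement, with 0 rad pointing straight ahead.
struct LaserScan {
    double angleMin;        // [rad]
    double angleIncrement;  // [rad]
    double rangeMin;        // [m] readings below this are discarded
    double rangeMax;        // [m] readings above this are discarded
    std::vector<double> ranges;
};

// Hypothetical world model that only holds the nearest-obstacle result.
struct WorldModel {
    bool obstacleFound = false;
    double nearestDistance = std::numeric_limits<double>::infinity();  // [m]
    double nearestAngle = 0.0;                                         // [rad]
};

// Scan all valid readings once and remember the closest one.
WorldModel interpretWorld(const LaserScan& scan) {
    WorldModel world;
    for (std::size_t i = 0; i < scan.ranges.size(); ++i) {
        const double range = scan.ranges[i];
        if (range < scan.rangeMin || range > scan.rangeMax) {
            continue;  // out-of-spec reading, ignore it
        }
        if (range < world.nearestDistance) {
            world.obstacleFound = true;
            world.nearestDistance = range;
            world.nearestAngle =
                scan.angleMin + static_cast<double>(i) * scan.angleIncrement;
        }
    }
    return world;
}
```

In a main loop along these lines, the returned struct would be passed by const reference to each supervisor, so that the sensor data is processed only once per cycle.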

Virtual Circle

The Pledge algorithm that we use requires the motion controller to define two states: one in which PICO follows a wall on its left or right side (the user can choose; we chose the right side), and one in which PICO drives straight ahead in a fixed direction until it meets an obstacle.
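The switching between these two states can be sketched as a small state machine. This is an illustration under assumptions, not our actual supervisor code: the names `MotionState` and `PledgeSupervisor` are hypothetical, and we assume a turn counter that is incremented by +1 for a left turn and by -1 for a right turn.

```cpp
// The two motion states required by the Pledge algorithm.
enum class MotionState { DriveStraight, FollowWall };

// Hypothetical supervisor that keeps the Pledge turn counter.
struct PledgeSupervisor {
    MotionState state = MotionState::DriveStraight;
    int turnCount = 0;  // net number of turns since attaching to the wall

    // obstacleAhead: true when something blocks the straight-line path.
    // turnDelta: +1 for a detected left turn, -1 for a right turn, else 0.
    MotionState update(bool obstacleAhead, int turnDelta) {
        if (state == MotionState::DriveStraight) {
            if (obstacleAhead) {
                state = MotionState::FollowWall;
                turnCount = 0;
            }
        } else {
            turnCount += turnDelta;
            // Detach only on the update that brings the counter back to
            // zero, otherwise the robot would detach right after attaching.
            if (turnDelta != 0 && turnCount == 0) {
                state = MotionState::DriveStraight;
            }
        }
        return state;
    }
};
```

The check on `turnDelta != 0` is a design choice of this sketch: the counter is also zero at the moment PICO attaches to a wall, so the detach condition is only evaluated when a turn has just been counted.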

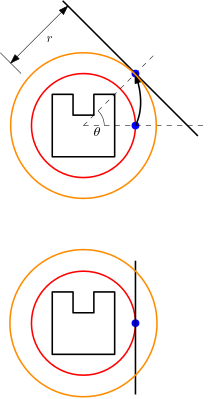

For following the wall we designed a method that we call virtual circle following. The method checks whether the nearest obstacle point lies inside an outer "sensing circle". If it does, the method attempts to bring the detected point to a set distance r on PICO's right-hand side (-90 deg. from the front-facing direction). It does this with two PID controllers: one for the distance r and one for the angle theta (shown in the picture).

This method does not require any additional code for taking turns when following an obstacle (wall) because it will keep the nearest point on the right side while moving forward.
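One control step of this method could look roughly like the sketch below. It is not the stickToWall() implementation. The class names, the gains, the `alignTolerance` parameter and the choice to drive forward only when the angle error is small are all assumptions made for this example. We also assume a base that accepts a forward, a sideways and an angular velocity, with angles measured counter-clockwise from the front of the robot.

```cpp
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Textbook PID controller; the gains are placeholders that need tuning.
class Pid {
public:
    Pid(double kp, double ki, double kd) : kp_(kp), ki_(ki), kd_(kd) {}

    double update(double error, double dt) {
        integral_ += error * dt;
        const double derivative =
            hasPrevious_ ? (error - previousError_) / dt : 0.0;
        previousError_ = error;
        hasPrevious_ = true;
        return kp_ * error + ki_ * integral_ + kd_ * derivative;
    }

private:
    double kp_, ki_, kd_;
    double integral_ = 0.0;
    double previousError_ = 0.0;
    bool hasPrevious_ = false;
};

struct VelocityCommand {
    double vx;         // forward [m/s]
    double vy;         // sideways, positive to the left [m/s]
    double omega;      // angular, positive counter-clockwise [rad/s]
    bool wallInRange;  // false when no point is inside the sensing circle
};

// Hypothetical controller; all parameter names are illustrative.
struct VirtualCircleFollower {
    double followRadius;    // r: desired distance to the nearest point [m]
    double sensingRadius;   // radius of the outer sensing circle [m]
    double forwardSpeed;    // speed along the wall once aligned [m/s]
    double alignTolerance;  // angle error below which we drive forward [rad]
    Pid distancePid;
    Pid anglePid;

    VelocityCommand step(double nearestDistance, double nearestAngle, double dt) {
        if (nearestDistance > sensingRadius) {
            return {0.0, 0.0, 0.0, false};
        }
        // Keep the nearest point at -90 deg, i.e. on the right-hand side.
        const double angleError =
            std::remainder(nearestAngle - (-kPi / 2.0), 2.0 * kPi);
        const double distanceError = nearestDistance - followRadius;

        const double omega = anglePid.update(angleError, dt);
        const double radial = distancePid.update(distanceError, dt);

        // Correct the distance along the direction of the nearest point.
        VelocityCommand cmd{radial * std::cos(nearestAngle),
                            radial * std::sin(nearestAngle), omega, true};
        if (std::fabs(angleError) < alignTolerance) {
            cmd.vx += forwardSpeed;  // aligned: continue along the wall
        }
        return cmd;
    }
};
```

With this sign convention a nearest point straight ahead gives a positive angle error and therefore a counter-clockwise rotation, while a nearest point that falls behind -90 deg gives a clockwise rotation.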

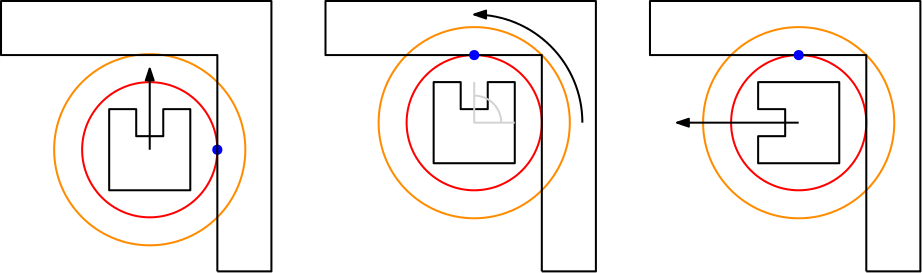

For an inner corner the closest point will suddenly be at 0 deg. instead of -90 deg., so PICO will turn and, once it is mostly aligned again, continue moving forward, as demonstrated in this picture from left to right:

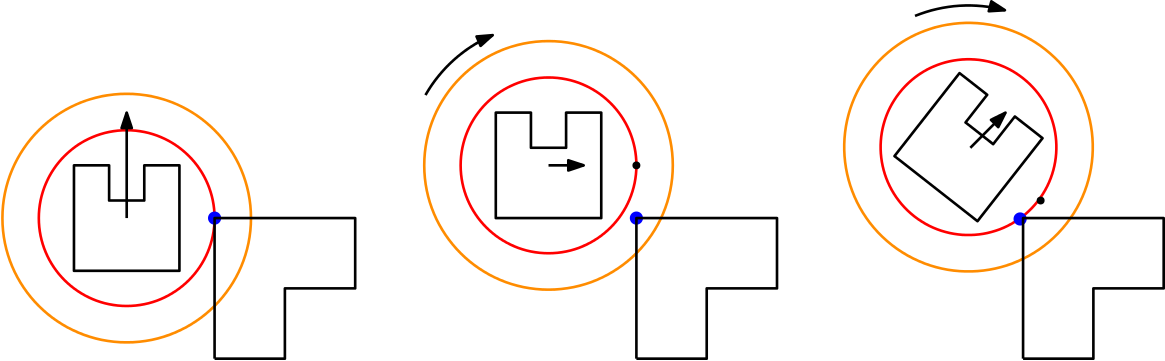

For an outer turn the closest point will "detach" from the inner virtual circle. By continuously correcting for that and then moving forward again, the PICO will turn +90 deg. Because the control loop runs at 20 Hz, the turn appears quite smooth.

The function that implements the virtual circle is called stickToWall() and can be found here[2].

Turn Detection

Because of the virtual circle method, turn taking is now emergent behavior rather than a deliberate decision by the pathfinding supervisor. We therefore have to count the number of turns taken separately, so that the Pledge algorithm can detach from the wall when the turn count is zero. To do this we keep a small FIFO buffer of the previous odometer readings and check whether there has been a significant increase or decrease over time, from which we infer the direction of the turn. After some tweaking during testing before the maze challenge, the turn detection appeared to be robust. Unfortunately, during the maze challenge the counter missed a turn, causing the PICO to take an unnecessarily long route. The code for the turn detection can be found here[3].
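The buffer idea can be sketched as follows. This is not the code at [3]. The class name `TurnDetector`, the window size, the threshold and the choice to clear the buffer after a detection are assumptions made for this example. The sketch also assumes that the odometry yaw is given in radians and wraps around at plus or minus pi, so it unwraps the angle before comparing.

```cpp
#include <cmath>
#include <cstddef>
#include <deque>

// Hypothetical turn detector based on a FIFO buffer of yaw readings.
class TurnDetector {
public:
    // windowSize: number of readings kept; threshold: yaw change [rad]
    // within the window that counts as one turn.
    TurnDetector(std::size_t windowSize, double threshold)
        : windowSize_(windowSize == 0 ? 1 : windowSize), threshold_(threshold) {}

    // Feed one odometry yaw reading [rad].
    // Returns +1 for a left turn, -1 for a right turn, 0 otherwise.
    int update(double yaw) {
        const double twoPi = 2.0 * 3.14159265358979323846;
        if (hasLastYaw_) {
            // Accumulate the shortest angular difference so that the
            // wrap-around at +/- pi does not look like a large jump.
            unwrappedYaw_ += std::remainder(yaw - lastYaw_, twoPi);
        }
        lastYaw_ = yaw;
        hasLastYaw_ = true;

        buffer_.push_back(unwrappedYaw_);
        if (buffer_.size() > windowSize_) {
            buffer_.pop_front();  // FIFO: drop the oldest reading
        }

        const double change = buffer_.back() - buffer_.front();
        if (std::fabs(change) > threshold_) {
            // Restart the window so the same turn is not counted twice.
            buffer_.clear();
            buffer_.push_back(unwrappedYaw_);
            return change > 0.0 ? 1 : -1;
        }
        return 0;
    }

private:
    std::size_t windowSize_;
    double threshold_;
    std::deque<double> buffer_;
    double unwrappedYaw_ = 0.0;
    double lastYaw_ = 0.0;
    bool hasLastYaw_ = false;
};
```

A detector like this trades off window size against threshold: a turn that is spread over more readings than fit in the window never exceeds the threshold and is missed, which is one possible way a counter of this kind can skip a turn.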