Embedded Motion Control 2013 Group 11

Group Members

| Name: | Student id: | Email: |

| Justin Wildschut | 0617495 | j.a.wildschut@student.tue.nl |

| Jeroen Zegers | 0638172 | j.c.zegers@student.tue.nl |

| Alex Andriën | 0652965 | a.r.p.andrien@student.tue.nl |

| Talha Ali Arslan | 0867550 | talhaaliarslan@gmail.com |

| Leroy Hazeleger | 0651762 | l.hazeleger@student.tue.nl |

Discussion and Possible Solutions

Winning!

So far, all the hard work paid of!

Decision Making Node

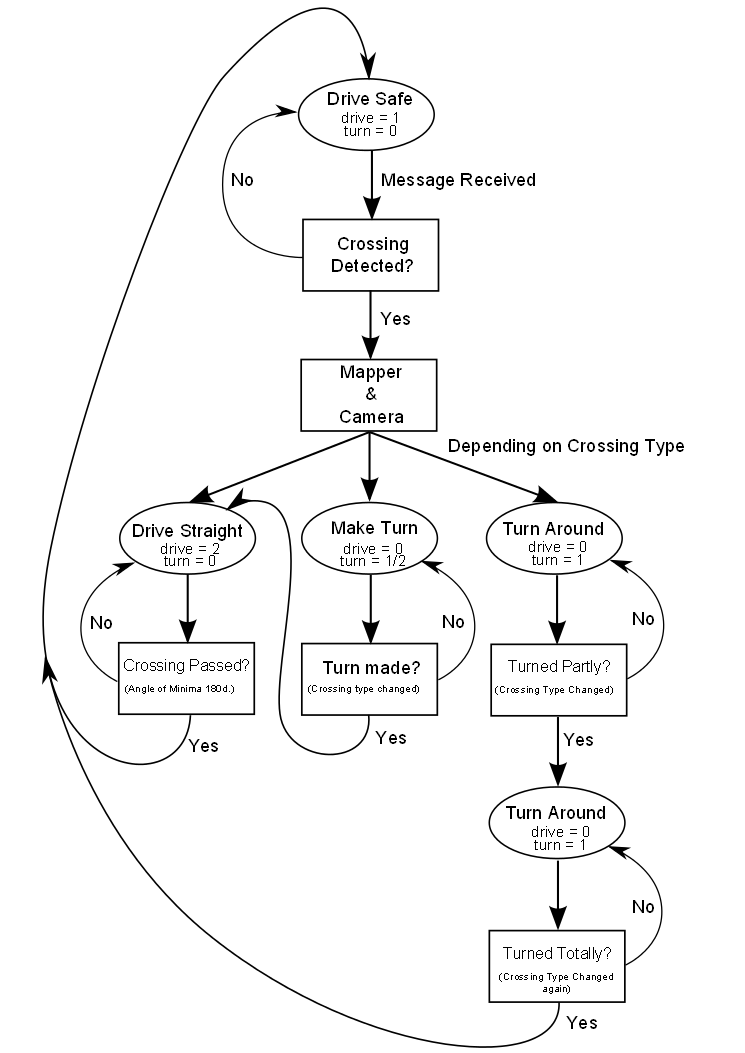

The structure of this node can best be explained using a program flow diagram as depicted below.

Drive Safe Node

(1 Oct 2013)

In this node we make sure that the robot does not hit the walls. Moreover, we ensure that the robot stays in within a certain margin in the middle of the corridors. The node can be divided in to three parts: Normal Driving, Entering Crossing, Exiting Crossing.

We use the info given by the Determine Position node, which tells us where the minimum distances to the wall are located, what the values of these minima are and at which indeces the laser detected these minima. Using these indices as well as the minimum angle and the angle increment we calculate the angle of the robot relative to the wall on both sides.

Normal Driving

In this situation Pico is in a corridor, so it is not entering a corner nor is it exiting one. This case is relatively simple, since there are only two minima: one on the left and one on the right.

If the distance on either side is smaller than the other one, we know at which side of the corridor we are at this moment. Then we use the angle of the robot relative to the wall to determine if the robot is either facing the side with the smaller distance or the one with the larger distance, e.g. if the robot is facing towards the near wall or moving away from it.

In the first case, we give the robot an angular velocity in the other direction to make it turn away from the near wall. We use the distance to the near wall to make the robot turn away faster if it is closer to this wall, and of course slower if it is further away from the wall. In the other case, the robot is already facing away from the near wall, so we do nothing.

To make sure the robot does not constantly correct the angle (we do not want the robot to 'wiggle'), we us a certain tolerance so it does not correct if it is near the middle of the corridor.

Entering Crossing

In this case the robot is entering a crossing, so it no longer has two minima at roughly 90 degree angles, but instead there are several minima detected and they are no longer at 90 degree angles (we also use this fact for our crossing detection). We know that is entering a corner when the two minima to the left and right of the robot are in opposite direction. The minima it uses for this are the ones with the first and last index (picture needed). Here there are also several cases depending on the crossing.

T-crossing with Dead End

Here we have three minima, one on both sides and one in the center, in the middle of the dead end. We use the minimum in the center, of which the index should be at zero degrees, or index 540. Using this knowledge we correct the robot if this is not the case, so suppose the index is at 580, we know the robot is oriented left, and we give it an angular velocity to the right. This happens within a certain margin around the index 540, so it does not correct if the index is at 540 [math]\displaystyle{ \pm }[/math] tolerance.

Left Turn, Right Turn, T-crossing Left and T-crossing Right

Four Point Crossing

Exiting Crossing

Crossing Detection

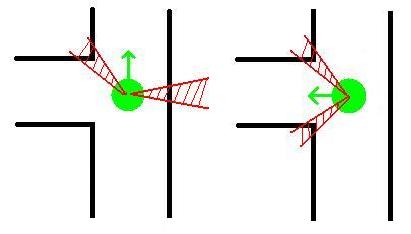

As is presented in the program structure below there is a node which detects the crossings in the maze. This node detects at which moment Pico is at the middle of a cross-section and if there is a side-path on its left and/or right and/or a path in front. These different type of cross-sections are detected using the positions of the minima in the laser-data. The positions of these minima are found in the 'Determine Position' node and send via a topic to this 'Detect Crossing' node. The principle of this function is illustrated using the pictures below.

Suppose the robot approaches a T-crossing like in the picture below and that we want the robot to go left.

The left picture above shows the moment at which Pico arrives at the middle of an intersection. If two minima are within the red area's we know that the robot is approximately in the middle of the T-crossing (assuming that the width of the corridor and the side-path are approximately equal). The 'Detect Crossing' node will give a message to the 'Decision Making' node that it is in the center of an intersection and which options there are to take (left, straight or back). If the 'Decision Making' node decides to make a turn left, the robot will turn left untill the minima are in the regions as is depicted in the right picture above, The detect crossing sends a message to the 'Decision Making' node when the minima are in these regions and thus the turn in made.

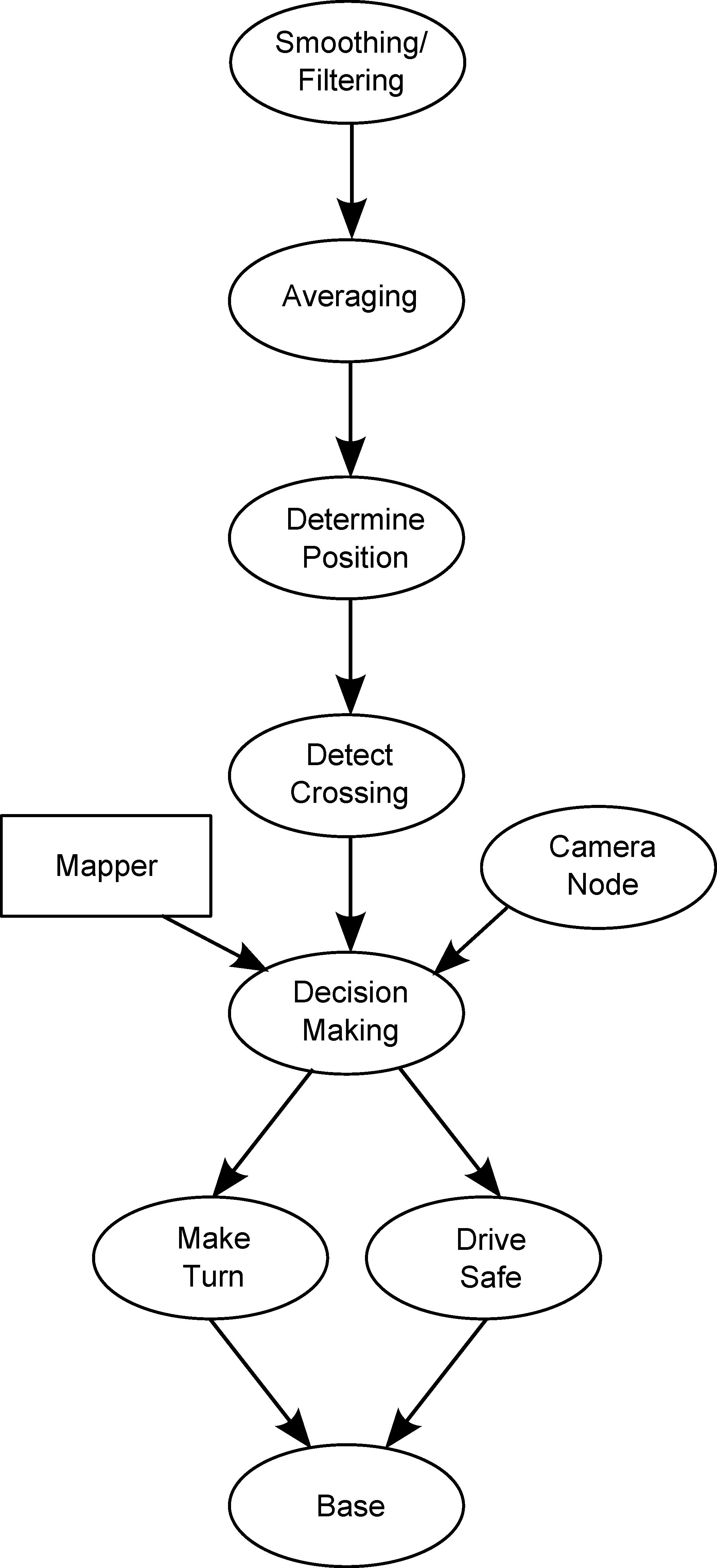

Program Structure

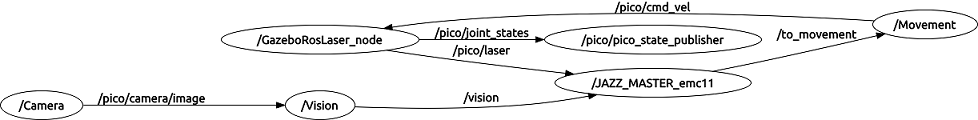

We designed a program structure to divide the tasks, make the program more transparant and easier to debug. The program is divided in the following nodes:

The nodes and topics are described in the following document: File:EMC Main.pdf

Filtering and Processing Laser Data

20 Sept 2013

By applying a set of filters, laser data is processed to give information which are easier to interpret for path planning. By detecting certain features of the environment, we now deal with smaller amount of data which are transferred by custom messages that can be modified.

At first, the filter process was created as a node and it got more complex as new stuff were added. So; for ease of programming,reading and debugging the code, we decided to split up some parts. Then it became much simpler, more fixes were done because it was easier to notice them now and the overall functionality of the processes increased. It was discussed that one down-side of creating more and simpler nodes is the possible lag between the nodes. So, we decided we are going to check and measure nodes' communication, how well they are synced and we are also not going to over-simplify and create too many nodes. But we are positive that separating the needed tasks may reduce the reaction time if the nodes are synced well; by using functions and options like ros::spin(),ros::spinOnce(),ros::Duration().sleep()(to save CPU) and message queues for publishing and subscribing.

After visualizing the environment as we wanted to; we now focus more on tasks of the robot as some of the tasks have to be carried out at all times (e.g. obstacle avoidance) and others have to be completed upon requests (e.g. change in trajectory due to decision making).

Laser data visualized

18 Sept 2013

The data from the laser can be interpreted as points given in a polar coordinate system, these points can be transformed to a cartesian coordinate system. This visualisation helps to create a strategy for detecting walls and corners.

ROS Nodes and Topics

17 Sept 2013

With ROS, one can use nodes and topics to create simpler and easy-to-handle structures. Usage of nodes is very useful when multiple tasks have to be done simultaneously. For this project, a 'Master' node, a 'Movement' node and a 'Vision' node can be created to perform given tasks as solving a maze and recognizing signs, which have to be done simultaneously and which are at times independent of each other. For the corridor competition, the task is simple; so the use of multiple nodes and especially the 'Vision' node will not be necessary. However, for the second competition goal of which will be solving the maze, these nodes can be implemented to perform recognition of signs and to solve the maze. Here is one of the first drafts of the nodal structure of the program:

Useful Things

Hardware info

Technical info about the laserscanner can be found in this document. Mostly to satisfy your curiosity, not specifically needed for programming. Hokuyo Laser Scanner

Creating a different map

Using GIMP Image Editor

1. Download GIMP Image Editor from the Software Center

2. Open the existing map ("maze.pgm") in '/home/s080482/ros/emc/general/gazebo_map_spawner/maps' with GIMP

3. Edit the map how you want it to be. Scroll all the way down in the 'Brushes' box in the bottom right of GIMP. Then there is a square on the second last row on the far right, called ' square (5x5)(5 x 5). (make sure you have this one and not one with 'blur'. Also select the 'Pencil Tool Hard Edge Painting Using a Brush' in the top left box.

4. Now just delete blocks and at add them were you want. Zooming in helps. You can switch color on the top left as well.

5. Also if you look closely, you can see a grey dot in the bottom center. I think this to mark the point where the robot spawns. I suggest we leave it where it is and edit the maze around it.

6. Save the map in the same folder but with a different name.

7. Go to the folder '/home/s080482/ros/emc/general/gazebo_map_spawner/scripts' and open spawn_maze in a text editor.

8. Change the name of the map in needs to load, i.e. change the OWN_MAP in the text below to your own map name.

rosrun gazebo_map_spawner map_spawner `rospack find gazebo_map_spawner`/maps/OWN_MAP.pgm

9. Now simply spawn the maze in exactly the same way as you did before, so with exactly the same commands!

Using Gazebo Model Builder

1. Open Gazebo

2. Go to 'Edit', 'Model Builder'.

3. You will see a 2-D image and 3-D world under it. Choose 'Add Wall' and start drawing walls on the 2-D image.

4. Check the locations and distances from the 3-D world.

5. Hit 'Done', save it to a file 'example.sdf'. Close 'Model Builder'. The model should spawn in the world.

6. Go to 'File', 'Save As'; again save as 'example.sdf'.

7. To load, go to the save folder and hit command:

gazebo example.sdf

Teleop Controller for Pico

To be able to move the robot around manually and easily is very useful when testing simple things in the algorithm. Here you can find the teleop controller for PR2:

And here is the revised version of the teleop controller for our robot pico:

/*

This code lets you move the jazz robot using your keyboard.

This is obtained from a sample code for pr2 from this link:

http://wiki.ros.org/pr2_simulator/Tutorials/Teleop%20PR2%20arm%20in%20simulation

It basically goes into a loop, where the terminal is set for user-input kind of operation

and everytime a button is pressed, velocities are published as the cmd_vel topic for pico.

--emc11

*/

#include <termios.h>

#include <signal.h>

#include <stdio.h>

#include <stdlib.h>

#include "ros/ros.h"

#include "geometry_msgs/Twist.h"

#define KEYCODE_A 0x61

#define KEYCODE_D 0x64

#define KEYCODE_S 0x73

#define KEYCODE_W 0x77

#define KEYCODE_Q 0x71

#define KEYCODE_E 0x65

class teleop_pico_keyboard

{

private:

geometry_msgs::Twist cmd_msg;

ros::NodeHandle n;

ros::Publisher cmd_pub;

public:

void init(){

cmd_pub = n.advertise<geometry_msgs::Twist>("/pico/cmd_vel", 1000);

cmd_msg.linear.x=0.0;

cmd_msg.linear.y=0.0;

cmd_msg.linear.z=0.0;

cmd_msg.angular.x=0.0;

cmd_msg.angular.y=0.0;

cmd_msg.angular.z=0.0;

cmd_pub.publish(cmd_msg);

};

~teleop_pico_keyboard() { };

void kloop();

};

int kfd = 0;

struct termios cooked, raw;

void quit(int sig)

{

tcsetattr(kfd, TCSANOW, &cooked);

exit(0);

}

int main(int argc, char **argv){

ros::init(argc, argv, "jazz_teleop_t");

teleop_pico_keyboard tpk;

tpk.init();

signal(SIGINT,quit);

tpk.kloop();

return 0;

}

void teleop_pico_keyboard::kloop()

{

char c;

bool dirty=false;

ros::Rate loop_rate(5);

// get the console in raw mode

tcgetattr(kfd, &cooked);

memcpy(&raw, &cooked, sizeof(struct termios));

raw.c_lflag &=~ (ICANON | ECHO);

// Setting a new line, then end of file

raw.c_cc[VEOL] = 1;

raw.c_cc[VEOF] = 2;

tcsetattr(kfd, TCSANOW, &raw);

puts("Reading from keyboard");

puts("---------------------------");

puts("Use 'WS' to forward/back");

puts("Use 'AD' to rotate left/right");

//puts("Use 'QE' to ...");

while(ros::ok())

{

// get the next event from the keyboard

if(read(kfd, &c, 1) < 0)

{

perror("read():");

exit(-1);

}

switch(c)

{

case KEYCODE_W:

cmd_msg.linear.x = 1.0;

cmd_msg.angular.z = 0.0;

dirty = true;

break;

case KEYCODE_S:

cmd_msg.linear.x = -0.5;

cmd_msg.angular.z = 0.0;

dirty = true;

break;

case KEYCODE_A:

cmd_msg.angular.z = 0.5;

cmd_msg.linear.x = 0.0;

dirty = true;

break;

case KEYCODE_D:

cmd_msg.angular.z = -0.5;

cmd_msg.linear.x = 0.0;

dirty = true;

break;

// You can use these for different tasks

// case KEYCODE_Q:

// dirty = true;

// break;

// case KEYCODE_E:

// dirty = true;

// break;

}

if (dirty == true)

{

cmd_pub.publish(cmd_msg);

}

}

}

Just create a ros package dependent on roscpp and geometry_msgs, compile and run it in a seperate terminal. As an example; by pressing 'W' in the terminal, you will move the robot forward in the simulator.