Embedded Motion Control 2012 Group 9

Group Members :

| Name | Student ID | Email |
|---|---|---|
| Ryvo Octaviano | 0787614 | r.octaviano@student.tue.nl |
| Weitian Kou | 0786886 | w.kou@student.tue.nl |
| Dennis Klein | 0756547 | d.klein@student.tue.nl |
| Harm Weerts | 0748457 | h.h.m.weerts@student.tue.nl |
| Thomas Meerwaldt | 0660393 | t.t.meerwaldt@student.tue.nl |

Objective

The Jazz robot finds its way out of a maze in the shortest possible time

Requirements

The Jazz robot must not collide with the walls of the maze

The Jazz robot detects arrows (pointers) to decide whether to turn left or right

Planning

An intermediate review will be held on June 4th, during the corridor competition

The final contest will be held sometime between June 25th and July 6th

Progress :

Week 1 :

Form a group; find the book and literature

Week 2 :

Installation :

1st laptop :

Ubuntu 10.04 (wireless connection error: solved)

ROS Electric

Eclipse

Environment setup

Learning :

C++ programming (http://www.cplusplus.com/doc/tutorial)

Chapter 11

Week 3 :

Installation :

SVN (received the username and password on May 8th)

Learning :

ROS (http://www.ros.org/wiki/ROS/Tutorials)

Jazz Simulator (http://cstwiki.wtb.tue.nl/index.php?title=Jazz_Simulator)

To Do :

By the end of week 3:

Finish the setup on all computers

Three computers will use Ubuntu 11.10 and one will use Xubuntu 12.04

Week 4 :

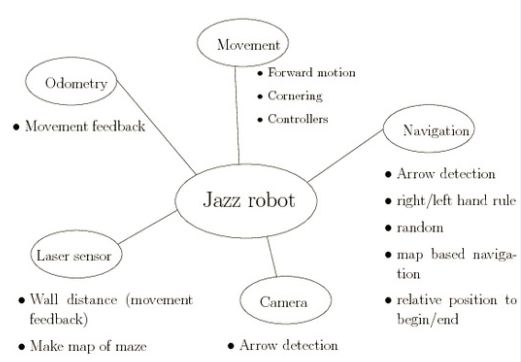

We held a brainstorming session on which functions the software should contain; the result is shown in image 1.

Week 5 :

We made a rudimentary software map and started developing two software branches based on different ideas. The software map will be discussed and revised in light of the results of the software written early in week 6. The group members responsible for the lecture started reading and researching chapter 11.

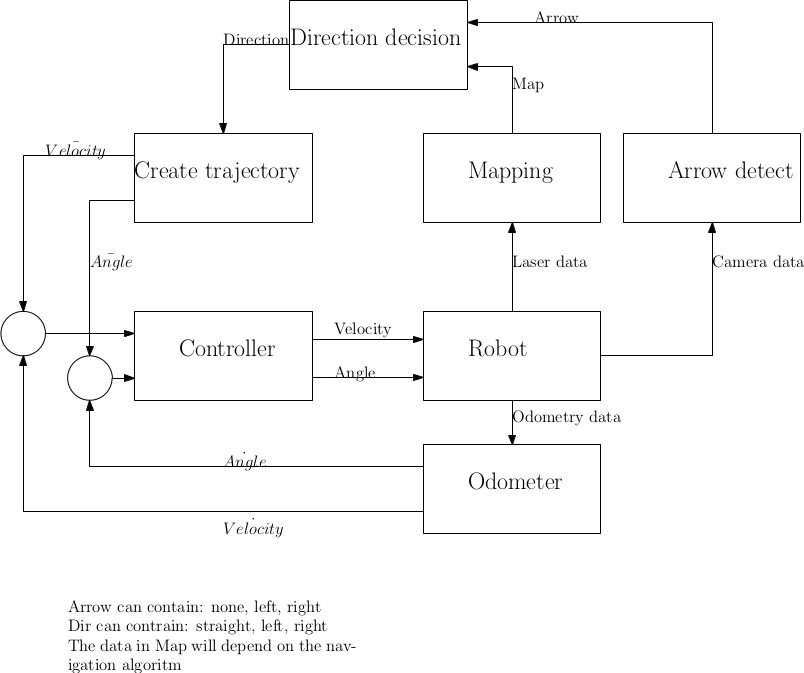

We decided to develop two software branches, based on different ideas, in parallel. The first branch is based on the software map above. We started writing two nodes: a laser data node that processes and publishes laser data, and a controller node that calculates and follows a trajectory. The laser data node reads laser data from the /scan topic and processes it to detect whether the robot is driving straight through a corridor, to detect and transmit corner coordinates, and to provide collision warnings. The controller node uses odometry data to provide input coordinates (i.e. a trajectory) for the robot to follow. The implemented controller is a PID controller, which still has to be tuned; the I and D actions may turn out to be unnecessary. The controller node will also use the data published by the laser data node to plan the robot's trajectory. A decision algorithm (i.e. whether to go left or right) has yet to be developed.

Implementation So Far & Decisions We Made

1. Navigation with Laser

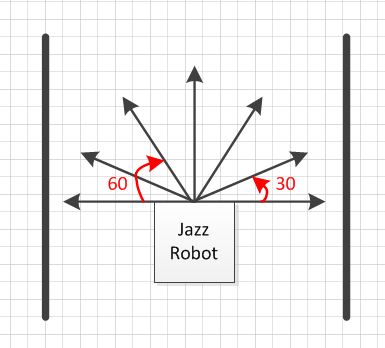

We use 7 laser points at various angles, as shown in image 3:

1. The 0° point measures the distance between the robot and the wall in front of it; it is also used for collision avoidance

2. The -90° and 90° points are used to keep the robot facing forward and to maintain its distance from the side walls