PRE2019 4 Group3

SPlaSh: The Plastic Shark

Group members

| Student name | Student ID | Study | Email |

|---|---|---|---|

| Kevin Cox | 1361163 | Mechanical Engineering | k.j.p.cox@student.tue.nl |

| Menno Cromwijk | 1248073 | Biomedical Engineering | m.w.j.cromwijk@student.tue.nl |

| Dennis Heesmans | 1359592 | Mechanical Engineering | d.a.heesmans@student.tue.nl |

| Marijn Minkenberg | 1357751 | Mechanical Engineering | m.minkenberg@student.tue.nl |

| Lotte Rassaerts | 1330004 | Mechanical Engineering | l.rassaerts@student.tue.nl |

Introduction

Problem statement

Over 5 trillion pieces of plastic are currently floating around in the oceans [1]. Part of this so-called plastic soup consists of large plastics, like bags, straws, and cups. But it also contains a vast concentration of microplastics: pieces of plastic smaller than 5 mm in size [2]. There are five garbage patches across the globe [1]. In the garbage patch in the Mediterranean Sea, the most prevalent microplastics were found to be polyethylene and polypropylene [3].

A study in the North Sea showed that 5.4% of the fish had ingested plastic [4]. The plastic consumed by the fish accumulates: new plastic goes into the fish, but does not come out. The buildup of plastic particles results in stress in their livers [5]. Besides that, fish can become stuck in the larger plastics. Thus, the plastic soup is becoming a threat to sea life. This problem is created by humans, and therefore humans must provide a solution to it.

In this project we would like to contribute to this solution. At this moment, cleaning devices are already in use to clean up the ocean. However, these cleaning devices are harmful to marine life, since they are not sophisticated enough to avoid certain marine life [6]. This project therefore contributes in two ways. Firstly, by providing a software tool that is able to distinguish garbage from marine life. This information could help a cleaning device navigate through the ocean and clean up the garbage without harming marine life. Secondly, different parts of a cleanup robot will be designed in such a way that they make the robot less harmful to marine life. Only parts of the robot will be designed, since designing the full robot would take too much time.

Objectives

- Do research into the state of the art of current recognition software, ocean cleanup devices and neural networks.

- Create a software tool that distinguishes garbage from marine life.

- Test this software tool and form a conclusion on the effectiveness of the tool.

Users

In this part the different users are discussed. By users, we mean the different groups that are involved in this problem.

Businesses/organizations

Until now, not many businesses have taken on the challenge of cleaning up the oceans. The reason is that no money can be made from it, which means non-profit organizations are the main contributors. These organizations have to collect money to fund their projects. This also means that they can use all the help any university or other institution can provide. Another reason why businesses do not take on the challenge is that once the goal is reached, they are out of a job. This means that non-profit organizations really have to rely on the good intentions of people, because investments will most likely not prove to be profitable.

Governments

Another group of users is governments. Since the garbage patches are in the ocean, no government is held accountable for their cleanup, and no government wants to take on the responsibility. Providing technology that makes it easier to clean up the oceans could eventually lead to governments taking this responsibility.

Society

Society is one of the main contributors to polluting the oceans. However, it is also a big source of funding. Harm to marine life while attempting to clean the ocean does not help the image of ocean cleaning devices and therefore hurts the funding. This means that developing technology that improves this image could lead to more funding from society.

Marine Life

Marine life is the group that is most harmed by ocean pollution. It would benefit the most from technology that makes it possible to distinguish garbage from marine life. Firstly, because such technology would speed up the large-scale deployment of garbage cleaning equipment. And secondly, because it makes this equipment less harmful to marine life.

Requirements

The following points are the requirements. These requirements are conditions or tasks that must be completed to ensure the completion of the project.

Requirements for the Software

- The program that is written should be able to identify the difference between waste and marine life correctly 95% of the time based on a data set of … examples.

- The literature research for the software must provide information on at least the following subjects:

- Neural networks

- Image recognition
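
The 95% requirement above can be made concrete with a small accuracy check. The following is only a sketch: the function names, the label encoding (1 = waste, 0 = marine life) and the ten example labels are hypothetical, and the size of the real data set is still left open in the requirement.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty data set")
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


def meets_requirement(predictions, labels, threshold=0.95):
    """True when the classifier reaches the required accuracy."""
    return accuracy(predictions, labels) >= threshold


# Hypothetical labels: 1 = waste, 0 = marine life.
true_labels = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
predicted = [1, 0, 1, 1, 0, 1, 1, 0, 1, 0]  # one mistake out of ten

print(accuracy(predicted, true_labels))
print(meets_requirement(predicted, true_labels))
```

In practice the check should be run on images that were not used for training, so that the reported accuracy reflects unseen data.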

Requirements for the Design

The designed parts need to be

- water resistant;

- eco-friendly, i.e. not harmful to the environment, especially the marine life;

- easy to manufacture;

- able to be implemented in a current ocean cleaning device.

Finally, literature research about the current ocean cleanup systems must be provided. At least 25 sources must be used for the literature research of the software and design.

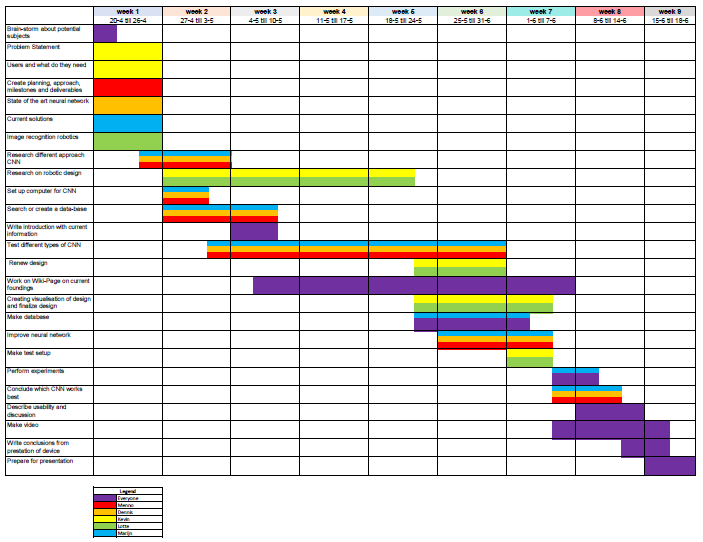

Planning

Approach

For the planning, a Gantt chart was created with the most important tasks. The overall view of our planning is that in the first two weeks, a lot of research has to be done. This covers, among other things, the problem statement, the users and the current technology. In the second week, different types of neural networks and the way their layers work should be investigated to gain more knowledge. This could also require installing multiple packages or programs on our laptops, which takes time to test. During this second week, a data set should be created or found that can be used to train our model. If it cannot be found online and has to be created, this will take much more than one week; however, we hope to have it finished after the third week. After this, the group is split into people who create the design and applications of the robot, and people who work on the creation of the neural network. After week 5, the idea for the robot should be elaborated with drawings or digital visualizations. All candidate neural networks should also be elaborated and tested by then, so that in week 6 conclusions can be drawn on the best working neural network. This means that in week 7, the wiki page can be completed with a conclusion and discussion about the neural network that should be used and about the working of the device. Finally, week 8 is used to prepare for the presentation.

Currently, the activities are divided between the neural network / image recognition and the design of the device. Kevin and Lotte will work on the design of the device, and Menno, Marijn and Dennis will work on the neural networks.

Milestones

| Week | Milestones |

|---|---|

| 1 (April 20th till April 26th) | Correct information and knowledge for first meeting |

| 2 (April 27th till May 3rd) | Further research on different types of Neural Networks and having a working example of a CNN. |

| 3 (May 4th till May 10th) | Elaborate the first ideas of the design of the device and find or create a usable database. |

| 4 (May 11th till May 17th) | First findings of correctness of different Neural Networks and tests of different types of Neural Networks. |

| 5 (May 18th till May 24th) | Conclusion of the best working neural network and final visualisation of the design. |

| 6 (May 25th till May 31st) | First set-up of wiki page with the found conclusions of Neural Networks and design with correct visualisation of the findings. |

| 7 (June 1st till June 7th) | Creation of the final wiki-page |

| 8 (June 8th till June 14th) | Presentation and visualisation of final presentation |

Deliverables

- Design of the SPlaSh

- Software for image recognition

- Complete wiki-page

- Final presentation

State-of-the-Art

Ocean-cleanup solutions

To clean up the plastic soup, a couple of ideas have already been proposed. An example that is already functioning is the WasteShark [7]. Other ideas to clean up the plastic soup are usually still concepts [8] that involve futuristic technology or a lot of effort from local fisheries.

The most recent development to clean up plastic soup was made by The Ocean Cleanup. The Ocean Cleanup is a Dutch foundation founded in 2013 by Boyan Slat. It aims to develop advanced technologies to get the plastic out of the ocean. They say that all measures should be autonomous, energy neutral, and scalable [1]. Systems can be made autonomous with the help of algorithms. They can be made energy neutral by using solar-powered electronics. Scalability will be achieved by gradually increasing the number of systems in the oceans.

Slat's plan was to collect plastic waste in the upper 10 feet of the ocean using a large floating snake (called Wilson), which acts as a barrier for the plastic. A submerged parachute, or boats, would drag the long tube along the ocean in a U-shape, making all waste clog up in the center.

However, this initiative also had problems. Their tests have, so far, led to failures. During the first tests, in late 2018, it was found that collected plastic exited the system as easily as it entered [9]. Early in 2019, the system had even broken (as was expected [10]), requiring repairs. Besides breakdowns, it was also questioned whether Wilson is really eco-friendly. The floating snake could theoretically also trap floating sea life [6] and no proof was found to the contrary. In fact, the floating 'island' of plastic in the North Pacific was found to have become home to sea anemones, algae, clams, and mussels [10]. The system invented by Boyan Slat is unable to get rid of this plastic without harming the sea life there.

This is why it will be very important for future developments to have improved autonomy. Systems should, for example, be able to distinguish between fish and trash by themselves. That way, trash collection can be done while minimizing harm to sea life.

Neural Networks

Neural networks are a set of algorithms that are designed to recognize patterns. They interpret sensory data through machine perception, labeling or clustering raw input. The patterns they recognize are numerical, contained in vectors. Real-world data, such as images, sound, text or time series, needs to be translated into such numerical data to process it [11].
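
As an illustration of translating real-world data into numerical vectors, the sketch below flattens a tiny RGB image into a list of numbers between 0 and 1. The 2x2 image and the function name `image_to_vector` are made up for this example; real pipelines normally use a library such as NumPy for this step.

```python
def image_to_vector(image):
    """Flatten rows of (r, g, b) pixels with 0-255 channels into floats in [0, 1]."""
    return [channel / 255.0 for row in image for pixel in row for channel in pixel]


# Hypothetical 2x2 RGB image, one (r, g, b) tuple per pixel.
tiny_image = [
    [(0, 0, 255), (255, 255, 255)],
    [(0, 128, 0), (0, 0, 0)],
]

vector = image_to_vector(tiny_image)
print(len(vector))  # 2 x 2 pixels x 3 channels
print(vector)
```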

There are different types of neural networks [12]:

- Recurrent neural network: Recurrent neural networks, also known as RNNs, are a class of neural networks that allow previous outputs to be used as inputs while having hidden states. These networks are mostly used in the fields of natural language processing and speech recognition [13].

- Convolutional neural networks: Convolutional neural networks, also known as CNNs, are used for image classification.

- Hopfield networks: Hopfield networks are used to collect and retrieve memory like the human brain. The network can store various patterns or memories. It is able to recognize any of the learned patterns by uncovering data about that pattern [14].

- Boltzmann machine networks: Boltzmann machines are used for search and learning problems [15].
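
To illustrate how a Hopfield network can retrieve a learned pattern from incomplete or distorted data, here is a minimal sketch using the classical Hebbian learning rule and one-neuron-at-a-time updates. The pattern, the function names and the number of sweeps are assumptions chosen for this example, not part of the project.

```python
def train_hopfield(patterns):
    """Hebbian learning: sum the outer products of the patterns, zero diagonal."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, sweeps=5):
    """Update neurons one at a time until the state stops changing."""
    state = list(state)
    for _ in range(sweeps):
        changed = False
        for i in range(len(state)):
            activation = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if activation >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Patterns use +1 / -1 values; this one is hypothetical.
stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])

noisy = [1, -1, 1, -1, 1, -1, -1, 1]  # last two entries flipped
print(recall(weights, noisy) == stored)
```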

Convolutional Neural Networks

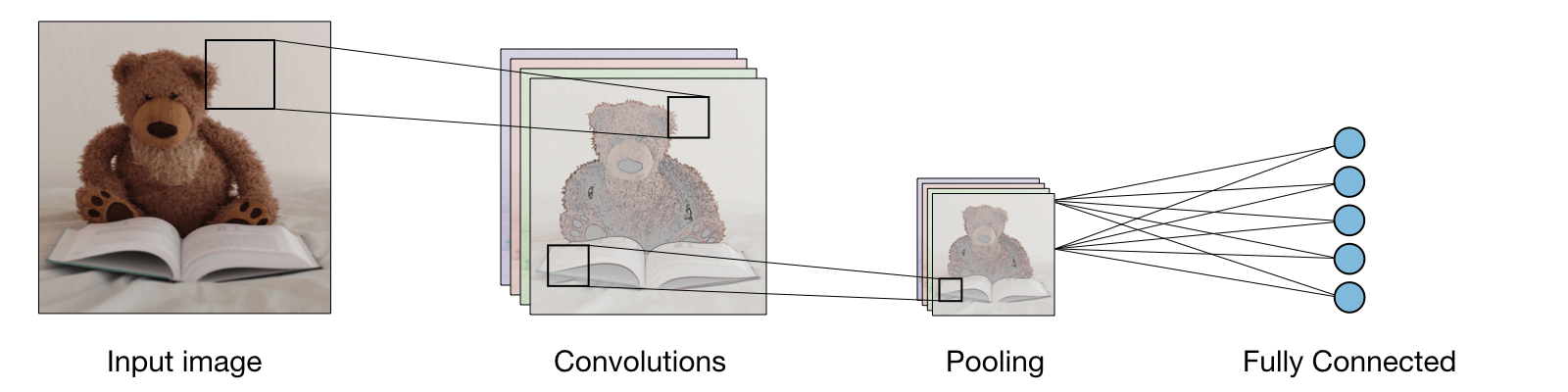

In this project, the neural network should retrieve data from images. Therefore a convolutional neural network will be used. Convolutional neural networks are generally composed of the following layers [16]:

- Convolutional layer: transforms the input data to detect patterns, edges and other characteristics in order to be able to correctly classify the data. The main parameters that can be changed in a convolutional layer are the activation function and the kernel size.

- Max pooling layer: reduces the number of pixels in the output of the previously applied convolutional layer(s). Max pooling is applied to reduce overfitting. A problem with the output feature maps is that they are sensitive to the location of the features in the input; a max pooling layer addresses this by making the downsampled feature maps more robust to changes in the position of the feature in the image. The pool size determines the number of pixels from the input data that is turned into 1 pixel of the output data.

- Fully connected layer: connects all input values via separate connections to an output channel. Since this project deals with a binary problem, the final fully connected layer will consist of 1 output.

Stochastic gradient descent (SGD) is the most common and basic optimizer used for training a CNN [17]. It updates the model parameters based on the gradient of the loss function. However, many other optimizers have been developed that could give a better result. Momentum keeps the history of the previous update steps and combines this information with the next gradient step to reduce the effect of outliers [18]. RMSprop also tries to keep the updates stable, but in a different way than momentum: it scales each step by a running average of recent squared gradients. RMSprop also takes away the need to adjust the learning rate [19]. Adam takes the ideas behind both momentum and RMSprop and combines them into one optimizer [20]. Nesterov momentum is a smarter version of the momentum optimizer that looks ahead: it evaluates the gradient at the position the momentum step would reach and adjusts the update accordingly [21]. Nadam is an optimizer that combines RMSprop and Nesterov momentum [22].
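
The difference between these optimizers is easiest to see in their update rules. The sketch below applies textbook versions of SGD, momentum, RMSprop and Adam to a toy one-parameter loss, f(w) = (w - 3)^2. The loss, the hyperparameter values and the function names are assumptions for illustration only; a deep learning framework would provide these optimizers ready-made, and Nesterov momentum and Nadam are left out to keep the example short.

```python
import math


def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, which has its minimum at w = 3."""
    return 2.0 * (w - 3.0)


def sgd(w, steps=300, lr=0.1):
    for _ in range(steps):
        w -= lr * grad(w)  # plain step against the gradient
    return w


def momentum(w, steps=300, lr=0.1, beta=0.9):
    velocity = 0.0
    for _ in range(steps):
        velocity = beta * velocity + grad(w)  # history of previous steps
        w -= lr * velocity
    return w


def rmsprop(w, steps=300, lr=0.05, decay=0.9, eps=1e-8):
    avg_sq = 0.0
    for _ in range(steps):
        g = grad(w)
        avg_sq = decay * avg_sq + (1 - decay) * g * g  # running mean of g^2
        w -= lr * g / (math.sqrt(avg_sq) + eps)        # step scaled per parameter
    return w


def adam(w, steps=300, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # momentum-like average of gradients
        v = beta2 * v + (1 - beta2) * g * g  # RMSprop-like average of g^2
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


for name, optimizer in [("SGD", sgd), ("Momentum", momentum),
                        ("RMSprop", rmsprop), ("Adam", adam)]:
    print(name, round(optimizer(0.0), 3))
```

All four should end close to w = 3. With a fixed learning rate, RMSprop keeps taking steps of roughly constant size, so it hovers around the minimum rather than settling on it exactly.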

Image Recognition

Over the past decade or so, great steps have been made in developing deep learning methods for image recognition and classification [23]. In recent years, convolutional neural networks (CNNs) have shown significant improvements in image classification [24]. It has been demonstrated that the representation depth is beneficial for the classification accuracy [25]. Another method is the use of VGG networks, which are known for their state-of-the-art performance in image feature extraction. Their setup consists of repeated patterns of 1, 2 or 3 convolution layers and a max-pooling layer, finishing with one or more dense layers. The convolutional layer transforms the input data to detect patterns, edges and other characteristics in order to be able to correctly classify the data. The main parameters that can be changed in a convolutional layer are the activation function and the kernel size [25].
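
To show what a convolutional layer computes, the sketch below slides a 2x2 kernel over a tiny grayscale image and applies a ReLU activation. The image and the hand-picked edge kernel are hypothetical; in a trained CNN the kernel values are learned from data, and a framework would perform this operation far more efficiently.

```python
def conv2d(image, kernel):
    """Valid (no padding) sliding-window product, as in a CNN convolutional layer."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(k):
                for j in range(k):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Activation function: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked 2x2 kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, vertical_edge))
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the middle column, where the window covers the transition from dark to bright. Changing the kernel size or the activation function changes which patterns the layer responds to.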

There are still limitations to the current image recognition technologies. First of all, most methods are supervised, which means they need large amounts of labelled training data that have to be put together by someone [23]. This can be solved by using unsupervised deep learning instead of supervised learning. For unsupervised learning, instead of large databases, only some labels will be needed to make sense of the world. Currently, there are no unsupervised methods that outperform supervised ones. This is because supervised learning can better encode the characteristics of a set of data. The hope is that in the future unsupervised learning will provide more general features so any task can be performed [26]. Another problem is that small distortions can sometimes cause a wrong classification of an image [23] [27]. Shadows on an object, which cause color and shape differences, can already be enough [28]. A different pitfall is that the output feature maps are sensitive to the specific location of the features in the input. One approach to address this sensitivity is to use a max pooling layer. Max pooling layers reduce the number of pixels in the output of the previously applied convolutional layer(s). The pool size determines the number of pixels from the input data that is turned into 1 pixel of the output data. This has the effect of making the resulting downsampled feature maps more robust to changes in the position of the feature in the image [25].

Specific research has been carried out into image recognition and classification of fish in the water. For example, one study used state-of-the-art object detection to detect, localize and classify fish species using visual data obtained by underwater cameras. The initial goal was to recognize herring and mackerel, and this work was specifically developed for poorly conditioned waters. Their experiments on a dataset obtained at sea showed a successful detection rate of 66.7% and a successful classification rate of 89.7% [29]. There are also studies that researched image recognition and classification of microplastics. By using computer vision for analyzing required images, and machine learning techniques to develop classifiers for four types of microplastics, an accuracy of 96.6% was achieved [30].

For these recognitions, image databases need to be found for the recognition of fish and plastic. First of all, ImageNet can be used, which is a database with many pictures of different subjects. Secondly, databases of different fish have been found:

- http://groups.inf.ed.ac.uk/f4k/GROUNDTRUTH/RECOG/

- https://wiki.qut.edu.au/display/cyphy/Fish+Dataset
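
The robustness that max pooling gives against small shifts can be demonstrated with a short sketch. The two 4x4 feature maps below are made up: they contain the same two features, moved by one pixel, and a pool size of 2 is assumed.

```python
def max_pool(feature_map, pool_size=2):
    """Non-overlapping max pooling; assumes dimensions divisible by pool_size."""
    pooled = []
    for r in range(0, len(feature_map), pool_size):
        row = []
        for c in range(0, len(feature_map[0]), pool_size):
            window = [
                feature_map[r + i][c + j]
                for i in range(pool_size)
                for j in range(pool_size)
            ]
            row.append(max(window))  # pool_size^2 input pixels become 1 output pixel
        pooled.append(row)
    return pooled


# Hypothetical feature map with two strong responses.
feature_a = [
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 5],
]

# The same responses, each shifted by one pixel inside its pooling window.
feature_b = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 5, 0],
    [0, 0, 0, 0],
]

print(max_pool(feature_a))
print(max_pool(feature_b))
```

Both inputs pool to the same 2x2 output, so the layers after the pooling step see no difference between them. A shift that moves a feature across a window boundary would still change the output, which is why pooling only makes the network more robust to position, not fully invariant.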

Further exploration

WasteShark

A good example of a working plastic-cleanup device is the WasteShark. This device is manufactured by RanMarine Technology and floats on the water surface of rivers, ports and marinas to collect plastics, bio-waste and other debris [31]. It currently operates at coasts, in rivers and in harbours around the world - also in the Netherlands. The idea is to collect the plastic waste before a tide takes it out into the deep ocean, where the waste is much harder to collect.

WasteSharks can collect 180 liters of trash at a time, before having to return to an on-land unloading station. They also charge there. The WasteShark has no carbon emissions, operating on solar power and batteries. The batteries can last 8-16 hours. Both an autonomous model and a remote-controlled model are available [31]. This model seems to tick all the boxes (autonomous, energy neutral, and scalable) set by The Ocean Cleanup.

Functionalities

How does the WasteShark work? Floating plastic that lies in the path of the WasteShark is detected using laser imaging detection and ranging (LIDAR) technology. This means the WasteShark sends out a signal, and measures the time it takes until a reflection is detected [32]. From this, the software can figure out the distance of the object that caused the reflection. The WasteShark can then decide to approach the object, or stop / back up a little in case the object is coming closer [33]. This is probably for self-protection. The design of the WasteShark makes it so that plastic waste can go in easily, but can hardly go out of it. The only moving parts of the design are two thrusters which propel the WasteShark forward or backward [34]. This means that the design is very robust, which is important in the environments it is designed to work in.

The autonomous version of the WasteShark has some extra functionalities, too. It also collects water quality data, scans the seabed to chart its shape, and filters chemicals that might be present out of the water [33]. This helps harbour organisations to analyze the health of the water. To perform autonomously, this design also has a mission planning ability. In the future, the device should even be able to construct a predictive model of where trash collects in the water [34].

A fully autonomous model can be bought for just under $23,000 [33], making it pretty affordable for governments to invest in.
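
The time-of-flight principle behind LIDAR comes down to one formula: the signal travels to the object and back, so the distance is the speed of light times the measured time, divided by two. The sketch below only illustrates this principle. The function names, the example timing and the 5 cm tolerance are assumptions, and nothing here is taken from the actual WasteShark software.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_echo(round_trip_time_s):
    """Distance to the reflecting object, given the round-trip time of the pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice: to the object and back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def is_approaching(previous_distance_m, current_distance_m, tolerance_m=0.05):
    """True when the object got closer by more than the (assumed) tolerance."""
    return previous_distance_m - current_distance_m > tolerance_m


# Hypothetical measurement: the reflection arrives after 20 nanoseconds.
print(distance_from_echo(20e-9))  # roughly 3 metres

# Two consecutive hypothetical measurements of the same object.
print(is_approaching(3.0, 2.5))
```

Comparing consecutive distance measurements in this way is one possible basis for the decision to approach an object or to back away from it.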

The WasteShark is claimed to be harmless to humans or animals. Further research as to whether or why this claim is true is done below.

Location

Plastic

Fish

Boats

Useful sources

Convolutional neural networks for visual recognition [35]

Using Near-Field Stereo Vision for Robotic Grasping in Cluttered Environments [36]

Logbook

Week 1

| Name | Total hours | Break-down |

|---|---|---|

| Kevin Cox | 6 | Meeting (1h), Problem statement and objectives (1.5h), Who are the users (1h), Requirements (0.5h), Adjustments on wiki-page (2h) |

| Menno Cromwijk | 9 | Meeting (1h), Thinking about project-ideas (4h), Working out previous CNN work (2h), creating planning (2h). |

| Dennis Heesmans | 8.5 | Meeting (1h), Thinking about project-ideas (3h), State-of-the-art: neural networks (3h), Adjustments on wiki-page (1.5h) |

| Marijn Minkenberg | 7 | Meeting (1h), Setting up wiki page (1h), State-of-the-art: ocean-cleaning solutions (part of which was moved to Problem Statement) (4h), Reading through wiki page (1h) |

| Lotte Rassaerts | 7 | Meeting (1h), Thinking about project-ideas (2h), State of the art: image recognition (4h) |

Week 2

| Name | Total hours | Break-down |

|---|---|---|

| Kevin Cox | hrs | Meeting (1.5h), ... |

| Menno Cromwijk | hrs | Meeting (1.5h), Installing CNN tools (2h) |

| Dennis Heesmans | hrs | Meeting (1.5h), Installing CNN tools (2h) |

| Marijn Minkenberg | 4 | Meeting (1.5h), Checking the wiki page (0.5h), Installing CNN tools (2h) |

| Lotte Rassaerts | hrs | Meeting (1.5h), ... |

Template

| Name | Total hours | Break-down |

|---|---|---|

| Kevin Cox | hrs | description (Xh) |

| Menno Cromwijk | hrs | description (Xh) |

| Dennis Heesmans | hrs | description (Xh) |

| Marijn Minkenberg | hrs | description (Xh) |

| Lotte Rassaerts | hrs | description (Xh) |

References

- [1] Oceans. (2020, March 18). Retrieved April 23, 2020, from https://theoceancleanup.com/oceans/

- [2] Wikipedia contributors. (2020, April 13). Microplastics. Retrieved April 23, 2020, from https://en.wikipedia.org/wiki/Microplastics

- [3] Suaria, G., Avio, C. G., Mineo, A., Lattin, G. L., Magaldi, M. G., Belmonte, G., … Aliani, S. (2016). The Mediterranean Plastic Soup: synthetic polymers in Mediterranean surface waters. Scientific Reports, 6(1). https://doi.org/10.1038/srep37551

- [4] Foekema, E. M., De Gruijter, C., Mergia, M. T., van Franeker, J. A., Murk, A. J., & Koelmans, A. A. (2013). Plastic in North Sea Fish. Environmental Science & Technology, 47(15), 8818–8824. https://doi.org/10.1021/es400931b

- [5] Rochman, C. M., Hoh, E., Kurobe, T., & Teh, S. J. (2013). Ingested plastic transfers hazardous chemicals to fish and induces hepatic stress. Scientific Reports, 3(1). https://doi.org/10.1038/srep03263

- [6] Helm, R. R. (2019, February 21). The Ocean Cleanup struggles to prove it will not harm sea life. Retrieved April 24, 2020, from https://www.deepseanews.com/2019/02/the-ocean-cleanup-struggles-to-prove-it-will-not-harm-sea-life/

- [7] Nobleo Technology. (n.d.). Fully Autonomous WasteShark. Retrieved April 23, 2020, from https://nobleo-technology.nl/project/fully-autonomous-wasteshark/

- [8] Onze missie. (2020, April 6). Retrieved April 23, 2020, from https://www.plasticsoupfoundation.org

- [9] K. (2019, May 20). Wilson Update – Tweaking the System. Retrieved April 24, 2020, from https://theoceancleanup.com/updates/wilson-update-tweaking-the-system/

- [10] 6 Reasons That Floating Ocean Plastic Cleanup Gizmo is a Horrible Idea. (2016, November 9). Retrieved April 24, 2020, from https://www.kcet.org/redefine/6-reasons-that-floating-ocean-plastic-cleanup-gizmo-is-a-horrible-idea

- [11] Nicholson, C. (n.d.). A Beginner’s Guide to Neural Networks and Deep Learning. Retrieved April 22, 2020, from https://pathmind.com/wiki/neural-network

- [12] Cheung, K. C. (2020, April 17). 10 Use Cases of Neural Networks in Business. Retrieved April 22, 2020, from https://algorithmxlab.com/blog/10-use-cases-neural-networks/#What_are_Artificial_Neural_Networks_Used_for

- [13] Amidi, A., & Amidi, S. (n.d.). CS 230 - Recurrent Neural Networks Cheatsheet. Retrieved April 22, 2020, from https://stanford.edu/%7Eshervine/teaching/cs-230/cheatsheet-recurrent-neural-networks

- [14] Hopfield Network - Javatpoint. (n.d.). Retrieved April 22, 2020, from https://www.javatpoint.com/artificial-neural-network-hopfield-network

- [15] Hinton, G. E. (2007). Boltzmann Machines. Retrieved from https://www.cs.toronto.edu/~hinton/csc321/readings/boltz321.pdf

- [16] Amidi, A., & Amidi, S. (n.d.). CS 230 - Convolutional Neural Networks Cheatsheet. Retrieved April 22, 2020, from https://stanford.edu/%7Eshervine/teaching/cs-230/cheatsheet-convolutional-neural-networks

- [17] Yamashita, R., Nishio, M., Do, R., & Togashi, K. (2018). Convolutional neural networks: an overview and application in radiology. Insights into Imaging, 9. https://doi.org/10.1007/s13244-018-0639-9

- [18] Qian, N. (1999, January 12). On the momentum term in gradient descent learning algorithms. - PubMed - NCBI. Retrieved April 22, 2020, from https://www.ncbi.nlm.nih.gov/pubmed/12662723

- [19] Hinton, G., Srivastava, N., Swersky, K., Tieleman, T., & Mohamed, A. (2016, December 15). Neural Networks for Machine Learning: Overview of ways to improve generalization [Slides]. Retrieved from http://www.cs.toronto.edu/~hinton/coursera/lecture9/lec9.pdf

- [20] Kingma, D. P., & Ba, J. (2015). Adam: A Method for Stochastic Optimization. Presented at the 3rd International Conference for Learning Representations, San Diego.

- [21] Nesterov, Y. (1983). A method for unconstrained convex minimization problem with the rate of convergence o(1/k^2).

- [22] Dozat, T. (2016). Incorporating Nesterov Momentum into Adam. Retrieved from https://openreview.net/pdf?id=OM0jvwB8jIp57ZJjtNEZ

- [23] Seif, G. (2018, January 21). Deep Learning for Image Recognition: why it’s challenging, where we’ve been, and what’s next. Retrieved April 22, 2020, from https://towardsdatascience.com/deep-learning-for-image-classification-why-its-challenging-where-we-ve-been-and-what-s-next-93b56948fcef

- [24] Lee, G., & Fujita, H. (2020). Deep Learning in Medical Image Analysis. New York, United States: Springer Publishing.

- [25] Simonyan, K., & Zisserman, A. (2015, January 1). Very deep convolutional networks for large-scale image recognition. Retrieved April 22, 2020, from https://arxiv.org/pdf/1409.1556.pdf

- [26] Culurciello, E. (2018, December 24). Navigating the Unsupervised Learning Landscape - Intuition Machine. Retrieved April 22, 2020, from https://medium.com/intuitionmachine/navigating-the-unsupervised-learning-landscape-951bd5842df9

- [27] Bosse, S., Becker, S., Müller, K.-R., Samek, W., & Wiegand, T. (2019). Estimation of distortion sensitivity for visual quality prediction using a convolutional neural network. Digital Signal Processing, 91, 54–65. https://doi.org/10.1016/j.dsp.2018.12.005

- [28] Brooks, R. (2018, July 15). [FoR&AI] Steps Toward Super Intelligence III, Hard Things Today – Rodney Brooks. Retrieved April 22, 2020, from http://rodneybrooks.com/forai-steps-toward-super-intelligence-iii-hard-things-today/

- [29] Christensen, J. H., Mogensen, L. V., Galeazzi, R., & Andersen, J. C. (2018). Detection, Localization and Classification of Fish and Fish Species in Poor Conditions using Convolutional Neural Networks. 2018 IEEE/OES Autonomous Underwater Vehicle Workshop (AUV). https://doi.org/10.1109/auv.2018.8729798

- [30] Castrillon-Santana, M., Lorenzo-Navarro, J., Gomez, M., Herrera, A., & Marín-Reyes, P. A. (2018, January 1). Automatic Counting and Classification of Microplastic Particles. Retrieved April 23, 2020, from https://www.scitepress.org/Papers/2018/67250/67250.pdf

- [31] WasteShark ASV | RanMarine Technology. (2020, February 27). Retrieved May 2, 2020, from https://www.ranmarine.io/

- [32] Wikipedia contributors. (2020, May 2). Lidar. Retrieved May 2, 2020, from https://en.wikipedia.org/wiki/Lidar

- [33] Swan, E. C. (2018, October 31). Trash-eating “shark” drone takes to Dubai marina. Retrieved May 2, 2020, from https://edition.cnn.com/2018/10/30/middleeast/wasteshark-drone-dubai-marina/index.html

- [34] CORDIS. (2019, March 11). Marine Litter Prevention with Autonomous Water Drones. Retrieved May 2, 2020, from https://cordis.europa.eu/article/id/254172-aquadrones-remove-deliver-and-safely-empty-marine-litter

- [35] CS231n: Convolutional Neural Networks for Visual Recognition. (n.d.). Retrieved April 22, 2020, from https://cs231n.github.io/neural-networks-1/

- [36] Leeper, A. (2020, April 24). Using Near-Field Stereo Vision for Robotic Grasping in Cluttered Environments. Retrieved April 24, 2020, from https://link.springer.com/chapter/10.1007/978-3-642-28572-1_18