Mobile Robot Control 2024 Robocop: Difference between revisions

mNo edit summary |

|||

| (16 intermediate revisions by 4 users not shown) | |||

| Line 24: | Line 24: | ||

|1859234 | |1859234 | ||

|} | |} | ||

== Exercise 1 == | |||

=== Method 1 === | |||

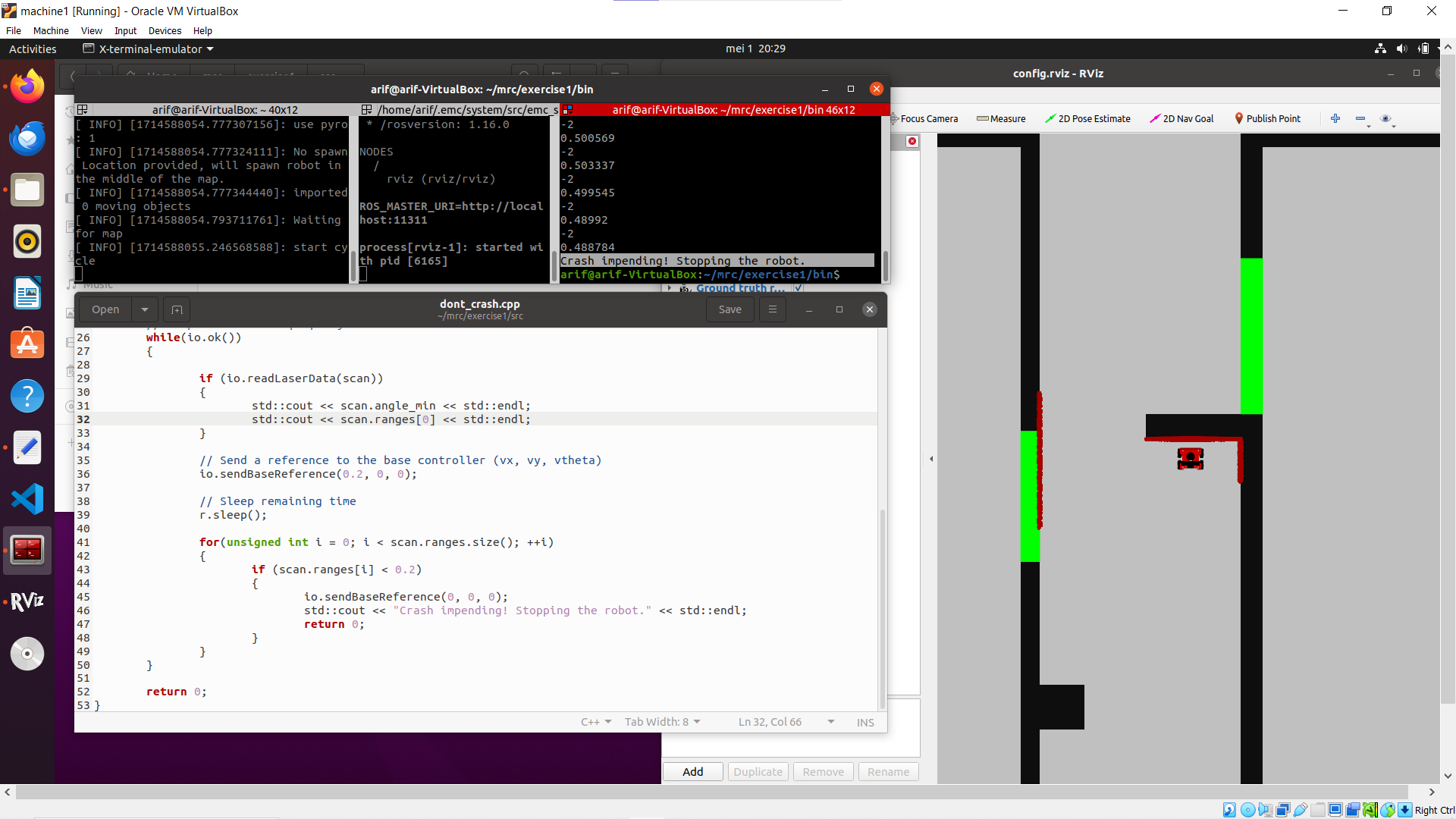

[[File:Dont crash 1.png|thumb|591x591px|Screenshot of final result for Method 1.]] | |||

In this method the LaserData struct is used to track the measured distances from the walls to the robot. To make the robot drive forward the sendBaseReference command is used, and the robot moves in the x direction at a speed of 0.2. Once it reaches the wall in front of it and the range values drop to below 0.2, motion is halted and a message is printed to the screen before exiting the program. | |||

Some experimentation was done to test different speeds and thresholds for the stopping range values. When the speed was higher than the stopping range value the robot would actually crash into the wall first before stopping, and if it was lower then the robot would stop slightly farther away from the wall. However, this only seemed to be the case when the stopping value was very low (e.g. 0.1), but increasing it to 0.2, for example, allowed the speed to be increased to 0.4 without any crashing. | |||

'''''On your wiki. Compare the different methods to one another. What situations do these methods handle well? Is there one "Best" method?''''' | |||

==Exercise 2== | |||

=== Method 1=== | |||

The previously described method was tested on the two provided maps with input speeds of (0.3, 0, +-0.3) and a stopping value of 0.2. With both maps the robot successfully came to a stop before crashing, although it struggled when driving into a corner and stopped much closer to the wall than it did in previous tests. | |||

<table> <tr> <td> [[File:Dont crash ex2 map1.png|thumb|268x268px|Method 1 on Map 1.|none]] </td> <td>[[File:Dont crash ex2 map2.png|thumb|273x273px|Method 1 on Map 2.|none]] </td> </tr> </table> | |||

==Practical Exercises 1== | |||

'''On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot.''' | |||

When running the sim-rviz environment on the Bobo robot there was significant noise even when remaining stationary, as shown in the GIF below. Due to the density in hit points for the lasers, the measurement of solid flat walls was reliable. However, the noise had high amounts of variation when looking at the edges or corners of a wall, especially when corners were slightly behind the robot, which could indicate weak peripheral vision. Then, black coloured and thin objects were placed in front of the sensors and the robot struggled to detect them. Even when they ''are'' detected they still appear on the visualization with heavy amounts of noise. In contrast, our own legs, rolled up pieces of paper and contours were more clearly defined when placed in front of the robot.[[File:Noise rviz.gif|center|thumb|Video of noise measurements in the sim-rviz environment.]]'''Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)''' | |||

Two different don't crash codes were tested on the robot. The first code contained the method of detecting walls within a certain distance and telling the robot to stop and remain in position once it came too close. The second code contained the more elaborate method where the robot looked for the next direction of movement that did not have any walls within a set distance, and then continued driving in that direction. For the first code, it became apparent that in the simulation there was a higher margin for error in terms of how close the robot could get to the walls without the system alerting the user of a crash. For the real life test to work, the moving speed had to be reduced and the distance threshold increased to account for things like the robot's size. For the second code, the robot was able to follow simulation results and managed to perform well without needing modifications. GIFs of both code tests can be seen below. | |||

<table> <tr> <td> [[File:Dont crash 1 gif.gif|thumb|307x307px|Video of tests on the first code.|none]] </td> <td>[[File:Dont crash 2 gif.gif|thumb|307x307px|Video of tests on the second code.|none]] </td> </tr> </table> | |||

'''If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?''' | |||

As stated before, some changes had to be made to the moving speed and threshold for distances that counted as a "crash". This is not something that was immediately apparent in the simulations beforehand, but in hindsight it could have been prevented before testing the code on the actual robot. Of course, the robot in the simulations might not have the same dimensions as its real life counterpart, and so, the measure tool in the rviz environment could have been use to precisely measure the distance at which the robot was stopping, and whether or not it would be far enough for an actual robot. | |||

==Local Navigation== | |||

===Dynamic Window Approach=== | |||

=====The approach===== | |||

The approach can be divided in the following sequential steps: | |||

# '''Calculate the union between the Possible (angular) Velocities (Vs, Ws) and the Reachable (angular) Velocities (Vd, Wd)''' | |||

#* EXPLAIN HOW | |||

# '''Iterate through the discretized values (''v, w'') of the union of Vs, Vd and Ws and Wd:''' | |||

##'''Calculate the Admissable (angular) Velocities (Va,Wa) using ''v'' and ''w''''' | |||

##* EXPLAIN HOW | |||

## '''Check if ''v'' and ''w'' are also in Va and Wa, if this is the case:''' | |||

##*'''Calculate the optimization function value. If this value is higher than the previous optimization value, set the current ''v and w'' to ''v_final'' and ''w_final''''' | |||

##**EXPLAIN HOW | |||

#'''Return ''v_final, w''_final''' | |||

Our approach for the Dynamic Window Approach (DWA) is that we first make a list of samples that fit inside the possible velocity limit (Vs) and reachable velocity and acceleration constraints (Vd). After which we | |||

===== Questions===== | |||

'''1. What are the advantages and disadvantages of your solutions?''' | |||

Advantages: | |||

#By constantly calculating possible trajectories it is able to avoid dynamic and static obstacles. | |||

#By including the robot acceleration and deceleration constraints it ensures for a smooth navigation without abrupt movements. | |||

Disadvantages: | |||

#DWA may get trapped in a local minima. | |||

#There is a high computational load in calculating the multiple trajectories in real-time | |||

'''2. What are possible scenarios which can result in failures?''' | |||

#Like mentioned in question 1 DWA can get trapped in a local minima. | |||

#*'''Solution:''' Include the global planner so it doesn't drive into a local minima | |||

#We also encounter some problems when the robot is driving to a straight wall and can't decide with direction to take. (see video: https://gitlab.tue.nl/mobile-robot-control/mrc-2024/robocop/-/blob/main/videos_and_photos/dwa_straight_wall.mp4) | |||

#*'''Solution:''' Increase Ks and decrease Kh so the robot is less constrained to drive directly towards the goal. | |||

'''3. How are the local and global planner linked together?''' | |||

===Vector Field Histograms=== | |||

=====The approach (main idea)===== | |||

The idea of the VFH is to create a vector field histogram from the laserdata of the robot. the VFH is a graph with angle on the x axis and likelihood of a blocking object on the y axis. When the y axis is below a certain threshold, we know that the robot can drive unobstructed in corresponding direction. We can now choose the angle that is unobstructed and closest to the wanted goal. In our approach of the circa 1000 laser be shapams 100 histogram bins are created. the circa 10 beams per bin get inversely (1/distance) added. This creates higher values of bins where there are obstructions. | |||

=====Results===== | |||

=====Questions===== | |||

#'''What are the advantages and disadvantages of your solutions?''' the algorithm is computationally efficient. the laser scan data gets looped through a few times (collecting laser data, making the VFH from the laserdata, optional filtering of the VFH, choosing the best angle). This way the algorithm updates the robot without to much delay from the real time data. a disadvantage of the algorithm is that it doesn't account well for size of the robot. even when inflating the walls in software the casting of the laser beam make it unpredictable if you actually drive aground a wall instead of next to the wall. It does drive itself around but the path isn't always optimal. | |||

#'''What are possible scenarios which can result in failures?'''the algorithm will not navigate mazes itself. If there are too much obstructions the robot could get stuck between a few points. When entering for example something like a U shape that is larger than its viewing window. The robot will enter the hallway thinking it's is free, when seeing the end walls it will turn around until it doesn't see the wall anymore. At that point the robot will again turn around to get stuck in a loop. | |||

#'''How would you prevent these scenarios from happening?'''By integrating a good global planner the robot already won't go into these U shapes. If it finds itself stuck on a point that a global map didn't see the robot can report this back and again find a global route that can be locally navigated. | |||

#'''How are the local and global planner linked together?''' | |||

==Global Navigation == | |||

=====Questions===== | |||

# '''How could finding the shortest path through the maze using the A* algorithm be made more efficient by placing the nodes differently? Sketch the small maze with the proposed nodes and the connections between them. Why would this be more efficient?''' | |||

#'''Would implementing PRM in a map like the maze be efficient?''' | |||

#'''What would change if the map suddenly changes (e.g. the map gets updated)?''' | |||

#'''How did you connect the local and global planner?''' | |||

#'''Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?''' | |||

#'''Run the A* algorithm using the gridmap (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.''' | |||

''Upload screen recordings of the simulation results and comment on the behavior of the robot. If the robot does not make it to the end, describe what is going wrong and how you would solve it (if time would allow)'' | |||

''Upload video's of the robot's performance in real life (same as simulation videos), and comment the seen behavior (similar to the previous question).'' | |||

==Localisation== | |||

Latest revision as of 19:44, 3 June 2024

Group members:

| Name | student ID |

|---|---|

| Matijs van Kempen | 2060256 |

| Luc Manders | 1729225 |

| Marc Quelle | 2044749 |

| Marijn Ruiter | 1489496 |

| Luke Alkemade | 1581643 |

| Arif Ashworth | 1544632 |

| Abhidnya Kadu | 1859234 |

Exercise 1

Method 1

In this method the LaserData struct is used to track the measured distances from the walls to the robot. To make the robot drive forward the sendBaseReference command is used, and the robot moves in the x direction at a speed of 0.2. Once it reaches the wall in front of it and the range values drop to below 0.2, motion is halted and a message is printed to the screen before exiting the program.

Some experimentation was done to test different speeds and thresholds for the stopping range values. When the speed was higher than the stopping range value the robot would actually crash into the wall first before stopping, and if it was lower then the robot would stop slightly farther away from the wall. However, this only seemed to be the case when the stopping value was very low (e.g. 0.1), but increasing it to 0.2, for example, allowed the speed to be increased to 0.4 without any crashing.

On your wiki. Compare the different methods to one another. What situations do these methods handle well? Is there one "Best" method?

Exercise 2

Method 1

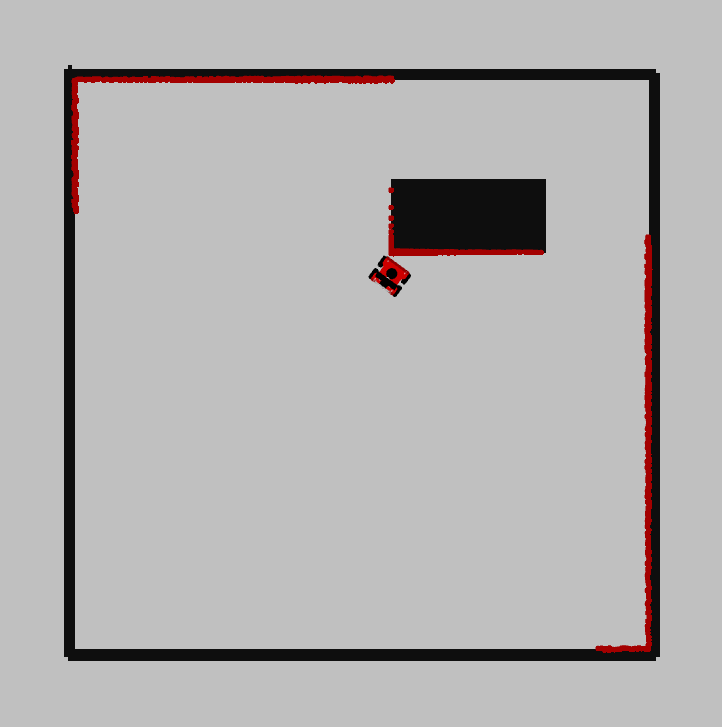

The previously described method was tested on the two provided maps with input speeds of (0.3, 0, +-0.3) and a stopping value of 0.2. With both maps the robot successfully came to a stop before crashing, although it struggled when driving into a corner and stopped much closer to the wall than it did in previous tests.

Practical Exercises 1

On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot.

When running the sim-rviz environment on the Bobo robot there was significant noise even when remaining stationary, as shown in the GIF below. Due to the density in hit points for the lasers, the measurement of solid flat walls was reliable. However, the noise had high amounts of variation when looking at the edges or corners of a wall, especially when corners were slightly behind the robot, which could indicate weak peripheral vision. Then, black coloured and thin objects were placed in front of the sensors and the robot struggled to detect them. Even when they are detected they still appear on the visualization with heavy amounts of noise. In contrast, our own legs, rolled up pieces of paper and contours were more clearly defined when placed in front of the robot.

Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)

Two different don't crash codes were tested on the robot. The first code contained the method of detecting walls within a certain distance and telling the robot to stop and remain in position once it came too close. The second code contained the more elaborate method where the robot looked for the next direction of movement that did not have any walls within a set distance, and then continued driving in that direction. For the first code, it became apparent that in the simulation there was a higher margin for error in terms of how close the robot could get to the walls without the system alerting the user of a crash. For the real life test to work, the moving speed had to be reduced and the distance threshold increased to account for things like the robot's size. For the second code, the robot was able to follow simulation results and managed to perform well without needing modifications. GIFs of both code tests can be seen below.

If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?

As stated before, some changes had to be made to the moving speed and threshold for distances that counted as a "crash". This is not something that was immediately apparent in the simulations beforehand, but in hindsight it could have been prevented before testing the code on the actual robot. Of course, the robot in the simulations might not have the same dimensions as its real life counterpart, and so, the measure tool in the rviz environment could have been use to precisely measure the distance at which the robot was stopping, and whether or not it would be far enough for an actual robot.

Dynamic Window Approach

The approach

The approach can be divided in the following sequential steps:

- Calculate the union between the Possible (angular) Velocities (Vs, Ws) and the Reachable (angular) Velocities (Vd, Wd)

- EXPLAIN HOW

- Iterate through the discretized values (v, w) of the union of Vs, Vd and Ws and Wd:

- Calculate the Admissable (angular) Velocities (Va,Wa) using v and w

- EXPLAIN HOW

- Check if v and w are also in Va and Wa, if this is the case:

- Calculate the optimization function value. If this value is higher than the previous optimization value, set the current v and w to v_final and w_final

- EXPLAIN HOW

- Calculate the optimization function value. If this value is higher than the previous optimization value, set the current v and w to v_final and w_final

- Calculate the Admissable (angular) Velocities (Va,Wa) using v and w

- Return v_final, w_final

Our approach for the Dynamic Window Approach (DWA) is that we first make a list of samples that fit inside the possible velocity limit (Vs) and reachable velocity and acceleration constraints (Vd). After which we

Questions

1. What are the advantages and disadvantages of your solutions?

Advantages:

- By constantly calculating possible trajectories it is able to avoid dynamic and static obstacles.

- By including the robot acceleration and deceleration constraints it ensures for a smooth navigation without abrupt movements.

Disadvantages:

- DWA may get trapped in a local minima.

- There is a high computational load in calculating the multiple trajectories in real-time

2. What are possible scenarios which can result in failures?

- Like mentioned in question 1 DWA can get trapped in a local minima.

- Solution: Include the global planner so it doesn't drive into a local minima

- We also encounter some problems when the robot is driving to a straight wall and can't decide with direction to take. (see video: https://gitlab.tue.nl/mobile-robot-control/mrc-2024/robocop/-/blob/main/videos_and_photos/dwa_straight_wall.mp4)

- Solution: Increase Ks and decrease Kh so the robot is less constrained to drive directly towards the goal.

3. How are the local and global planner linked together?

Vector Field Histograms

The approach (main idea)

The idea of the VFH is to create a vector field histogram from the laserdata of the robot. the VFH is a graph with angle on the x axis and likelihood of a blocking object on the y axis. When the y axis is below a certain threshold, we know that the robot can drive unobstructed in corresponding direction. We can now choose the angle that is unobstructed and closest to the wanted goal. In our approach of the circa 1000 laser be shapams 100 histogram bins are created. the circa 10 beams per bin get inversely (1/distance) added. This creates higher values of bins where there are obstructions.

Results

Questions

- What are the advantages and disadvantages of your solutions? the algorithm is computationally efficient. the laser scan data gets looped through a few times (collecting laser data, making the VFH from the laserdata, optional filtering of the VFH, choosing the best angle). This way the algorithm updates the robot without to much delay from the real time data. a disadvantage of the algorithm is that it doesn't account well for size of the robot. even when inflating the walls in software the casting of the laser beam make it unpredictable if you actually drive aground a wall instead of next to the wall. It does drive itself around but the path isn't always optimal.

- What are possible scenarios which can result in failures?the algorithm will not navigate mazes itself. If there are too much obstructions the robot could get stuck between a few points. When entering for example something like a U shape that is larger than its viewing window. The robot will enter the hallway thinking it's is free, when seeing the end walls it will turn around until it doesn't see the wall anymore. At that point the robot will again turn around to get stuck in a loop.

- How would you prevent these scenarios from happening?By integrating a good global planner the robot already won't go into these U shapes. If it finds itself stuck on a point that a global map didn't see the robot can report this back and again find a global route that can be locally navigated.

- How are the local and global planner linked together?

Questions

- How could finding the shortest path through the maze using the A* algorithm be made more efficient by placing the nodes differently? Sketch the small maze with the proposed nodes and the connections between them. Why would this be more efficient?

- Would implementing PRM in a map like the maze be efficient?

- What would change if the map suddenly changes (e.g. the map gets updated)?

- How did you connect the local and global planner?

- Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?

- Run the A* algorithm using the gridmap (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.

Upload screen recordings of the simulation results and comment on the behavior of the robot. If the robot does not make it to the end, describe what is going wrong and how you would solve it (if time would allow)

Upload video's of the robot's performance in real life (same as simulation videos), and comment the seen behavior (similar to the previous question).