Summary Chiel van der Laan


Hardware

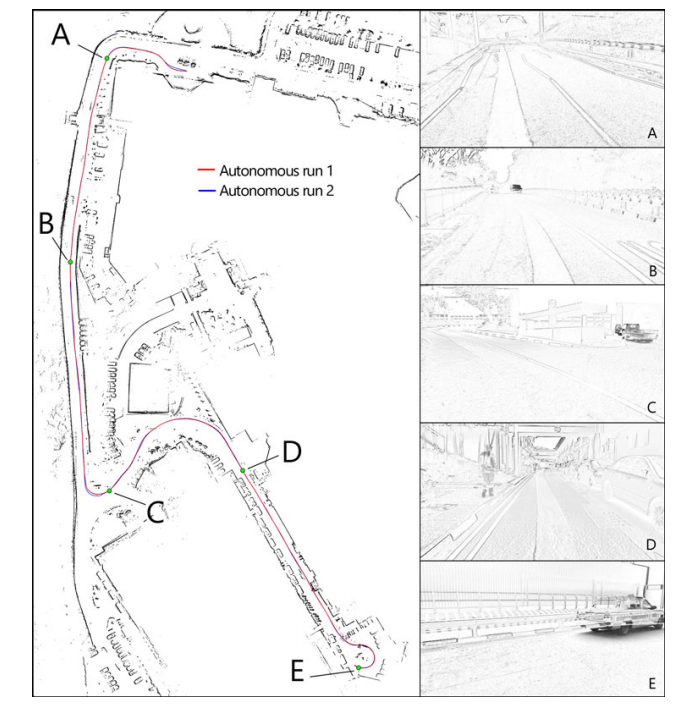

The mapping problem can be solved by building a synthetic 2D LIDAR scan from a 3D environment. [1] Localization can then be done in the resulting 2D map, which requires less processing power. [2] An example of the visual validation of localization can be seen in figure 1. Because the LIDAR system used for mapping and localization has to scan a large area at once, it has to be mounted high on top of the mobility scooter.
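A minimal Python sketch of the general idea of turning a 3D point cloud into a 2D scan, assuming the points are already in the sensor frame (x forward, y left, z up, in metres). It keeps only points inside an assumed height band and stores the nearest point in each angular bin as that beam's range. The function name `synthetic_2d_scan` and all parameter values are hypothetical, and this is a simplification rather than the exact procedure of [1].

```python
import math
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in metres, sensor frame (assumed)


def synthetic_2d_scan(points: Iterable[Point3D],
                      z_min: float = 0.5,       # hypothetical lower edge of the height band
                      z_max: float = 2.5,       # hypothetical upper edge of the height band
                      num_beams: int = 360,     # one beam per degree (assumption)
                      max_range: float = 30.0) -> List[float]:
    """Collapse a 3D point cloud into a 2D range scan.

    Points outside [z_min, z_max] (ground, overhanging structures) are ignored.
    Each remaining point falls into an angular bin, and the closest point in a
    bin becomes that beam's range; empty bins report max_range.
    """
    ranges = [max_range] * num_beams
    bin_width = 2 * math.pi / num_beams
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue  # outside the horizontal slice we want to keep
        r = math.hypot(x, y)
        if r == 0.0 or r > max_range:
            continue  # at the sensor origin or beyond the assumed maximum range
        angle = math.atan2(y, x) % (2 * math.pi)      # map to [0, 2*pi)
        beam = int(angle / bin_width) % num_beams     # guard against rounding up to 2*pi
        ranges[beam] = min(ranges[beam], r)
    return ranges


cloud = [(5.0, 0.0, 1.0), (4.0, 0.0, 1.5), (3.0, 0.0, 0.1), (0.0, 10.0, 2.0)]
scan = synthetic_2d_scan(cloud)
print(scan[0])  # 4.0 (the point at z = 0.1 m is below the band and ignored)
```

The output has the same shape as the readings of a real 2D LIDAR, which is what allows a 2D localization method to run on it.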

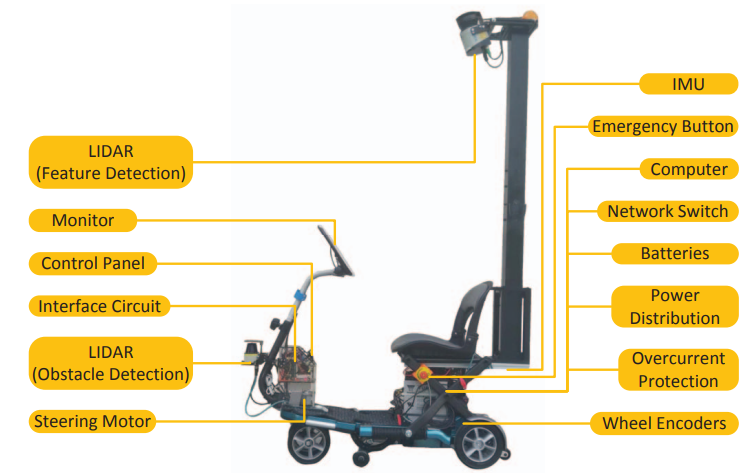

The more complex dynamic environment, in which pedestrians and other (smaller) moving vehicles have to be avoided, can be handled by a second LIDAR system mounted lower to the ground. An example of the components of the mobility scooter can be seen in figure 2. In this example, two external lead-acid batteries rated at 12 V and 22 Ah each are connected in series to form an auxiliary 24 V power supply. (In this example the mobility scooter is shared between multiple users. This is something we could explore too, as it may reduce the cost of riding in an autonomous mobility scooter.)
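The battery arithmetic can be checked with a few lines of Python. In a series connection the voltages add while the capacity in ampere-hours stays that of a single battery, so the pack gives 24 V at 22 Ah, or roughly 528 Wh nominal. The `Battery` class and the helper functions are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Battery:
    voltage_v: float    # nominal voltage in volts
    capacity_ah: float  # rated capacity in ampere-hours


def series_pack(batteries: List[Battery]) -> Tuple[float, float]:
    """Return (voltage, capacity) of batteries wired in series.

    Voltages add up; the same current flows through every battery, so the
    pack capacity is limited by the smallest battery.
    """
    voltage = sum(b.voltage_v for b in batteries)
    capacity = min(b.capacity_ah for b in batteries)
    return voltage, capacity


def nominal_energy_wh(voltage_v: float, capacity_ah: float) -> float:
    """Nominal stored energy in watt-hours (V x Ah)."""
    return voltage_v * capacity_ah


pack_v, pack_ah = series_pack([Battery(12.0, 22.0), Battery(12.0, 22.0)])
print(pack_v, pack_ah, nominal_energy_wh(pack_v, pack_ah))  # 24.0 22.0 528.0
```

This is a nominal figure: the usable energy of a lead-acid pack is lower, because these batteries should not be fully discharged and their effective capacity drops at high discharge currents.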

Pedestrian detection with a Large-Field-Of-View network

Since the mobility scooter will be used in crowded areas such as malls, a fast method to detect pedestrians is important. For autonomous cars, pedestrian detection can be done with a Large-Field-Of-View (LFOV) deep network, which uses machine learning to determine the location of pedestrians in an image. [4] The LFOV method divides the image into a grid of smaller regions and checks all of them for pedestrians simultaneously. This makes it much faster: it detects pedestrians in 280 ms per image, whereas prior methods took seconds per image.
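The grid idea can be sketched in Python as follows. The network itself is not implemented: `scores` stands in for the output of one forward pass of a hypothetical model that returns one pedestrian score per grid cell. The 4×4 grid, the 0.5 threshold and the function names are assumptions for illustration, not values taken from [4].

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def grid_cells(width: int, height: int, rows: int, cols: int) -> List[Box]:
    """Split a width x height image into rows x cols cells, in row-major order."""
    boxes = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            boxes.append((x0, y0, x1 - x0, y1 - y0))
    return boxes


def detect_pedestrians(width: int, height: int, rows: int, cols: int,
                       scores: List[float], threshold: float = 0.5) -> List[Box]:
    """Keep the cells whose score passes the threshold.

    `scores` is assumed to come from a single pass of a (hypothetical) network
    that scores every cell at once, instead of one pass per sliding window.
    """
    cells = grid_cells(width, height, rows, cols)
    if len(scores) != len(cells):
        raise ValueError("expected one score per grid cell")
    return [box for box, s in zip(cells, scores) if s >= threshold]


# Made-up scores for a 640 x 480 image split into a 4 x 4 grid.
fake_scores = [0.0] * 16
fake_scores[5] = 0.9   # row 1, column 1
fake_scores[14] = 0.7  # row 3, column 2
print(detect_pedestrians(640, 480, 4, 4, fake_scores))
# [(160, 120, 160, 120), (320, 360, 160, 120)]
```

The speed-up comes from the single evaluation per image region: all cells share one pass instead of each requiring its own.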

Navigation in the dark

Normal cameras do not work for driving in the dark at night. Infrared (IR) or thermal imaging can solve this problem, since pedestrians, cars and all motorized vehicles have a heat signature. [5] In combination with a LIDAR system that detects objects without a heat signature, the scooter should be able to navigate its environment.
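A minimal sketch of how the two sensors could complement each other, assuming each LIDAR obstacle has already been matched to a temperature reading from the thermal camera. It uses a simple temperature threshold, not the CNN and fuzzy-clustering method of [5]. The class, the functions, the 5 °C margin and the clearance distances are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Obstacle:
    distance_m: float      # range to the object measured by the LIDAR
    surface_temp_c: float  # temperature the thermal camera reports for that object


# Hypothetical safety margins: keep more distance from objects that are probably people.
CLEARANCE_M = {"warm": 1.5, "cold": 0.5}


def classify_obstacles(obstacles: List[Obstacle], ambient_c: float,
                       warm_margin_c: float = 5.0) -> List[Tuple[str, Obstacle]]:
    """Label each LIDAR obstacle 'warm' (heat signature) or 'cold'.

    No obstacle is dropped: objects without a heat signature (walls, poles,
    parked bicycles) are still returned so the planner can avoid them.
    """
    labelled = []
    for o in obstacles:
        label = "warm" if o.surface_temp_c >= ambient_c + warm_margin_c else "cold"
        labelled.append((label, o))
    return labelled


def too_close(labelled: List[Tuple[str, Obstacle]]) -> List[Obstacle]:
    """Return the obstacles that are nearer than the clearance for their label."""
    return [o for label, o in labelled if o.distance_m < CLEARANCE_M[label]]


night = [Obstacle(1.0, 30.0), Obstacle(1.0, 12.0), Obstacle(6.0, 34.0)]
labelled = classify_obstacles(night, ambient_c=10.0)
print([label for label, _ in labelled])  # ['warm', 'cold', 'warm']
print(too_close(labelled))  # [Obstacle(distance_m=1.0, surface_temp_c=30.0)]
```

The LIDAR supplies the geometry for every object, while the thermal reading only changes how cautiously each one is treated.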

[1] Mapping with synthetic 2D LIDAR in 3D urban environment. (2013). In IEEE International Conference on Intelligent Robots and Systems, 3(2), 4715–4720.

[2] Chong, Z. J., Qin, B., Bandyopadhyay, T., et al. (2013). Synthetic 2D LIDAR for precise vehicle localization in 3D urban environment. In IEEE International Conference on Robotics and Automation (ICRA) (pp. 1554–1559). IEEE.

[3] Pendleton, S. D., Andersen, H., Shen, X., Eng, Y. H., Zhang, C., Kong, H. X., … Rus, D. (2016). Multi-class autonomous vehicles for mobility-on-demand service. In 2016 IEEE/SICE International Symposium on System Integration (SII). IEEE.

[4] Angelova, A., Krizhevsky, A., & Vanhoucke, V. (2015). Pedestrian detection with a Large-Field-Of-View deep network. In 2015 IEEE International Conference on Robotics and Automation (ICRA). IEEE.

[5] John, V., Mita, S., Liu, Z., & Qi, B. (2015). Pedestrian detection in thermal images using adaptive fuzzy C-means clustering and convolutional neural networks. In 2015 14th IAPR International Conference on Machine Vision Applications (MVA). IEEE.