PRE2022 3 Group12/AI Object Detection


[[File:Annotated training image.png|center|thumb|750x750px]] | [[File:Annotated training image.png|center|thumb|750x750px|Screenshot from Roboflow showing an annotated image of playing cards]] | ||

<br /> | <br /> | ||

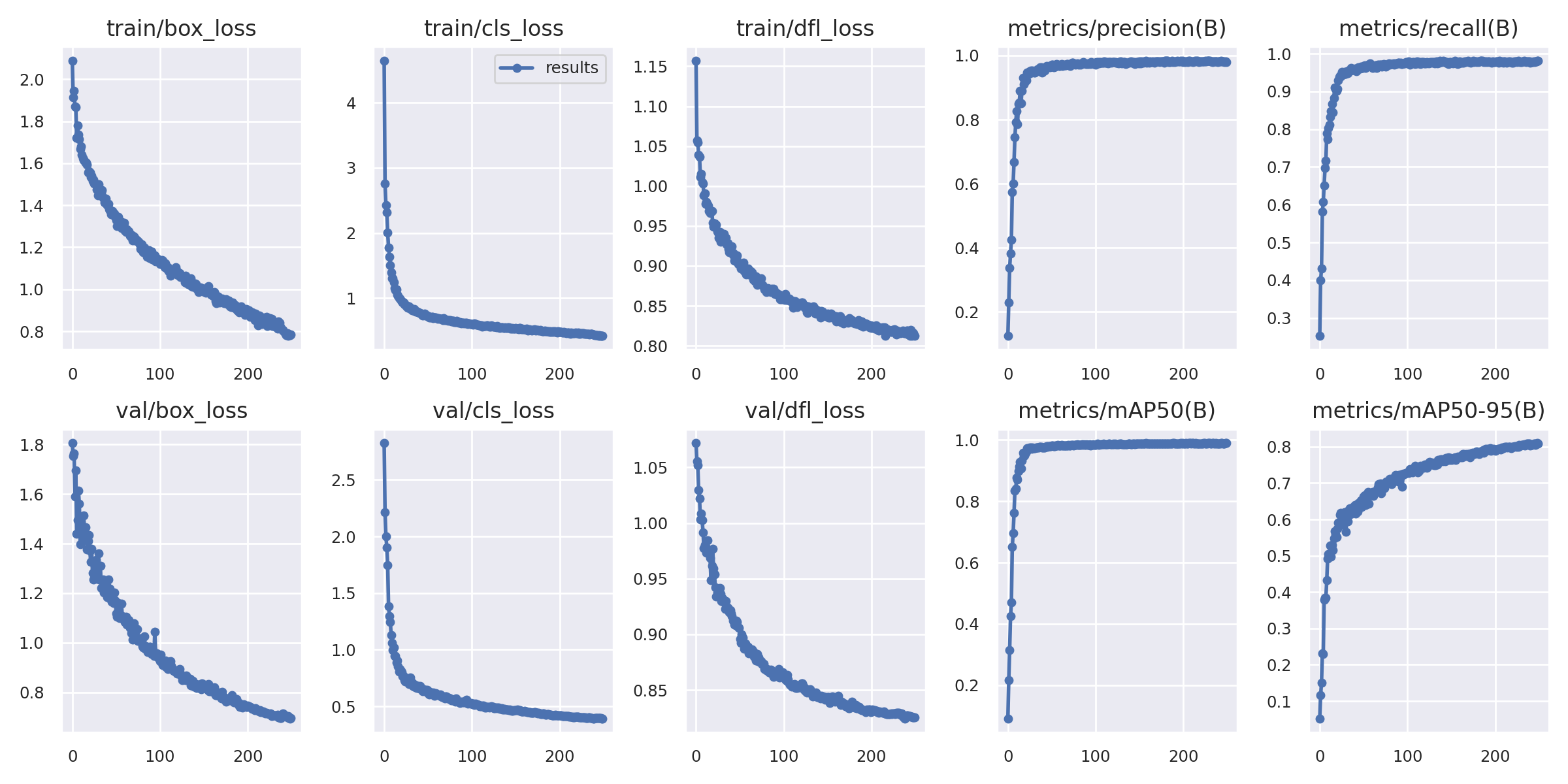

[[File:Model training results.png|thumb]] | [[File:Model training results.png|thumb|Graphs showing various training metrics of the created model.]] | ||


Latest revision as of 12:20, 26 February 2023

During week 2 and the carnival break, a large amount of data was gathered and labelled so that an object detection model could be trained. Photos and videos were recorded in different environments, under different lighting conditions and against different backgrounds, using several card decks, so that the resulting model would be robust to these variations.

All of this data was processed with Roboflow, a tool that provides many utilities for annotating images and preparing datasets for object detection models. Our Roboflow project can be found here: https://universe.roboflow.com/0lauk0/playing-cards-muou8/
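To illustrate what a labelled image looks like as data: a minimal sketch, assuming the dataset is exported from Roboflow in the YOLO text format that YOLOv8 trains on. In that format each image has a `.txt` file with one line per card: a class index followed by the box centre, width and height, all normalised by the image size. The helpers `to_yolo_line` and `from_yolo_line` and the example box are hypothetical, for illustration only.

```python
# Hypothetical helpers, assuming YOLO-format label export (one line per object).
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Convert a pixel bounding box to a normalised YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w  # box centre, as a fraction of image width
    y_c = (y_min + y_max) / 2 / img_h  # box centre, as a fraction of image height
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int) -> tuple[int, Box]:
    """Parse a YOLO label line back into a class index and a pixel box."""
    parts = line.split()
    class_id = int(parts[0])
    x_c, y_c, w, h = (float(p) for p in parts[1:5])
    # Scale normalised values back to pixels.
    x_c, w = x_c * img_w, w * img_w
    y_c, h = y_c * img_h, h * img_h
    return class_id, (x_c - w / 2, y_c - h / 2, x_c + w / 2, y_c + h / 2)


if __name__ == "__main__":
    # A 200x200 px card in a 640x480 image, with a made-up class index 3.
    print(to_yolo_line(3, (100, 200, 300, 400), 640, 480))
    # prints "3 0.312500 0.625000 0.312500 0.416667"
```

Which card each class index stands for is defined by the class list of the exported dataset, so the index 3 above carries no particular meaning.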

Using the almost 500 gathered images, a model was trained starting from the state-of-the-art YOLOv8 base model (https://ultralytics.com/yolov8). After around 4 hours of training (250 epochs), the resulting model recognizes playing cards reasonably well, although there is still room for improvement. The model also makes gathering additional data much faster: it can be used in Roboflow to label new training images automatically, after which the labels are reviewed manually and corrected where necessary. Compared to the labour-intensive task of annotating every card in an image by hand, this speeds up the process considerably.
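The review step after automatic labelling can be sketched as a simple triage: predictions the model is confident about are kept as draft labels, while low-confidence ones are flagged so a human checks them first. This is a minimal plain-Python sketch; the `Detection` type, the `triage` function, the 0.5 threshold and the card names are hypothetical choices for illustration, not part of Roboflow or the Ultralytics API, and how the predictions are obtained from the trained model is outside its scope.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One predicted card (hypothetical type): label, confidence and pixel box."""
    label: str
    confidence: float
    box: tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def triage(detections: list[Detection],
           threshold: float = 0.5) -> tuple[list[Detection], list[Detection]]:
    """Split predictions into draft labels to keep and ones needing manual review.

    The threshold is an assumed value; in practice it would be tuned by
    looking at how often confident predictions still turn out to be wrong.
    """
    accepted = [d for d in detections if d.confidence >= threshold]
    needs_review = [d for d in detections if d.confidence < threshold]
    return accepted, needs_review


if __name__ == "__main__":
    # Made-up predictions for a single image.
    preds = [
        Detection("AS", 0.93, (10, 10, 60, 90)),
        Detection("10H", 0.41, (70, 12, 120, 95)),
    ]
    ok, review = triage(preds)
    print(len(ok), len(review))  # prints "1 1"
```

Even accepted predictions would still be reviewed in the workflow described above; the triage only decides which labels get looked at first.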