PRE2020 3 Group11

The acceptance of self-driving cars

Abstract

| Name | Student number | Email |
|---|---|---|
| Laura Smulders | 1342819 | L.a.smulders@student.tue.nl |
| Sam Blauwhof | 1439065 | S.e.blauwhof@student.tue.nl |
| Joris van Aalst | 1470418 | J.v.aalst@student.tue.nl |
| Roel van Gool | 1236549 | R.p.v.gool@student.tue.nl |
| Roxane Wijnen | 1248413 | R.a.r.wijnen@student.tue.nl |

Problem statement

Self-driving cars are believed to be safer than manually driven cars. However, they cannot be 100% safe. Because crashes and collisions are unavoidable, self-driving cars should be programmed to respond to situations in which accidents are highly likely or unavoidable (Sven Nyholm, Jilles Smids, 2016). Three moral problems involving self-driving cars can be distinguished. First, who decides how self-driving cars should be programmed to deal with accidents? Second, who has to take the moral and legal responsibility for harms caused by self-driving cars? Third, how should decisions be made under risk and uncertainty?

The trolley problem illustrates why programming for accidents is a moral problem: it concerns how humans view moral decisions made by machine intelligence, such as self-driving cars. For example, should a self-driving car hit a pregnant woman or swerve into a wall and kill its four passengers? The second problem concerns moral responsibility for harms caused by self-driving cars. Suppose, for example, that an autonomous car and a conventional car collide: this will not only be followed by legal proceedings, but will also lead to a debate about who is morally responsible for what happened (Sven Nyholm, Jilles Smids, 2016).

Self-driving cars also face considerable uncertainty. Consider a situation in which a self-driving car must choose between colliding with an oncoming truck and swerving towards an elderly pedestrian. First, the self-driving car cannot acquire certain knowledge about the truck’s trajectory, its speed at the time of collision and its actual weight. Second, focusing on the self-driving car itself, calculating the optimal trajectory requires perfect knowledge of the state of the road, since any slipperiness of the road limits the car’s maximal deceleration. Finally, the elderly pedestrian is again a source of several uncertainties. Using facial recognition software, the self-driving car can perhaps estimate his age with some degree of precision and confidence, but it can at best guess his actual state of health (Sven Nyholm, Jilles Smids, 2016).

The decision-making about self-driving cars is more realistically represented as being made by multiple stakeholders: ordinary citizens, lawyers, ethicists, engineers, risk assessment experts, car manufacturers, government, etc. These stakeholders need to negotiate a mutually agreed-upon solution (Sven Nyholm, Jilles Smids, 2016). This report focuses on the relevant factors that contribute to the acceptance of self-driving cars, with the main focus on the private end-user, taking into account the following ethical theories: utilitarianism, Kantianism, virtue ethics, deontology, ethical pluralism, ethical absolutism and ethical relativism.

State-of-the-art/Hypothesis

Research question:

What are the relevant factors that contribute to the acceptance of self-driving cars for the private end-user?

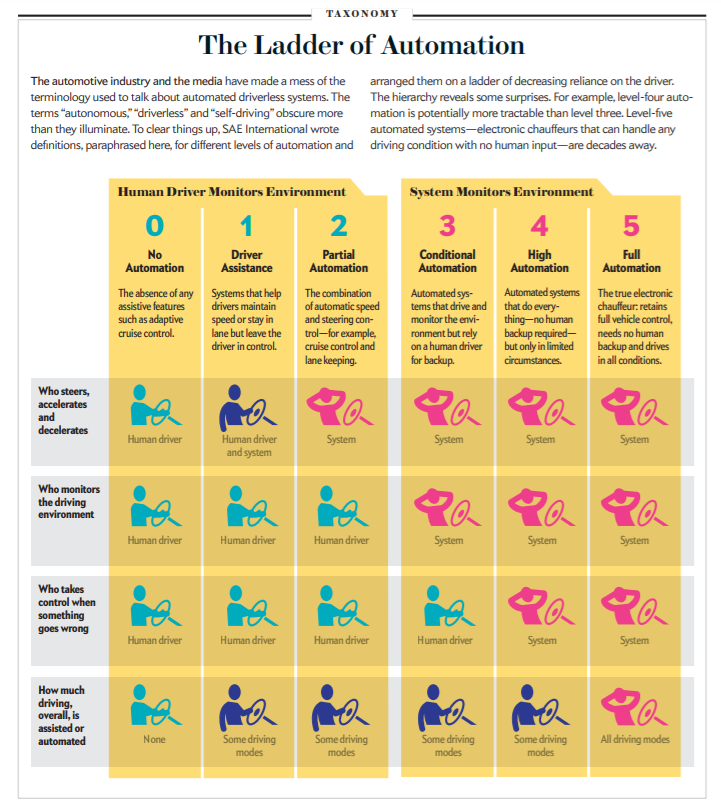

Developments and advances in autonomous-vehicle technology have recently brought self-driving vehicles to the forefront of public interest and discussion. In response to the rapid technological progress of self-driving cars, governments have already begun to develop strategies to address the challenges that may result from their introduction (Schoettle, B., 2014). The Dutch national government aims to take the lead in these developments and to prepare the Netherlands for their implementation. The Ministry of Infrastructure and the Environment has opened the public roads to large-scale tests with self-driving passenger cars and trucks, and the Dutch cabinet has adopted a bill that will, in the near future, make it possible to conduct experiments with self-driving cars without a driver physically present in the vehicle (Mobility, public transport and road safety, n.d.).

The end-consumers (the actual drivers) will eventually decide whether self-driving cars succeed on the mass market. Yet wider empirical evidence on the user perspective is lacking, which forms the rationale for this research. User resistance to change has been found to be an important cause of many implementation problems, so it is likely that the self-driving car will meet considerable resistance. A significant percentage of drivers may not be comfortable with fully autonomous driving, since people may experience driving as adventurous, thrilling and pleasurable (König, M., 2017). There is also the question of whether self-driving cars can be seen as providing the ultimate level of autonomy when they make people dependent on the technology. The fact that self-driving cars could be tracked continuously could lead to privacy issues. Another potential barrier to self-driving cars is the risk of a ‘misbehaving computer system’: criminals or terrorists might be able to hack into autonomous vehicles and use them for illegal purposes. Further, the unavoidable rate of failures and crashes could lead to mistrust, especially as people tend to underestimate the safety of technology while putting excessive trust in human capabilities such as their own driving skills (König, M., 2017).

In several recent surveys on self-driving vehicles, the public has expressed some concern about owning or using vehicles with this technology. In the survey of public opinion about autonomous and self-driving vehicles in the U.S., the U.K. and Australia, the majority of respondents had previously heard of self-driving vehicles, had a positive initial opinion of the technology and had high expectations about its benefits (Brandon Schoettle, 2014). However, the majority of respondents also expressed high levels of concern about riding in self-driving cars, about security issues related to self-driving cars, and about self-driving cars not performing as well as human drivers. Respondents also expressed high levels of concern about vehicles without driver controls (Schoettle, B., 2014). The survey reported in ‘Users’ resistance towards radical innovations: The case of the self-driving car’ found that people who used a car more often tended to be less open to the benefits of self-driving cars. The most pronounced desire of respondents was the possibility to manually take over control of the car whenever they want. This indicates that drivers want to decide for themselves when to switch to self-driving mode, and to have the option to resume control in situations where they do not trust the technology. In that survey, the most severe concern about the car and the technology itself was the fear of attacks by hackers (König, M., 2017).

In the literature, scientific articles discuss three moral problems involving self-driving cars: who decides how self-driving cars should be programmed to deal with accidents, who has to take the moral and legal responsibility for harms caused by self-driving cars, and how decisions should be made under risk and uncertainty (Nyholm, S., Smids, J., 2016), as mentioned in the problem statement.

This report focuses on the relevant factors that contribute to the acceptance of self-driving cars for the private end-user. Based on the literature research and the surveys conducted on self-driving vehicles, these factors are the ethical theories, moral and legal responsibility, safety, privacy and the perspective of the private end-user.

Survey

Introduction

Method

Survey responses were received from 115 respondents.

Research design

For this questionnaire, a non-probability convenience sampling method was applied that leveraged the group’s broad networks. Although convenience sampling means that the sample is not representative, it was a feasible way to reach the target audience and to collect relevant data as first evidence. Because the questionnaire targeted the general public, no strict geographical scope was imposed, so as to reach as many different people as possible. This gives first indications of drivers’ attitudes towards self-driving vehicles that are not limited to certain regions. The survey was conducted in the Netherlands.

Data collection

Data was collected over a one-week time frame in March 2021 via an online questionnaire built with Microsoft Forms (Microsoft Forms, 2021), a web-based survey tool. This method was chosen for several reasons. The information assessed was widely available among the public. Due to Covid-19, an online approach made it easier to reach people while ensuring physical distancing. Moreover, because no interviewer had to be present, it reduced potential interviewer bias as well as cost and time. Microsoft Forms was used because it offers a secure environment and meets EU privacy standards.

Respondents were reached through emails and private messages on social media and WhatsApp. Each message included a personalized invitation that explicitly stated self-driving vehicles as the research topic, as well as a direct link to the online questionnaire. A consent form was included on the cover page of the questionnaire, assuring respondents of anonymity and confidentiality. Given the exploratory nature of the study, reaching a large number of respondents was prioritized, with a target minimum of 100 respondents.

Measures

In the questionnaire, several relevant factors related to self-driving vehicles were examined. The main topics addressed in the questionnaire were as follows:

- Familiarity with self-driving vehicles

- Expected benefits of self-driving vehicles

- Concerns about different implementations of self-driving vehicles

- Favored ethical settings in self-driving vehicles

- Acceptance of legal responsibility in unavoidable crashes with self-driving vehicles

Personal car use and demographics

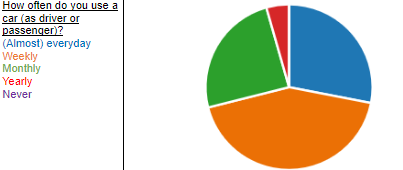

In the first part of the questionnaire, participants were asked whether they have a driver’s license. Respondents were also asked how often they drive a car, with the answer options ‘(almost) every day’, ‘weekly’, ‘monthly’, ‘annually’ and ‘never’. Finally, demographic questions about age and education were asked.

Familiarity with self-driving vehicles

Participants’ existing knowledge of self-driving vehicles was assessed with rating questions on an even, four-point Likert scale ranging from ‘unfamiliar’ (1) to ‘familiar’ (4).

Expected benefits of self-driving vehicles

Participants were then asked to rate statements reflecting presumed benefits of self-driving vehicles. To allow a ‘neutral’ opinion, a 5-point scale was used, ranging from ‘very unlikely’ (1) to ‘very likely’ (5). A 5-point Likert scale was chosen because in forced-choice formats, such as a 4-point Likert scale, responses can be contaminated by random guesses.

Concerns about different implementations of self-driving vehicles

After the expected benefits, respondents were asked to rate their level of concern about statements regarding self-driving vehicles on a 4-point Likert scale ranging from ‘not concerned’ (1) to ‘very concerned’ (4).

Favored ethical settings in self-driving vehicles

Participants’ preferred ethical setting for self-driving vehicles on the road was assessed by ranking five options from first to last choice. In addition, statements regarding the ethical settings used in self-driving vehicles were rated on a 5-point Likert scale ranging from ‘strongly disagree’ (1) to ‘strongly agree’ (5), again allowing a neutral opinion.

Acceptance of legal responsibility in unavoidable crashes with self-driving vehicles

Lastly, participants were asked to rate statements about legal responsibility in unavoidable crashes with self-driving vehicles, again on a 5-point Likert scale that allows a neutral opinion, ranging from ‘very unlikely’ (1) to ‘very likely’ (5).

The full text of the questionnaire is included in the appendix.

Results

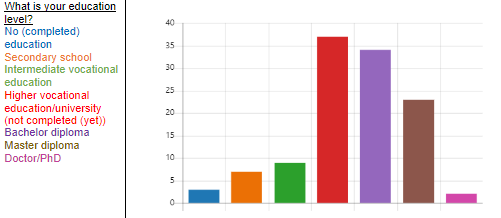

The majority of our respondents are highly educated, and there are too few respondents with a lower level of education to compare between education levels. We can therefore only compare between age groups, and relate how often people use a car to how willing they are to accept a self-driving vehicle.

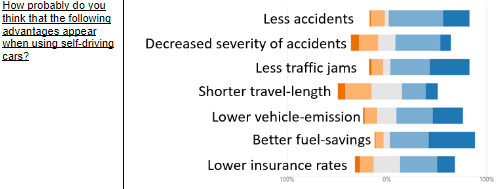

In general, respondents expect many advantages, but they also worry about some probable disadvantages. Ranked from most to least probable, respondents expect the following advantages:

1. Better fuel-savings (38.6% somewhat likely, 46.5% very likely)

2. Fewer traffic jams (39.5% somewhat likely, 39.5% very likely)

3. Fewer accidents (54.4% somewhat likely, 26.3% very likely)

4. Lower vehicle emissions (36% somewhat likely, 30.7% very likely)

5. Lower insurance rates (37.2% somewhat likely, 17.7% very likely)

6. Decreased severity of accidents (45.1% somewhat likely, 10.6% very likely)

7. Shorter travel length (23.7% somewhat likely, 12.3% very likely)

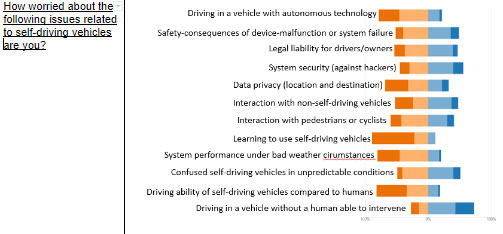

It is remarkable that the majority of respondents consider all advantages likely except shorter travel length, even though a computer is better able than a human to compute the most efficient route. Overall, people believe that self-driving vehicles will bring many advantages. The disadvantages are ranked below in the same way as the advantages:

1. Driving in a vehicle without a human able to intervene (42.7% worried, 30.1% very worried)

2. System security (against hackers) (39.3% worried, 15.9% very worried)

3. Confused self-driving vehicles in unpredictable conditions (39.8% worried, 11.1% very worried)

4. Safety consequences of device-malfunction or system failure (35.5% worried, 13.1% very worried)

5. Interaction with non-self-driving vehicles (36.9% worried, 10.7% very worried)

6. Legal liability for drivers/owners (38.8% worried, 7.8% very worried)

These are the six disadvantages that worry our respondents most. This suggests that self-driving vehicles would be much more widely accepted if an option to intervene were implemented. Fortunately, this is not hard for manufacturers to realize, although it will certainly have legal consequences. In general, people feel safer when they are in control: even if they would have to react quickly to prevent an accident, they have a feeling of being in control. The second concern is system security. The majority of respondents would like substantial proof that it is hard for hackers to break into the system of one car, or of multiple cars at the same time. This is clearly important, and a major part of manufacturers’ focus is already on this aspect. The concern is understandable: even if fewer accidents happen, a poorly secured system could cause far more trouble once it is breached. Slightly more than half of the respondents are worried about vehicles becoming confused in situations they do not recognize, which makes their behavior hard or impossible to predict. This aspect is likely to improve over time, and perhaps in the future all situations will be predictable. For now, safety could be safeguarded by making the car pull over when it does not know what else to do.

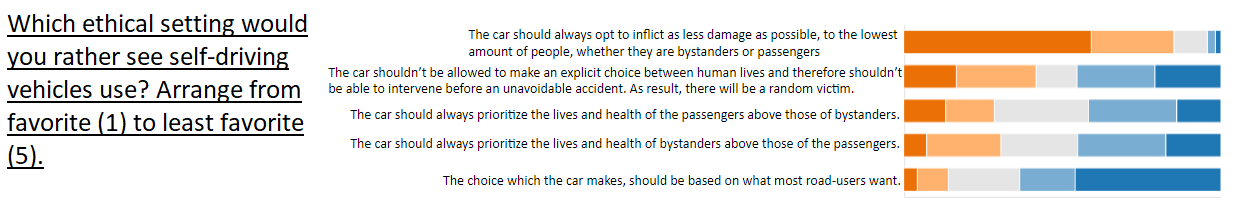

From question 9, the ethical settings can be ranked from most to least preferred by respondents:

1. The car should always opt to inflict as little damage as possible, to the smallest number of people, whether they are bystanders or passengers.

2. The car shouldn’t be allowed to make an explicit choice between human lives and therefore shouldn’t be able to intervene before an unavoidable accident. As a result, there will be a random victim.

3. The car should always prioritize the lives and health of the passengers above those of bystanders.

4. The car should always prioritize the lives and health of bystanders above those of the passengers.

5. The choice the car makes should be based on what most road users want.

Respondents generally think that the car should not prioritize passengers over bystanders or vice versa. Instead, they want the car to inflict the least damage, or not to make a decision at all. Respondents do not think that either the owner or bystanders should be preferred, which could be because, in most cases, neither group causes the accident. Still, they have a slight preference for saving the owner’s life. Such a preference could encourage more people to use self-driving cars, which is what we want. It could also be explained by the fact that a bystander is more likely to cause an accident than the owner: the owner is not in control, whereas a bystander could, for example, jump in front of the car through their own fault.
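The order of this top five matches the order of first-choice shares in the q9 results, and it is also reproduced by the mean rank (1 = first choice), a common way to summarize ranking questions. The sketch below assumes mean rank as the summary; the short option labels are shorthand for the full statements, and the names `Q9`, `mean_rank` and `order_by_preference` are illustrative, not part of the original analysis.

```python
# Share (%) of respondents placing each option 1st, 2nd, ..., 5th (q9 results).
# Labels are shorthand for the full statements in the questionnaire.
Q9 = {
    "Least damage to the fewest people": [59.1, 26.1, 10.4, 2.6, 1.7],
    "No explicit choice (random victim)": [16.5, 25.2, 13.0, 24.3, 20.9],
    "Prioritize passengers": [13.0, 15.7, 29.6, 27.8, 13.9],
    "Prioritize bystanders": [7.0, 23.5, 24.3, 27.8, 17.4],
    "Follow the majority of road users": [4.3, 9.6, 22.6, 17.4, 46.1],
}


def mean_rank(shares):
    """Average rank position (1 = first choice); lower means more preferred.

    Dividing by the row total instead of 100 absorbs rounding in the
    reported shares (some rows sum to 99.9)."""
    weighted = sum(position * share for position, share in enumerate(shares, start=1))
    return weighted / sum(shares)


def order_by_preference(table):
    """Option labels sorted from most to least preferred by mean rank."""
    return sorted(table, key=lambda option: mean_rank(table[option]))


if __name__ == "__main__":
    for place, option in enumerate(order_by_preference(Q9), start=1):
        print(f"{place}. {option}: mean rank {mean_rank(Q9[option]):.2f}")
```

The mean ranks of the second and third options lie close together (about 3.08 and 3.14), so their relative order is less clear-cut than the first place of the least-damage option.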

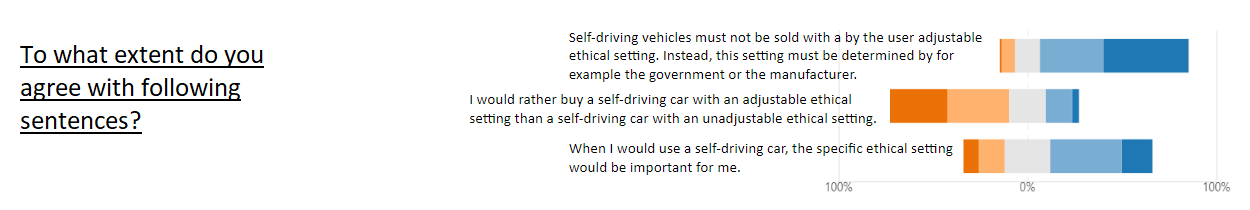

The conclusion of question 10 is simple: people want the ethical setting to be determined by the manufacturer, but they do think it matters which setting the manufacturer chooses.

Number of responses

- 115 responses.
- 104 responded to q2
- 115 responded to q3
- 114 responded to q4
- 114 responded to q5
- 114 responded to q6
- 112 responded to everything in q7.
  - 1 person did not respond to subquestions 1, 2, 7
  - 1 person did not respond to subquestion 2
  - 1 person did not respond to subquestions 3-7
  - So including partly complete responses there are 115 responses
- 67 responded to everything in q8 (after discarding the first 39 responses).
  - 1 person did not respond to 3
  - 1 person did not respond to 4
  - 1 person did not respond to 6
  - 3 persons did not respond to 12
  - 1 person did not respond to 2-6, 8-10, 12
  - 1 person did not respond to 1-12
  - 1 person did not respond to 2-12
  - So including partly complete responses there are 75 responses (1 person completely skipped q8).
- 115 responded to everything in q9
- 112 responded to everything in q10
  - 1 person did not respond to 2, 3
  - 2 persons did not respond to 3
  - So including partly complete responses there are 115 responses
- 110 responded to everything in q11
  - 2 persons did not respond to 2
  - 1 person did not respond to 4
  - 1 person did not respond to 5
  - 1 person did not respond to 2, 3, 4, 5
  - So including partly complete responses there are 115 responses

For the percentages in the next section, I simply did not include blank answers. So if, for instance, 3 people did not respond to subquestion 2 of q7, the percentages for that subquestion are calculated out of 112 answers. In the grouped section I did include blank answers (except the removed answers from q8).
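A minimal sketch of this calculation, assuming blank answers are stored as `None` in the exported data (an assumption for illustration, not something the survey tool prescribes). The function name `percentages_excluding_blanks` and the sample answers are hypothetical.

```python
from collections import Counter
from typing import Dict, Optional, Sequence

OPTIONS = ["Very unlikely", "Somewhat unlikely", "No opinion/neutral",
           "Somewhat likely", "Very likely"]


def percentages_excluding_blanks(
    answers: Sequence[Optional[str]], options: Sequence[str]
) -> Dict[str, float]:
    """Share (%) of each answer option among the non-blank answers only.

    Blank answers (None) are dropped before computing the base, so
    3 blanks out of 115 answers gives a base of 112."""
    given = [answer for answer in answers if answer is not None]
    if not given:
        raise ValueError("every answer is blank")
    counts = Counter(given)
    return {option: 100.0 * counts[option] / len(given) for option in options}


if __name__ == "__main__":
    # Hypothetical sub-question: 115 respondents, 3 of whom left it blank.
    answers = ["Somewhat likely"] * 56 + ["Very likely"] * 56 + [None] * 3
    for option, pct in percentages_excluding_blanks(answers, OPTIONS).items():
        print(f"{option}: {pct:.1f}%")
```

Because the base changes per sub-question, percentages of different sub-questions refer to slightly different numbers of respondents.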

Two further points are worth mentioning in the discussion. First, we should have made it possible to submit the survey only with every question answered, since that would have avoided blank answers. Second, although we cannot be sure why some people did not fill in some questions, it is likely that some of them did not read everything thoroughly. To counter this, we could have included a test question.

Basic results (not grouped per demographic category)

q2:

Since this is an open question, I grouped the answers into two intervals. The youngest respondent is 17, the oldest 80.

About half of the respondents are aged 17 to 30 and the other half 42 to 80 (no one is aged 31 to 41). This is likely because we asked people around us to fill in the survey, who are mainly friends of similar age and (older) family members. With three categories, one category would have contained too few people to justify it. Also, since there is an obvious dichotomy in the data, it makes sense to use that dichotomy.

- 51.3% younger than 31
- 39.1% older than 41
- 9.6% no answer

(out of 115 responses)

q3:

- 2.6% no education/incomplete primary education
- 6.1% high school diploma
- 7.8% MBO
- 32.2% HBO/WO (no diploma)
- 29.6% HBO/WO Bachelor diploma
- 20.0% HBO/WO Master diploma
- 1.7% Doctorate (PhD)

(out of 115 responses)

q4:

- 89.5% driving license
- 10.5% no driving license

(out of 114 responses)

q5:

- 28.1% (nearly) every day
- 43.0% weekly
- 24.5% monthly
- 4.4% yearly
- 0.0% never

(out of 114 responses)

q6:

- 22.8% unfamiliar
- 16.7% somewhat unfamiliar
- 42.1% somewhat familiar
- 18.4% familiar

(out of 114 responses)

q7:

For all the sub-questions of q7, the possible answers were: very unlikely, somewhat unlikely, no opinion/neutral, somewhat likely, very likely.

| Sub-question | Very unlikely | Somewhat unlikely | No opinion/neutral | Somewhat likely | Very likely |
|---|---|---|---|---|---|
| Fewer accidents | 0.9% | 14.0% | 4.4% | 54.4% | 26.3% |
| Decreased severity of accidents | 8.0% | 19.5% | 16.8% | 45.1% | 10.6% |
| Fewer traffic jams | 2.6% | 11.4% | 7.0% | 39.5% | 39.5% |
| Shorter travel-length | 7.9% | 26.3% | 29.8% | 23.7% | 12.3% |
| Lower vehicle emission | 1.8% | 12.3% | 19.3% | 36.0% | 30.7% |
| Better fuel-savings | 0.9% | 7.9% | 6.1% | 38.6% | 46.5% |
| Lower insurance rates | 5.3% | 13.3% | 26.5% | 37.2% | 17.7% |
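The results section ranks the seven advantages from most to least probable without stating how. One method that reproduces that order exactly from the q7 distributions above is the mean score on the 1 to 5 scale (very unlikely = 1, very likely = 5). The sketch below assumes this method; the names `Q7`, `mean_score` and `rank_by_mean` are illustrative and not part of the original analysis.

```python
# q7 answer distributions (%), columns: very unlikely (1) ... very likely (5).
Q7 = {
    "Fewer accidents": [0.9, 14.0, 4.4, 54.4, 26.3],
    "Decreased severity of accidents": [8.0, 19.5, 16.8, 45.1, 10.6],
    "Fewer traffic jams": [2.6, 11.4, 7.0, 39.5, 39.5],
    "Shorter travel-length": [7.9, 26.3, 29.8, 23.7, 12.3],
    "Lower vehicle emission": [1.8, 12.3, 19.3, 36.0, 30.7],
    "Better fuel-savings": [0.9, 7.9, 6.1, 38.6, 46.5],
    "Lower insurance rates": [5.3, 13.3, 26.5, 37.2, 17.7],
}


def mean_score(percentages):
    """Weighted mean on the 1..5 scale.

    Dividing by the row total rather than 100 keeps rows whose rounded
    percentages sum to 100.1 comparable with the others."""
    weighted = sum(score * p for score, p in enumerate(percentages, start=1))
    return weighted / sum(percentages)


def rank_by_mean(distributions):
    """(item, mean score) pairs, highest mean first."""
    scored = [(item, mean_score(p)) for item, p in distributions.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for place, (item, score) in enumerate(rank_by_mean(Q7), start=1):
        print(f"{place}. {item}: {score:.2f}")
```

Run as a script, it lists the advantages in the same order as the ranked list in the results, from better fuel-savings down to shorter travel length. A simple sum of ‘somewhat likely’ and ‘very likely’ would give a different order, which is why the mean score is the more plausible assumption here.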

q8:

The first 39 respondents were given an incorrect version of this question: one of the possible answers did not make sense, so we decided to discard the answers of the first 39 respondents to question 8. This leaves 76 respondents (minus, per subquestion, the number of people who did not fill in anything).

For all the sub-questions of q8, the possible answers were: not concerned, slightly concerned, concerned, very concerned.

| Sub-question | Not concerned | Slightly concerned | Concerned | Very concerned |
|---|---|---|---|---|
| Driving in a vehicle with autonomous technology | 32.0% | 48.0% | 14.7% | 5.3% |
| Safety-consequences of device-malfunction or system failure | 9.6% | 43.8% | 41.5% | 15.1% |
| Legal liability for drivers/owners | 13.9% | 43.1% | 36.1% | 6.9% |
| System security (against hackers) | 12.5% | 33.3% | 40.3% | 13.9% |
| Data privacy (location and destination) | 31.5% | 36.9% | 17.8% | 13.7% |
| Interaction with non-self-driving vehicles | 26.4% | 22.2% | 38.9% | 12.5% |
| Interaction with pedestrians or cyclists | 13.5% | 39.2% | 33.8% | 13.5% |
| Learning to use self-driving cars | 64.4% | 24.6% | 9.6% | 1.4% |
| System performance under bad weather conditions | 36.1% | 50.0% | 9.7% | 4.2% |
| Confused self-driving vehicles in unpredictable conditions | 8.2% | 43.9% | 35.6% | 12.3% |
| Driving ability of self-driving vehicles compared to humans | 46.0% | 39.2% | 13.5% | 1.3% |
| Driving in a vehicle without a human able to intervene | 12.9% | 15.7% | 44.3% | 27.1% |

q9: This is a ranking-based question, so here we note the percentage of people who had each of the options as their first, second, …, fifth choice. The most popular options are listed first.

The car should always opt to inflict as little damage as possible, to the smallest number of people, whether they are bystanders or passengers: 59.1%, 26.1%, 10.4%, 2.6%, 1.7%

The car should not be allowed to make an explicit choice between human lives and therefore should not be able to intervene in the case of an unavoidable accident. As a result, there will be a random victim: 16.5%, 25.2%, 13.0%, 24.3%, 20.9%

The car should always prioritize the lives and health of the passengers above those of bystanders: 13.0%, 15.7%, 29.6%, 27.8%, 13.9%

The car should always prioritize the lives and health of the bystanders above those of the passengers: 7.0%, 23.5%, 24.3%, 27.8%, 17.4%

The choice that the car makes should be based on what the majority of road-users want: 4.3%, 9.6%, 22.6%, 17.4%, 46.1%

This can be summarized in the following table:

q10:

For all the sub-questions of q10, these are the possible answers:

(Strongly disagree, disagree, no opinion/neutral, agree, strongly agree)

Self-driving vehicles must not be sold with an ethical setting that the user can adjust. Instead this setting must be determined by, for example, the government or the manufacturer: 0.9%, 7.1%, 13.3%, 33.6%, 45.1%

I would rather buy a self-driving car with an adjustable ethical setting, than a self-driving car with an unadjustable ethical setting: 30.4%, 33%, 18.8%, 14.3%, 3.6%

When I will use a self-driving car, the specific ethical setting would be important for me: 8.1%, 13.5%, 23.4%, 37.8%, 16.2%

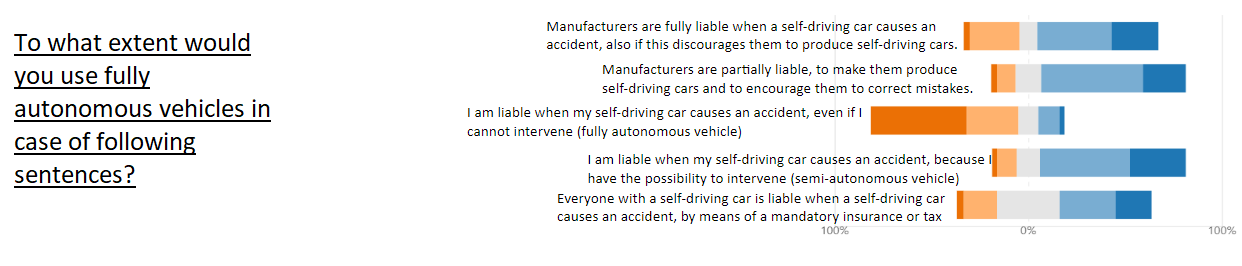

q11:

For all the sub-questions of q11, these are the possible answers:

(Very unlikely, somewhat unlikely, no opinion/neutral, somewhat likely, very likely)

Manufacturers are fully liable when a self-driving car causes an accident, even if this discourages them to produce self-driving cars: 2.6%, 26.1%, 8.7%, 38.3%, 24.3%

Manufacturers are partially liable, in order to make them produce self-driving cars while encouraging them to correct errors: 2.7%, 9.7%, 13.3%, 52.2%, 22.1%

I am liable when my self-driving car causes an accident, even if I cannot intervene (fully autonomous vehicle): 49.1%, 27.2%, 9.6%, 11.4%, 2.6%

I am liable when my self-driving car causes an accident, because I have the possibility to intervene (semi-autonomous vehicle): 2.7%, 9.7%, 12.4%, 46.0%, 29.2%

Everyone with a self-driving car is liable when a self-driving car causes an accident, by means of mandatory insurance or tax: 3.6%, 17.0%, 32.1%, 28.6%, 18.8%

Discussion

Target Group

The majority of our respondents are highly educated, and there are too few respondents with a lower level of education to compare between education levels. We can therefore only compare between age groups, and relate how often people use a car to how willing they are to accept a self-driving vehicle. The benefit of a highly educated target group is that these respondents have broad general knowledge and are able to understand the possible effects of self-driving cars. The disadvantage is, of course, that people with a lower level of education will have to accept self-driving cars as well. The absence of respondents aged 31 to 41 can have a negative effect on the accuracy of our survey, because the opinions of this age group might differ from those of the other two age groups. Smaller and more specific age groups might also have worked better if there had been more respondents; currently, the elderly are in the same category as, for example, people in their forties. Also, only 10.5% of the respondents do not have a driver’s license, which is very little. People without a driver’s license may have different opinions, because self-driving cars would make car use more accessible to them, and this group is under-represented. Finally, 22.8% of the respondents indicate that they are unfamiliar with self-driving vehicles. If they chose this answer appropriately, they have never heard of self-driving vehicles and are therefore unaware of what a self-driving car is and of its possible advantages and disadvantages. This is almost a quarter of the respondents, and they might have given random answers.

Survey

A few issues could have affected the outcome of our survey. First, some questions were not answered by some respondents. These missing answers were excluded, which is why the number of answers varies between questions: percentages were calculated from the number of answers to a specific question, not from the total number of respondents. For example, if three people had not responded to subquestion 7.2, 112 answers would count as 100%. Differences in the total number of answers can reduce the accuracy of the research. This could have been avoided by making all questions compulsory, so that the form can only be submitted when every question is answered. There can be multiple reasons why questions were left unanswered. First, people may have filled in the answers quickly and submitted the form without much thought. We have seen large differences in completion time: the shortest completion time was 2 minutes and 10 seconds, while the mean completion time was 12 minutes and 22 seconds. It must be noted that some completion times exceed two hours, most likely caused by people who opened the form in their browser but filled it in much later. This does not directly affect the accuracy of the answers, but it does inflate the mean completion time. Still, 2 minutes and 10 seconds is very quick; people who filled in the survey this quickly probably skipped something or answered questions carelessly. Another reason for leaving a question blank is that respondents did not know what to answer or did not understand the question. In that case, leaving it blank may represent their opinion more accurately than picking an arbitrary answer, although the option ‘neutral’ was included for such cases.

This brings us to another point of discussion: when the form was first opened, question 8 included five possible answers: ‘not worried’, ‘somewhat worried’, ‘neutral’, ‘worried’ and ‘very worried’. After 39 respondents had submitted the form, ‘neutral’ was removed from the answer options because it did not fit in this scale. Neutral is quite similar to ‘not worried’, because someone who is not worried about a subject is neutral about it; it does not belong between ‘somewhat worried’ and ‘worried’. The answers of these first 39 respondents were omitted from the results, because this confusing answer scale may have reduced the accuracy of their answers.

Answers

Question 7

It is remarkable that the majority of respondents consider all advantages likely, except shorter travel length, where the shares of likely and unlikely answers are almost equal. This is notable because a computer is better able than a human to compute the most efficient route. Overall, people believe that self-driving vehicles will bring many advantages, which means that they are positive towards self-driving vehicles in general. There is no notable difference between age groups in these results. This is remarkable, since other surveys indicate that older people see fewer advantages than younger people. It could be caused by the lack of a separate age group for the elderly; if there were one, the difference might be larger.

Question 8

We can conclude from this question that self-driving vehicles would be much more widely accepted if an option to intervene were implemented. Fortunately, this is not hard for manufacturers to realize, although it will certainly have legal consequences: if a human is able to intervene, partial or full liability could lie with the ‘driver’ instead of the manufacturer. In general, people feel safer when they are in control: even if they would have to react quickly to prevent an accident, they have a feeling of being in control. The second concern is system security. The majority of respondents would like substantial proof that it is hard for hackers to break into the system of one car, or of multiple cars at the same time. This is clearly important, and a major part of manufacturers’ focus is already on this aspect. The concern is understandable: although many people see fewer accidents as an advantage, a system security breach could cause far more trouble. If terrorists hacked a manufacturer’s network, for example, they might be able to make all of that manufacturer’s cars crash. Investing in system security would therefore benefit general acceptance. Slightly more than half of the respondents are worried about vehicles becoming confused in situations they do not recognize, which makes their behavior hard or impossible to predict. This aspect is likely to improve over time, and perhaps in the future all situations will be predictable.

While older people are as positive as younger people about the advantages, they are more negative about the disadvantages. They are more worried about driving in a self-driving car: 46.43% of respondents younger than 31 are not worried about it, compared with 23.08% of respondents aged 42 or older. This might be because older people have been used to conventional cars for longer, so it will take them more time to get used to self-driving cars. Younger people are also less worried about data privacy (42.86% versus 21.05% not worried). Either they do not care what happens to their data, or they do not expect it to be handled insecurely. Still, more than half of all respondents are at least slightly concerned about data privacy. To make more people accept these cars, existing privacy laws should be adapted to this new technology and new laws should be introduced where needed.

It is remarkable that older people are not more worried about learning to use self-driving cars, while they are more worried about driving in one in general. This could be because they assume that a self-driving car requires no action from them. Overall, older people are more worried about the negatives than younger people are. This is in line with expectations: as mentioned before, older people are generally more negative about self-driving cars and new technologies. It was surprising that there was no difference between the age groups in their expectations of the positives, but on balance older people are still more negative.

Question 10

The conclusion of question 10 is simple: people want the ethical setting to be determined by the manufacturer, but they do think it matters which setting is chosen. At first sight this may seem contradictory. If the setting matters that much, why would one not rather buy a car whose settings can be adjusted to one's own wishes? It makes sense that people would rather have other cars’ settings determined by the manufacturer, because otherwise many people might choose to protect themselves as driver rather than bystanders. But why would that stop someone from wanting an adjustable car themselves? With adjustable settings you can choose precisely the one you want, which would be ideal if the setting is important to you. A logical explanation could be that people do not want to be responsible for the choice the car ultimately makes. If you have chosen a specific setting, you are partially responsible for the outcome of an accident; if the manufacturer has chosen everything, you could not have done anything about the outcome. There are no significant differences between age groups, except that younger people are less opposed to being able to adjust the ethical settings: younger respondents answer 22.81% strongly disagree and 33.33% disagree, while older respondents answer 38.64% strongly disagree and 38.64% disagree. Overall, it would still benefit acceptance if the manufacturer defined the ethical settings. This is a good outcome, because it makes clear what other cars will do, and no one has to worry about making choices for others.

Question 11

Most people are willing to use self-driving cars in four of the five situations. Many people want manufacturers to be partially liable, which, as stated in the answer option, would encourage manufacturers to produce and develop self-driving cars. However, the wording of this option may have nudged respondents towards it and thereby distorted the results: it contains the positive word ‘encourage’, whereas the option with full liability contains the negative word ‘discourage’. We should keep in mind that some people may have chosen partial over full liability because of this positive and negative tone. The option with the most positive response was that users are liable themselves when they are able to intervene. This suggests that an option to intervene should be recommended, which agrees with the conclusion to question 8. As mentioned under question 8, users may become liable with that option, but we can conclude from this that many do not see that as a problem. Again, some people may have been biased towards this answer because it seems to have the highest moral value.

Conclusions

Appendix

Acceptance of fully self-driving cars

Consent Form

Consent to participate in the study ‘Acceptance of fully self-driving cars’. This document gives you information about the study ‘Acceptance of fully self-driving cars’.

Before the experiment begins, it is important that you are aware of the procedure followed in this experiment and that you agree to participate voluntarily. Please read this document carefully.

Purpose and benefit of the experiment

The purpose of this study is to measure which relevant factors contribute to the acceptance of the fully self-driving car for private use. The study is conducted by the students Laura Smulders, Sam Blauwhof, Joris van Aalst, Roel van Gool and Roxane Wijnen of Eindhoven University of Technology, under the supervision of dr. ir. M.J.G. van de Molengraft.

Procedure

You complete this study online via your web browser. You will be asked a number of questions about the following relevant factors: user perspective, safety, ethical setting and responsibility. A few additional demographic questions are also asked.

Duration

The study takes approximately 5 to 10 minutes.

Voluntary participation

Your participation is entirely voluntary. You may refuse to take part in the study without giving a reason, and you may stop at any moment by closing the browser. You may also refuse afterwards (within 24 hours) to allow your data to be used for the study. None of this will have any adverse consequences for you.

Confidentiality

We do not share any personal information about you with people outside the research team. The information collected in this research project is used to write scientific publications and is reported only at group level. Everything is fully anonymous and nothing can be traced back to you. Only the researchers know your identity, and that information is stored securely under lock.

Further information

If you would like further information about this study, or if you have any complaints, you can contact Roel van Gool (roel.vangool@gmail.com).

Consent to participate

By clicking 'Next' below, you indicate that you have understood this document and the procedure and that you agree to participate voluntarily in this study by the above-mentioned students of Eindhoven University of Technology.

Demographics

What is your age?

Open question

What is your gender?

- Male

- Female

- Other

What is your highest level of completed education?

- No education / incomplete primary education

- Secondary school diploma

- Secondary vocational education (MBO)

- Higher professional or university education without a diploma (HBO/WO)

- Bachelor's degree (HBO/WO)

- Master's degree (HBO/WO)

- Doctorate, PhD

Do you have a driving licence for a car?

- Yes

- No

How often do you use a car?

- (Almost) every day

- Weekly

- Monthly

- Yearly

- Never

User perspective

How familiar are you with self-driving cars?

Unfamiliar, Somewhat unfamiliar, Somewhat familiar, Familiar

How likely do you think it is that the following benefits will occur with the use of fully self-driving cars?

Very unlikely, Somewhat unlikely, No opinion/neutral, Somewhat likely, Very likely

- Fewer accidents

- Reduced severity of accidents

- Less traffic congestion

- Shorter journeys

- Lower vehicle emissions

- Better fuel economy

- Lower insurance rates

Safety

(Not worried, Somewhat worried, Neutral, Worried, Very worried)

How worried are you about the following issues related to fully self-driving vehicles?

- Driving in a vehicle with self-driving technology

- Safety consequences of equipment failure or system failure

- Legal liability of drivers/owners in the event of accidents

- System security (against hackers)

- Data privacy (location and destination)

- Interaction with non-self-driving vehicles

- Interaction with pedestrians and cyclists

- Learning to use self-driving vehicles

- System performance in bad weather

- Self-driving vehicles confused by unpredictable situations

- Driving ability of the self-driving vehicle compared to human driving ability

- Riding in a vehicle without the driver being able to intervene

Ethical setting

In some unavoidable accidents, the car will have to choose between different human lives, for example between those of the driver and of pedestrians. It would even be possible to add a setting to a self-driving car that determines which choice the car should make in the event of an accident. The questions below are about this ethical setting.

Which ethical setting would you most like self-driving cars on the road to have? Rank from favourite (1) to least favourite (5):

1. The car should always prioritize the life and health of the occupant(s) over those of the bystander(s).

2. The car should always prioritize the life and health of the bystander(s) over those of the occupant(s).

3. The car should always choose to cause the least amount of harm to the fewest people, whether they are bystanders or occupants.

4. The car should not make an explicit choice between human lives and should therefore not intervene in an unavoidable accident. As a result, the victim will be random.

5. The choice the car makes should be based on what the majority of road users want.

Note to ourselves: from top to bottom, these options represent the following ethical theories: egoism, virtue ethics (loosely), utilitarianism, deontology, contractualism.

To what extent do you agree with the following statements?

Strongly disagree, Disagree, No opinion/neutral, Agree, Strongly agree

- Self-driving cars should not be sold with a user-adjustable ethical setting. Instead, this setting should be determined by, for example, the government or the manufacturer.

- I would rather buy a self-driving car with an adjustable ethical setting than a self-driving car with a fixed ethical setting.

- If I used a self-driving car, its specific ethical setting would matter to me.

Responsibility

To what extent would you use a fully self-driving vehicle under each of the following statements?

Very unlikely, Somewhat unlikely, No opinion/neutral, Somewhat likely, Very likely

1. Manufacturers are fully liable if a self-driving car causes an accident, even if this discourages them from producing self-driving cars.

2. Manufacturers are partially liable, so that they still produce them but are always encouraged to correct errors.

3. I am liable myself if my self-driving car causes an accident, even though I cannot intervene myself (fully autonomous car).

4. I am liable myself if my self-driving car causes an accident, because I have the option to intervene (semi-autonomous car).

5. Everyone with a self-driving car is liable if a self-driving car causes an accident, by means of a mandatory insurance or tax.

Relevant factors

Ethical theories

A key feature of self-driving cars is that the decision-making process is taken away from the person in the driver’s seat and instead bestowed upon the car itself. Several ethical dilemmas emerge from this, one of which is essentially a version of the trolley problem. When a collision is unavoidable, the desired behaviour of the self-driving car must be defined. In such a scenario the car may have to choose whether to prioritize the life and health of its passengers or of the people outside the vehicle. In real life such cases are relatively rare [reference 1], but the ethical theory underlying that decision may well affect the acceptance of the technology. A self-driving vehicle that decides who might live and who might die is in a situation where some moral reasoning is required to produce the best outcome for all parties involved. Since cars do not appear to be capable of moral reasoning, programmers must choose for them the ethical setting on which to base such decisions. However, ethical decisions are rarely clear-cut. Imagine driving at high speed in a self-driving car when the car in front suddenly comes to a halt. The self-driving car can either brake suddenly as well, possibly harming its passengers, or swerve into a motorcyclist, possibly harming them. This scenario can be regarded as an adapted version of the trolley problem. One could argue that since the motorcyclist is not at fault, the self-driving car should prioritize their safety; after all, the passenger made the decision to enter the car, which puts at least some responsibility on them. On the other hand, people who buy a self-driving car will expect not to be put in avoidable danger.
Whatever the choice of the car, and whatever ethical theory it is (possibly) based on, the car's behaviour and decision-making are more likely to be socially accepted if they can be morally justified. This section therefore first outlines some possible ethical theories and then discusses some relevant aspects surrounding the implementation of all of them.

Ethical theories under consideration

Although a car can take only a few actions in the scenario described above, many ethical theories can help inform such a decision. The most prominent theories that might prima facie be useful are utilitarianism, deontology, virtue ethics, contractualism, and egoism. Three meta-ethical frameworks should also be considered: relativism, absolutism, and pluralism. These frameworks cannot by themselves influence the decision-making process, as they make no normative claims of their own, but they are useful in deciding how a possible ethical knob of a self-driving car might work (see section …).

Utilitarianism considers the consequences of actions, as opposed to the actions themselves. This means that the morally correct decision or action in any scenario is the one that produces the most good. Although ‘good’ is a subjective term, in most versions of utilitarianism it refers to the net increase in happiness or welfare for all parties involved [reference]. Unlike in deontology, the circumstances and the intrinsic nature of an action are not taken into account. Deontology judges the morality of an action not by its consequences but by the action itself. It posits that moral actions are those taken on the grounds of a set of pre-determined rules that hold universally and absolutely. For a deontologist, therefore, some actions are right or wrong regardless of their outcome.

The third major normative ethical theory is virtue ethics, which emphasizes the virtues, or moral character, rather than rules or consequences. Virtues are positive or ‘good’ character traits, such as courage or modesty. A moral person should perform actions that realize these traits; moral actions are therefore those that cause a person’s virtues to be realized.

Besides the three major classical normative theories, two more theories are prima facie relevant. The first is egoism. Normative egoism posits that the only actions that should morally be taken are those that maximize the individual’s self-interest. An egoist considers the benefits and detriments other people experience only insofar as those experiences influence the egoist’s own self-interest. Although it may not seem like it, egoism is very similar to utilitarianism, except that utilitarianism focuses on the maximum happiness of all people involved, whereas egoism focuses only on the maximum happiness of the individual.

The last ethical theory that can be applied to the adapted trolley problem is (social) contractualism. Contractualism makes no claims about the inherent morality of actions; instead, it posits that a moral action is one that is mutually agreed upon by all parties affected by it. What this agreement should look like differs per version of contractualism: some versions require unanimous consent, while others require a simple majority or a supermajority. A good action is therefore one that can be justified to the other relevant parties, and a wrong action is one that cannot.

Ethical theories applied to the adapted trolley problem

First, let us apply utilitarianism to the adapted trolley problem. On a micro level, a self-driving car with a utilitarian ethical setting would first minimize the number of deaths and then minimize the total number of severe injuries sustained by everyone affected by the collision. This seems simple enough, but there are, among others, two issues with this implementation of a utilitarian setting. First, suppose the technology is advanced enough to target people based on whether they are wearing a helmet. All else being equal, it would then be safer for the car to collide with a biker wearing a helmet than with a biker who is not. The biker with a helmet is then targeted, even though they are the one making an effort to be safe. This is unfair, and if it were implemented, some people might stop taking safety measures seriously in order not to be targeted by a utilitarian self-driving car. This would ultimately reduce overall road safety, which is exactly the opposite of what a utilitarian wants.
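The micro-level utilitarian rule described above (first minimize deaths, then minimize severe injuries) is a lexicographic ordering. A minimal sketch, assuming the car already has numeric estimates of expected deaths and expected severe injuries for each possible manoeuvre; the `Option` type, the manoeuvre names and all numbers are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One possible manoeuvre and its (hypothetical) predicted outcome."""
    name: str
    expected_deaths: float
    expected_severe_injuries: float


def utilitarian_choice(options: list[Option]) -> Option:
    """Pick the option with the fewest expected deaths; break ties on severe injuries.

    Python compares tuples element by element, so this key implements the
    lexicographic order described in the text.
    """
    return min(options, key=lambda o: (o.expected_deaths, o.expected_severe_injuries))


options = [
    Option("brake hard", expected_deaths=0.0, expected_severe_injuries=2.0),
    Option("swerve into motorcyclist", expected_deaths=0.0, expected_severe_injuries=1.0),
    Option("continue", expected_deaths=1.0, expected_severe_injuries=0.0),
]
print(utilitarian_choice(options).name)  # swerve into motorcyclist
```

Comparing tuples keeps deaths strictly more important than injuries: no reduction in severe injuries can compensate for a higher expected number of deaths.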

The second problem is that although people seem to want other road users’ self-driving cars to adopt a utilitarian setting, they themselves would rather buy cars that give preferential treatment to passengers (REFERENCE). Therefore, if self-driving cars are sold only with a utilitarian ethical setting, fewer people might be inclined to buy them, again reducing overall road safety.

A ‘true’ utilitarian might propose several counters to these two issues. To counter the first problem, the utilitarian would simply not program the car to distinguish between people who wear a helmet and people who do not. The same would hold in similar scenarios, since this solution is not only relevant to cases involving helmets. Of course, there will also be scenarios in which the self-driving car chooses the safer of two options, assuming the same number of people are at risk in both. The difference between a valid and an invalid safe choice is that some safety measures are taken explicitly (such as the decision to put on a helmet), while others are a by-product of another decision (such as riding a bus rather than driving a car). Riding a bus might be safer than driving a car, but most bus passengers do not choose the bus for safety reasons; they might not have a car, or they might be concerned about climate change. Since people in this scenario did not choose to ride a bus for safety reasons, they are unlikely to stop riding the bus because of a slightly increased chance of being hit by a self-driving car. This is only a thought experiment, but if it also holds in practice, a utilitarian would find it acceptable for the self-driving car to choose the safer option in the bus versus car scenario, but not in the helmet versus no-helmet scenario.
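The distinction between explicitly chosen safety measures and incidental safety can be sketched as a rule for how the car assesses risk. This is a minimal illustration under stated assumptions: each person has a hypothetical baseline risk and a hypothetical protection value, and a flag records whether that protection was chosen for safety. `Person`, `assessed_risk`, `preferred_collision` and all numbers are invented for illustration:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Person:
    name: str
    baseline_risk: float             # hypothetical chance of severe harm if hit
    protection: float = 0.0          # hypothetical reduction of that risk
    chosen_for_safety: bool = False  # True if the protection was explicitly chosen (e.g. a helmet)


def assessed_risk(p: Person) -> float:
    """Risk the car uses when comparing possible collisions.

    Protection that was explicitly chosen for safety is ignored, so careful
    road users are not targeted; incidental protection (e.g. sitting in a bus)
    still counts.
    """
    return p.baseline_risk if p.chosen_for_safety else p.baseline_risk - p.protection


def preferred_collision(a: Person, b: Person) -> Optional[Person]:
    """Return the person whose collision is less harmful, or None if the car should not distinguish."""
    ra, rb = assessed_risk(a), assessed_risk(b)
    if ra == rb:
        return None  # no morally relevant difference; other criteria must decide
    return a if ra < rb else b


helmet = Person("cyclist with helmet", 0.6, protection=0.3, chosen_for_safety=True)
no_helmet = Person("cyclist without helmet", 0.6)
bus = Person("bus passenger", 0.5, protection=0.3)
car = Person("car occupant", 0.5, protection=0.1)

print(preferred_collision(helmet, no_helmet))  # None
print(preferred_collision(bus, car).name)      # bus passenger
```

Returning `None` for the helmet case mirrors the text: the car makes no distinction there, whereas in the bus versus car case the incidental protection is allowed to tip the decision.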

To counter the second problem, the ‘true’ utilitarian would ultimately want to reduce death and/or harm by reducing the number of traffic accidents. If in practice a significant number of people will not buy a self-driving car with a utilitarian setting, the utilitarian would rather have self-driving cars sold with an egoistic setting that gives passengers preferential treatment. Even though an accident involving such a self-driving car would then be more deadly than with a utilitarian setting, the total number of accidents would decrease, since more self-driving cars would be on the road.

There are more problems with a utilitarian approach to self-driving cars, unrelated to the two micro versus macro problems just discussed. One of them concerns discrimination. In an unavoidable collision where the self-driving car has to hit either an adult man or a child, the adult has a better chance of survival. Is the car therefore justified in choosing the man? A utilitarian would say it is, unless this decision turns out to deter consumers from purchasing and using self-driving cars. Prima facie this would not seem to be the case, but no major literature on this topic gives a definitive or even exploratory answer (REFERENCE OR NOT). Some countries, such as Germany, have already prohibited this type of discrimination (REFERENCE).

A deontological ethical setting would not allow a choice that harms or kills a person, no matter how many lives could potentially be saved. When faced with an unavoidable, possibly deadly collision, the car would therefore make no decision at all, and events would play out ‘naturally’. In essence, this makes the actual ‘chosen’ collision somewhat random. As in the original trolley problem, the moral entity, in this case the car (or, more accurately, the programmer who programs the ethics into the car), would simply not intervene. Deontologists hold that there is a difference between doing harm and allowing harm, and by not letting the car intervene in an unavoidable accident, both the passenger(s) and the programmer(s) are absolved of any moral responsibility. Some people might be happy with such a setting, since many people cannot imagine being (morally) responsible for the deaths of others. By entering a self-driving car with a utilitarian ethical setting, the passenger(s) cannot be absolved of all moral responsibility in the case of an accident, since they consciously chose to buy a car that has been programmed to make explicit decisions. The same cannot be said of passengers who enter a deontological self-driving car.

A virtue ethics response to the adapted trolley problem is very hard to formulate. An ethical setting based on virtue ethics would want the car to make a decision that improves the virtues of the moral entity, so the decision depends on which virtue we want to improve. Take bravery, for instance. One could posit that it is brave to accept danger to yourself if it makes other people safer. If we take the moral entity or entities to be the passenger(s), the self-driving car would always choose to put the passengers in danger, since this would improve their bravery. There are two problems with this approach. Firstly, it is hard to optimize any decision the car makes, since no decision always improves all virtues; moreover, what are those virtues in the first place? Is it, for instance, virtuous to sacrifice yourself if you leave behind a family? Secondly, since the car is not actually a moral agent, whose virtues should its decision improve: the programmers’ or the passengers’? This is unclear. If the programmers’ virtues should be improved, it seems prima facie extremely unlikely that people would buy cars that might sacrifice them to improve the virtue(s) of a programmer they have never met. If the passengers’ virtues should be improved, people might be slightly more sympathetic, but even then most people presumably do not want to sacrifice their lives to improve upon an abstract notion of virtue and morality.

If we take the perspective of self-driving car buyers and users, the ethical egoist response is to prioritize the lives of the passengers above all else. As noted in the utilitarian part of this section, people who buy and use the car seem to prefer a self-driving car that always puts their own lives above those of others (REFERENCE). This setting could possibly also be regarded as the setting of a ‘true’ utilitarian. Another possible benefit of this setting is predictability. If self-driving cars become very prevalent, every self-driving car must account for the decisions other self-driving cars are making; if all self-driving cars prioritize themselves, their road behaviour becomes more predictable to one another. However, this argument is theoretical in nature, and some game theorists disagree. The moral argument against ethical egoism is that it seems, and indeed is, incredibly selfish: an ethical egoist might sacrifice hundreds of lives to save themselves. However, a ‘true’ ethical egoist is not always extremely selfish, since extremely selfish behaviour is not tolerated by others. A ‘true’ ethical egoist would therefore also consider the feelings of other people, since their thoughts and decisions may influence the reward the egoist gets out of any given situation. In the case of an unavoidable (deadly) accident, however, an egoist who values their own life above all else will not care about the feelings of others, since nothing, now or in the future, can be more important than their own life.

Up until now we have considered only the perspective of buyers and users of self-driving cars, but the actual moral agent is the programmer (or a group of people in the company that employs the programmer). The programmer’s egoist response would depend on how often they plan to use the self-driving car whose software they design. If they do not plan to use it at all, the ethical egoist response would be to implement a utilitarian ethical setting, since the programmer, being only ever a bystander, would then be safer on average. If they plan to use the self-driving car a lot, however, the ethical egoist response is to implement a setting that prioritizes the passengers.
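The programmer's egoist calculation can be made concrete as an expected-risk comparison. A sketch, assuming invented risk values (in arbitrary units) for a person acting as passenger or as bystander under two settings; `RISK`, `expected_risk` and `egoist_programmer_choice` are hypothetical names, and the numbers only illustrate the direction of the argument:

```python
# Hypothetical risk of harm for one person, by setting and by role.
# These numbers are illustrative assumptions in arbitrary units, not estimates.
RISK = {
    "utilitarian": {"passenger": 2.0, "bystander": 1.0},
    "passenger-first": {"passenger": 1.0, "bystander": 1.5},
}


def expected_risk(setting: str, passenger_share: float) -> float:
    """Expected risk for someone who is a passenger a `passenger_share` fraction of the time."""
    if not 0.0 <= passenger_share <= 1.0:
        raise ValueError("passenger_share must be between 0 and 1")
    r = RISK[setting]
    return passenger_share * r["passenger"] + (1 - passenger_share) * r["bystander"]


def egoist_programmer_choice(passenger_share: float) -> str:
    """Setting that minimises the programmer's own expected risk."""
    return min(RISK, key=lambda s: expected_risk(s, passenger_share))


print(egoist_programmer_choice(0.0))  # utilitarian
print(egoist_programmer_choice(0.9))  # passenger-first
```

With these numbers the break-even point lies at a passenger share of one third; different risk values would move it, but the structure of the argument stays the same.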

A contractualist ethical setting is one that is agreed upon by all relevant parties. Unanimous consent seems impossible to obtain, so in practice this would probably be a simple democratic vote in which a range of ethical settings, or combinations of settings, is proposed. Every potentially affected person can vote on these settings, and the democratic winner(s) will be implemented. The tough question is: who is affected by the decisions of a self-driving car? Self-driving cars can potentially drive across whole continents, from Portugal to China or from Canada to Argentina. If the decisions of these cars can influence events in several countries, should people in all of these countries take part in the decision-making process? If so, should there be a global vote on which ethical settings can be implemented? Or, if the vote is held nationally, must the ethical setting of a car change when it enters a country whose citizens voted for a different setting? In practice this seems very difficult to implement. If any of these contractualist ethical settings is practically possible, however, it almost completely solves the responsibility aspect of self-driving cars: if all relevant parties can vote, society as a whole can be held ethically and legally responsible. Since responsibility might be one of the factors contributing to the acceptance of self-driving cars, a realistic solution to the issue of responsibility will likely have a positive impact on public perception of self-driving cars.
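A simple majority vote, and the per-country variant raised above, can be sketched as follows. This assumes each voter casts one ballot for a single setting and that a setting needs more than half of the votes to win; the country labels and ballot counts are invented, and `majority_winner` and `national_winners` are hypothetical helpers:

```python
from collections import Counter
from typing import Optional


def majority_winner(votes: list[str]) -> Optional[str]:
    """Return the setting chosen by more than half of the voters, or None if there is no majority."""
    if not votes:
        return None
    setting, count = Counter(votes).most_common(1)[0]
    return setting if count > len(votes) / 2 else None


def national_winners(votes_by_country: dict[str, list[str]]) -> dict[str, Optional[str]]:
    """Run a separate simple-majority vote per country."""
    return {country: majority_winner(votes) for country, votes in votes_by_country.items()}


# Hypothetical ballots; country labels and vote counts are invented for illustration.
ballots = {
    "Country A": ["utilitarian"] * 6 + ["passenger-first"] * 4,
    "Country B": ["no intervention"] * 7 + ["utilitarian"] * 3,
    "Country C": ["utilitarian"] * 4 + ["passenger-first"] * 3 + ["no intervention"] * 3,
}
print(national_winners(ballots))
# {'Country A': 'utilitarian', 'Country B': 'no intervention', 'Country C': None}
```

The output shows the practical difficulty noted in the text: neighbouring countries can end up with different settings, and some may reach no majority at all.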

Should users be allowed to decide the ethical setting, and should all cars have the same setting? Clearly, no ethical setting is perfect for every scenario, and for various reasons some authors advocate letting people choose their own ethical setting. One can imagine an ‘ethical knob’ with different programmable ethical settings. Such a knob might range from altruistic to egoistic, with an impartial setting in the middle; there might even be a deontological setting that does not intervene in unavoidable accidents. There are several reasons to implement such an ethical knob. People might want to buy cars that mirror their own moral mindset. Millar (2015) observes that self-driving cars can be regarded as moral proxies, which implement moral choices. An ethical knob also makes it easier to assign responsibility in the case of an unavoidable accident (Sandberg & Bradshaw‐Martin, 2013; cf. Lin, 2014), since the passengers have explicitly chosen how the car decides. This might affect acceptance of the technology both positively and negatively. Prima facie, people who want to buy self-driving cars might want to choose their own ethical setting, but people also do not like to be held responsible for accidents (REFERENCE). Moreover, other road users might not accept self-driving car passengers choosing their own ethical setting, since passengers are likely to choose an egoistic setting, which negatively affects the road experience of everyone else. This is especially true if the car offered an ‘extremely egoistic’ setting in which the passenger’s life is worth as much as the lives of 100 or more other people. It seems unlikely that people would accept a self-driving car making such decisions, so manufacturers might limit how far the ethical knob can be turned towards egoism.
Such extreme settings are likely too unpopular, perhaps even among people who might benefit from an extremely egoistic setting (REFERENCE).
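One way to picture the ethical knob is as a weight on the passengers' harm relative to bystanders' harm, with 1.0 as the impartial middle. A minimal sketch, assuming harm can be expressed as a single number per group and assuming a hypothetical manufacturer cap of 2.0; `Outcome`, `clamp_knob`, `knob_choice`, the cap value and the scenario numbers are all illustrative:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    passenger_harm: float  # hypothetical expected harm to the car's occupants
    bystander_harm: float  # hypothetical expected harm to people outside the car


def clamp_knob(passenger_weight: float, max_weight: float = 2.0) -> float:
    """Apply a (hypothetical) manufacturer cap on how egoistic the knob may be.

    1.0 is impartial, values below 1.0 are altruistic, values above 1.0 egoistic.
    """
    if passenger_weight <= 0:
        raise ValueError("passenger_weight must be positive")
    return min(passenger_weight, max_weight)


def knob_choice(outcomes: list[Outcome], passenger_weight: float, max_weight: float = 2.0) -> Outcome:
    """Choose the outcome with the lowest weighted harm for the given knob position."""
    w = clamp_knob(passenger_weight, max_weight)
    return min(outcomes, key=lambda o: w * o.passenger_harm + o.bystander_harm)


outcomes = [Outcome("brake hard", 1.0, 0.0), Outcome("swerve", 0.0, 3.0)]
print(knob_choice(outcomes, 1.0).name)                              # brake hard (impartial)
print(knob_choice(outcomes, 100.0, max_weight=float("inf")).name)  # swerve (uncapped egoist)
print(knob_choice(outcomes, 100.0).name)                            # brake hard (capped at 2.0)
```

The last two lines show the effect of a manufacturer limit: the same extreme knob position leads to a different decision once its weight is capped.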

The same can be said of an ethical knob that can not only be turned by users to fit their moral convictions, but can even be modified to fit other kinds of preferences. An ethical knob that discriminates on gender or race might be technologically possible, but users should not be allowed to make their self-driving cars racist or sexist. Discrimination based on race or sex is illegal in many countries, so such settings, if they could be implemented at all, would likely be outlawed anyway, as Germany has already done (REFERENCE). A contractualist might propose a democratic vote to determine which kinds of settings are regarded as unacceptable. People’s free choice in configuring their own self-driving car would then be limited by the democratic choice of all relevant road users. Such an arrangement might prove an acceptable middle ground between no ethical knob and a completely customizable one. However, whether it would actually lead to greater acceptance of the technology than the other two options has not been settled or even addressed in other academic literature (REFERENCE IF POSSIBLE).

What do users appear to want? Very few articles explicitly endorse a particular ethical theory for self-driving cars (see page 6 of https://pure.tue.nl/ws/portalfiles/portal/101570905/Nyholm_2018_Philosophy_Compass.pdf). The few exceptions include papers by Gogoll and Müller and by Derek Leben.

Further considerations (not necessarily part of this section, but relevant for the discussion): ease of programming is not taken into account; we simply assume that the settings discussed can be implemented. Some of the aspects discussed may turn out to be irrelevant, depending on what the technology ends up allowing, but it is better to assume they will be possible and be prepared than not to know what to do when the technology arrives. Perhaps AI could work out the best ethical theory, but that would essentially be a black box. Would we be comfortable with that?

In general, most of this is relevant to acceptance, since some of these settings increase or decrease the number of accidents. We also hypothesize that acceptance will likely grow as the number of accidents decreases.

Responsibility

Whereas automated vehicles were a distant prospect a mere twenty years ago, they are a reality now. For some years, companies such as Google have run trials with automated vehicles in actual traffic and have driven millions of kilometers autonomously. Human intervention is still needed, however: between December 2016 and November 2017, for example, Waymo's self-driving cars drove about 350,000 miles and human drivers retook the wheel 63 times. This is an average of about 5,600 miles between disengagements. Uber has not been testing its self-driving cars in California long enough to be required to release its disengagement numbers (Wakabayashi, D., 2018). Although this research has been ground-breaking, there have also been some incidents in recent years. In 2016 a Tesla driver was killed while using the car's Autopilot because the vehicle failed to recognize a white truck (Yadron & Tynon, 2016). In 2018 a self-driving Volvo in Arizona collided with a pedestrian who did not survive the accident. It was believed to be the first pedestrian death associated with self-driving technology. When an Uber self-driving car and a conventional vehicle collided in Tempe in March 2017, city police said that extra safety regulations were not necessary, because the conventional car, not the self-driving vehicle, was at fault (Wakabayashi, D., 2018).
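As a quick check of the disengagement rate quoted above, divide the reported mileage by the number of times a human driver retook the wheel. The figures are those reported by Wakabayashi (2018). Rounding the result to the nearest hundred is our own step.

```latex
\[
\frac{350\,000\ \text{miles}}{63\ \text{disengagements}} \approx 5\,556\ \text{miles per disengagement} \approx 5\,600\ \text{miles}
\]
```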

One very important factor in the development and sale of automated vehicles is the question of who is responsible when things go wrong. In this section we examine the factors involved in detail and propose possible solutions. As brought up by Marchant and Lindor (2012), there are three questions that need to be analysed. Firstly, who will be liable in the case of an accident? Secondly, how much weight should be given to the fact that autonomous vehicles are supposed to be safer than conventional vehicles when determining which of the parties involved should be held responsible? Lastly, will a higher percentage of crashes be caused by a manufacturing 'defect', compared to crashes with conventional vehicles, where the cause is usually attributed to driver error (Marchant & Lindor, 2012)?

Current legislation

If we look at how responsibility works for conventional vehicles, we find that responsibility is usually assigned to the driver for failing to obey traffic regulations (Pöllänen, Read, Lane, Thompson, & Salmon, 2020). This can be as small and common as driving too fast or losing attention for a fraction of a second, something nearly everyone is guilty of at some point. Usually this has no consequences, but sometimes it can lead to catastrophic results. Even in such a moment of misfortune, the driver is still held responsible. As Nagel (1982) theorized, the only difference between driving a little too fast and killing a child who unexpectedly crosses the street, and driving a little too fast when no child crosses, is bad luck. The consequences, however, are enormous, for the child but also for the driver (Nagel, 1982). This reasoning could also be applied to automated vehicles: if an accident happens, it is simply bad luck for the driver, who would then be held liable. However, because this depends on luck, and because most autonomous vehicles give the driver little to no control, this option is not considered plausible (Hevelke & Nida-Rümelin, 2015).

Blame attribution

A couple of studies have shown that the level of control is crucial in blame attribution. McManus and Rutchick (2018) showed that people attribute less blame to a driver in a fully automated vehicle than in situations where the driver selected a different algorithm (e.g. one that behaves selfishly) or drove manually (McManus & Rutchick, 2018). Another study (Li, Zhao, Cho, Ju, & Malle, 2016) investigated how blame is attributed among the manufacturer, government agencies, the driver and pedestrians. They found that blame for drivers is reduced when the vehicle is fully autonomous, whereas blame for the manufacturer and government agencies increases.

The manufacturer

It may seem obvious to say that the manufacturer of the car is responsible: they designed the car, so if it makes a mistake, they are to blame. However, there are different types of defects. Firstly, there is a manufacturing defect, where the product does not turn out as intended even though all procedures were carefully followed. This type of defect is very rare, since modern manufacturing has a very low error rate (Marchant & Lindor, 2012). A second type of defect lies in the instructions: when the manufacturer fails to adequately instruct and warn consumers, this can also count as a defect. A third type, and the most significant for autonomous vehicles, is the design defect. A design defect exists when the risks of harm could have been prevented or reduced by an alternative design (Marchant & Lindor, 2012).

For any flaw in the system that might cause the car to crash and that the manufacturer knew or could have known about beforehand, there is no question that the manufacturer is responsible if they sold the car anyway. However, holding the manufacturer responsible in every case would strongly discourage anyone from producing autonomous cars. With technology as complex as autonomous driving systems, it is nearly impossible to make them flawless (Marchant & Lindor, 2012). To encourage companies to manufacture autonomous vehicles while still holding them responsible, a balance between the two needs to be found. This is necessary because removing all liability would also have undesirable effects (Hevelke & Nida-Rümelin, 2015). In short, a way must be found to hold manufacturers liable enough that they keep improving their technology.

Semi-autonomous vehicles

As stated above, there have been studies on blame attribution for fully autonomous vehicles and for vehicles with certain pre-selected algorithms. A semi-autonomous vehicle (one where the driver has a duty to intervene) has not been discussed yet. A good analogy for a semi-autonomous vehicle is an airplane on autopilot. The plane flies itself, but it is the pilot's responsibility to intervene when something goes wrong (Marchant & Lindor, 2012). By analogy, it could be suggested that the driver of the vehicle be held responsible in case of an accident. If the car is designed so that the driver is able to take over and intervene, this could be used as an argument against the driver. However, this raises the question of what the utility of automated vehicles would be if they were designed like this. After all, when the driver has a duty to intervene, the vehicle can no longer be summoned when needed, nor used as a safe ride home when the driver is drunk or tired (Howard, 2013). Still, as long as the vehicles reduce accidents overall, they would be a better option than conventional vehicles, whether or not the driver has a duty to intervene (Hevelke & Nida-Rümelin, 2015). The accident rate might even drop further when the driver does have a duty to intervene, since the driver can then step in when, for example, they see something the car does not. It would also allow for a more gradual transition when introducing automated vehicles, instead of them suddenly being fully automatic.

On the other hand, asking the driver to intervene in a fully automated vehicle is questionable. It assumes that the driver can intervene at all times, which is not always possible because of human limitations in reaction time and hazard anticipation (Hevelke & Nida-Rümelin, 2015). It would be difficult to recognize whether the automated vehicle is about to respond incorrectly, so it would be unclear when the driver needs to intervene. It would therefore be unrealistic to expect the driver to predict a dangerous situation. Another problem may also arise: the driver might intervene when they should not, resulting in an accident (Douma & Palodichuk, 2012). Furthermore, as argued by Hevelke and Nida-Rümelin (2015), it seems impossible to expect a driver to pay attention at all times in order to be able to intervene, when actual accidents are quite rare. All in all, it would be unreasonable to put responsibility on a driver who did not, or could not, intervene.

Shared liability

As discussed previously, the responsibility for an accident can be placed on the individual driving the autonomous vehicle. For a number of reasons this is not ideal. An alternative would be to create a shared liability. People who drive cars every day (especially when it is not necessary) take the risk of causing an accident, yet they still choose to drive (Husak, 2004). This reasoning can be extended to automated vehicles. If people choose to use an automated vehicle, they in turn accept the risk of an accident being caused by that vehicle. The responsibility for an accident is therefore shared with everyone else in the country who also uses automated vehicles. In that sense, the driver did nothing wrong and did not intervene too late; they simply share the burden with everyone else. A system compatible with this line of thinking would be the introduction of a tax or mandatory insurance (Hevelke & Nida-Rümelin, 2015).

So, it seems there are a couple of options. The manufacturer can be held fully responsible; however, this could discourage or even halt the manufacturing of autonomous vehicles. On the other hand, it is desirable that the manufacturer bears some liability, so that they keep investing in improving the vehicle. Giving the driver full responsibility seems workable only in the early phase of autonomous vehicles, while they are still in development and drivers really do have a duty to intervene. When the vehicles are more sophisticated and able to drive fully autonomously, the responsibility can be shared among all users through a tax or insurance.

Safety

One of the main factors deciding whether self-driving cars will be accepted is their safety. After all, who would put their life in the hands of another entity knowing it is not completely safe? Yet almost everyone gets into buses and planes without doubt or fear. Would we be able to do the same with self-driving cars? Cars have become increasingly autonomous over the last decades. Furthermore, self-driving cars will operate in unstructured environments, which adds many unexpected situations (Wagner et al., 2015).

Traffic behaviour

The car's safety will be determined by the way it is programmed to act in traffic. Will it stop for every pedestrian? If it does, pedestrians will know this and may cross the road wherever they want. Will it adopt the driving style of humans? How does the driving behaviour of automated vehicles influence trust and acceptance?

In one study, two different designs were presented to a group of participants. One was programmed to simulate a human driver, while the other communicated with its surroundings in a way that allowed it to drive without stopping or slowing down. The study showed no significant difference in trust between the two automated vehicles. However, it did show that trust grew as the study continued (Oliveira et al., 2019). This suggests that the driving style does not necessarily influence acceptance, but that the overall safety of the driving behaviour does.

Errors

Contrary to what many people think, humans are quite capable of avoiding car crashes. It is inevitable that a computer will sometimes crash; think about how often your laptop freezes. A response delayed by even a fraction of a second can have disastrous consequences. Software for self-driving vehicles must therefore be made fundamentally different. This is one of the major challenges currently holding back the development of fully automated cars. By contrast, automated aircraft are already in use. However, software on automated aircraft is much less complex, since it has to deal with fewer obstacles and almost no other vehicles (Shladover, 2016).

Cybersecurity

The software driving a fully autonomous vehicle (AV) will have more than 100 million lines of code, so it is impossible to predict all of its security problems. Windows 10 consists of 50 million lines of code, and it has had many bugs. Doubling the amount of code will result in an even higher probability of unknown vulnerabilities (Parkinson et al., 2017).
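The argument that more code means more unknown vulnerabilities can be illustrated with a simple model. As a purely illustrative assumption, not taken from Parkinson et al. (2017), suppose that every line of code independently contains an undiscovered vulnerability with the same small probability p. For a code base of N lines, the expected number of vulnerabilities V and the probability of at least one vulnerability are then:

```latex
\[
E[V] = pN, \qquad P(V \geq 1) = 1 - (1 - p)^{N}
\]
\[
\frac{E[V_{\text{AV}}]}{E[V_{\text{Win10}}]} = \frac{N_{\text{AV}}}{N_{\text{Win10}}} \geq \frac{100 \times 10^{6}}{50 \times 10^{6}} = 2
\]
```

Under this assumption, for any 0 < p < 1, the AV software would contain at least twice as many expected vulnerabilities as Windows 10. At least one vulnerability would also be more likely. Real defect rates differ between code bases, so this only sketches the reasoning and is not an estimate.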

Versus humans

Self-driving cars hold the potential to eliminate all accidents, or at least those caused by inattentive drivers (Wagner et al., 2015). A study of Google's self-driving cars suggests that they are safer than conventional human-driven vehicles. However, there is insufficient data to draw a firm conclusion. Nevertheless, the results suggest that highly autonomous vehicles will be safer than human drivers under certain conditions. This does not mean that there will be no car crashes in the future, since these cars will continue to be involved in crashes with human drivers (Teoh et al., 2017).

The city