PRE2018 3 Group11: Difference between revisions

| Line 125: | Line 125: | ||

== Human factors == | == Human factors == | ||

Drones are finding more and more applications around our globe and are becoming increasingly available and used on the mass market. In various applications of drones, an interaction with humans who might or might not be involved in the task that a drone carries out, is inevitable and sometimes even the core of the application. Even though drones are still widely perceived as dangerous or annoying, there is a common belief that they will get more socially accepted over time [http://interactions.acm.org/archive/view/may-june-2018/human-drone-interaction#top]. However, since the technology and therefore the research on human-drone interaction is still | Drones are finding more and more applications around our globe and are becoming increasingly available and used on the mass market. In various applications of drones, an interaction with humans who might or might not be involved in the task that a drone carries out, is inevitable and sometimes even the core of the application. Even though drones are still widely perceived as dangerous or annoying, there is a common belief that they will get more socially accepted over time [http://interactions.acm.org/archive/view/may-june-2018/human-drone-interaction#top]. However, since the technology and therefore the research on human-drone interaction is still relatively new, our design should incorporate human factors without assuming general social acceptance of drones. | ||

The next sections will focus on different human factors that will influence our drone’s design with different goals. This will include the drone’s physical appearance and its users’ comfort with it, safety with respect to its physical design, as well as functionality-affecting factors which might contribute to a human's ability to follow the drone | The next sections will focus on different human factors that will influence our drone’s design with different goals. This will include the drone’s physical appearance and its users’ comfort with it, safety with respect to its physical design, as well as functionality-affecting factors which might contribute to a human's ability to follow the drone. | ||

=== Physical appearance === | === Physical appearance === | ||

The physical design of the drone depends on different factors. It is firstly defined and limited by technical requirements, as for example the battery size and weight, the size and number of propellers and motors and the system’s capacity for carrying additional weight (all discussed in “Technical considerations” [LINK?]). As soon as the basic functionality is given, the safety of the drone’s users has to be considered in the design. This is done in the section “Safety” [LINK?]. Taking all these requirements into account, further design-influencing human factors can now be discussed. However, the design process is not hierarchical, but rather iterative, which means that all mentioned factors can influence each other. | |||

* '''Drone size''': For a human to feel comfortable using a drone as a guiding assistant, the size will play an important role. Especially during landing or storage, a large drone will be unwieldy, whereas a small drone might be more difficult to detect while following it. A 2017 study by Romell and Karjalainen [http://publications.lib.chalmers.se/records/fulltext/250062/250062.pdf] investigated what size people prefer for a drone companion. The definition of a drone companion in this study lies close enough to our follow-me drone to consider its results for our case. Given the choice between a small, medium or large drone, the majority of participants opted for the medium-size drone, which was approximated at a diameter of 40 cm. Giving priority to the technical limitations and the drone’s optimal functionality, we took this result into account. | |||

* '''Drone shape''' | |||

The 2017 study by Romell and Karjalainen [http://publications.lib.chalmers.se/records/fulltext/250062/250062.pdf] mentioned above also evaluated the optimal shape for such a drone companion. It concluded that round design features in particular are preferred, which we also considered in our design process. | |||

Additionally, while the drone is not flying, a user should intuitively be able to pick up and hold the drone without being afraid of getting hurt or damaging the drone. Therefore, a sturdy frame or a visible handle should be included. | |||

* '''Drone color''' | |||

Since the color of the drone greatly contributes to the visibility of it, and is therefore crucial for the correct functioning of the system, the choice of color is discussed in the section “Salience in traffic” [LINK?]. | |||

=== Safety === | === Safety === | ||

The safety section will | The safety section considers all design choices that have to be made to ensure that no humans will be endangered by the drone. These choices are divided into factors that influence the physical aspects of the design, and factors which concern dynamic features of the functionality. | ||

* '''Safety of the physical design''' | |||

The biggest hazard of most drones is an accident caused by an object getting into a running propeller, which can damage the object (or injure a human), damage the drone, or both. Propellers need as much free air flow as possible directly above and below to function properly, which restricts mechanical designs that could keep objects from entering the circle of the moving propeller. However, most common drone designs involve an enclosure around the propeller (the turning axis), which is a design step that we also follow for safety reasons. | |||

Other than the propellers, we are not aware of another evident safety hazard that can be solved by changes in the physical design of the drone. | |||

* '''Safety aspects during operation''' | |||

Besides hazards that the drone is directly responsible for, like injuring a human with its propellers, there are also indirect hazards to be considered that can be avoided or minimized by a good design. | |||

The first hazard of this kind that we identified is a scenario in which the drone leads its user into a dangerous or fatal situation in traffic. For example, if the drone is unaware of a red traffic light and keeps leading the way across the road, and the user overtrusts the drone and follows it without obeying traffic rules, this could result in an accident. | |||

Since this design will not incorporate real-time traffic monitoring, we have to ensure that our users are aware of their unchanged responsibility as traffic participants when using our drone. However, for future designs, data from online map services could be used to identify when crossing a road is necessary and subsequently create a scheme that the drone follows before leading a user onto a trafficked road. | |||

Another hazard we identified concerns all traffic participants besides the user. There is a danger that the drone distracts other people on the road and thereby causes an accident. This assumption is mainly due to drones not being widely applied in traffic situations (yet), which makes a flying object such as our drone a noticeable and uncommon sight. However, the responsibility lies with every conscious participant in traffic, which is why we will not let this hazard greatly influence our design. The drone will be designed to be as easily visible and conspicuous as possible for its user, while keeping the attention it attracts from other road users to a minimum. | |||

=== Salience in traffic === | === Salience in traffic === | ||

As our standard use case described, the drone is aimed mostly at application in traffic. It should be able to safely navigate its user to | As our standard use case described, the drone is aimed mostly at application in traffic. It should be able to safely navigate its user to her destination, no matter how many other vehicles, pedestrians or further distractions are around. A user should never get the feeling to be lost while being guided by our drone. | ||

To reach this requirement, the most | To reach this requirement, the most evident solution is constant visibility of the drone. Since human attention is easily distracted, also conspicuity, which describes the property of getting detected or noticed, is an important factor for users to find the drone quickly and conveniently when lost out of sight for a brief moment. However, the conspicuity of objects is perceived similarly by (almost) every human, which introduces the problem of unwillingly distracting other traffic participants with an overly conspicuous drone. | ||

We will focus on two factors of salience and conspicuity: | We will focus on two factors of salience and conspicuity: | ||

* '''Color''' | * '''Color''' | ||

The color characteristics of the drone can be a leading factor in increasing the overall visibility and conspicuity of the drone. The more salient the color scheme of the drone proves to be, the easier it will be for its user to detect it. Since the coloring of the drone alone would not emit any light itself, we assume that this design | The color characteristics of the drone can be a leading factor in increasing the overall visibility and conspicuity of the drone. The more salient the color scheme of the drone proves to be, the easier it will be for its user to detect it. Since the coloring of the drone alone would not emit any light itself, we assume that this design property does not highly influence the extent of distraction for other traffic participants. | ||

Choosing a color does seem like a difficult step in a traffic environment, where | Choosing a color does seem like a difficult step in a traffic environment, where certain colors have implicit, but clear meanings that many humans got accustomed to. We would not want the drone to be confused with any traffic lights or street signs, since that would lead to serious hazards. But we would still need to choose a color that is perceived as salient as possible. Stephen S. Solomon conducted a study about the colors of emergency vehicles [http://docshare01.docshare.tips/files/20581/205811343.pdf]. The results of the study were based upon a finding about the human visual system: It is most sensitive to a specific band of colors, which involves ‘lime-yellow’. Therefore, as the study showed, lime-yellow emergency vehicles were involved in less traffic accidents, which does indeed let us draw conclusions about the conspicuity of the color. | ||

This leads us to our consideration for lime-yellow as base color for the drone. | This leads us to our consideration for lime-yellow as base color for the drone. | ||

To increase contrast, similar to emergency vehicles, red is chosen as a contrast color. This is implemented by placing stripes or similar patterns on some well visible parts of the drone. | |||

* '''Luminosity''' | * '''Luminosity''' | ||

To further increase the visibility of the drone, light should be reflected and emitted from it. Our design of the drone will only guarantee functionality by daylight, since a lack of light drastically limits the object and user detection possibilities. But an emitting light source on the drone itself is still valuable in daylight situations. | |||

We assume that a light installation which is visible from every possible angle of the drone should be comparable in emitted light to a standard LED bicycle lamp, which has about 100 lumens. As for the light color, we choose a color that does not already carry a strict meaning in traffic and can be seen normally by color-blind people. This makes blue the most rational choice. | |||

=== Positioning in the visual field === | === Positioning in the visual field === | ||

A correct positioning of the drone will be extremely valuable for a user, but due to individual preferences, it is difficult to derive a default position. The drone should not deviate too much from its usual height and position within its user's attentional field; however, it might have to avoid obstacles, wait, and make turns without confusing the user. | |||

According to a study by Wittmann et al. (2006) [https://doi.org/10.1016/j.apergo.2005.06.002], it is easier for the human visual-attentional system to track an arbitrarily moving object horizontally than vertically. For that reason, our drone should use only slight vertical movements when avoiding obstacles, but is freer to change its position horizontally. Since the drone will therefore mostly stay at the same height while guiding a user, it becomes easier for the user to detect the drone, especially with repeated use. | |||

In a study that investigated drone motion to guide pedestrians [https://dl.acm.org/citation.cfm?id=3152837], a small sample of participants stated their preferred distance and height of a drone guiding them. The results showed a mean horizontal distance of 4 meters between the user and the drone as well as a preferred mean height of 2.6 meters. As these values can be individually configured for our drone, we see them merely as default values. We derived an approximate preferred viewing angle of 15 degrees, so we are able to adjust the default position of the drone to each user's body height, which is entered upon first configuration of the drone. | |||

<!-- | |||

=== User satisfaction and experience design === | === User satisfaction and experience design === | ||

A user should feel comfortable using this technology. This section will treat how this could be achieved and how a user’s satisfaction with the experience could be increased. | A user should feel comfortable using this technology. This section will treat how this could be achieved and how a user’s satisfaction with the experience could be increased. | ||

--> | |||

== Legal Issues == | == Legal Issues == | ||

Revision as of 20:52, 13 March 2019


Organization

The group composition, deliverables, milestones, planning and task division can be found on the organization page.

Brainstorm

To explore possible subjects for this project, a brainstorm session was held. Out of the various ideas, the Follow-Me Drone was chosen to be our subject of focus.

Problem Statement

The ability to navigate around one’s environment is a difficult mental task whose complexity is often left unacknowledged by most of us. By “difficult”, we are not referring to tasks such as pointing north while blindfolded, but rather to much simpler ones, such as following a route drawn on a map or recalling the path to one’s favourite cafe. Such tasks require complex brain activity and fall under the broad skill of ‘navigation’, which is present in humans at diverse skill levels. People whose navigation skills fall on the lower end of the figurative scale are slightly troubled in their everyday life: it might take them substantially longer than others to find their way to work through memory alone, or they might get lost in new environments every now and then.

Navigation skills can be traced back to certain distinct cognitive processes (to a limited extent, nevertheless, as developments in neurosciences are still progressing [1]), which means that a lesion in specific brain regions can cause a human’s navigational abilities to decrease dramatically. This was found in certain case studies with patients that were subject to brain damage, and labeled “Topographical Disorientation” (TD), which can affect a broad range of navigational tasks [2]. Within the last decade, cases started to show up in which people showed similar symptoms to patients with TD, without having suffered any brain damage. This disorder in normally developed humans was termed “Developmental Topographical Disorientation” (DTD) [3], which, as was recently discovered, might affect 1-2% of the world's population (this is an estimate based on informal studies and surveys) [4] [5].

Many of the affected people cannot rely on map-based navigation and sometimes even get lost on everyday routes. To make their everyday life easier, one solution is to use a drone. The idea of the playfully-named “follow-me drone” is simple: By flying in front of its user, accurately guiding them to a desired location, the drone takes over the majority of a human’s cognitive wayfinding tasks. This makes it easy and safe for people to find their destination with minimized distraction and cognitive workload of navigational tasks.

The first design of the “follow-me drone” will be mainly aimed at people with severe cases of (D)TD who need this drone regularly, with the goal of giving those people some of their independence back. This means that the design incorporates features that make the drone more valuable to its user with repeated use; it is not only thought of as a product for rare or even one-time use as part of a service.

User

Society

The societal aspect of the follow-me drone regards the moral standpoint of society towards cognitively-impaired individuals. Since impairments should not create insuperable barriers in the daily life of affected people, it can be seen as a service to society as a whole to create solutions which make it easier to live—even if that solution only helps a small fraction of the world's population.

Enterprise

Since this solution is unique in its concept and specifically aimed at a certain user group, producing the drone is a worthy investment opportunity. However, the focus should always stay on providing the best possible service to the end-users. User-centered design is a key to this development.

User specification

While we are well aware that the target user base is often not congruent with the real user base, we would still like to deliver a good understanding of the use cases our drone is designed for. Delivering this understanding is also important for future reference and for the general iterative process of user-centered design.

Approach

In order to get to a feasible design solution, we will do research and work out the following topics:

- User requirements

- Which daily-life tasks are affected by Topographical Disorientation and should be addressed by our design?

- What limitations does the design have to take into account to meet the requirements of the specified user base?

- Human technology interaction

- What design factors influence users’ comfort with these drones?

- Which features does the technology need to incorporate to ensure intuitive and natural guiding experiences?

- How to maximise salience of the drone in traffic?

- What velocities, distances and trajectories of the drone will enhance the safety and satisfaction of users while being guided?

- Which kind of interfaces are available in which situations for the user to interact with the drone?

- User tracking

- How exact should the location of a user be tracked to be able to fulfill all other requirements of the design?

- How will the tracking be implemented?

- Positioning

- How to practically implement findings about optimal positions and trajectories?

- Obstacle avoidance

- What kind of obstacle avoidance approaches for the drone seem feasible, given the limited time and resources of the project?

- How to implement a solution for obstacle avoidance?

- Special circumstances

- What limitations does the drone have in regards to weather (rain, wind)?

- How well can the drone perform at night (limited visibility)?

- Physical design

- What size and weight limitations does the design have to adhere to?

- What sensors are needed?

- Which actuators are needed?

- Simulation

Alternative Solutions

3D Navigation is an alternative technology that can be used instead of the Follow-Me Drone. TomTom 3D Navigation is already used in new generation Peugeot cars. Apple has a Flyover feature that lets you get 3D views of a certain area. While it does not act as a navigator, it lays a foundation for the development of 3D mobile navigation.

- Google Maps

Google Maps has announced and demonstrated a new feature that works in tandem with Street View. The user will be able to navigate streets with a live camera view on their phone’s screen, overlaid with navigational instructions. The user can walk around streets, point their camera towards buildings and receive information about those buildings. This could be well suited to DTD patients because they have trouble recognizing landmarks. On top of that, it provides personalized suggestions: once the user points their phone in the direction of a building, they can get a notification that says what the building is and why it is suggested. The overlay becomes more effective when combined with an earlier project, Google Glass, especially for DTD patients.

References:

- Google Maps is getting augmented reality directions and recommendation features

- Google made AR available for a small group of users already

- iPhone Flyover Apple Flyover

In Maps on iPhone, you can fly over many of the world's major cities and landmarks. It lets you get 3D views of a selected area.

“WRLD 3D maps provide a simple path to create virtual worlds based on real-world environments”. This provides a platform for further app development, which means that even if Google and Apple do not provide APIs to the backends of their respective technologies, the WRLD platform facilitates the same functionality.

Solution

Here we discuss our solution. If it consists of multiple types of sub-problems that we defined in the problem statement section, then use separate sections (placeholders for now).

Requirements

Aside from getting lost in extremely familiar surroundings, it appears that individuals affected by DTD do not consistently differ from the healthy population in their general cognitive capacity. Since we can't reach the targeted group, it is difficult to define a set of special requirements.

- The Follow-Me Drone has a navigation system that computes the optimal route from any starting point to the destination.

- The drone must guide the user to their destination by following the computed route while hovering in the user's direct line of sight.

- The drone must guide the user without the need to display a map.

- The drone must provide an interface to the user that allows the user to specify their destination without the need to interact with a map.

- The drone announces when the destination is reached.

- The drone must fly in an urban environment.

- The drone must keep a minimum distance of two? meters to the user.

- The drone must not go out of line of sight of the user.

- The drone must know how to take turns and maneuver around obstacles and avoid hitting them.

- The drone should be operable for at least an hour between recharging periods back at home.

- The drone should be able to fly.

- (Optional, if the drone should guide a biker) The drone should be able to maintain speeds of 10m/s.

- The drone should not weigh more than 4 kilograms.
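
The quantitative requirements in this list can be collected in a small check, sketched here in Python. This is only an illustration: the class, field and function names are hypothetical, and the minimum distance to the user is deliberately left out because the list above still marks that value as undecided.

```python
from dataclasses import dataclass

# Thresholds taken from the requirement list above.
MIN_FLIGHT_TIME_MIN = 60.0  # "at least an hour between recharging periods"
MAX_MASS_KG = 4.0           # "should not weigh more than 4 kilograms"
BIKE_SPEED_MPS = 10.0       # optional requirement when guiding a biker


@dataclass
class DroneSpec:
    """Hypothetical summary of a candidate drone design."""
    flight_time_min: float
    mass_kg: float
    max_speed_mps: float


def unmet_requirements(spec, guide_bikers=False):
    """Return a list describing which quantitative requirements are not met."""
    problems = []
    if spec.flight_time_min < MIN_FLIGHT_TIME_MIN:
        problems.append("flight time below one hour")
    if spec.mass_kg > MAX_MASS_KG:
        problems.append("mass above 4 kg")
    # The speed requirement only applies to the optional biker use case.
    if guide_bikers and spec.max_speed_mps < BIKE_SPEED_MPS:
        problems.append("top speed below 10 m/s")
    return problems
```

An empty list means that the candidate design satisfies the three numeric requirements; the qualitative requirements (line of sight, obstacle avoidance, interface) cannot be checked this way.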

Human factors

Drones are finding more and more applications around our globe and are becoming increasingly available and used on the mass market. In various applications of drones, an interaction with humans who might or might not be involved in the task that a drone carries out, is inevitable and sometimes even the core of the application. Even though drones are still widely perceived as dangerous or annoying, there is a common belief that they will get more socially accepted over time [6]. However, since the technology and therefore the research on human-drone interaction is still relatively new, our design should incorporate human factors without assuming general social acceptance of drones.

The next sections will focus on different human factors that will influence our drone’s design with different goals. This will include the drone’s physical appearance and its users’ comfort with it, safety with respect to its physical design, as well as functionality-affecting factors which might contribute to a human's ability to follow the drone.

Physical appearance

The physical design of the drone depends on different factors. It is firstly defined and limited by technical requirements, as for example the battery size and weight, the size and number of propellers and motors and the system’s capacity for carrying additional weight (all discussed in “Technical considerations” [LINK?]). As soon as the basic functionality is given, the safety of the drone’s users has to be considered in the design. This is done in the section “Safety” [LINK?]. Taking all these requirements into account, further design-influencing human factors can now be discussed. However, the design process is not hierarchical, but rather iterative, which means that all mentioned factors can influence each other.

- Drone size: For a human to feel comfortable using a drone as a guiding assistant, the size will play an important role. Especially during landing or storage, a large drone will be unwieldy, whereas a small drone might be more difficult to detect while following it. A 2017 study by Romell and Karjalainen [7] investigated what size people prefer for a drone companion. The definition of a drone companion in this study lies close enough to our follow-me drone to consider its results for our case. Given the choice between a small, medium or large drone, the majority of participants opted for the medium-size drone, which was approximated at a diameter of 40 cm. Giving priority to the technical limitations and the drone’s optimal functionality, we took this result into account.

- Drone shape

The 2017 study by Romell and Karjalainen [8] mentioned above also evaluated the optimal shape for such a drone companion. It concluded that round design features in particular are preferred, which we also considered in our design process. Additionally, while the drone is not flying, a user should intuitively be able to pick up and hold the drone without being afraid of getting hurt or damaging the drone. Therefore, a sturdy frame or a visible handle should be included.

- Drone color

Since the color of the drone greatly contributes to the visibility of it, and is therefore crucial for the correct functioning of the system, the choice of color is discussed in the section “Salience in traffic” [LINK?].

Safety

The safety section considers all design choices that have to be made to ensure that no humans will be endangered by the drone. These choices are divided into factors that influence the physical aspects of the design, and factors which concern dynamic features of the functionality.

- Safety of the physical design

The biggest hazard of most drones is an accident caused by an object getting into a running propeller, which can damage the object (or injure a human), damage the drone, or both. Propellers need as much free air flow as possible directly above and below to function properly, which restricts mechanical designs that could keep objects from entering the circle of the moving propeller. However, most common drone designs involve an enclosure around the propeller (the turning axis), which is a design step that we also follow for safety reasons. Other than the propellers, we are not aware of another evident safety hazard that can be solved by changes in the physical design of the drone.

- Safety aspects during operation

Besides hazards that the drone is directly responsible for, like injuring a human with its propellers, there are also indirect hazards to be considered that can be avoided or minimized by a good design.

The first hazard of this kind that we identified is a scenario in which the drone leads its user into a dangerous or fatal situation in traffic. For example, if the drone is unaware of a red traffic light and keeps leading the way across the road, and the user overtrusts the drone and follows it without obeying traffic rules, this could result in an accident.

Since this design will not incorporate real-time traffic monitoring, we have to ensure that our users are aware of their unchanged responsibility as traffic participants when using our drone. However, for future designs, data from online map services could be used to identify when crossing a road is necessary and subsequently create a scheme that the drone follows before leading a user onto a trafficked road.

Another hazard we identified concerns all traffic participants besides the user. There is a danger that the drone distracts other people on the road and thereby causes an accident. This assumption is mainly due to drones not being widely applied in traffic situations (yet), which makes a flying object such as our drone a noticeable and uncommon sight. However, the responsibility lies with every conscious participant in traffic, which is why we will not let this hazard greatly influence our design. The drone will be designed to be as easily visible and conspicuous as possible for its user, while keeping the attention it attracts from other road users to a minimum.

Salience in traffic

As our standard use case described, the drone is aimed mostly at application in traffic. It should be able to safely navigate its user to their destination, no matter how many other vehicles, pedestrians or further distractions are around. A user should never feel lost while being guided by our drone.

To meet this requirement, the most evident solution is constant visibility of the drone. Since human attention is easily distracted, conspicuity, which describes the property of being detected or noticed, is also an important factor for users to find the drone quickly and conveniently when it is out of sight for a brief moment. However, the conspicuity of objects is perceived similarly by (almost) every human, which introduces the problem of unintentionally distracting other traffic participants with an overly conspicuous drone. We will focus on two factors of salience and conspicuity:

- Color

The color characteristics of the drone can be a leading factor in increasing the overall visibility and conspicuity of the drone. The more salient the color scheme of the drone proves to be, the easier it will be for its user to detect it. Since the coloring of the drone alone would not emit any light itself, we assume that this design property does not highly influence the extent of distraction for other traffic participants.

Choosing a color is a difficult step in a traffic environment, where certain colors have implicit but clear meanings that many people have become accustomed to. We would not want the drone to be confused with any traffic lights or street signs, since that would lead to serious hazards. Still, we need to choose a color that is perceived as being as salient as possible. Stephen S. Solomon conducted a study about the colors of emergency vehicles [9]. The results of the study were based upon a finding about the human visual system: it is most sensitive to a specific band of colors, which includes ‘lime-yellow’. As the study showed, lime-yellow emergency vehicles were involved in fewer traffic accidents, which lets us draw conclusions about the conspicuity of the color.

This leads us to consider lime-yellow as the base color for the drone. To increase contrast, similar to emergency vehicles, red is chosen as a contrast color. This is implemented by placing stripes or similar patterns on some clearly visible parts of the drone.

- Luminosity

To further increase the visibility of the drone, light should be reflected and emitted from it. Our design of the drone will only guarantee functionality in daylight, since a lack of light drastically limits the object and user detection possibilities. But a light source on the drone itself is still valuable in daylight situations. We assume that a light installation which is visible from every possible angle of the drone should be comparable in emitted light to a standard LED bicycle lamp, which has about 100 lumens. As for the light color, we choose a color that is not already associated with any strict meaning in traffic and can be seen normally by color-blind people. This makes blue the most rational choice.

Positioning in the visual field

A correct positioning of the drone will be extremely valuable for a user, but due to individual preferences it is difficult to derive a default positioning. The drone should not deviate too much from its usual height and position within its user's attentional field; however, it might have to avoid obstacles, wait, and make turns without confusing the user. According to a study by Wittmann et al. (2006) [10], it is easier for the human visual-attentional system to track an arbitrarily moving object horizontally than vertically. For that reason, our drone should avoid objects with only slight movements in the vertical direction, but is freer to change its position horizontally. Since the drone will therefore mostly stay at the same height while guiding a user, it is easier for the user to detect the drone, especially with repeated use. In a study that investigated drone motion to guide pedestrians [11], a small sample of participants stated their preferred distance and height of a drone guiding them. The results showed a mean horizontal distance of 4 meters between the user and the drone as well as a preferred mean height of 2.6 meters. As these values can be individually configured for our drone, we see them merely as default values. We derived an approximate preferred viewing angle of 15 degrees, so we are able to adjust the default position of the drone to each user's body height, which is entered upon first configuration of the drone.
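The relation between viewing angle, horizontal distance and drone height can be sketched as follows. This is only a minimal sketch: it assumes the 15 degree angle is measured upward from the user's eye level, and it approximates eye height as body height minus a fixed offset. The offset of 0.12 m and the function name are illustrative assumptions, not values taken from the cited study.

```python
import math

# Assumed distance from the top of the head to the eyes (hypothetical value).
EYE_OFFSET_M = 0.12


def default_drone_height(body_height_m, distance_m=4.0, viewing_angle_deg=15.0):
    """Height at which the drone appears viewing_angle_deg above eye level."""
    eye_height_m = body_height_m - EYE_OFFSET_M
    rise_m = distance_m * math.tan(math.radians(viewing_angle_deg))
    return eye_height_m + rise_m


if __name__ == "__main__":
    for body_height in (1.60, 1.75, 1.90):
        height = default_drone_height(body_height)
        print(f"body height {body_height:.2f} m -> drone height {height:.2f} m")
```

Under these assumptions, a user of about 1.72 m ends up with a default height close to the 2.6 m mean reported in the study.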

Legal Issues

In this project, a flying drone is designed to move autonomously through urban environments to guide a person to their destination. It is important, however, to first look at relevant legal issues. In the Netherlands, laws regarding drones are not formulated in a clear way. There are two sets of laws: recreational-use laws and business-use laws. It is not explicitly clear which set of laws applies to this project, if any at all. The drone is not entirely intended for recreational use because of its clear necessity to the targeted user group. On the other hand, it does not fall under business use either, since it is not “used by a company to make money”; this does not include selling it to users. In that case, it is in the users’ ownership and is no longer considered used by the company. If the drone is rented, however, it still might fall under business use. In conclusion, neither set of laws clearly applies to our project. Since drones are not yet commonly employed in society, this is not unexpected, and we might expect new laws to adapt to cases such as ours.

According to law, drone users are not allowed to pilot drones above residential areas regardless of their use. Our drone, however, flies through residential areas for its intended purpose, not above them.

Legislation is subject to change that can be in accordance with our project as well as against it. That being said, we can safely design our drone as intended without worrying about legislation. Our design does not explicitly violate any laws.

Technical considerations

Battery life

One thing to take into consideration while developing a drone is the operating duration. Most commercially available drones cannot fly longer than 30 minutes [12],[13]. This is not a problem for a walk to the supermarket around the block, but for a commute to work, for example, a longer battery life would be preferred. Furthermore, wind and rain have a negative influence on the flight time of the drone, but the drone should still be usable in these situations, so it is important to improve battery life.

In order to keep the battery life as long as possible, a few measures could be taken. First of all, a larger battery could be used. This, however, makes the drone heavier and thus also requires more power. The practical energy density of a lithium-polymer battery is 150Wh/kg [14]. However, if the battery is discharged completely it will degrade, decreasing its lifespan. Therefore the battery should not be drained by more than 80%, meaning that the usable energy density is about 120Wh/kg.

Another way to improve flight time is to keep spare battery packs. It could be an option in this project to have the user bring a second battery pack with them for the trip back. For this purpose the drone should go back to the user when the battery is nearly empty and land. The user should then pick the drone up and swap the batteries. This would require the batteries to be easily (dis)connectable from the drone. A possible downside to this option is that the drone would temporarily shut down and might need some time to start back up after the new battery is inserted. This could be circumvented by using a small extra battery in the drone that only powers the controller hardware.

For this project, we first investigate whether a continuous flight of around 60 minutes is feasible. If it is not, the battery-swapping alternative is used. Unfortunately, no conclusive scientific research was found on the energy consumption of drones, so existing commercially available drones are investigated instead. In particular, the Blade Chroma Quadcopter Drone [15] is examined. It weighs 1.3kg and has a flight time of 30 minutes. It uses 4 brushless motors and has an 11.1V 6300mAh LiPo battery. The amount of energy can be calculated with [math]\displaystyle{ E[Wh]=U[V]\cdot C[Ah] }[/math], which gives about 70Wh. The battery weighs about 480g [16], so this agrees with the previously found energy density. The drone has a GPS system, a 4K 60FPS camera with gimbal and a video link system. The high-end camera will be swapped for a lighter, simpler camera that uses less energy, since the user tracking and obstacle avoidance work fine with that. The video link system will also not be used. The drone will only have to communicate simple commands to the user interface. This saves some battery usage, but on the other hand a computer board will be needed that runs the user tracking, obstacle avoidance and path finding. For now it is assumed that the energy usage remains the same. Unfortunately, the flight time of 30 minutes is calculated for ideal circumstances and without camera. The camera would decrease the flight time by about 7 minutes. For the influence of weather more research is needed, but for now it is assumed that the practical flight time is 15-20 minutes.
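The battery arithmetic above can be reproduced with a few lines of Python. This is a small sketch using only the figures quoted in the text (11.1V, 6300mAh, 480g and the 80% discharge limit); the function names are illustrative.

```python
def battery_energy_wh(voltage_v, capacity_ah):
    """Stored energy E = U * C in watt-hours."""
    return voltage_v * capacity_ah


def energy_density_wh_per_kg(energy_wh, mass_kg):
    """Energy per kilogram of battery mass."""
    return energy_wh / mass_kg


# Figures quoted for the Blade Chroma battery.
energy = battery_energy_wh(11.1, 6.3)               # about 70 Wh
density = energy_density_wh_per_kg(energy, 0.48)    # about 146 Wh/kg
usable_energy = 0.8 * energy                        # 80% discharge limit

if __name__ == "__main__":
    print(f"stored energy:  {energy:.1f} Wh")
    print(f"energy density: {density:.0f} Wh/kg")
    print(f"usable energy:  {usable_energy:.1f} Wh")
```

The resulting density of roughly 146Wh/kg is close to the 150Wh/kg practical value cited for lithium-polymer batteries.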

If a flight time of 60 minutes is required, a battery with at least 3 times the capacity is needed. However, since each of these battery packs weighs 480g, that would increase the drone weight by almost a factor of 2. The added weight would itself require so much more power that even more battery capacity and motor power would be needed. Therefore it can be reasonably assumed that this is not a desirable option. Instead, the user should bring 2 or 3 extra battery packs and swap them during longer trips.

It is, however, still possible to design a drone that has sufficient flight time on a single battery. Impossible Aerospace claims to have designed a drone that has a flight time of 78 minutes with a payload [17]. To make something similar, a large battery pack and stronger motors would be needed.

Propellers and motors

To have a starting point for choosing propellers and motors, the weight of the drone is assumed. Two cases are investigated. In the first, the drone has a flight time of 15-20 minutes and its battery needs to be swapped during the trip. In this case the drone is assumed to have a weight of approximately 1.5kg, which is slightly heavier than standard for commercially available long flight time drones. The other case is a drone that is designed to fly uninterrupted for one hour. The weight of this drone is assumed to be 4kg, the maximum for recreational drones in the Netherlands.

Multi charge drone with interchangeable batteries

With a weight of 1.5kg the thrust of the drone would at least have to be 15N. However, with this thrust the drone would only be able to hover and not actually fly. Several fora and articles on the internet state that for regular flying (no racing and acrobatics) at least a thrust twice as much as the weight is required (e.g. [18] and [19]) so the thrust of the drone needs to be 30N or higher. If four motors and propellers are used, this comes down to 7.5N per motor-propeller pair.
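The thrust requirement can be written as a short calculation. This is a sketch that uses g = 9.81 m/s², so the results are slightly below the rounded figures in the text (which correspond to g of about 10 m/s²); the function name and default arguments are illustrative.

```python
G = 9.81  # gravitational acceleration in m/s^2


def required_thrust_per_motor(mass_kg, thrust_to_weight=2.0, n_motors=4):
    """Thrust in newtons that each motor-propeller pair must deliver."""
    weight_n = mass_kg * G
    return thrust_to_weight * weight_n / n_motors


if __name__ == "__main__":
    for mass in (1.5, 4.0):  # the two drone weights considered in the text
        thrust = required_thrust_per_motor(mass)
        print(f"{mass:.1f} kg drone: {thrust:.2f} N per motor")
```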

APC Propellers publishes a database with the performance of all of their propellers [20], which is used here to get an idea of what kind of propeller is needed. There are far too many propellers to compare individually, so as a starting point a propeller diameter of around 10" is used (the propeller diameter of the previously discussed Blade Chroma Quadcopter), and the propeller with the lowest required torque at a static thrust (thrust when the drone does not move) of 7.5N or 1.69lbf is sought. This way the 11x4 propeller is found. It delivers the required thrust at around 6100RPM and requires a torque of approximately 1.03in-lbf or 116mNm.

With the required torque and rotational velocity known, a suitable motor can be chosen.
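Since the propeller database uses imperial units, the conversions to SI units are worth checking. The sketch below converts the quoted thrust and torque and estimates the mechanical shaft power per motor as torque times angular velocity. The conversion constants are standard; the function names are illustrative, and the power figure is only a rough estimate that ignores motor and controller losses.

```python
import math

LBF_TO_N = 4.4482216152605       # newtons per pound-force
INLBF_TO_NM = 0.1129848290276167  # newton-metres per inch-pound-force


def newton_to_lbf(force_n):
    return force_n / LBF_TO_N


def inlbf_to_mnm(torque_inlbf):
    """Convert torque from in-lbf to millinewton-metres."""
    return torque_inlbf * INLBF_TO_NM * 1000.0


def shaft_power_w(torque_nm, rpm):
    """Mechanical power P = torque * angular velocity."""
    return torque_nm * rpm * 2.0 * math.pi / 60.0


if __name__ == "__main__":
    torque_mnm = inlbf_to_mnm(1.03)
    print(f"7.5 N       = {newton_to_lbf(7.5):.3f} lbf")
    print(f"1.03 in-lbf = {torque_mnm:.1f} mNm")
    print(f"shaft power = {shaft_power_w(torque_mnm / 1000.0, 6100):.1f} W per motor")
```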

User Interface Design

The user interacts with the drone via multiple interfaces. Here we list the specific design elements and dynamics of each of these interfaces.

- A Natural Language User Interface (NLUI) to answer questions, make recommendations on desired destinations, ask permission to change route mid-flight, and handle similar speech-based interactions.

- A Graphical User Interface displayed through a web page. It lets the user enter or change the destination and current location and add favorite locations for quick access. It also allows the user to change the altitude of the drone and to stop and start the drone. A search bar is used to search for a place or address, and icon buttons are labeled food and drink, shopping, fun, and travel. Pressing food and drink shows a list of restaurants, bars and cafes with Yelp reviews. Pressing travel shows gas stations, landmarks, airports, bus stations, train stations and hotels. Pressing fun shows cinemas and other entertaining places. Favorites shows frequently used addresses.

- A Motion User Interface, which can interpret motion gestures.

Distance Estimation

An integral part of the operation of a drone is distance computation and estimation.

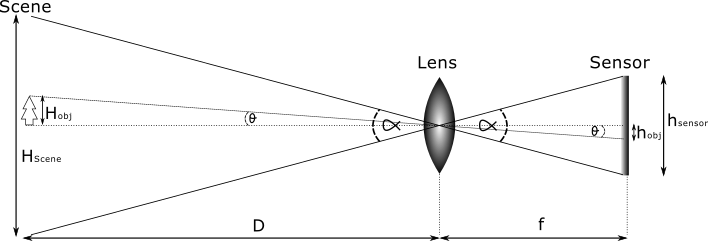

Since a camera can be considered to be a part of a drone, we present a formula for distance computation, given some parameters. The only assumption made is that the type of lens is rectilinear. The explained formula does not hold for other types of lenses.

[math]\displaystyle{ H_{obj} = D \cdot \frac{ h_{obj}(px)}{h_{sensor}(px)} \cdot V_{FOV}(radians) }[/math]

The parameters

- [math]\displaystyle{ H_{obj} }[/math] : The (real-world) height of the object or user in a metric system.

- [math]\displaystyle{ D }[/math] : The distance between the lens on the drone and an object or user in a metric system.

- [math]\displaystyle{ h_{obj}(px) }[/math] : The height of the object or user in pixels.

- [math]\displaystyle{ h_{sensor}(px) }[/math] : The pixel-height of the sensor of the camera. This can be computed in terms of the sensor’s pixel-size (which should be present in the specification of the used lens) and resolution of the digital image (which should easily be retrievable) [21].

- [math]\displaystyle{ V_{FOV}(radians) }[/math] : The vertical field of view of the lens. This is denoted by [math]\displaystyle{ \alpha }[/math] in the image above, and is either in the specification of the used lens, or can be computed using [math]\displaystyle{ f }[/math], the focal length and sensor size which both must be in said specification. It possibly needs a conversion to radians afterwards.

Rewriting the equation for [math]\displaystyle{ D }[/math], the distance gives the following.

[math]\displaystyle{ D = \frac{ H_{obj} }{ \frac{ h_{obj}(px)}{h_{sensor}(px)} \cdot V_{FOV}(radians) } }[/math]
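The rewritten equation translates directly into code. The following is a minimal sketch of that formula only; the function and parameter names are illustrative, and the example values (a 1.8 m person, a 1080 px high image, a vertical field of view of 0.8 rad) are assumptions chosen for demonstration.

```python
def estimate_distance(real_height_m, object_height_px, sensor_height_px, vfov_rad):
    """Distance D = H_obj / ((h_obj / h_sensor) * V_FOV) for a rectilinear lens."""
    if object_height_px <= 0 or sensor_height_px <= 0:
        raise ValueError("pixel heights must be positive")
    # Fraction of the vertical field of view covered by the object, in radians.
    angular_height_rad = (object_height_px / sensor_height_px) * vfov_rad
    return real_height_m / angular_height_rad


if __name__ == "__main__":
    distance = estimate_distance(1.8, 270, 1080, 0.8)
    print(f"estimated distance: {distance:.1f} m")
```

Because the real-world height of the user must be known, the body height entered during configuration could serve as that input.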

Obstacle detection

While it may seem very difficult for a drone to implement obstacle detection and avoidance, the right software reduces this to a more manageable problem. One software technology that we consider well suited is the 'OctoMap' framework. It is an efficient probabilistic 3D mapping framework written in C++ that is based on octrees and is freely available on GitHub.

An octree is a tree-like data structure, as the name implies. (It is assumed that the reader is familiar with a tree data structure. If this is not the case, an explanation can be found [https://xlinux.nist.gov/dads/HTML/tree.html here]). The octree data structure is structured according to the rule: "A node either has 8 children, or it has no children". These children are generally called voxels, and represent space contained in a cubic volume. Visually this makes more sense.


By having 8 children, and using an octree for 3D spatial representation, we are effectively recursively partitioning space into octants. As it is used in a multitude of applications dealing with 3 dimensions (e.g. collision detection, view frustum culling, rendering, ...) it makes sense to use the octree.

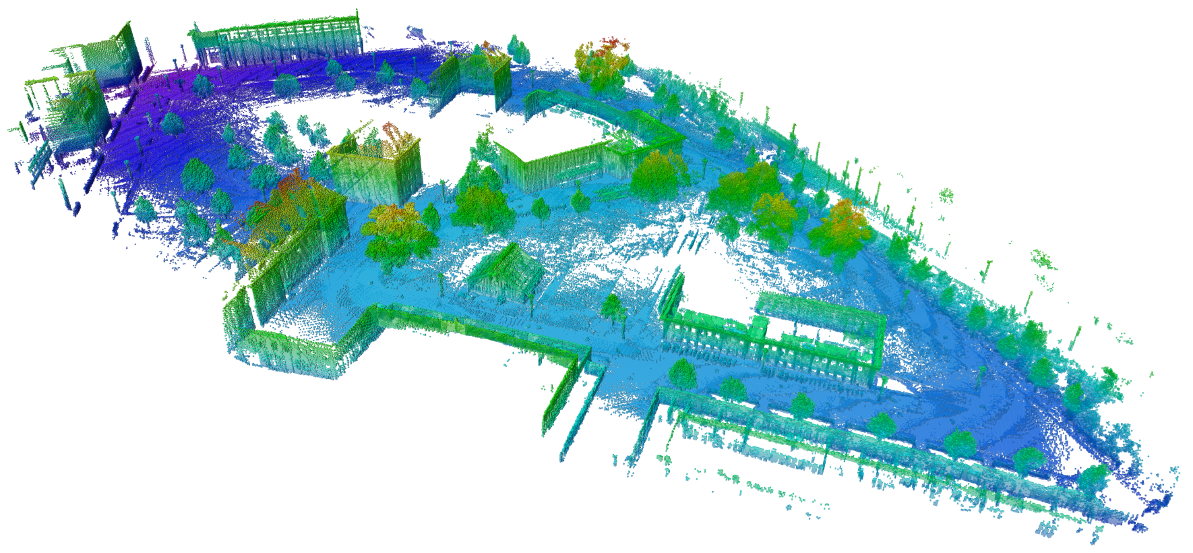

The OctoMap framework creates a full 3-dimensional model of the space around a point (e.g. a sensor) in an efficient fashion. For the inner workings of the framework, we refer you to the original paper. An important point is that one can set the depth and resolution of the framework. As it uses a depth-first-search traversal of nodes in an octree, setting the maximal depth to be traversed can greatly influence the running time. Moreover, the resolution of the resulting mapping can be changed by means of a parameter. Different resolutions are shown in an image to the side.

With the information presented in said paper, it is doable to convert any sort of sensory information (e.g. from a laser rangefinder or lidar) into an Octree representation ready for use. An example of such a representation can be seen in an image to the side.

Using an octree representation, the problem of flying a drone w.r.t. obstacles has been reduced to only obstacle avoidance, as detection is a simple check in the octree representation found by the octomap framework.
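To illustrate why detection becomes a simple lookup, here is a minimal occupancy octree in Python. This is not the OctoMap API: OctoMap is a C++ library that stores occupancy probabilities, whereas this sketch stores a plain occupied flag, and all class and method names are illustrative assumptions.

```python
class OctreeNode:
    """Cubic region of space that is either a leaf or has exactly 8 children."""

    def __init__(self, center, half_size):
        self.center = center        # (x, y, z) of the cube centre
        self.half_size = half_size  # half of the cube's edge length
        self.children = None        # None for a leaf, otherwise a list of 8 nodes
        self.occupied = False       # only meaningful for leaves

    def contains(self, point):
        return all(abs(p - c) <= self.half_size
                   for p, c in zip(point, self.center))

    def _child_index(self, point):
        # One bit per axis selects the octant that contains the point.
        x, y, z = point
        cx, cy, cz = self.center
        return int(x >= cx) | (int(y >= cy) << 1) | (int(z >= cz) << 2)

    def _subdivide(self):
        h = self.half_size / 2.0
        cx, cy, cz = self.center
        self.children = [
            OctreeNode((cx + (h if i & 1 else -h),
                        cy + (h if i & 2 else -h),
                        cz + (h if i & 4 else -h)), h)
            for i in range(8)
        ]

    def insert(self, point, resolution):
        """Mark the voxel of edge length <= resolution containing point as occupied."""
        if not self.contains(point):
            return False
        if 2.0 * self.half_size <= resolution:
            self.occupied = True
            return True
        if self.children is None:
            self._subdivide()
        return self.children[self._child_index(point)].insert(point, resolution)

    def is_occupied(self, point):
        """Obstacle detection: descend to the leaf containing point."""
        if not self.contains(point):
            return False
        if self.children is None:
            return self.occupied
        return self.children[self._child_index(point)].is_occupied(point)


if __name__ == "__main__":
    world = OctreeNode(center=(0.0, 0.0, 0.0), half_size=8.0)
    world.insert((1.2, 2.2, 3.2), resolution=0.5)  # e.g. one lidar return
    print(world.is_occupied((1.3, 2.3, 3.3)))      # same 0.5 m voxel
    print(world.is_occupied((5.0, 5.0, 5.0)))      # free space
```

A coarser resolution stops the subdivision earlier, which gives a shallower tree and faster lookups at the cost of larger voxels.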

Obstacle avoidance

In order for a drone to navigate effectively through an urban environment, it must circumvent various obstacles that such an environment entails. To name some examples: traffic lights, trees and tall vehicles may form an obstruction for the drone to follow its route. To solve this problem, we will apply a computationally simple method utilizing a potential field [22].

The goal of this method is to continuously adjust the drone’s flight path as it moves towards a target, so that it never runs into any obstacles. For this subproblem, we assume the position and velocity of the drone, target and obstacles are known.

The potential field is constructed using attractive and repulsive potential equations, which pull the drone towards the target and push it away from obstacles. The attractive and repulsive potential fields can be summed together to produce a field that guides the drone past multiple obstacles and towards the target.

We consider potential forces to work in the x, y and z dimensions. To determine the drone’s velocity based on the attractive and repulsive potentials, we use the following functions:

[math]\displaystyle{ p_d^{att}(q_d, p_d) = \lambda_1 d(q_d, q_t) + p_t + \lambda_2 v(p_d, p_t)\\ p_d^{rep}(q_d, p_d) = -\eta_1 \dfrac{1}{d^3(q_o, q_d)} - \eta_2 v(p_o, p_d) }[/math]

where [math]\displaystyle{ \lambda_1, \lambda_2, \eta_1, \eta_2 }[/math] are positive scale factors, [math]\displaystyle{ d(q_d, q_t) }[/math] is the distance between the drone and the target and [math]\displaystyle{ v(p_d, p_t) }[/math] is the relative velocity of the drone and the target. Similarly, distance and velocity of an obstacle [math]\displaystyle{ o }[/math] are used.

There may be multiple obstacles and each one has its own potential field. To determine the velocity of the drone, we sum all attractive and repulsive velocities together:

[math]\displaystyle{ p_d(q_d, p_d) = p_d^{att} + \sum_o p_d^{rep} }[/math]
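The summation can be sketched in Python. The equations above are written with scalar distances, so this sketch has to pick a vector interpretation, and that choice is an assumption rather than something stated in the source: the distance term in the attractive part is taken as the displacement vector from drone to target, relative velocity v(a, b) is taken as b - a, and the repulsive distance term is applied along the unit vector from the drone towards the obstacle, so that its negative sign pushes the drone away. The scale factor values are arbitrary.

```python
import math


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(k, a):
    return tuple(k * x for x in a)


def attractive_velocity(q_d, p_d, q_t, p_t, lam1=1.0, lam2=0.5):
    """lam1 * d(q_d, q_t) + p_t + lam2 * v(p_d, p_t), read as vectors."""
    return add(add(scale(lam1, sub(q_t, q_d)), p_t), scale(lam2, sub(p_t, p_d)))


def repulsive_velocity(q_d, p_d, q_o, p_o, eta1=1.0, eta2=0.5):
    """-eta1 / d^3 along the drone-to-obstacle direction, minus eta2 * v(p_o, p_d)."""
    to_obstacle = sub(q_o, q_d)
    dist = math.sqrt(sum(x * x for x in to_obstacle))
    if dist == 0.0:
        raise ValueError("drone and obstacle positions coincide")
    unit = scale(1.0 / dist, to_obstacle)
    return sub(scale(-eta1 / dist ** 3, unit), scale(eta2, sub(p_d, p_o)))


def commanded_velocity(q_d, p_d, q_t, p_t, obstacles):
    """Sum the attractive velocity and one repulsive velocity per obstacle.

    obstacles is a list of (position, velocity) pairs.
    """
    velocity = attractive_velocity(q_d, p_d, q_t, p_t)
    for q_o, p_o in obstacles:
        velocity = add(velocity, repulsive_velocity(q_d, p_d, q_o, p_o))
    return velocity


if __name__ == "__main__":
    drone_pos, drone_vel = (0.0, 0.0, 2.0), (0.0, 0.0, 0.0)
    target_pos, target_vel = (10.0, 0.0, 2.0), (0.0, 0.0, 0.0)
    lamp_post = ((1.0, 1.0, 2.0), (0.0, 0.0, 0.0))  # static obstacle
    print(commanded_velocity(drone_pos, drone_vel, target_pos, target_vel, [lamp_post]))
```

Because the repulsive term falls off with the cube of the distance, only nearby obstacles noticeably bend the path.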

User Tracking

It should be clear that tracking the user is a large part of the software for the Follow-Me drone. We present python code (will be updated at the end of the project) that can be used with a webcam as well as normal video files, which can use various tracking algorithms.

Tracker Comparisons

Here we give a (short) overview of the different trackers used and their performance with respect to obstacles and visibility with downloaded videos. The videos that were used were taken from pyimagesearch. The overview will be on a video-basis, that is, we discuss different trackers in terms of each video used. After discussing the downloaded videos, we will discuss our own footage as well as an export from Unity.

Comparison: Initial (downloaded) --- american_pharoah.mp4

This video is from a horse race. For each tracker we select the horse in first position at the start of the video as object to be tracked. During the video, the horse racers take a turn, making the camera perspective change. There are also small obstacles, which are white poles at the side of the horse racing track.

- BOOSTING tracker: The BOOSTING tracker is fast enough, but cannot deal with obstacles. The moment that there is a white pole in front of the object to be tracked, the BOOSTING tracker fatally loses track of its target. This tracker cannot be used to keep track of the target when there are obstacles. It can, however, be used in parallel with another tracker, sending a signal that the drone is no longer in line of sight with the user.

- MIL tracker: The MIL tracker, standing for Multiple Instance Learning, uses a similar idea as the BOOSTING tracker, but implements it differently. Surprisingly, the MIL tracker manages to keep track of the target where BOOSTING fails. It is fairly accurate, but does not run fast with a measly 5 FPS average.

- KCF tracker: The KCF tracker, standing for Kernelized Correlation Filters, builds on the idea behind BOOSTING and MIL. It reports tracking failure better than BOOSTING and MIL, but still cannot recover from full occlusion. As for speed, KCF runs fast enough at around 30 FPS.

- TLD tracker: The TLD tracker, standing for tracking, learning and detection, uses a completely different approach to the previous three. Initial research suggested that it would perform well; however, the number of false positives is too high to use TLD in practice. In addition, TLD is the slowest of the trackers tested up to this point, at 2 to 4 FPS.

- MedianFlow tracker: The MedianFlow tracker tracks the target in both forward and backward directions in time and measures the discrepancies between these two trajectories. Due to the way the tracker works internally, it follows that it cannot handle occlusion properly, which can be seen when using this tracker on this video. Similarly to the KCF tracker, the moment that there is slight occlusion, it fails to detect and continues to fail. A positive point of the MedianFlow tracker when compared with KCF is that its tracking failure reporting is better.

- MOSSE tracker: The MOSSE tracker, standing for Minimum Output Sum of Squared Error, is a relatively new tracker. It is less complex than the previously discussed trackers and runs considerably faster than the other trackers, at a minimum of 450 FPS. The MOSSE tracker can also easily handle occlusion, and can be paused and resumed without problems.

- CSRT tracker: Finally, the CSRT tracker, standing for Discriminative Correlation Filter with Channel and Spatial Reliability (which thus is DCF-CSRT), is, similar to MOSSE, a relatively new algorithm for tracking. It cannot handle occlusion and cannot recover. Furthermore, it seems that CSRT gives false positives after it has lost track of the target, as it tries to recover but fails to do so.

At this point it has been decided that only the MIL, TLD and MOSSE trackers can be used for actual tracking purposes. For the next video, only these trackers were compared as a final comparison, even though it should be clear that MOSSE is the best one for tracking a user.

Comparison: final (downloaded) --- dashcam_boston.mp4

This video is taken from a dashcam in a car, in snowy conditions. The car starts off behind a traffic light, then accelerates and takes a turn. The object to track has been selected to be the car in front.

- MIL tracker: Starts off tracking correctly. Runs slowly (4-5 FPS). It is not affected by the snow (visual impairment) or by the constantly changing camera angle in the turn. It deals perfectly with the minimal obstacles occurring in the video.

- TLD tracker: It is both slower than and inferior to MIL. There are many false positives. Even a few snowflakes were sometimes selected as object-to-track, making TLD impossible to use as tracker in our use case.

- MOSSE tracker: Unsurprisingly, the MOSSE tracker runs at full speed and can track without problems. It does, however, make the bounding box slightly larger than initially indicated.

Comparison: real-life scenario 1 --- long video

For the long video we have had Noah film Pim as subject to track, outside, near MetaForum on the TU/e campus. Daniel and Nimo will be considered as obstacles in this video, as they purposefully walked between Noah and Pim for the purpose of testing the different tracking algorithms. There are 7 points of interest defined in the video for the purpose of splitting it up into smaller fragments to be more detailed with testing, as the original footage was over 2 minutes long. These 7 points are as follows:

1. Daniel walks in front of Pim.

2. Nimo walks in front of Pim.

3. Everyone makes a normal-paced 180 degrees turn around a pole.

4. Pim almost gets out of view in the turn.

5. Nimo walks in front of Pim.

6. Daniel walks in front of Pim.

7. Pim walks behind a pole.

- BOOSTING tracker: Runs at a speed of around 20 - 25 FPS.

Points of Interest:

1. This is not seen as an obstruction. The BOOSTING tracker continues to track Pim.

2. The tracker follows Nimo until he walks out of the frame. Then, it stays at the place where Nimo would have been while the camera pans.

3. As mentioned, the bbox (the part that shows what the tracker is tracking) stays at the place where Nimo was.

4. At this point the tracker has finally started tracking Pim again, likely due to luck.

5. See point 2 and the notes.

6. See point 2 and the notes.

7. See point 2 and the notes.

Notes: Does not handle shaking of the camera well. It is also bad at recognizing when the subject to track is out of view; the moment there is some obstruction (points 2, 5, 6, 7), it follows the obstruction and tries to keep following it.

- MIL tracker: Runs at a speed of around 10 - 15 FPS.

Points of Interest:

1. The tracker follows Daniel for a second, then goes back to the side of Pim, where it was before.

2. The tracker follows Nimo until he walks out of frame. Then it follows Daniel as he is closer to the bbox than Pim.

3. The tracker follows Daniel.

4. The tracker follows Daniel.

5. The tracker follows Nimo until he is out of view.

6. The tracker follows Daniel until he is out of view.

7. The tracker tracks the pole instead of Pim.

Notes: The bbox seems to be lagging behind in this video (it is too far off to the right). Similarly to BOOSTING, MIL cannot deal with obstruction and is bad at detecting tracking failure.

- KCF tracker: Runs at a speed of around: 10 - 15 FPS

Points of Interest:

1. The tracker notices this for a split second and outputs tracking failure, but recovers easily afterwards.

2. The tracker reports a tracking failure and recovers.

3. No problem.

4. No problem.

5. The tracker does not report a tracking failure. This was not seen as an obstruction.

6. The tracker does not report a tracking failure. This was not seen as an obstruction.

7. The tracker reports a tracking failure. Afterwards, it recovers.

Notes: none.

- TLD tracker: Runs at a speed of around: 8 - 9 FPS.

Points of Interest:

1. TLD is not affected.

2. TLD started tracking Daniel before Nimo could come in front of Pim, then switched back to Pim after Nimo was gone.

3. TLD produces false positives such as water on the ground, random bushes and just about anything except Pim.

4. See notes.

5. See notes.

6. See notes.

7. See notes.

Notes: The bbox immediately focuses only on Pim's face. Then it jumps to Nimo's pants. It switches between Nimo's hand, Pim's cardigan and Pim's face continuously, even before point 1. At points 4 through 7 the TLD tracker is all over the place, except on Pim.

- Medianflow tracker: Runs at a speed of around: 70 - 90 FPS

Points of Interest:

1. No problem.

2. It tracks Nimo until he is out of view, then starts tracking Daniel as he is closer, similar to MIL.

3. It tracks Daniel.

4. It tracks Daniel.

5. It reports a tracking error, only to return to tracking Daniel.

6. It keeps tracking Daniel until he is out of view, then reports a tracking error for the rest of the video.

7. Tracking error.

Notes: The bbox becomes considerably larger when the perspective changes (e.g. Pim walks closer to the camera). Then, it becomes smaller again but stops focusing on Pim and reports tracking failure.

- MOSSE tracker: Runs at a speed of around: 80 - 100 FPS

Points of Interest:

1. It is not affected.

2. It follows Nimo.

3. It stays on the side.

4. It is still on the side.

5. It follows Nimo.

6. It had stayed on the side and tracked Daniel for a split second.

7. It tracks the pole.

Notes: Interestingly, MOSSE does not perform well in a semi real-life scenario. It is bad at detecting when it is not actually tracking the subject to track.

- CSRT tracker: Runs at a speed of around: 5 - 7 FPS

Points of Interest:

1. It is not affected.

2. It follows Nimo, then goes to the entire opposite of the screen and tracks anything in the top left corner.

3. Tracks anything in the top left corner.

4. Tracks anything in the top left corner.

5. Tracks Nimo, then keeps tracking the top left corner.

6. It was at the top right.

7. It goes to the top left.

Notes: Deals badly with obstruction. Tends to track the top left and right corners of the screen.

Comparison: real-life scenario 2 --- short video

The short video is simply Pim walking in a straight line. We have added this video, as we saw after the fact that in the first one we had filmed, Pim was not completely in the video.

- BOOSTING tracker: Runs at a speed of around: 25 - 30 FPS

Can surprisingly deal with having Pim's head cut off in the shot. Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

- MIL tracker: Runs at a speed of around: 8 - 10 FPS

Can somewhat deal with having Pim's head cut off in the shot, but does not reset the bbox to keep tracking his head as well as his body. Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

- KCF tracker: Runs at a speed of around: 12 - 13 FPS

Can deal with having Pim's head cut off in the shot and nicely resets bbox to include head afterwards. Can deal with Nimo walking in front and reports tracking failure.

- TLD tracker: Runs at a speed of around: 8 - 10 FPS

Cannot deal with having Pim's head cut off in the shot (it tracks the stone behind him). Can deal with Nimo walking in front, but does not report tracking failure.

- Medianflow tracker: Runs at a speed of around: 60 - 70 FPS

Can deal with having Pim's head cut off in the shot, but seems to be bad at tracking in general (again the bbox is off on the right side). Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

- MOSSE tracker: Runs at a speed of around: 70 - 80 FPS

Can deal with having Pim's head cut off in the shot and nicely resets the bbox to include the head afterwards. Fails to follow when Nimo walks in front of Pim (i.e. it follows Nimo).

- CSRT tracker: Runs at a speed of around: 4 - 6 FPS

Can deal with having Pim's head cut off in the shot and nicely resets bbox to include head afterwards. Shortly switches to Nimo when he walks in front of Pim, but then switches back.

Tracker conclusion

For the downloaded video sources, it is clear that the MOSSE tracker is the superior tracker for the purpose of tracking a user. On the remaining (downloaded) videos, which are drone.mp4, nascar_01.mp4, nascar_02.mp4 and race.mp4, the MOSSE tracker can track our selection almost without problems. It should be noted that when a race car is driving at high speed and the camera pans quickly, MOSSE might make the bounding box larger than it should be.

For our own footage however, we come to a different conclusion. The KCF tracker outperforms MOSSE in terms of tracking capabilities as well as notifying when the user is not in view anymore. Since KCF only has problems with complete obstruction of the subject to track, we have decided to run two trackers at the same time. These will be KCF and MOSSE. In the code, we manually reset the bounding box of the MOSSE tracker to the most recent one of KCF whenever occlusion occurs. This way, MOSSE will be used for tracking in full occlusion and KCF for all other cases with MOSSE merely as a backup. Since MOSSE runs in real-time, running both at the same time will have little to no impact on the running time of KCF.
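The fallback logic can be sketched independently of any particular tracking library. The sketch below assumes tracker objects with `init(frame, bbox)` and `update(frame)` methods, where `update` returns an `(ok, bbox)` pair as OpenCV's trackers do. The `DualTracker` and `ScriptedTracker` classes and the `make_backup` factory are hypothetical names for illustration and are not the project's actual code; a failure reported by the primary tracker is treated here as occlusion.

```python
class DualTracker:
    """Use a primary (KCF-like) tracker, falling back to a backup (MOSSE-like) one."""

    def __init__(self, primary, make_backup):
        self.primary = primary
        self.make_backup = make_backup  # callable returning a fresh backup tracker
        self.backup = None
        self.last_primary_bbox = None

    def update(self, frame):
        ok, bbox = self.primary.update(frame)
        if ok:
            # Primary is tracking: remember its box and drop any stale backup.
            self.last_primary_bbox = bbox
            self.backup = None
            return True, bbox, "primary"
        # Primary reports failure: start the backup from the last good box.
        if self.backup is None and self.last_primary_bbox is not None:
            self.backup = self.make_backup()
            self.backup.init(frame, self.last_primary_bbox)
        if self.backup is not None:
            ok, bbox = self.backup.update(frame)
            if ok:
                return True, bbox, "backup"
        return False, None, "lost"


class ScriptedTracker:
    """Stand-in tracker that replays a fixed list of (ok, bbox) results."""

    def __init__(self, results):
        self.results = list(results)
        self.init_bbox = None

    def init(self, frame, bbox):
        self.init_bbox = bbox

    def update(self, frame):
        return self.results.pop(0)


def demo():
    """Simulate two occluded frames in the middle of a four-frame clip."""
    primary = ScriptedTracker([(True, (10, 10, 50, 80)), (False, None),
                               (False, None), (True, (14, 10, 50, 80))])
    backup = ScriptedTracker([(True, (11, 10, 50, 80)), (True, (12, 10, 50, 80))])
    tracker = DualTracker(primary, make_backup=lambda: backup)
    sources = [tracker.update(frame=None)[2] for _ in range(4)]
    return sources, backup.init_bbox


if __name__ == "__main__":
    print(demo())
```

With real trackers, the factory would create a new backup tracker each time, so that the backup always starts from the most recent bounding box reported by the primary tracker.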

Line of Sight

It is important for the drone to stay in line of sight of the user. We need to detect when this is no longer the case. Instead of designing a novel algorithm, we can use one of the trackers discussed in the Tracker Comparisons section that cannot handle occlusion and is good at failure reporting. As can be read in its conclusion, KCF is a good candidate. For the downloaded videos, MedianFlow also worked relatively well, but since KCF outshines MedianFlow in our own footage, and because we are already running it, we have decided to simply use it for deciding when the user is out of line of sight.