Implementation MSD19

Getting started with Crazyflie 2.X

Need introduction here.

Manual Flight

This section explains in detail how to set up a Crazyflie 2.X drone, from hardware assembly to the first manual flight. We used Windows for the initial software setup for manual flight; Linux (Ubuntu 16.04) was preferred for autonomous flight. The following additional hardware is required for the first manual flight:

- Bitcraze Crazyradio PA USB dongle

- A remote control (PS4 controller or any USB gaming controller)

This link was used to get started with assembly and setting up the initial flight requirements

NOTE: Make sure that the Crazyflie is running the latest firmware. The steps to flash the Crazyflie with the latest firmware are discussed here.

The Crazyflie client is used for controlling the Crazyflie, flashing firmware, setting parameters and logging data. The main UI is built up of several tabs, each used for a specific functionality. We used this link to get started with the first manual flight of the drone and to develop an understanding of the Crazyflie client. Assisted flight mode, which is explained here, is recommended as the initial manual testing mode. Before assisted flight mode can be used, however, the Loco Positioning System needs to be set up as explained in the next section.

Autonomous Flight

To get started with autonomous flight, the pre-requisites are as follows:

- Loco Positioning System: Please refer to “Loco Positioning System Section” for more details. The link explains the whole setup of loco-positioning system.

- Linux (Ubuntu 16.04)

- Python scripts: We used the autonomousSequence.py file from the Crazyflie Python library and found this script to be a good starting point for autonomous flight.

This link was also used to develop a comprehensive understanding of autonomous flight. After setting up the LPS, the autonomousSequence.py script was modified to meet the desired deliverables.

Modifications

The following modifications were made in the autonomousSequence.py file.

- Data Logging of Crazyflie Position from Path Planning and Simulator to CSVs

- Path Planning

These modifications can be observed by comparing the original autonomousSequence.py file with our file here. The differences are easy to see and understand. This is the git repository of our work. The modified autonomousSequence.py file can be found in the Motion Control folder.
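As an illustration of the data logging modification, the following is a minimal sketch of writing position samples to a CSV file. It is not the code from our repository: the column layout `t, x, y, z` and the function name `write_position_log` are assumptions made for this example. In the actual script the samples would come from the position log callback of the Crazyflie rather than from a hard-coded list.

```python
import csv
import io

# Hypothetical column layout; the real log may use different columns.
FIELDS = ["t", "x", "y", "z"]


def write_position_log(rows, stream):
    """Write (t, x, y, z) samples to a CSV stream, preceded by a header row."""
    writer = csv.writer(stream)
    writer.writerow(FIELDS)
    for t, x, y, z in rows:
        # Fixed precision keeps the file compact and easy to compare.
        writer.writerow([f"{t:.3f}", f"{x:.3f}", f"{y:.3f}", f"{z:.3f}"])


# Example samples (made up for illustration): time in s, position in m.
samples = [(0.0, 0.0, 0.0, 0.4), (0.1, 0.05, 0.0, 0.4)]
buffer = io.StringIO()
write_position_log(samples, buffer)
log_text = buffer.getvalue()
```

To write to disk instead of an in-memory buffer, the same function can be called with a file opened as `open("positions.csv", "w", newline="")`.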

Troubleshooting

The drone's attitude parameters, i.e. roll, pitch and yaw, may not be stable. The solution is to trim these parameters, as described under the Firmware Configuration headline in the following link. For autonomous flights, issues with updating the anchor nodes and assigning them identification numbers may appear in Windows; these can be solved by updating the nodes in Linux.

Localization

Need introduction here.

Local Positioning System (Loco Deck)

The core of the localization tooling used for the drone is the purpose-built Loco Positioning System (LPS) by Bitcraze, which provides a complete hardware and software solution. The system comes with documentation on installation, configuration, and technical details in the online directory. Before starting, the following preparations were made:

- 1 Loco positioning deck installed on Crazyflie

- 8 Loco positioning nodes positioned within the room

- Node fixed on stands 3D printed from makefile

- USB power source for anchors

- Ranging mode chosen as TWR

The overall hovering accuracy observed during hardware testing matches the +/- 5 cm range stated in the manufacturer's measurements.

Laser Range and Optical Sensor (Flow Deck 2.0)

An additional positioning component is the Flow deck, which combines a VL53L1x Time-of-Flight sensor that measures the distance to the ground with a PMW3901 optical flow sensor that measures planar movement over the ground. The expansion deck is plug and play; technical information is nevertheless available.

Loco Deck + Flow Deck

Sensor information is integrated automatically, as the drone firmware can identify which types of sensors are available, and the governing controller handles the data accordingly. To fuse the additional sensor input, the Crazyflie uses an Extended Kalman Filter (EKF). The low-level information flow from component to component can be seen below.

Recommendations and Troubleshooting

- It is vital to operate the sensors in suitable environmental conditions: a non-reflective floor surface for the Flow deck laser ranger, a textured surface for the Flow deck camera/laser ranger, and an empty room for clear radio signalling to the LPS UWB nodes.

- When stacking the battery, Flow deck and LPS deck, it is better to use long pins so that power and signals are distributed properly. Otherwise, the contact pins for mounting these devices are only marginally sufficient and at times will not power the devices properly.

- Stable flight can be achieved if at least 4 anchors are identified. To make sure there is sufficient coverage over the (Tech United) field, it is best to use 8 anchors. With this setup there is a better chance that range data will be continuously pinged from the Crazyflie 2.0 deck and back.

- When the LPS and Flow deck are used together, especially in TWR mode, the Loco deck crashes after a while. The issue has also been raised in a ticket (Issue #368) and in another one. If encountered, re-flash the drone.

- While testing, it was sometimes observed that beacons at ground level do not communicate with the client at the start of a connection. A ticket has been opened in the forum, which concludes with some alternative solutions.

- Some parameter settings in the Crazyflie firmware could be changed for testing in cases where the EKF is confused by conflicting data from the Flow deck and the LPS at the same time. One thing to try is increasing the standard deviation of the flow sensor, the range sensor, and the UWB data.

Path Planning

Algorithm 1

Assumptions

Solution

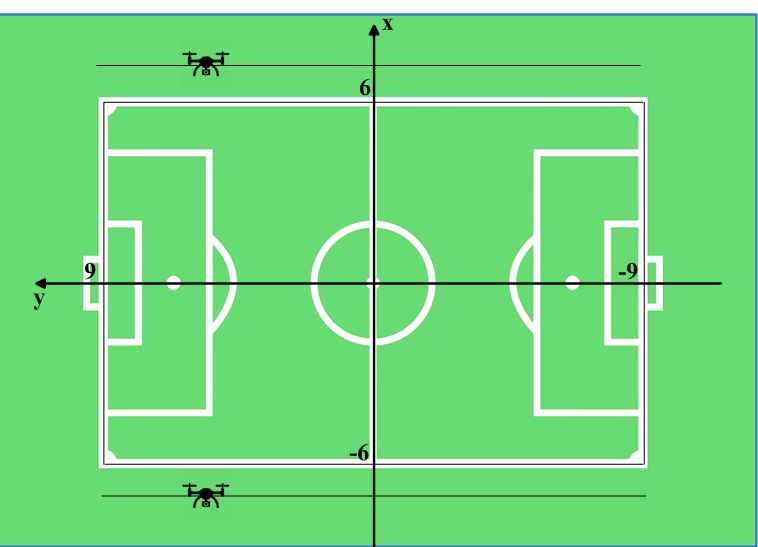

This is an 18 m x 12 m football pitch. In the x-y coordinate system, the origin is at the center of the pitch. The references for the x and z coordinates of the drone are xd_r (which can be set to 7 or -7) and zd_r.

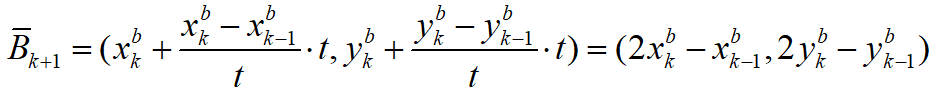

First of all, the predicted position of the ball is computed as below:

At sampling time k-1, the ball position is Bk-1 = (xbk-1, ybk-1)

At sampling time k, the ball position is Bk = (xbk, ybk), and the drone position is Dk. Note that xd_r is the reference of the drone’s x-coordinate and is a constant. In addition, the reference of the drone’s z-coordinate zd_r is also a constant.

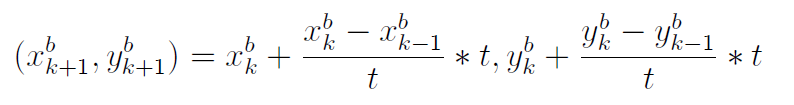

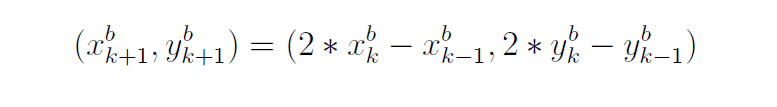

The predicted ball position at sampling time k+1 is denoted as Bk+1 = (xbk+1, ybk+1).

The path planning method is described as follows:

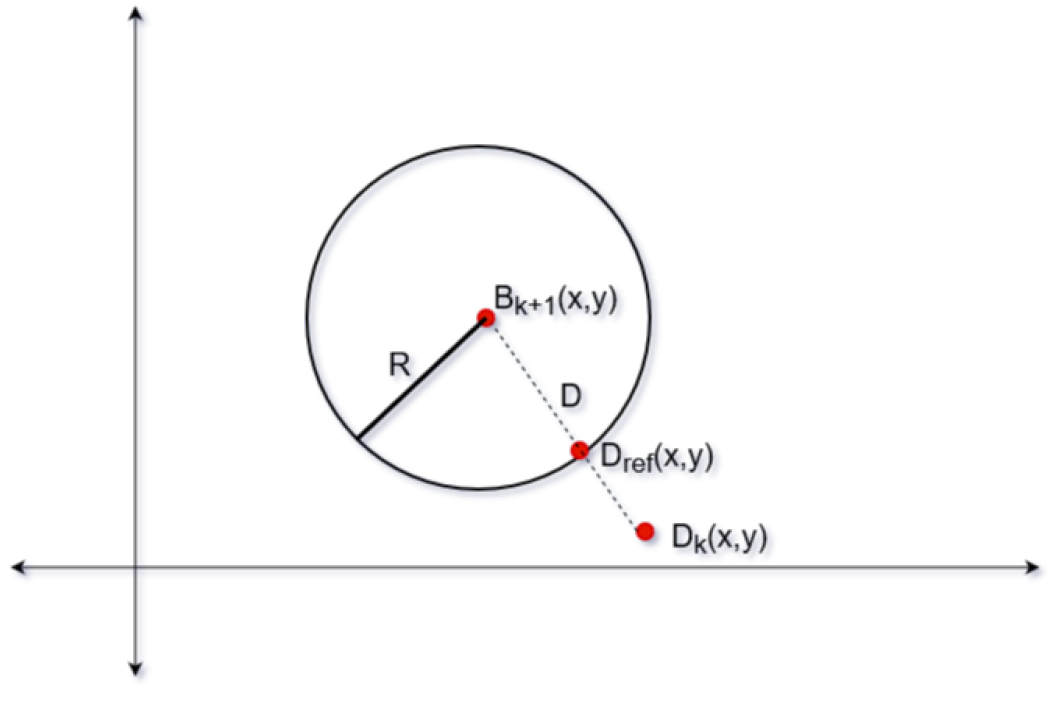

We assume there is a circle, with a radius of R, whose centre is Bk+1. The distance between Bk+1 and Dk is

Algorithm 2

The objective of this path planning algorithm is to find the shortest path for the drone while keeping the ball in the field of view. This is to minimise the mobility of the drone under given performance constraints to ensure a stable view from the onboard camera. This is done by keeping the ball's position as reference and thereafter, using the ball velocity to predict the future ball position.

Assumptions

- The altitude of the drone, zdrone, is the same throughout the game.

- The ball is always on the ground, i.e., zball = 0.

- The drone encounters no obstacles at altitude zdrone.

Solution

Let R and D be the radius of the circle around the ball and the distance of the drone from the ball, respectively. The drone holds its current position while D < R and tracks the ball once D exceeds R, as shown in the figure below.

Let Bk-1 = (xbk-1, ybk-1) be the position of the ball at sampling time k-1 and Bk = (xbk, ybk) be the position of the ball at sampling time k. The predicted position of the ball is then given by the following equation:

where t is the time taken by the drone to travel from position Bk-1 to Bk. Distance, D is then defined as follows:

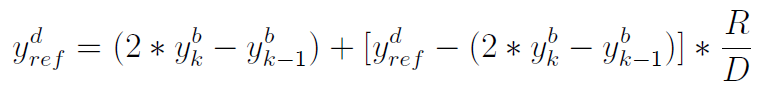

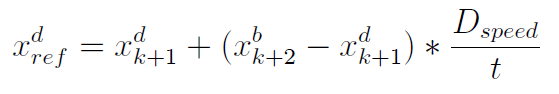

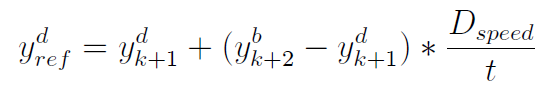

Dref = (xdref, ydref) are the reference coordinates that the drone should reach within the time period t, given by the following equation:

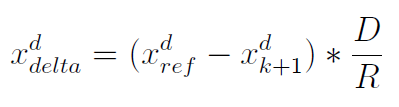

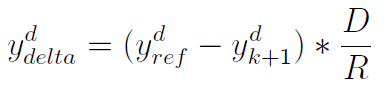

The error between the reference position, Dref and the current position, Dk is estimated using the performance constraints of the Crazyflie and fed back to the algorithm to compute Dk+1. The error terms are estimated as follows:

Therefore, the reference position, Dref is computed as follows:

The drone reference position is then updated at the sampling frequency of the Visualiser, which also provides the ball position in the soccer field.
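The hold-and-track behaviour can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the algorithm as implemented: the ball is predicted with a constant-velocity extrapolation over one equal sampling interval, the drone reference is placed on the edge of the circle of radius R when the drone is outside it, and the error terms based on the Crazyflie performance constraints are left out. The function names `predict_ball` and `drone_reference` are hypothetical.

```python
import math


def predict_ball(b_prev, b_curr):
    """Constant-velocity prediction of the ball one sample ahead.

    Assumes equal sampling intervals, so B(k+1) = B(k) + (B(k) - B(k-1)).
    """
    return (2 * b_curr[0] - b_prev[0], 2 * b_curr[1] - b_prev[1])


def drone_reference(d_curr, b_pred, radius):
    """Return ((x_ref, y_ref), yaw_deg) for the drone.

    The drone holds its position while it is within `radius` of the
    predicted ball position; otherwise it moves along the straight line
    towards the ball until it reaches the edge of the circle.
    """
    dx = b_pred[0] - d_curr[0]
    dy = b_pred[1] - d_curr[1]
    dist = math.hypot(dx, dy)
    # Yaw that points the drone at the predicted ball position.
    yaw_deg = math.degrees(math.atan2(dy, dx))
    if dist <= radius:
        return d_curr, yaw_deg
    scale = (dist - radius) / dist
    return (d_curr[0] + scale * dx, d_curr[1] + scale * dy), yaw_deg


# Example with made-up positions in metres.
b_next = predict_ball((0.0, 0.0), (1.0, 0.5))
ref, yaw = drone_reference((0.0, 0.0), (3.0, 4.0), 2.0)
```

Moving only to the edge of the circle, rather than to the ball itself, is one way to obtain the short path that the objective asks for while keeping the ball within a fixed distance of the drone.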

Simulation

Need introduction here.

Previous Game Data Extraction

To demonstrate the performance of our system, we planned to fly the drone both in the virtual world and the physical world. The virtual drone is for demonstrating that the output from our path planner is practical, and the physical drone is for demonstrating our capability of flying the drone autonomously according to the output of the path planner.

The whole scenario is as follows. In the virtual world, a previous Tech United game will be played back, and the drone will fly around the field according to the output of the path planner. The monitor will show what the camera on the drone is filming. In the physical world, a drone will also fly according to the commands from the path planner, but no game will be played.

To realize the above-mentioned scenario, we need to extract the ball position from previous game data and transfer it to the path planner. In the beginning, we tried to retrieve the ball position from the data file loaded when replaying the previous game. After a few attempts, it turned out to be more straightforward to save the ball position into a matrix at the point where it is used in the original program. A function was built to implement this.

Drone Camera View Control

To show what the camera is supposed to be filming in the virtual world, we need the position and yaw angle of the drone and the pitch angle of the camera as mounted on the drone. With these inputs defined, we built a function that reads these data from a CSV file and changes the displayed view in the simulator accordingly.
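One way to turn these inputs into a view is to compute the point on the ground at which the camera is looking. The sketch below is written in Python for illustration only and is not the simulator function itself. It assumes that yaw is measured counter-clockwise from the x-axis, that the camera pitch is measured downward from the horizontal, and that the ground is the plane z = 0; the function name `camera_ground_target` is hypothetical.

```python
import math


def camera_ground_target(x, y, z, yaw_deg, pitch_deg):
    """Point where the camera's optical axis meets the ground plane z = 0.

    Assumptions: yaw is counter-clockwise from the x-axis, pitch is the
    downward tilt from horizontal and must lie in (0, 90] degrees.
    """
    if not 0.0 < pitch_deg <= 90.0:
        raise ValueError("pitch must be in (0, 90] degrees to hit the ground")
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    # Horizontal distance from the drone to the viewed ground point.
    ground_range = z / math.tan(pitch)
    return (x + ground_range * math.cos(yaw),
            y + ground_range * math.sin(yaw),
            0.0)


# Example: drone 1 m above the origin, facing +x, camera tilted 45 degrees down.
target = camera_ground_target(0.0, 0.0, 1.0, 0.0, 45.0)
```

The drone position and this target point together define a viewing direction, which is a common way to specify a camera pose in 3D viewers.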

Perception

The fundamental goal is being able to referee the game of football. While testing for this project is a two vs. two game between two robots at the Tech United pitch facilities, the ultimate vision is that the system could be scaled and adapted for use in a real outdoor game between human beings. From the experience of previous projects, the client imposed the direction that the developed system uses drones with cameras. The main benefits are the relative convenience of setting up and the assumption that this dynamic capability would save cost by allowing fewer cameras for comparable camera coverage.

The work relating to the camera system was divided into two workstreams so that they could proceed concurrently. The first was the selection and integration of the hardware, such that it would not adversely affect the flight dynamics of the drone. Owing to the small size of the Crazyflie 2 drone, and the additional safety issues of using the larger drone variant with extra load capacity, we were restricted to a small FPV camera because of latency issues with the other options, as discussed in Yussuf's section. While this option allows a live camera feed to an external referee, it seemingly does not allow further processing of those images without significant delay. It would therefore only ever allow the most basic of systems, with humans firmly in the driving seat of all decision making. While this simplification was deemed an acceptable compromise for the scope of this project, it is assumed that the project will be continued by others, so this is an essential factor to consider.

In this project, we also assumed that the positions of the ball and players are known, as this data was taken from previous games recorded from the server. In a real game, this would not be available, and mechanisms to determine this input must be part of the system itself. To address both of those points, the second workstream focused on obtaining information from the data captured in the video streams. As a first step in that direction, the specific objective was to obtain the ball position so that it could be input into Song and Ankita's control software and also update the simulator views. At this stage, the player positions have not been considered, as this information is only required for more granular decisions.

Workstream 1

The initial step in developing this interpretation aspect of the camera system was to construct a scale model of the real pitch. For logistical reasons, such as the availability of the actual pitch and its distance from our office, this proved to be a useful and convenient tool for early-stage prototyping of ideas. From this, we determined a first-attempt strategy of a fixed camera, independent of the drone, that captures the ball and the whole pitch. By computing the homography, we could then determine the world coordinates of the ball with respect to the pitch from the image space.

Several issues were identified during scale model testing, such as bright spots, occlusions, and changes in lighting conditions. To overcome these issues, the first step is to apply a mask to the image; this blocks out the background so that only the pitch area is considered. At this early stage, occlusions are simply accepted, and in such cases the system is not able to provide a decisive location for the ball. This was deemed acceptable because the dynamics of the game mean that occlusions are usually temporary, and the drone only moves after a quite significant change in ball position anyway. From testing on the model and the actual pitch, it was found that occlusions are not as substantial as expected. Of course, the use of extra cameras would further reduce this impact. The effect of changing light conditions was significantly reduced by using Hue, Saturation, Value (HSV) color rather than RGB color. For this first proof of concept in the specific test environment, a bright orange ball was used, so we could expect high contrast with all other actors on the pitch; in addition, the light conditions at the Tech United facilities are well controlled.

To improve system quality, several filters are applied to the image, such as erosion and dilation, and the position of the ball in the image is identified by blob detection and taking the centroid.
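The core of this pipeline (HSV thresholding, centroid, homography) can be sketched with the Python standard library alone. This is a simplified illustration, not our implementation: the HSV thresholds, the tiny test image and the homography matrix `H` are made up for the example, and erosion, dilation and proper blob detection are omitted. In practice an image processing library such as OpenCV would be used for these steps and for estimating the homography from known pitch points.

```python
import colorsys


def is_ball_pixel(rgb, hue_range=(0.02, 0.12), min_sat=0.5, min_val=0.5):
    """True if an (R, G, B) pixel (0-255) lies in the assumed orange HSV band."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val


def ball_centroid(image):
    """Centroid (u, v) of all ball-coloured pixels, or None if there are none.

    `image` is a list of rows, each row a list of (R, G, B) tuples.
    """
    hits = [(u, v)
            for v, row in enumerate(image)
            for u, pixel in enumerate(row)
            if is_ball_pixel(pixel)]
    if not hits:
        return None  # e.g. the ball is occluded
    n = len(hits)
    return (sum(u for u, _ in hits) / n, sum(v for _, v in hits) / n)


def pixel_to_pitch(H, u, v):
    """Map pixel (u, v) to pitch coordinates with a 3x3 homography H."""
    xw = H[0][0] * u + H[0][1] * v + H[0][2]
    yw = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return (xw / w, yw / w)


# Made-up 4x3 test image: green pitch with two orange ball pixels.
GREEN, ORANGE = (0, 128, 0), (255, 128, 0)
image = [
    [GREEN, GREEN, GREEN, GREEN],
    [GREEN, ORANGE, ORANGE, GREEN],
    [GREEN, GREEN, GREEN, GREEN],
]
# Made-up homography for illustration only.
H = [[2.0, 0.0, -3.0], [0.0, -2.0, 2.0], [0.0, 0.0, 1.0]]
centroid = ball_centroid(image)
ball_xy = pixel_to_pitch(H, *centroid)
```

Returning `None` when no pixel matches mirrors the decision above to accept occlusions and simply report no ball position in those frames.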

image of the model pitch in the office here

It was found that the most convenient option would be to use the camera built into a mobile phone. From a practical point of view, this also has the benefit that everyone tends to carry a mobile phone with them. To do this, the Epoccam app was used, which allows the mobile phone to be used as a wireless webcam. It was found that if both the phone and the laptop were connected to the Eduroam network, there was no discernible latency issue. This was also tested using a mobile data hotspot, which also performed very well. To mount the mobile phone, a camera mount was constructed from a selfie stick and a 3D-printed base that allowed it to be secured to a scaffolding pole at the pitch site.

On the pitch, testing showed that the delay and accuracy were both reasonable. At the extreme corners of the pitch from the camera, the system placed the ball to within one meter on a first attempt; this was deemed adequate for informing the drone position. At the center of the pitch, the precision was within 20 cm. Due to the coronavirus, further testing and refinement were not possible. There is, however, scope for plenty of improvement should it be needed. The camera was placed only 2 m off the ground, which means that, at the extremes of the field, the pitch line angles are very acute. Also, the image quality was set to low during that particular test. The testing that was done at the higher resolution showed no noticeable increase in latency.

Workstream 2

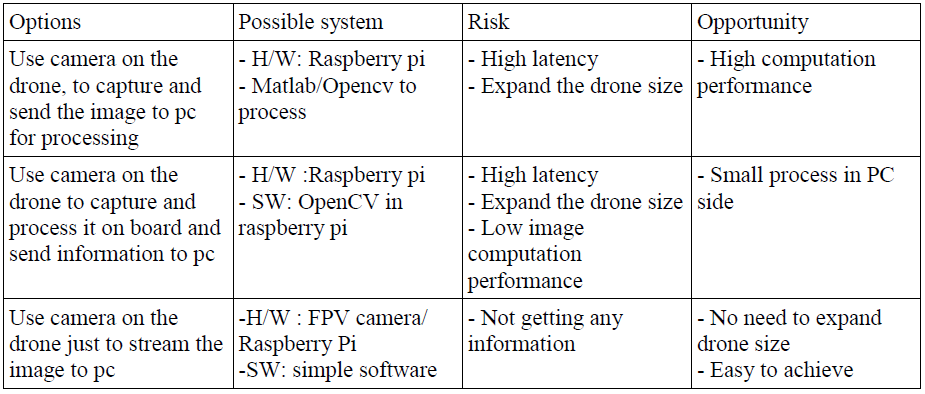

A vision system is required to obtain information from the actual field. During the project, three options were considered and analyzed; see the table below.

For the first option, we investigated the communication performance between a Raspberry Pi and a PC. The communication is IP-based. To verify the performance, several settings were investigated, such as changing from TCP to UDP and using a different Wi-Fi router. The results showed high latency, with the fastest being 0.6 s. This shows that wireless communication is not reliable enough to provide field information (e.g. ball location), hence we left this option out. The second option could be risky and would take a lot of time, since it adds more work on the drone system (e.g. it needs to be expanded). Hence, we left this option out as well. We concluded that the only requirement for the vision system is to stream video. Therefore, an FPV camera attached to a basic Crazyflie drone is the vision system implemented in our project. See the figure below.

Final vision system setup:

- Wolfwhoop WT05FPV Camera

- Skydroid 5.7 dual receiver

Operation:

- Connect the power cable of the FPV camera to the Crazyflie battery.

- Connect the Skydroid receiver to the PC and run any webcam software, or select it as the second webcam for OpenCV.

- Set the radio frequency of the FPV camera to the Skydroid receiver frequency.

Integration

This task integrates all the subsystems according to the defined strategies/deliveries. We have two strategies/deliveries:

Path Planning and Crazyflie

Path Planning and Simulator

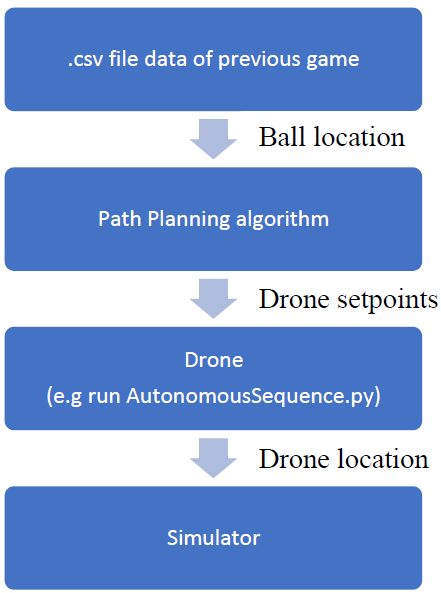

The goal of this strategy is to verify the path planning algorithm and the drone behavior for the given setpoints (e.g. response time, maximum speed).

The plan is to extract the ball location from previous game data (e.g. to a .csv file) and translate it into drone setpoints, which are equal to the camera location in the simulator. After the path planning is verified, these drone setpoints are implemented on the Crazyflie.

This strategy is the first delivery of the project, in which the drone flies on the actual field based on the generated setpoint sequence while the drone location is fed to the simulator, which plays back the game data. The expected result is that the camera location in the simulator is synchronized with the ground truth of the drone location, and hence the goals are achieved.

This integration task involves the drone, simulator, and path planning subsystems. The following sub-tasks are addressed:

- Transform the reference frame between simulator and drone. Since the two use different reference frames, a coordinate transformation is required between the Loco Positioning System (LPS) and the simulator. We decided to add this functionality to the drone system: the drone transforms each setpoint to LPS coordinates and transforms its ground-truth position to simulator coordinates, which are fed to the simulator as the camera position.

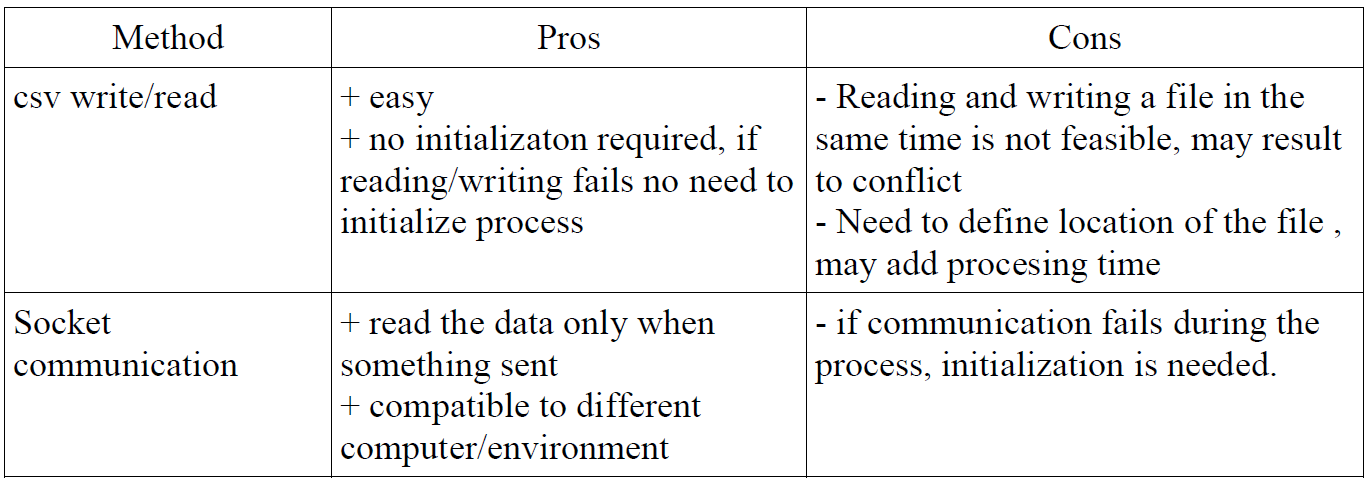

- Communication between drone and simulator. The simulator runs in a MATLAB environment while the drone runs in a Python environment. To transfer data from the drone to the simulator, two options were considered:

- The drone writes a file (e.g. a CSV file) containing its actual location, and the simulator reads the file as the simulator camera position.

- Use socket communication to communicate between the processes.
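The two sub-tasks can be illustrated with a short Python sketch on the drone side. It is a hedged example rather than our actual code: it assumes that the simulator and LPS frames differ only by a planar rotation and translation, that the pose is sent as a plain comma-separated text line over UDP, and all names (`make_transform`, `encode_pose`, `send_pose`) as well as the example rotation and offset are hypothetical.

```python
import math
import socket


def make_transform(theta_deg, tx, ty):
    """Build the simulator->LPS mapping and its inverse.

    Assumes the frames differ by a rotation of theta_deg about the
    vertical axis followed by a translation (tx, ty).
    """
    c = math.cos(math.radians(theta_deg))
    s = math.sin(math.radians(theta_deg))

    def sim_to_lps(x, y):
        return (c * x - s * y + tx, s * x + c * y + ty)

    def lps_to_sim(x, y):
        dx, dy = x - tx, y - ty
        # Inverse rotation is the transpose of the rotation matrix.
        return (c * dx + s * dy, -s * dx + c * dy)

    return sim_to_lps, lps_to_sim


def encode_pose(x, y, z, yaw):
    """Serialise a pose as one ASCII line: x,y,z,yaw."""
    return f"{x:.3f},{y:.3f},{z:.3f},{yaw:.1f}\n".encode("ascii")


def send_pose(sock, address, pose):
    """Send one pose to the simulator over an already created UDP socket."""
    sock.sendto(encode_pose(*pose), address)


# Example with a made-up 90 degree rotation and (1, 2) m offset.
sim_to_lps, lps_to_sim = make_transform(90.0, 1.0, 2.0)
setpoint_lps = sim_to_lps(1.0, 0.0)
back_in_sim = lps_to_sim(*setpoint_lps)
message = encode_pose(1.0, 2.0, 0.5, 90.0)
```

A sender would create the socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_pose` once per position update; the receiving side in the simulator would parse the same comma-separated line.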

Both are feasible and were tested with minimal code. The second option has been tested with the Crazyflie running. The pros and cons of the options are as follows:

Perception, Path Planning and Simulator (Not Implemented)

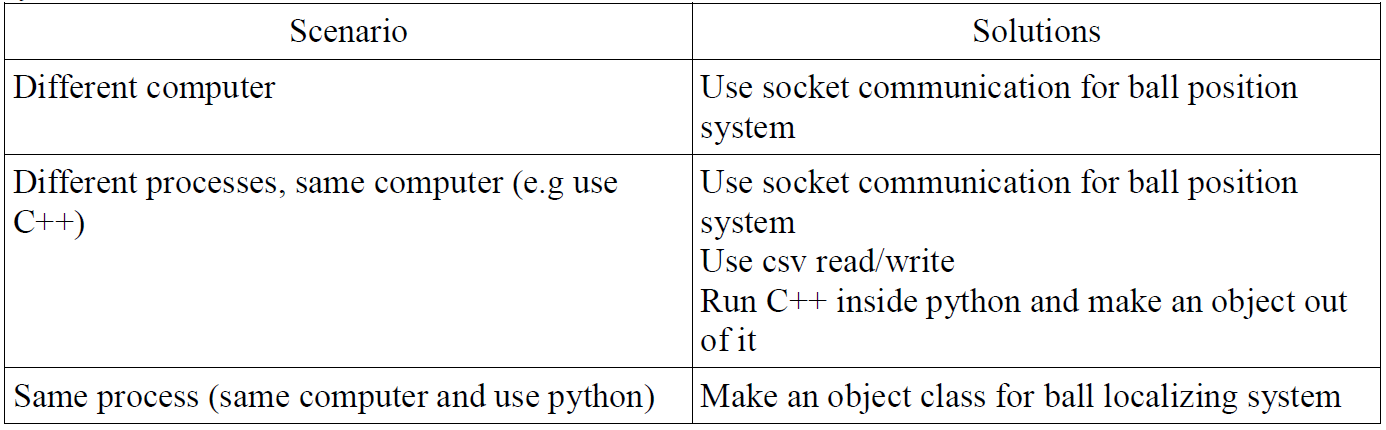

The figure shows what this strategy looks like. In the general planning, we decided to use a system outside the drone to obtain the ball location, based on the camera system considerations. Hence, we use a static camera to feed the ball location into the path planning algorithm, which then generates the drone setpoints.

Since the ball localizing system can be developed on any platform, its integration is considered for different scenarios.


Testing

The path planning algorithms are tested on the Visualiser and on the hardware itself, as follows:

- For a given recorded game, the Simulator provides the ball coordinates, which are then fed into the path planning algorithm. The Python scripts for both algorithms generate a .csv file containing the drone's reference position along with the yaw angle of the drone. This is sufficient information to set a frame of view within the game and visualise the activity of the active players and the ball. More information on the implementation of this method can be found here.

- After the successful implementation of the algorithm on the simulator, the reference drone positions are plugged into the autonomousSequence.py Python script. These points are fed to the drone to keep the ball in the field of view of the drone's camera.
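As a sketch of how the generated file can be turned into a setpoint sequence, the following Python snippet reads a CSV into a list of (x, y, z, yaw) tuples. The column names `x`, `y`, `z`, `yaw` and the function name `load_setpoints` are assumptions for this example; the actual file layout may differ. In the Crazyflie Python library, position setpoints of this form can be sent with `cf.commander.send_position_setpoint(x, y, z, yaw)`, which is not exercised here because it needs a connected drone.

```python
import csv
import io


def load_setpoints(stream):
    """Read x, y, z, yaw columns into a list of (x, y, z, yaw) float tuples."""
    reader = csv.DictReader(stream)
    return [
        (float(row["x"]), float(row["y"]), float(row["z"]), float(row["yaw"]))
        for row in reader
    ]


# Made-up example file contents: positions in m, yaw in degrees.
example = io.StringIO("x,y,z,yaw\n0.0,0.0,0.4,0\n1.0,0.5,0.4,45\n")
sequence = load_setpoints(example)
```

For a file on disk, the same function can be called with `open("setpoints.csv", newline="")` in place of the in-memory example.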

In a real test scenario, the drone would obtain the ball's position using the onboard camera, and the objective of the drone would then be to keep the ball in its field of view.