Firefly Eindhoven - Localization - Top Camera

| (25 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==Software | [[File:Soccer_field.png|thumb|500px|The soccer field as seen by the camera]] | ||

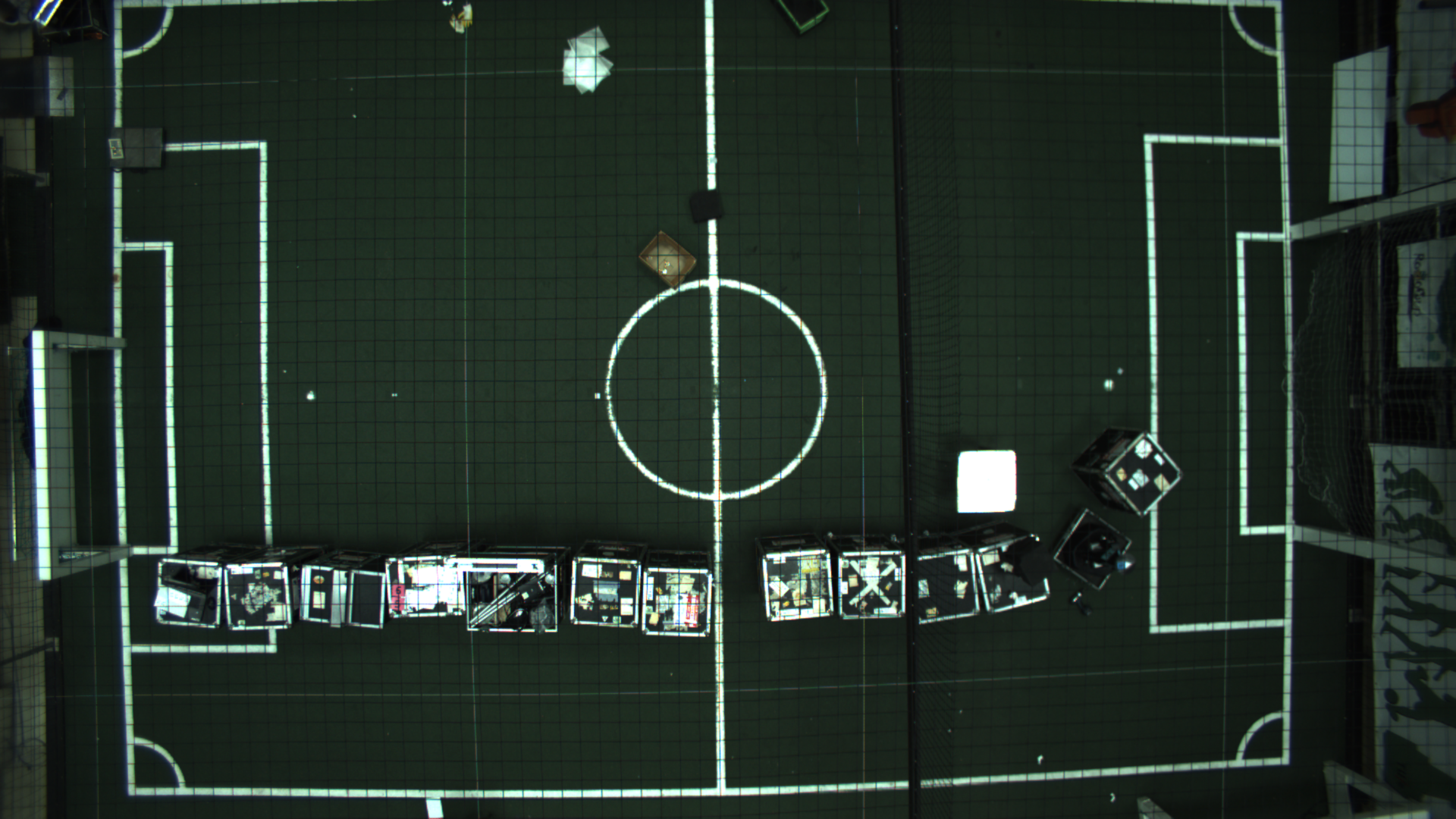

* | The top camera is mounted above the soccer field of the Drone lab and provides a nearly full view of the soccer field. In the Firefly project it is used for localization of drones and ground robots using LED markers on these devices. We have developed software to capture images from the camera, process them and to track moving targets equipped with markers. Compared to the Ultra-wideband and ultrasound technologies a high refresh rate of around 17 FPS is possible, but the detection is less precise and quite unreliable due to reflections and LEDs blocked by the net spanning the soccer field. Further development of the detection algorithm may remedy these deficiencies to some extent. | ||

* | |||

==Hardware== | |||

The camera is manufactured by [https://www.alliedvision.com/en/products/cameras.html Allied Vision] (exact model unknown) and can capture images and live video at 20-30 FPS in Full HD resolution (1920x1080). It is an industrial camera and can be connected to a laptop using the Ethernet cable labeled "Laptop" (ethernet connected cameras are typically referred to as GigE cameras, named after the interface). Allied vision provides the [https://www.alliedvision.com/en/products/software.html Vimba] Software Development Kit (SDK) to interface with the camera in software, you will need to install this before you can use the tracking application. | |||

==Software== | |||

[[File:Class_diagram_Topcam_tracker.png|thumb|700px|Class diagram for the tracker application]] | |||

The topcam-tracker application uses the Vimba SDK to capture live images from the camera, processes these to find the locations of drones and then sends these over UDP for other services to work with the location data. | |||

The source code for the topcam tracker is available on [https://github.com/tue-firefly/topcam-tracker GitHub]. | |||

===Architecture=== | |||

The application is structured as follows: | |||

* Main: the main function is the entrypoint of the program. It parses command line arguments, then instructs the ApiController to open the camera and start the processing. | |||

* ApiController: provides an interface to the VimbaSystem API. It provides the StartContinuousAcquisition function that instantiates the FrameObserver, opens the camera and registers the FrameObserver to receive a callback when new frames arrive. | |||

* FrameObserver: processes new frames as they arrive. It reads the frame buffer provided by the Vimba API into an OpenCV matrix, uses the DroneDetector to locate drones and then sends this data over UDP using the UDPClient. | |||

* DroneDetector: detects individual LEDs in the input image by looking for bright spots, then clusters these into groups and returns a list of DroneStates (position and rotation) derived from the positions of the LEDs in each cluster. The previous states as stored to correlate new drone locations with old ones and transfer the drone ID of the old state to the new one (using a nearest neighbour approach). | |||

* DroneState: structure to describe the state of a drone. It contains a unique ID to identify each drone, the position (x and y coordinates) of the drone, and the rotation in the xy plane. | |||

===Data protocol=== | |||

The drone states are sent over UDP to a host and port configurable at runtime using command line arguments. Each drone state is sent in a separate UDP packet, the structure of such a packet is the following: | |||

{| class="wikitable" border="1" | |||

|- | |||

! Attribute | |||

! id | |||

! x | |||

! y | |||

! psi | |||

|- | |||

! Data type | |||

| uint32_t | |||

| double | |||

| double | |||

| double | |||

|- | |||

! Size (bytes) | |||

| 4 | |||

| 8 | |||

| 8 | |||

| 8 | |||

|- | |||

! Unit | |||

| n/a | |||

| meters | |||

| meters | |||

| radians | |||

|} | |||

Note that the origin (x, y) = (0, 0) is exactly under the top camera on the soccer field. | |||

===Continuous testing=== | |||

Continuous testing is enabled on the GitHub repository. When a new pull request is created, the code is automatically compiled in a clean environment and tests are performed to verify that the DroneDetector is able to properly locate the drones in a set of test images. It also verifies that the UDPClient sends messages to the proper host and port and adheres to the data protocol described above. | |||

The topcam-tracker is only supported on Linux platforms, but even with this restriction we faced portability issues of the software: Sometimes code that compiled and worked perfectly on [https://www.archlinux.org/ Arch Linux] would refuse to build on [https://www.ubuntu.com/ Ubuntu] because of a difference in GCC version etc. | |||

Furthermore, installing the software on new systems was annoying because a lot of packages had to be installed, and the Vimba SDK required some setup. | |||

For this reason an installation script was developed that works on all supported platforms (Arch, Ubuntu 16.04, Ubuntu 17.10) so that installing the software would become easy. The Continous Integration setup was extended to start a [https://www.docker.com/ docker] instance of each supported distribution and run the install script, build the app and execute all tests. | |||

Our current setup thus guarantees that the topcam-tracker can be installed on any of the supported platforms, and future changes will not break compatibility. | |||

In a later stage we want to add more realistic test cases using real video captured on the top camera while a drone was flying, as the tests currently only cover some very trivial single image cases. We would also like to create a tool to analyse the performance of the DroneDetector using a large video dataset, so we can more easily experiment with new approaches and immediately evaluate the performance. | |||

==Extension to multidrone procedure== | ==Extension to multidrone procedure== | ||

| Line 9: | Line 68: | ||

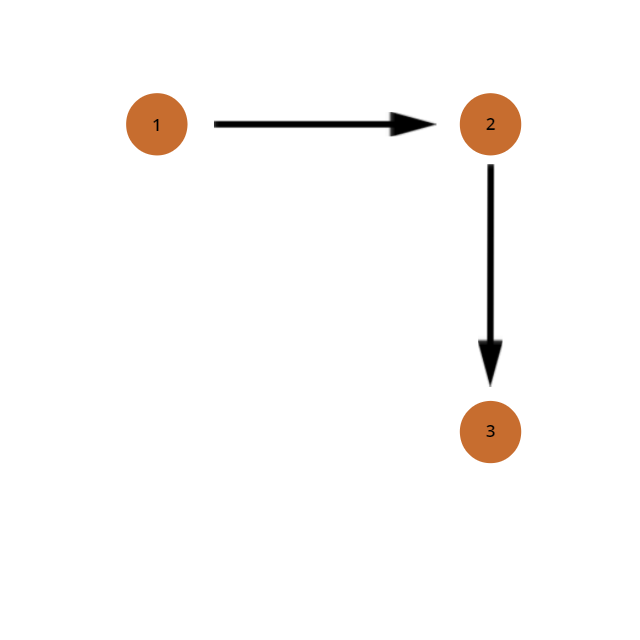

To get from the I shape to the L shape, the geometry of the code had to be changed. It was decided to create vectors between the three points, and to find the direction between the two vectors. This was done by rotating the first vector 90 degrees clockwise and taking the dot product between the rotated vector and the second vector. | To get from the I shape to the L shape, the geometry of the code had to be changed. It was decided to create vectors between the three points, and to find the direction between the two vectors. This was done by rotating the first vector 90 degrees clockwise and taking the dot product between the rotated vector and the second vector. | ||

This uniquely determines which of the two vectors is part of the front of the drone, because if the angle is negative (meaning turned less than 180 degrees counter clockwise with respect to the previous vector), this means that the second vector could be made by rotating the first one counter clockwise, meaning that the second vector is the vector between the two LEDs at the front of the drone. If the angle is positive, the second vector could be made by rotating the first one clockwise, and therefore the first vector is the vector between the two LEDs at the front of the drone. | |||

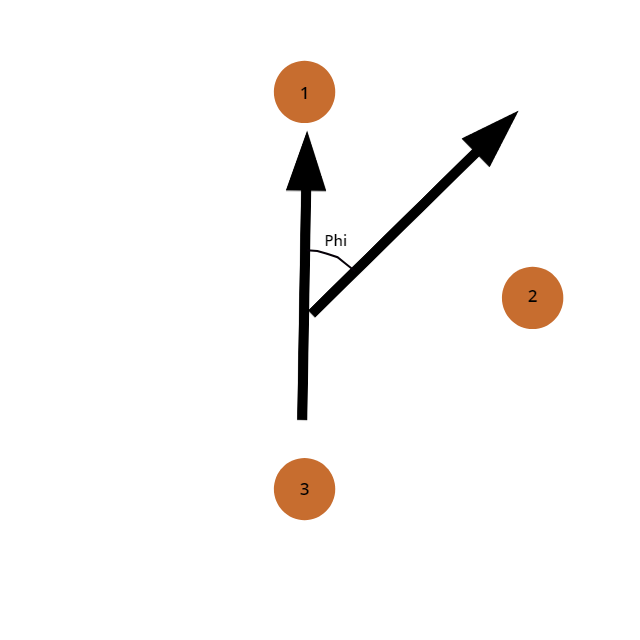

Bearing this in mind, the angle of the drone can be determined by creating a vector perpendicular to the vector between the points in the front and exactly in the middle of those two points. The angle of the drone will be the angle between an arbitrary vector that is pointing into the phi is zero direction and the vector just explained. The x and y position of the drone can be easily calculated by taking the average between the two outer points. | Bearing this in mind, the angle of the drone can be determined by creating a vector perpendicular to the vector between the points in the front and exactly in the middle of those two points. The angle of the drone will be the angle between an arbitrary vector that is pointing into the phi is zero direction and the vector just explained. The x and y position of the drone can be easily calculated by taking the average between the two outer points. | ||

| Line 15: | Line 74: | ||

===Getting from one to multiple drones=== | ===Getting from one to multiple drones=== | ||

To get the camera to detect multiple drones, a distinction had to be made between LEDs of one drone and another. To do this, a K-means algorithm was used to find | To get the camera to detect multiple drones, a distinction had to be made between LEDs of one drone and another. To do this, a K-means algorithm was used to find | ||

clusters. These clusters of LEDs were analyzed to see if | <math>ceil(\frac{\#_{LEDs}}{3})</math> | ||

clusters, where | |||

<math>\#_{LEDs}</math> | |||

is equal to the amount of LEDs the algorithm has found. | |||

This means that the algorithm would find clusters equal to the amount of LEDs it found divided by three, rounded up. This was done to make sure the K-means algorithm would prefer to make groups of three LEDs, which is the amount of LEDs on a drone. | |||

These clusters of LEDs were analyzed to see if they had more or less than three points, or if these points were too close together or too far away to be a drone. If any of these conditions were true, the cluster is deleted from the list. If no clusters are left after this procedure, the exposure of the camera would be changed to find more or less LEDs. The clusters of three LEDs would then be given an ID based on the previous positions of the drones, if available. If the program finds an extra drone or it is the first frame, the IDs are given in the order the K-means gives the results, which can be seen as random. | |||

===Design decisions=== | ===Design decisions=== | ||

During the design, certain decisions had to be made in terms of robustness and accuracy. These decisions are summarized | During the design, certain decisions had to be made in terms of robustness and accuracy. These decisions are summarized next. | ||

The first design decision that had to be made was which points to use to calculate the angle. Using two points will be less accurate than using three points, but taking the third point into account will decrease the robustness as checking if this point is on the right line to validate the results afterwards will not make sense if this point is also used in the calculations. It was decided to go with the two points and using the third point as a check because if the point was too far off to be of use, the check after the K-means would already have gotten rid of the drone and the robustness was valuated as more important than the accuracy due to this. | The first design decision that had to be made was which points to use to calculate the angle. Using two points will be less accurate than using three points, but taking the third point into account will decrease the robustness as checking if this point is on the right line to validate the results afterwards will not make sense if this point is also used in the calculations. It was decided to go with the two points and using the third point as a check because if the point was too far off to be of use, the check after the K-means would already have gotten rid of the drone and the robustness was valuated as more important than the accuracy due to this. | ||

The second design decision that had to be made was when to throw away results and when to keep them after the K-means. This is because a four LED result could still be a drone but a fourth LED could have appeared due to reflections. Checking these results would make sure that more drone results will be displayed and the frequency that a drone would not be detected for a single frame would decrease, but the computation time would take longer and if the algorithm would be wrong and would detect a drone without there being one, the drone which it detected wrongly would think it would be at a completely different position and therefore would suddenly give a high thrust in an unexpected direction, potentially ruining the show. Therefore the robustness of the program would decrease greatly if not implemented correctly. For this reason, it was decided not to implement this. | The second design decision that had to be made was when to throw away results and when to keep them after the K-means. This is because a four LED result could still be a drone but a fourth LED could have appeared due to reflections. Checking these results would make sure that more drone results will be displayed and the frequency that a drone would not be detected for a single frame would decrease, but the computation time would take longer and if the algorithm would be wrong and would detect a drone without there being one, the drone which it detected wrongly would think it would be at a completely different position and therefore would suddenly give a high thrust in an unexpected direction, potentially ruining the show. Therefore the robustness of the program would decrease greatly if not implemented correctly. For this reason, it was decided not to implement this. | ||

Latest revision as of 22:21, 24 May 2018

The top camera is mounted above the soccer field of the Drone lab and provides a nearly full view of the field. In the Firefly project it is used to localize drones and ground robots by means of LED markers on these devices. We have developed software to capture images from the camera, process them, and track moving targets equipped with markers. Compared to the ultra-wideband and ultrasound technologies, a high refresh rate of around 17 FPS is possible. However, the detection is less precise and fairly unreliable because of reflections and because the net spanning the soccer field blocks some of the LEDs. Further development of the detection algorithm may remedy these deficiencies to some extent.

Hardware

The camera is manufactured by Allied Vision (exact model unknown) and can capture images and live video at 20-30 FPS in Full HD resolution (1920x1080). It is an industrial camera that can be connected to a laptop using the Ethernet cable labeled "Laptop". Ethernet-connected cameras are typically referred to as GigE cameras, after the Gigabit Ethernet interface. Allied Vision provides the Vimba Software Development Kit (SDK) for interfacing with the camera in software. You will need to install it before you can use the tracking application.

Software

Figure: Class diagram for the tracker application

The topcam-tracker application uses the Vimba SDK to capture live images from the camera. It processes these images to find the locations of the drones and then sends the locations over UDP, so that other services can use the location data.

The source code for the topcam-tracker is available on GitHub (https://github.com/tue-firefly/topcam-tracker).

Architecture

The application is structured as follows:

- Main: the main function is the entry point of the program. It parses the command-line arguments, then instructs the ApiController to open the camera and start processing.

- ApiController: provides an interface to the VimbaSystem API. Its StartContinuousAcquisition function instantiates the FrameObserver, opens the camera, and registers the FrameObserver to receive a callback when new frames arrive.

- FrameObserver: processes new frames as they arrive. It reads the frame buffer provided by the Vimba API into an OpenCV matrix, uses the DroneDetector to locate drones and then sends this data over UDP using the UDPClient.

- DroneDetector: detects individual LEDs in the input image by looking for bright spots. It then clusters these LEDs into groups and returns a list of DroneStates (position and rotation) derived from the LED positions in each cluster. The previous states are stored so that new drone locations can be correlated with old ones. The drone ID of each old state is then transferred to the matching new state, using a nearest-neighbour approach.

- DroneState: structure to describe the state of a drone. It contains a unique ID to identify each drone, the position (x and y coordinates) of the drone, and the rotation in the xy plane.
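The DroneState structure and the nearest-neighbour ID transfer described for the DroneDetector can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from the topcam-tracker repository. The field names, the hypothetical `transferIds` helper, the greedy matching in detection order, and the `maxMatchDistance` cut-off are all assumptions.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Mirrors the DroneState description: a unique ID, a position in the xy
// plane and a rotation. Field names are illustrative (assumption).
struct DroneState {
    std::uint32_t id;
    double x;    // meters
    double y;    // meters
    double psi;  // rotation in the xy plane, radians
};

// Hypothetical helper: give each new detection the ID of the nearest unused
// previous state. A detection with no previous state within maxMatchDistance
// (for example on the first frame) gets a fresh ID from nextId.
// Matching is greedy, in the order of `current` (assumption).
void transferIds(const std::vector<DroneState>& previous,
                 std::vector<DroneState>& current,
                 double maxMatchDistance,
                 std::uint32_t& nextId) {
    std::vector<bool> used(previous.size(), false);
    for (DroneState& cur : current) {
        double bestDist = std::numeric_limits<double>::infinity();
        std::size_t best = previous.size();  // "no match" sentinel
        for (std::size_t i = 0; i < previous.size(); ++i) {
            if (used[i]) continue;  // each old ID is handed out at most once
            double d = std::hypot(cur.x - previous[i].x, cur.y - previous[i].y);
            if (d < bestDist) {
                bestDist = d;
                best = i;
            }
        }
        if (best < previous.size() && bestDist <= maxMatchDistance) {
            cur.id = previous[best].id;  // same drone as in the previous frame
            used[best] = true;
        } else {
            cur.id = nextId++;  // new drone, or no history yet
        }
    }
}
```

On the first frame `previous` is empty, so every detection simply receives a new ID. A global assignment method, such as the Hungarian algorithm, could replace the greedy loop if drones fly close together.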

Data protocol

The drone states are sent over UDP to a host and port that are configurable at runtime through command-line arguments. Each drone state is sent in a separate UDP packet with the following structure:

| Attribute | id | x | y | psi |
|---|---|---|---|---|
| Data type | uint32_t | double | double | double |
| Size (bytes) | 4 | 8 | 8 | 8 |
| Unit | n/a | meters | meters | radians |

Note that the origin (x, y) = (0, 0) lies directly below the top camera on the soccer field.
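The packet layout in the table adds up to 4 + 8 + 8 + 8 = 28 bytes. The sketch below shows how such a packet could be encoded and decoded. It assumes that the fields are packed back to back without padding, in table order and in host byte order, because the text does not specify endianness. The `encodeDroneState` and `decodeDroneState` helpers are hypothetical and not taken from the UDPClient.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <cstring>

static_assert(sizeof(double) == 8, "protocol assumes 8-byte doubles");

// id (uint32_t) followed by x, y, psi (double): 28 bytes, no padding (assumption).
constexpr std::size_t kPacketSize = sizeof(std::uint32_t) + 3 * sizeof(double);

// Hypothetical encoder: copies the fields into a byte buffer in table order,
// in host byte order.
std::array<unsigned char, kPacketSize> encodeDroneState(std::uint32_t id, double x,
                                                        double y, double psi) {
    std::array<unsigned char, kPacketSize> buf{};
    unsigned char* p = buf.data();
    std::memcpy(p, &id, sizeof id);   p += sizeof id;
    std::memcpy(p, &x, sizeof x);     p += sizeof x;
    std::memcpy(p, &y, sizeof y);     p += sizeof y;
    std::memcpy(p, &psi, sizeof psi);
    return buf;
}

struct DecodedState {
    std::uint32_t id;
    double x, y, psi;
};

// Hypothetical decoder for a receiving service: reads kPacketSize bytes.
DecodedState decodeDroneState(const unsigned char* data) {
    DecodedState s{};
    std::size_t off = 0;
    std::memcpy(&s.id, data + off, sizeof s.id);  off += sizeof s.id;
    std::memcpy(&s.x, data + off, sizeof s.x);    off += sizeof s.x;
    std::memcpy(&s.y, data + off, sizeof s.y);    off += sizeof s.y;
    std::memcpy(&s.psi, data + off, sizeof s.psi);
    return s;
}
```

The encoded buffer could then be passed to a socket call such as POSIX `sendto`. How the actual UDPClient sends it is not described here. A receiver on a machine with different endianness would have to agree on an explicit byte order.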

Continuous testing

Continuous testing is enabled on the GitHub repository. When a new pull request is created, the code is automatically compiled in a clean environment, and tests are run to verify that the DroneDetector correctly locates the drones in a set of test images. The tests also verify that the UDPClient sends messages to the correct host and port and adheres to the data protocol described above.

The topcam-tracker is only supported on Linux platforms, but even with this restriction we ran into portability issues. Code that compiled and worked perfectly on Arch Linux would sometimes fail to build on Ubuntu, for example because of a different GCC version. Furthermore, installing the software on new systems was tedious because many packages had to be installed and the Vimba SDK required some setup.

For this reason, we developed an installation script that works on all supported platforms (Arch, Ubuntu 16.04, Ubuntu 17.10) to make installing the software easy. The continuous integration setup was extended to start a Docker instance of each supported distribution. In each instance, it runs the install script, builds the app, and executes all tests. Our current setup therefore ensures that the topcam-tracker can be installed on any of the supported platforms. It also detects future changes that would break compatibility when their pull request is created.

At a later stage we want to add more realistic test cases using real video captured with the top camera while a drone was flying, because the tests currently cover only some very trivial single-image cases. We would also like to create a tool that analyses the performance of the DroneDetector on a large video dataset. This would let us experiment more easily with new approaches and evaluate their performance immediately.

Extension to multidrone procedure

From I to L shape

To get from the I shape to the L shape, the geometry of the code had to be changed. It was decided to create vectors between the three points, and to find the direction between the two vectors. This was done by rotating the first vector 90 degrees clockwise and taking the dot product between the rotated vector and the second vector.

This uniquely determines which of the two vectors lies along the front of the drone. If the dot product is negative, the second vector is turned counterclockwise, by less than 180 degrees, with respect to the first vector. In that case the second vector is the vector between the two LEDs at the front of the drone. If the dot product is positive, the second vector is turned clockwise with respect to the first, so the first vector is the vector between the two front LEDs.
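The front-pair test described above can be sketched as follows. Rotating (x, y) by 90 degrees clockwise gives (y, -x). The dot product of that rotated vector with the second vector therefore equals minus the 2D cross product, which is why a negative value means "counterclockwise". This is a sketch under assumptions: the LEDs are given in cluster order p0, p1, p2 with p1 at the corner, the y axis points up, and the function names are hypothetical. In image coordinates, where y points down, the meaning of clockwise and counterclockwise is mirrored.

```cpp
// Minimal 2D vector (assumption: y axis points up, counterclockwise positive).
struct Vec2 {
    double x, y;
};

Vec2 sub(Vec2 a, Vec2 b) { return Vec2{a.x - b.x, a.y - b.y}; }
double dot(Vec2 a, Vec2 b) { return a.x * b.x + a.y * b.y; }

// Rotate 90 degrees clockwise: (x, y) -> (y, -x).
Vec2 rotateCw(Vec2 v) { return Vec2{v.y, -v.x}; }

// Hypothetical helper. p0, p1, p2 are the three LEDs in order, with p1 the corner.
// Returns true if the second vector (p1 -> p2) joins the two front LEDs,
// false if the first vector (p0 -> p1) does.
bool secondVectorIsFront(Vec2 p0, Vec2 p1, Vec2 p2) {
    Vec2 v1 = sub(p1, p0);
    Vec2 v2 = sub(p2, p1);
    // dot(rotateCw(v1), v2) == -(v1.x * v2.y - v1.y * v2.x).
    // Negative: v2 is v1 turned counterclockwise by less than 180 degrees.
    return dot(rotateCw(v1), v2) < 0.0;
}
```

For example, with p0 = (0, 0), p1 = (1, 0) and p2 = (1, 1), the second vector (0, 1) is the first vector (1, 0) turned counterclockwise, so the function returns true. Reversing the point order flips the result.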

Bearing this in mind, the angle of the drone can be determined by creating a vector perpendicular to the vector between the points in the front and exactly in the middle of those two points. The angle of the drone will be the angle between an arbitrary vector that is pointing into the phi is zero direction and the vector just explained. The x and y position of the drone can be easily calculated by taking the average between the two outer points.
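The heading and position computation can be sketched as below. The text does not say which of the two perpendiculars counts as "forward", or along which axis phi = 0 points. This sketch therefore assumes that forward points away from the third (rear) LED and that the angle is measured counterclockwise from the +x axis. The helper `poseFromL` is hypothetical. It takes the LEDs in cluster order with p1 as the corner, together with the result of the front-pair test.

```cpp
#include <cmath>

struct Vec2 {
    double x, y;
};

struct Pose {
    double x, y;  // position
    double psi;   // heading in radians
};

// Hypothetical helper. p0, p1, p2 are the LEDs in order, with p1 the corner of the L.
// secondIsFront is the result of the rotated-vector/dot-product test.
Pose poseFromL(Vec2 p0, Vec2 p1, Vec2 p2, bool secondIsFront) {
    // Select the two front LEDs (a, b) and the remaining rear LED.
    Vec2 a = secondIsFront ? p1 : p0;
    Vec2 b = secondIsFront ? p2 : p1;
    Vec2 rear = secondIsFront ? p0 : p2;

    Vec2 front{b.x - a.x, b.y - a.y};
    Vec2 mid{(a.x + b.x) / 2.0, (a.y + b.y) / 2.0};

    // One of the two perpendiculars to the front vector (90 degrees counterclockwise).
    Vec2 n{-front.y, front.x};
    // Assumption: forward points away from the rear LED, so flip n if needed.
    Vec2 rearToMid{mid.x - rear.x, mid.y - rear.y};
    if (n.x * rearToMid.x + n.y * rearToMid.y < 0.0) {
        n.x = -n.x;
        n.y = -n.y;
    }

    // Assumption: phi = 0 along +x, increasing counterclockwise.
    double psi = std::atan2(n.y, n.x);

    // Position: average of the two outer LEDs (the ends of the L).
    return Pose{(p0.x + p2.x) / 2.0, (p0.y + p2.y) / 2.0, psi};
}
```

For the L with rear LED (0, 0) and front LEDs (1, 0) and (1, 1), the heading is 0 (facing +x) and the position is (0.5, 0.5).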

Getting from one to multiple drones

To detect multiple drones with the camera, the LEDs of one drone had to be distinguished from those of another. To do this, a K-means algorithm was used to find

$$\left\lceil \frac{\#_{\mathrm{LEDs}}}{3} \right\rceil$$

clusters, where $\#_{\mathrm{LEDs}}$ is the number of LEDs the algorithm has found. In other words, the number of clusters equals the number of detected LEDs divided by three, rounded up. This biases the K-means algorithm towards groups of three LEDs, which is the number of LEDs on a drone.
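The text does not say which K-means implementation the application uses. The sketch below is a plain, dependency-free Lloyd's K-means with k = ceil(#LEDs / 3), computed with integer arithmetic as (n + 2) / 3. The deterministic farthest-point seeding, the iteration limit, and the names `clusterCount` and `kmeansLabels` are illustrative assumptions. The real tracker may well rely on a library routine instead.

```cpp
#include <algorithm>
#include <cstddef>
#include <limits>
#include <vector>

struct Pt {
    double x, y;
};

// Number of clusters: ceil(numLeds / 3) using integer arithmetic.
std::size_t clusterCount(std::size_t numLeds) { return (numLeds + 2) / 3; }

double dist2(Pt a, Pt b) {
    double dx = a.x - b.x, dy = a.y - b.y;
    return dx * dx + dy * dy;
}

// Lloyd's K-means: returns a label in [0, k) for every point.
// Seeding (assumption): the first point, then repeatedly the point farthest
// from all centers chosen so far.
std::vector<int> kmeansLabels(const std::vector<Pt>& pts, std::size_t k, int maxIter = 50) {
    std::vector<int> labels(pts.size(), 0);
    if (pts.empty() || k == 0) return labels;
    k = std::min(k, pts.size());

    std::vector<Pt> centers{pts[0]};
    while (centers.size() < k) {
        std::size_t farthest = 0;
        double bestD = -1.0;
        for (std::size_t i = 0; i < pts.size(); ++i) {
            double d = std::numeric_limits<double>::infinity();
            for (const Pt& c : centers) d = std::min(d, dist2(pts[i], c));
            if (d > bestD) { bestD = d; farthest = i; }
        }
        centers.push_back(pts[farthest]);
    }

    for (int it = 0; it < maxIter; ++it) {
        // Assignment step: attach every point to its nearest center.
        bool changed = false;
        for (std::size_t i = 0; i < pts.size(); ++i) {
            int best = 0;
            double bestD = dist2(pts[i], centers[0]);
            for (std::size_t c = 1; c < k; ++c) {
                double d = dist2(pts[i], centers[c]);
                if (d < bestD) { bestD = d; best = static_cast<int>(c); }
            }
            if (labels[i] != best) { labels[i] = best; changed = true; }
        }
        if (it > 0 && !changed) break;  // converged

        // Update step: move each center to the mean of its assigned points.
        std::vector<Pt> sum(k, Pt{0.0, 0.0});
        std::vector<std::size_t> count(k, 0);
        for (std::size_t i = 0; i < pts.size(); ++i) {
            sum[labels[i]].x += pts[i].x;
            sum[labels[i]].y += pts[i].y;
            ++count[labels[i]];
        }
        for (std::size_t c = 0; c < k; ++c)
            if (count[c] > 0) centers[c] = Pt{sum[c].x / count[c], sum[c].y / count[c]};
    }
    return labels;
}
```

Six LEDs give k = 2 and seven give k = 3. A seventh stray LED (for example a reflection) therefore forces an extra cluster instead of being silently absorbed into a drone.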

These clusters of LEDs were analyzed to see whether they had more or fewer than three points, or whether these points were too close together or too far apart to be a drone. If any of these conditions was true, the cluster was deleted from the list. If no clusters were left after this procedure, the exposure of the camera was changed to find more or fewer LEDs. The remaining clusters of three LEDs were then given an ID based on the previous positions of the drones, if available. If the program finds an extra drone, or if it is the first frame, the IDs are assigned in the order in which the K-means algorithm returns its results, which is effectively random.
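The cluster validation step can be sketched as follows. The sketch keeps only clusters with exactly three LEDs whose pairwise distances all lie within an allowed range. The thresholds `minDist` and `maxDist` and the name `validClusters` are hypothetical, because the text gives no values. An empty result corresponds to the case where the exposure would be adjusted.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt {
    double x, y;
};

// Hypothetical filter: groups points by K-means label (each label must be in
// [0, k)) and keeps only plausible drones. minDist and maxDist are assumed
// bounds on the distance between any two LEDs of one drone.
std::vector<std::array<Pt, 3>> validClusters(const std::vector<Pt>& pts,
                                             const std::vector<int>& labels,
                                             std::size_t k,
                                             double minDist, double maxDist) {
    std::vector<std::vector<Pt>> groups(k);
    for (std::size_t i = 0; i < pts.size(); ++i)
        groups[static_cast<std::size_t>(labels[i])].push_back(pts[i]);

    std::vector<std::array<Pt, 3>> result;
    for (const auto& g : groups) {
        if (g.size() != 3) continue;  // more or fewer than three LEDs: reject
        bool ok = true;
        for (std::size_t a = 0; a < 3 && ok; ++a)
            for (std::size_t b = a + 1; b < 3 && ok; ++b) {
                double d = std::hypot(g[a].x - g[b].x, g[a].y - g[b].y);
                if (d < minDist || d > maxDist) ok = false;  // too close or too far apart
            }
        if (ok) result.push_back(std::array<Pt, 3>{{g[0], g[1], g[2]}});
    }
    return result;
}
```

If `validClusters` returns an empty list, the caller would change the camera exposure before the next frame. Otherwise the surviving clusters go on to orientation, pose, and ID assignment.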

Design decisions

During the design, certain decisions had to be made regarding robustness and accuracy. These decisions are summarized below.

The first design decision was which points to use to calculate the angle. Using two points is less accurate than using three. However, including the third point in the calculation decreases robustness, because the third point can then no longer be used afterwards to validate the result by checking that it lies on the expected line. It was decided to use two points for the calculation and to keep the third point as a check. A third point that was too far off to be useful would already have caused the check after the K-means step to discard the drone. For this reason, robustness was valued above accuracy.

The second design decision was when to discard and when to keep results after the K-means step. A cluster of four LEDs could still be a drone, with the fourth LED caused by a reflection. Checking such clusters would let more drones be detected and reduce how often a drone goes undetected for a single frame, but it would increase the computation time. Moreover, the algorithm might wrongly detect a drone where there is none. The affected drone would then believe it was at a completely different position and could suddenly apply high thrust in an unexpected direction, potentially ruining the show. An incorrect implementation would therefore greatly reduce the robustness of the program, and for this reason it was decided not to implement this.
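The text does not describe how the third LED is used as a check. One plausible sketch, assuming an L-shaped marker, verifies that the leg from the corner LED to the third LED is roughly perpendicular to the front leg. The name `thirdLedConsistent` and the tolerance `maxAbsCos`, a bound on |cos| of the angle between the legs, are hypothetical.

```cpp
#include <cmath>

struct Vec2 {
    double x, y;
};

// Hypothetical consistency check: in an L-shaped marker, the leg from the
// corner LED to the rear LED should be perpendicular to the front leg.
// The result is accepted when |cos(angle between the legs)| <= maxAbsCos.
bool thirdLedConsistent(Vec2 corner, Vec2 otherFront, Vec2 rear, double maxAbsCos) {
    double fx = otherFront.x - corner.x, fy = otherFront.y - corner.y;  // front leg
    double rx = rear.x - corner.x, ry = rear.y - corner.y;              // rear leg
    double lf = std::hypot(fx, fy), lr = std::hypot(rx, ry);
    if (lf == 0.0 || lr == 0.0) return false;  // degenerate cluster: reject
    double cosAngle = (fx * rx + fy * ry) / (lf * lr);
    return std::fabs(cosAngle) <= maxAbsCos;
}
```

Because the angle itself is computed from the two front LEDs only, this check stays independent of the calculation it validates, which matches the robustness argument above.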