Embedded Motion Control 2019 Group 2


Revision as of 09:22, 3 June 2019

Group members

- 1. Bob Clephas | 1271431

- 2. Tom van de Laar | 1265938

- 3. Job Meijer | 1268155

- 4. Marcel van Wensveen | 1253085

- 5. Anish Kumar Govada | 1348701

Design document

To complete the two assignments for the course “Embedded motion control”, specific software must be written. In this design document the global architecture of the software is explained, and the given constraints and hardware are listed. This document is a first draft and will be updated during the project. The first version can be found here.

Requirements and specifications

The requirements and related specifications are listed in the following table. The listed specifications are required for the final assignment and the escape room challenge.

Components and functions

The components and their functions are split into software components and hardware components.

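Software components and their general functionalities

World model:

- Stores all the relevant data (map, tasks, position, etc.).

- Handles data communication between the other components (all data goes through the world model).

Task manager:

- Sets the operation modes of the other blocks depending on the current status of the blocks and the high-level tasks.

Perceptor:

- Reads sensor data.

- Identifies walls and objects based on laser data and creates a local map.

- Fits the local map onto the global map (hospital competition).

- Locates the robot on the local map (escape room challenge).

- Locates the robot on the global map (hospital competition).

- Keeps the local map aligned with the global map (hospital competition).

Path planner:

- Creates a path from the combined map, the current position and the desired position.

- Checks if the path is free and plans a new path if the current path is blocked.

- Keeps track of open and closed doors.

Drive controller:

- Actuates the robot such that it arrives at the desired location (keeping speed and acceleration in mind).

- Checks if the desired direction is safe to drive to (based on laser data obtained from the perceptor, not on the map).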

Hardware components and their general functionalities

PICO Robotic platform:

- Jazz telepresence robot: general framework with all the hardware. This framework makes it possible to execute the assignments.

Sensors:

- Laser range finder: scan environment and detect objects.

- Wheel encoders: determine the distance traveled by the wheels.

- 170 deg wide angle camera: can be used for vision system (object detection for example).

Actuators:

- Holonomic base (omni-wheels): allows the robot to move on the ground.

- Pan-tilt unit for head: can be used to move the head with the display and camera.

Computer:

- Intel i7 processor: performs computations.

- OS: Ubuntu 16.04 (64-bit): software that allows execution of programs.

- ROS with own software layer: makes it easy to connect software components.

Environment

The environments for both assignments will meet the following specifications:

Escape room challenge

- Rectangular room, unknown dimensions. One opening with a corridor.

- Starting point and orientation are random, but equal for all groups.

- Opening will be perpendicular to the room.

- Far end of the corridor will be open.

- Walls will not be perfectly straight, and the walls of the corridor will not be perfectly parallel.

- Finish line is at least 3 meters into the corridor; the walls of the corridor will be a little bit longer.

Final challenge

- Walls will be perpendicular to each other.

- Dynamic elements will be in the area.

- Not all objects will have the same orientation.

- Multiple rooms with doors.

Interface

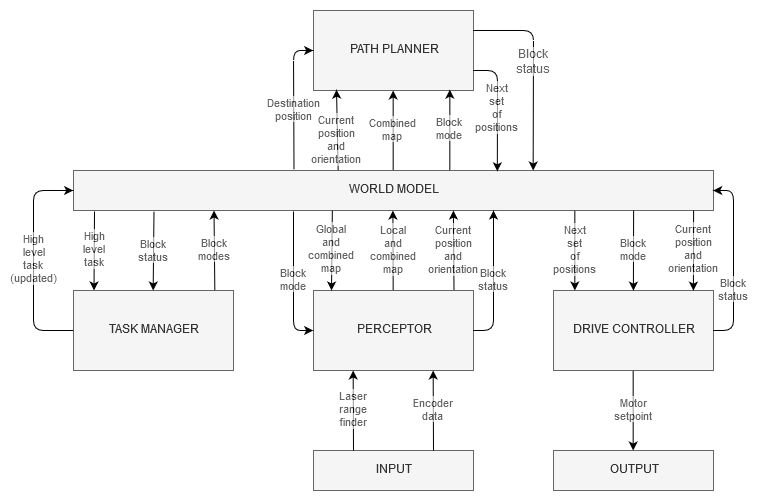

The overall software is split into several building blocks:

Escape Room Challenge

Our strategy for the escape room challenge was to reuse the software structure for the hospital challenge as much as possible. Therefore, the room is scanned from the robot's initial position. From this location the perceptor creates a local map of the room, including convex and concave corner points, doors and possible doors (when it is not fully certain that the door is real). Based on this local map, the task manager gives commands to the drive controller and path planner to position the robot in front of the door. Once the robot is in front of the possible door and the door has been verified as real, the path planner sends the next position to the world model. In this case that position is the end of the finish line, which is detected from the two loose ends of the walls. The robot is also able to detect objects in front of it so that it can avoid them.

Simulation and testing:

Multiple possible maps were created and tested. In most cases the robot was able to escape the room. However, in some cases, such as the room used in the escape room challenge, the robot could not escape. These cases were analyzed, but there was not enough time to implement fixes for them. Furthermore, the software was only partly tested in the real environment at the time of the escape room challenge. Each separate function worked, such as driving to destinations, making a local map with walls, doors and corner points, driving through a hallway and avoiding obstacles.

What went well during the escape room challenge:

The robot was made robust: it could detect the walls even though a few walls were placed at a small angle and not straight next to each other. Furthermore, graphical feedback in the form of a local map was implemented on the “face” of PICO. PICO even drove to a possible door before later realizing that it was not a door.

Improvements for the escape room challenge:

A door can only be detected if it consists of convex corners or of two loose ends facing each other. In the challenge the robot was therefore not able to detect a possible door: the loose ends were not facing each other, as can be seen in the gif below. Furthermore, there was no backup strategy when no doors were found, other than scanning the map again. PICO should have repositioned itself somewhere else in the room, or it could have followed a wall. However, we do not intend to use a wall follower in the hospital challenge, so this does not correspond with our chosen strategy. Another point that can be improved is the creation of walls. For now, a wall is only detected when it contains a minimum number of laser points. Therefore, in the challenge the robot was not able to detect the small wall next to the corridor straight away. This threshold was chosen to create a robust map, but it also excluded some essential parts of the map.

In the simulation environment the map is recreated, including the roughly placed walls. As expected, in this simulation of the escape room PICO did not succeed in finding the exit, for the reasons explained above.

Block descriptions

Each block as shown in the overall Interface block scheme is described in full detail below.

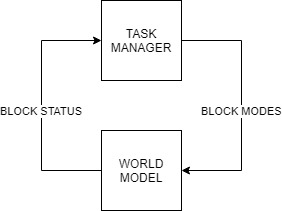

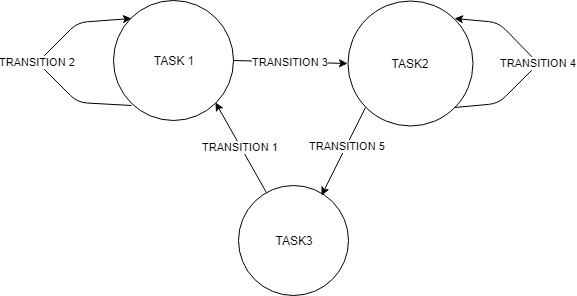

Task manager:

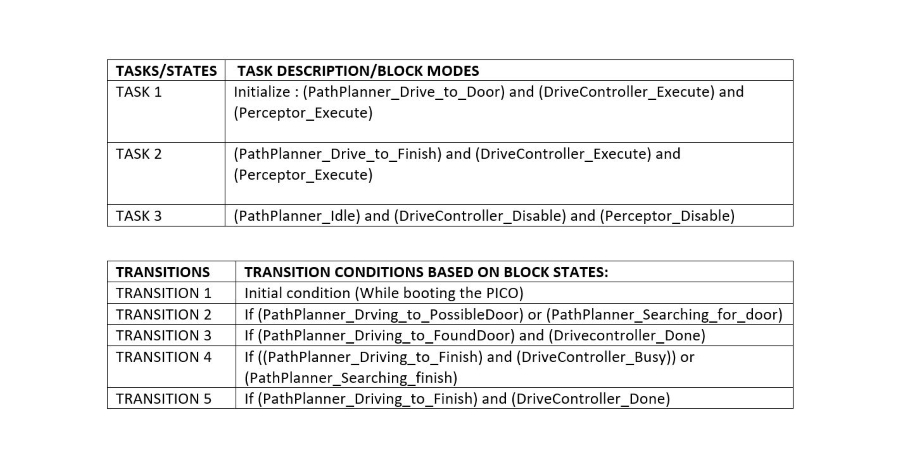

The task manager functions as a finite state machine which switches between different tasks/states. It is used to send commands to specific blocks to perform a certain task based on the status sent in by that block. It communicates with the other blocks via the World model.

ESCAPE ROOM CHALLENGE :

BASIC BLOCK DIAGRAM :

INITIALIZATION:

The path planner is given the command “Drive_to_door”, while the drive controller and the perceptor are given the command “Execute” as part of the initialization process.
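As an illustration of how such a command-based state machine could look, the following minimal sketch sets the initialization commands described above and then switches commands based on a reported status. The command names come from this page; the `WorldModel` stand-in, the status strings `"door_reached"` and `"exit_reached"`, and the transitions themselves are assumptions made for the example and are not taken from our actual implementation.

```python
from enum import Enum


class Command(Enum):
    # High-level tasks named on this page.
    DRIVE_TO_DOOR = "Drive_to_door"
    DRIVE_TO_EXIT = "Drive_to_exit"
    EXECUTE = "Execute"
    IDLE = "Idle"
    DISABLE = "Disable"


class WorldModel:
    """Hypothetical stand-in: stores the command and status of each block."""

    def __init__(self):
        self.commands = {}
        self.statuses = {}


class TaskManager:
    def __init__(self, world_model):
        self.wm = world_model

    def initialize(self):
        # Initialization commands as described in the text.
        self.wm.commands["path_planner"] = Command.DRIVE_TO_DOOR
        self.wm.commands["drive_controller"] = Command.EXECUTE
        self.wm.commands["perceptor"] = Command.EXECUTE

    def update(self):
        # Assumed transitions; the status strings are illustrative only.
        status = self.wm.statuses.get("path_planner")
        current = self.wm.commands["path_planner"]
        if current is Command.DRIVE_TO_DOOR and status == "door_reached":
            self.wm.commands["path_planner"] = Command.DRIVE_TO_EXIT
        elif current is Command.DRIVE_TO_EXIT and status == "exit_reached":
            # Task finished: put every block in idle.
            for block in list(self.wm.commands):
                self.wm.commands[block] = Command.IDLE
```

All communication in this sketch goes through the world model object, which mirrors the rule that the task manager talks to the other blocks only via the world model.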

EXECUTION:

The high-level tasks “Drive_to_door”, “Drive_to_exit”, “Execute”, “Idle” and “Disable” were given to appropriate blocks as shown below:

KEY:

HOSPITAL ROOM CHALLENGE :

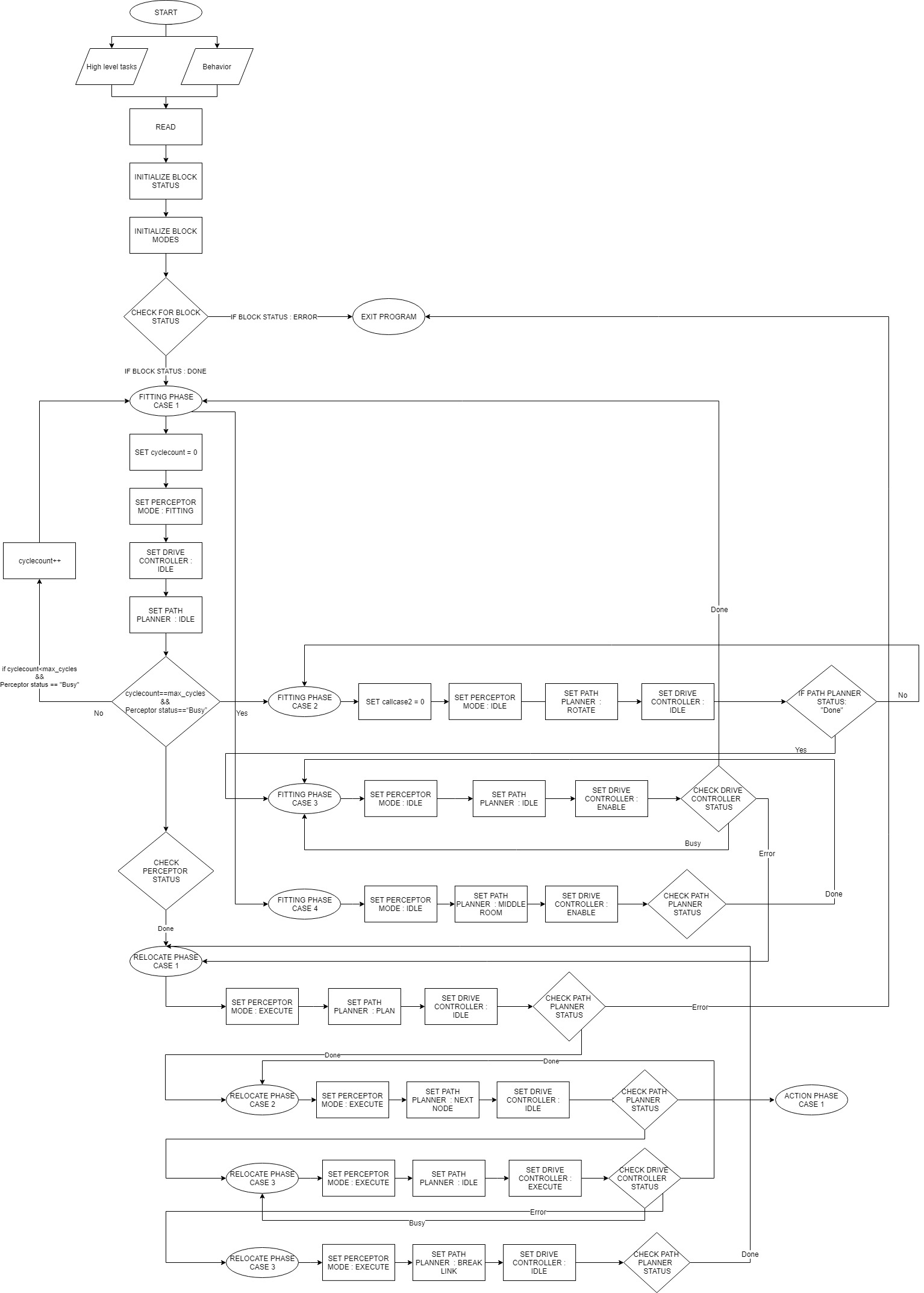

FLOW CHART :

The function description can be found here : File:TASK MANAGERfin.pdf

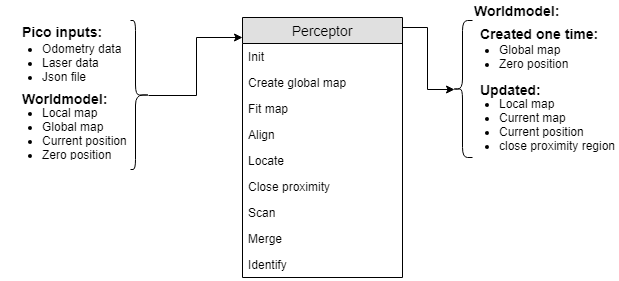

Perceptor:

The perceptor receives all of the incoming data from the PICO robot and converts it into useful data for the world model. The incoming data consists of odometry data obtained from the wheel encoders of the PICO robot, laser data obtained from the laser scanners, and a JSON file containing the global map and the locations of the cabinets, which is provided a week before the hospital challenge. The output of the perceptor to the world model consists of the global map, a local map, a combined map, the current/zero position and a close proximity region. The incoming data is handled within the perceptor by the following functions. A detailed description of what each function in the perceptor does and more information about the data flow can be found here.
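A typical first step when turning laser data into a local map is converting the range measurements into Cartesian points in the robot frame. The sketch below shows that conversion under stated assumptions: the function name and the parameters `angle_min`, `angle_increment`, `range_min` and `range_max` are chosen for the example, and the filtering of invalid readings is a simplification rather than a description of our perceptor.

```python
import math


def laser_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert laser ranges (polar) to (x, y) points in the robot frame."""
    points = []
    for i, r in enumerate(ranges):
        # Skip readings outside the valid sensor range (NaN also fails this test).
        if not (range_min <= r <= range_max):
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

The resulting points could then be grouped into wall segments, for example by requiring a minimum number of points per segment as discussed for the escape room challenge.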

The inputs and outputs of the perceptor are shown in the following figure:

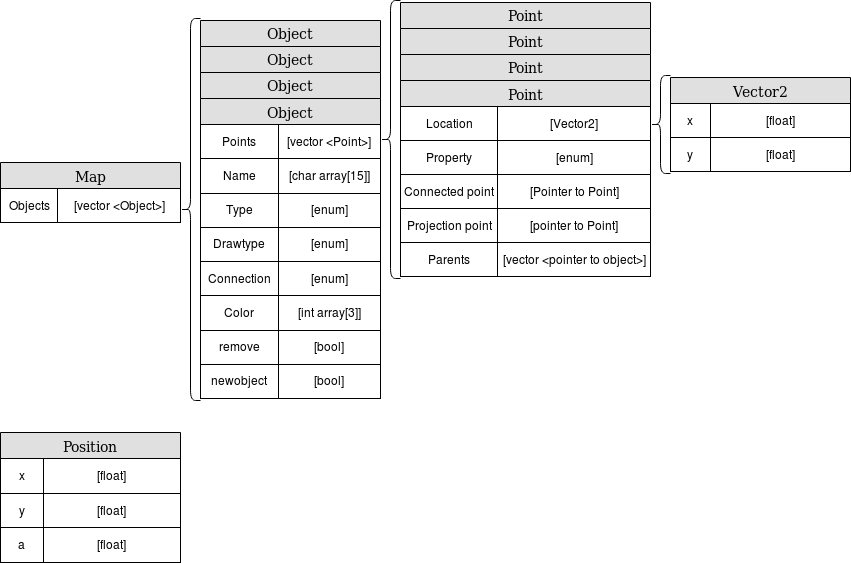

The data used in the perceptor:

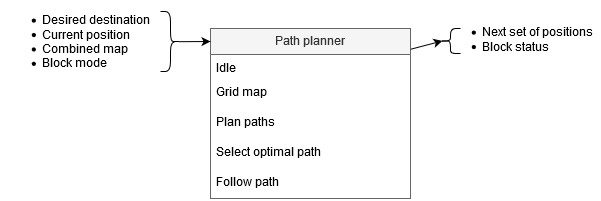

Path planner:

The path planner determines the path for the PICO robot based on the combined map, the current location and the desired location. The planned path is a set of positions that the PICO robot is going to drive towards; this set is called the ‘next set of positions’. This next set of positions is saved in the world model and used by the task manager to send destination points to the drive controller.

If the path planner is idle, it is waiting for the task manager to start planning. Once the path planner is planning the path from the position of the PICO robot towards a given destination, the following things happen. First the map is gridded, creating all possible locations that the PICO robot is able to move towards. Then different paths are planned, for example through different doors. After that the optimal path is selected and sent to the world model as the next set of positions. While the PICO robot is following the path, the path planner checks whether unexpected objects are interfering with the planned trajectory.
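To make the gridding step concrete, the sketch below plans a shortest path on a small occupancy grid. This page does not state which search algorithm the path planner uses, so breadth-first search on a 4-connected grid is substituted here purely as an example; the grid encoding (`0` is free, `1` is blocked) and the function name `plan_path` are likewise assumptions.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid[r][c] == 1 means blocked. Returns the list of cells from start
    to goal (both included), or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

In this sketch the returned list of cells plays the role of the ‘next set of positions’. Returning `None` corresponds to the situation where the current path is blocked and no alternative exists on the map.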

The inputs, functions and outputs of the path planner are as follows:

A detailed description can be found here.

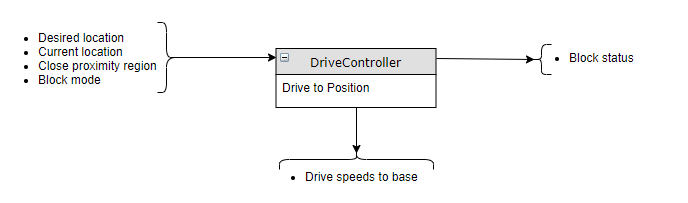

Drive controller

The drive controller ensures that the PICO robot drives to the desired location. It calculates the distance and direction to the desired location, relative to the current location. It checks whether this direction is free of obstacles; if not, it calculates an alternative direction that brings the PICO robot closer to the desired destination. Finally, it uses three PI controllers, one for each axis (rotational, X and Y), to calculate the desired speed for each axis, which is sent to the PICO robot with the built-in function.
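The combination of a relative position error and one PI controller per axis can be sketched as follows. This is a minimal illustration, not our actual drive controller: the gains, the output limit, the class and function names, and the choice to express the error in the robot frame are all assumptions, and obstacle checking and anti-windup are left out.

```python
import math


class PIController:
    """Discrete PI controller with a symmetric output limit (no anti-windup)."""

    def __init__(self, kp, ki, limit):
        self.kp, self.ki, self.limit = kp, ki, limit
        self.integral = 0.0

    def update(self, error, dt):
        self.integral += error * dt
        output = self.kp * error + self.ki * self.integral
        return max(-self.limit, min(self.limit, output))


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def velocity_command(pose, target, controllers, dt):
    """Return (vx, vy, omega) in the robot frame.

    pose and target are (x, y, theta) tuples in the same map frame;
    controllers is a dict with the keys "x", "y" and "theta".
    """
    x, y, theta = pose
    dx, dy = target[0] - x, target[1] - y
    # Rotate the position error from the map frame into the robot frame.
    ex = math.cos(theta) * dx + math.sin(theta) * dy
    ey = -math.sin(theta) * dx + math.cos(theta) * dy
    etheta = wrap_angle(target[2] - theta)
    return (
        controllers["x"].update(ex, dt),
        controllers["y"].update(ey, dt),
        controllers["theta"].update(etheta, dt),
    )
```

Because the base is holonomic, the X and Y speeds can be commanded independently of the rotation, which is why one controller per axis is sufficient in this sketch.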

The inputs and outputs of the Drive Controller are as follows:

The full details of the drive controller, including a flowchart and function descriptions, can be found in the drive controller functionality description document found here.