Embedded Motion Control 2014 Group 7: Difference between revisions

No edit summary |

No edit summary |

||

| Line 245: | Line 245: | ||

A choice is made to use the base_local_planner inside the move_base stack. From incoming sensor data, angular and linear velocities of the pico are chosen properly by the local planner to traverse the segment of the global path. | A choice is made to use the base_local_planner inside the move_base stack. From incoming sensor data, angular and linear velocities of the pico are chosen properly by the local planner to traverse the segment of the global path. Trajectory Rollout is used as the local planner. | ||

For the global planner, navfn is chosen. This makes use of Dijkstra's algorithm as explained in lecture | |||

| Line 472: | Line 474: | ||

<pre>$ rosrun tf view_frames</pre> | <pre>$ rosrun tf view_frames</pre> | ||

GMapping and Move_base can be used in the simulations: | GMapping and Move_base can be used in the simulations: | ||

Revision as of 22:21, 26 June 2014

Group members

| Name | Id number | |

|---|---|---|

| Maurice van de Ven | m dot l dot j dot v dot d dot ven @ student dot tue dot nl | |

| Pim Duijsens | p dot j dot h dot duijsens @ student dot tue dot nl | |

| Glenn Roumen | g dot h dot j dot roumen @ student dot tue dot nl | 0753028 |

| Wesley Peijnenburg | w dot peijnenburg @ student dot tue dot nl | |

| Roel Offermans | r dot r dot offermans @ student dot tue dot nl |

Planning

| Week | Deadline | General plan | Groupmember | Specific task |

|---|---|---|---|---|

| 4 | 16-05-2014 | Complete Corridor Challenge | ||

| - - - - | - - - - - | - - - - - | - - - - - | - - - - - |

| 5 | 19-05-2014 | Research Maze Strategies and define modules | ||

| 19-05-2014 before 10:45 h. | Roel | Research possibilities for wall and corner detection with OpenCV | ||

| 19-05-2014 before 10:45 h. | Glenn | Research possibilities for wall and corner detection with OpenCV | ||

| 19-05-2014 before 10:45 h. | Wesley | Research possibilities for arrow detection with OpenCV | ||

| 19-05-2014 before 10:45 h. | Maurice | Research possibilities for path planning and path tracker/driver | ||

| 19-05-2014 before 10:45 h. | Pim | Research possibilities for path planning and path tracker/driver | ||

| - - - - | - - - - - | - - - - - | - - - - - | - - - - - |

| 6 | 26-05-2014 | Research functionality of ROS Packages: Gmapping and move_base | ||

| 28-05-2014 | Decide: use of packages or write complete new code | |||

| 30-05-2014 | Conceptual version of all modules are finished | |||

| - - - - | - - - - - | - - - - - | - - - - - | - - - - - |

| 7 | 09-06-2014 | Development of modules: | Cutting corners | Glenn |

| t.b.d. | Publishing and listening to arrow | Wesley/Maurice | ||

| t.b.d. | Exploration | (Pim/Glenn) | ||

| 09-06-2014 | OpenCV - local rectangles solver | Wesley/Maurice | ||

| 09-06-2014 | (OpenCV -) floodfill solver | Roel | ||

| - - - - | - - - - - | - - - - - | - - - - - | - - - - - |

| 8 | 13-06-2014 | Final presentation of the Maze Strategy | ||

| - - - - | - - - - - | - - - - - | - - - - - | - - - - - |

| 9 | 20-06-2014 | Maze Competition |

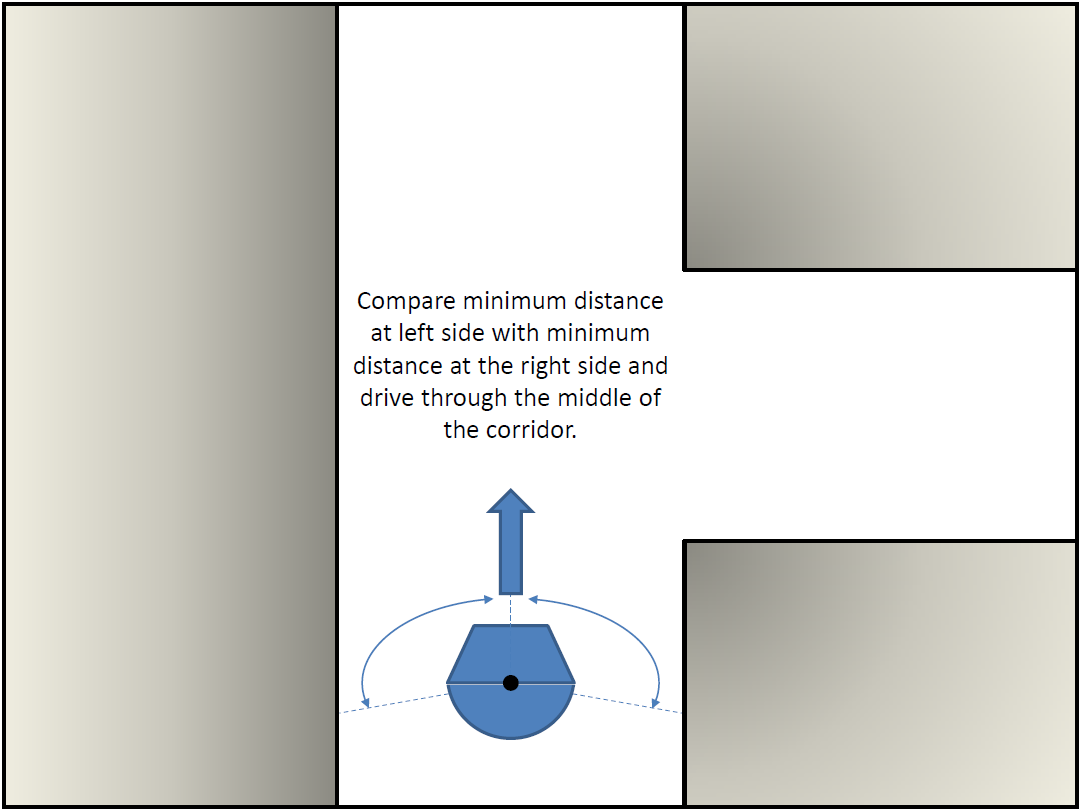

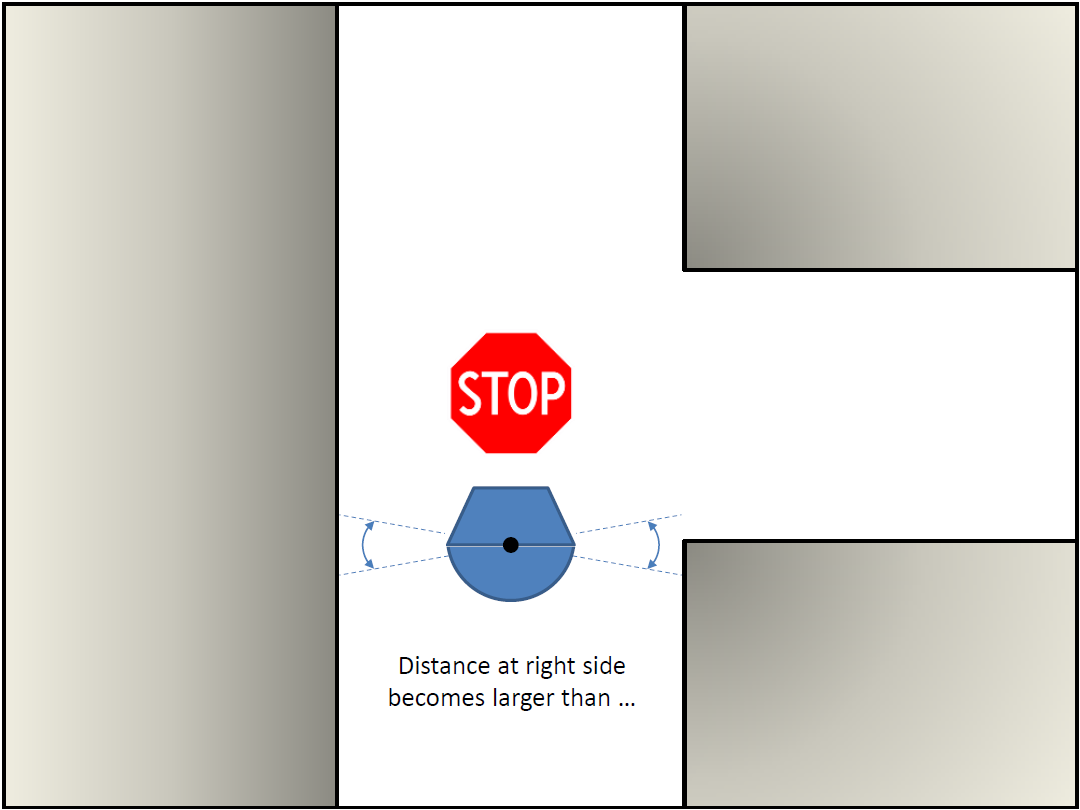

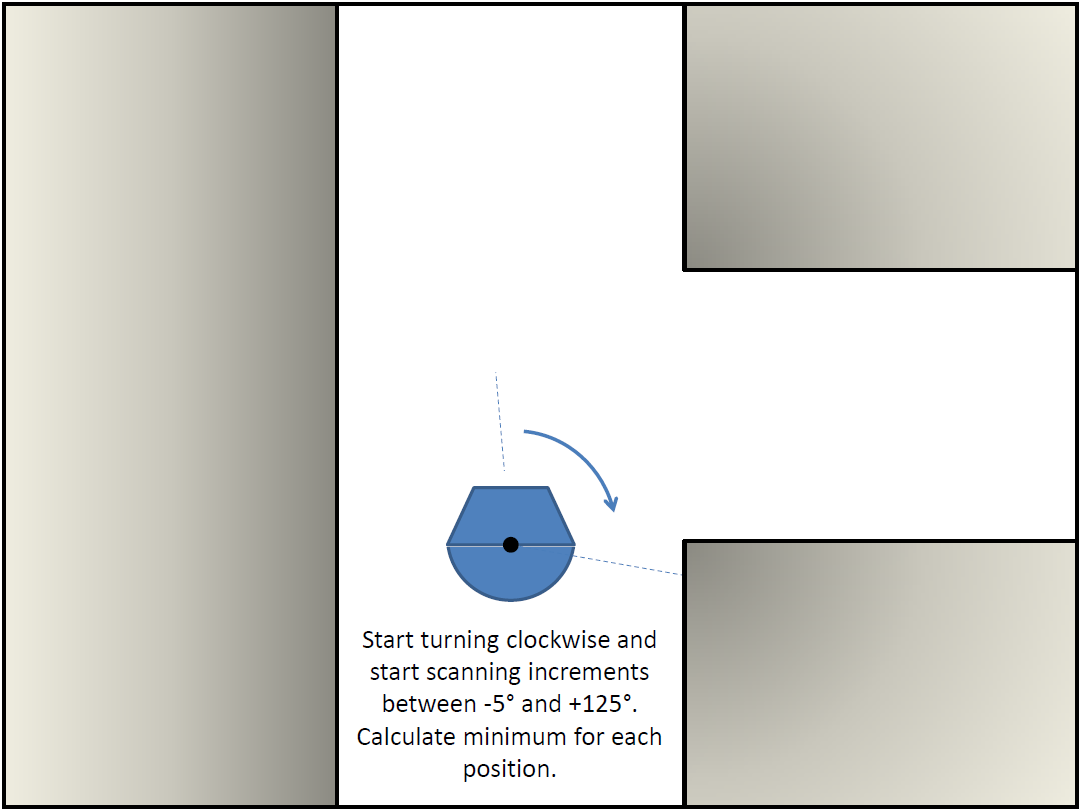

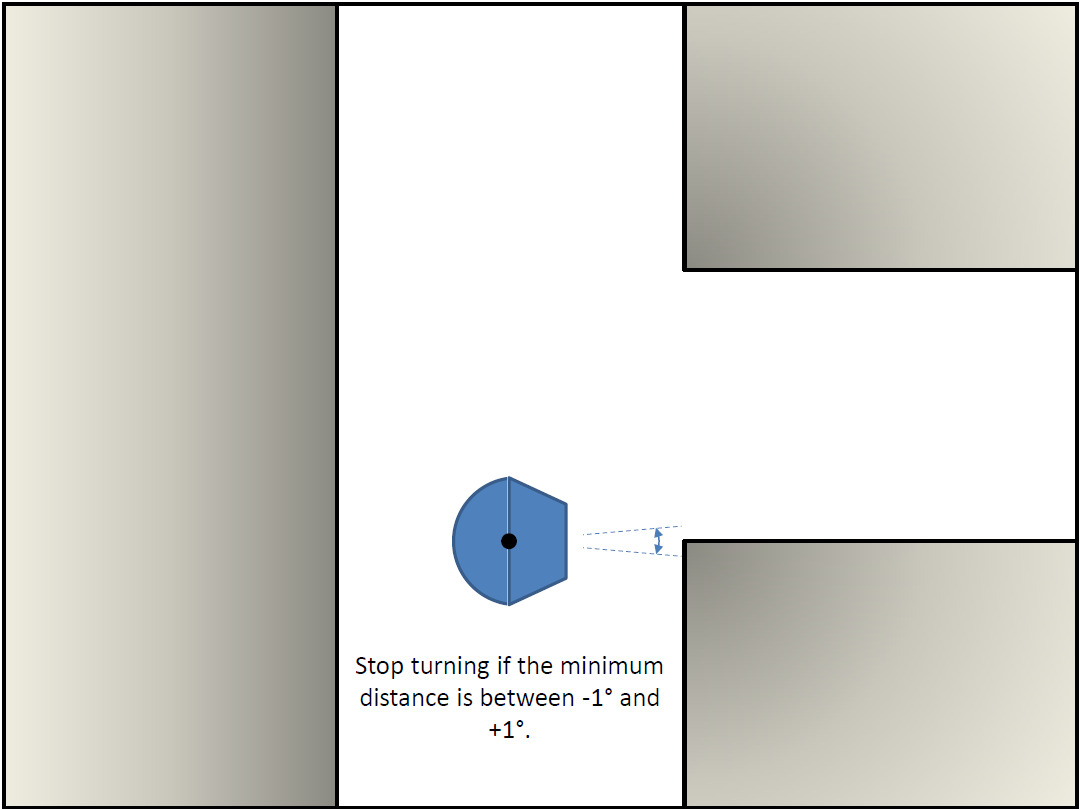

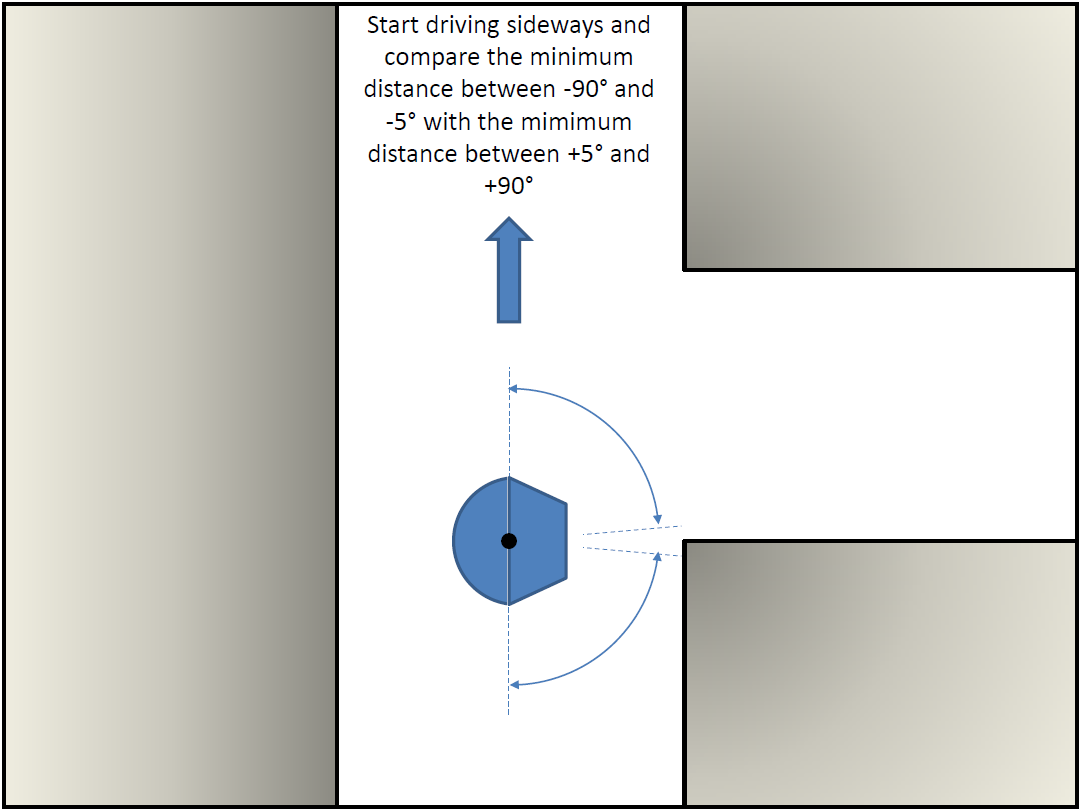

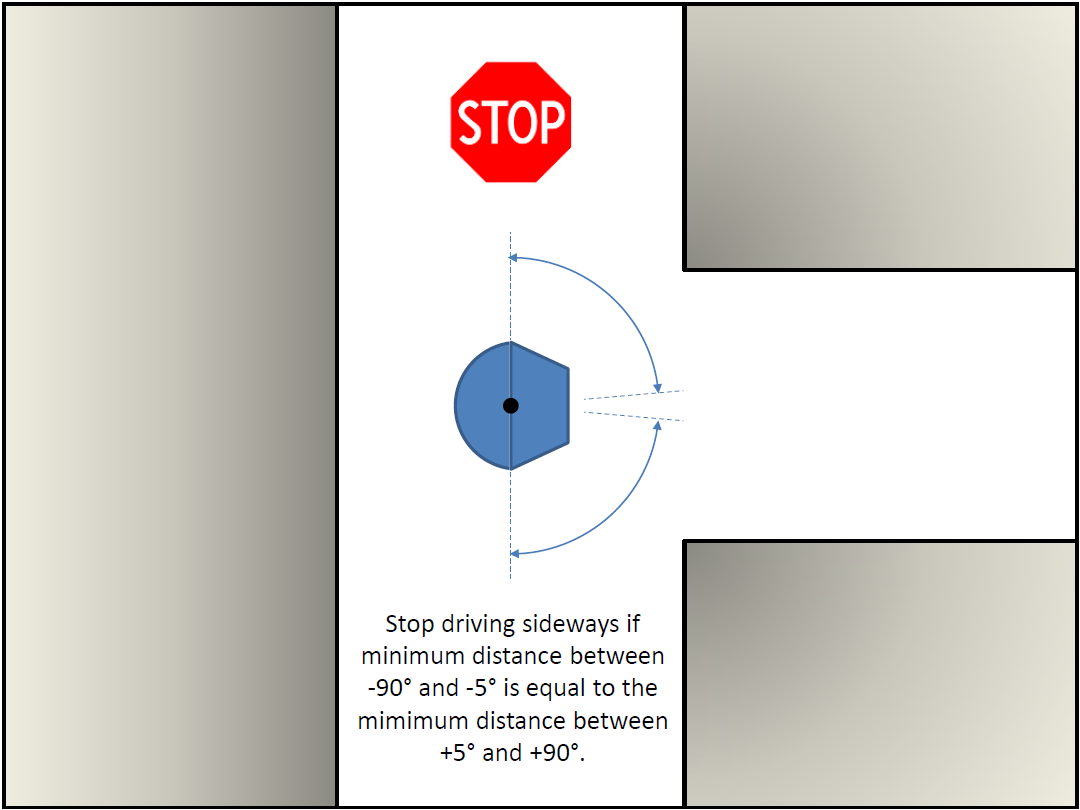

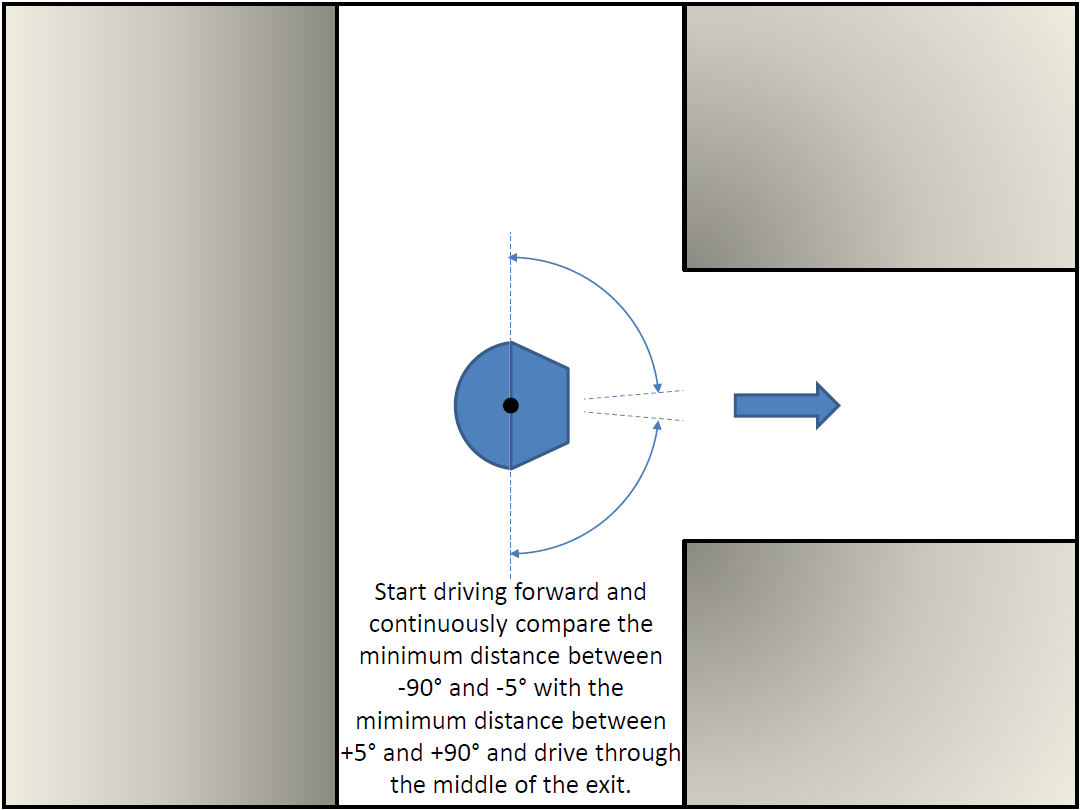

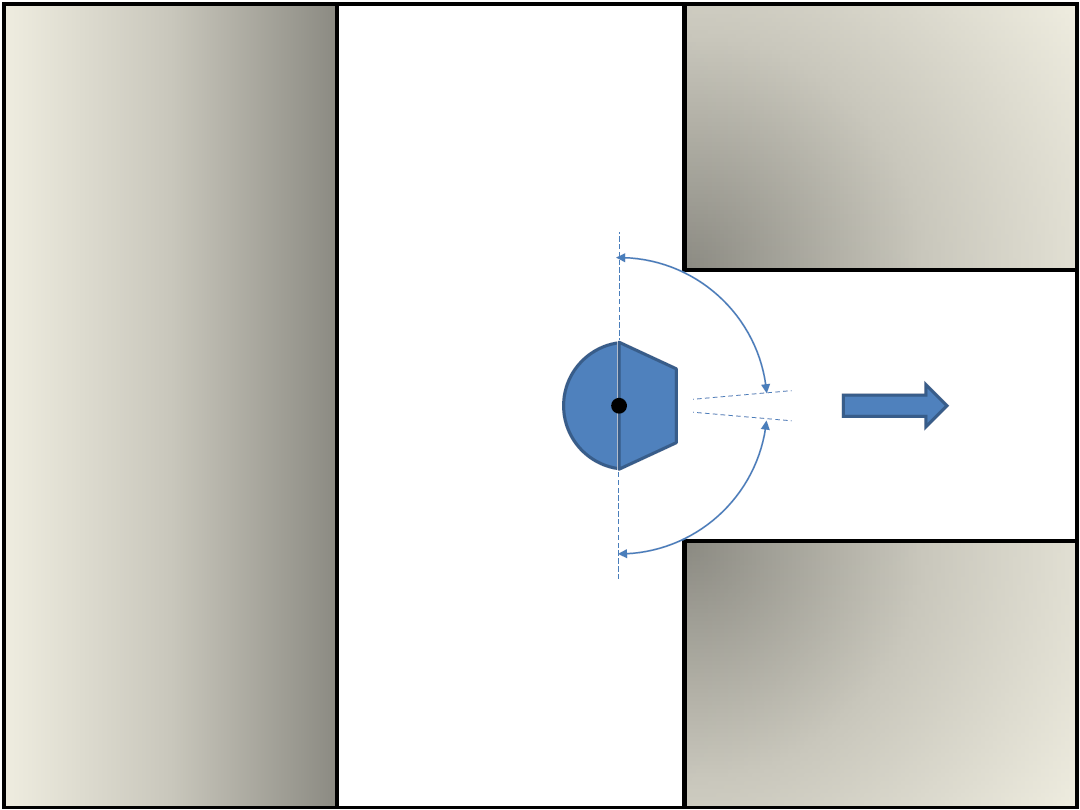

Corridor strategy

The provided safe_drive file is extended to a file which solves the corridor challenge for a left and right corridor. The strategy, stated below, is implemented and tested. From these tests, it can be concluded that the strategy works properly. Everything is designed and implemented in one single node. The disadvantages of this approach are that it is hard to maintain, hard to extend and teamwork becomes hard. Therefore, it is desired to use different nodes for the maze challenge.

Maze strategy

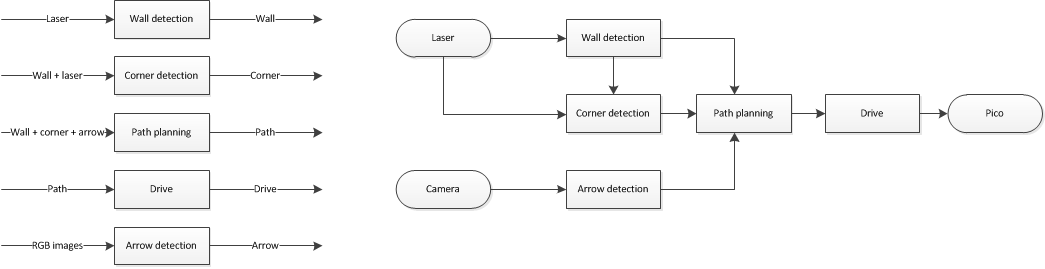

A choice is made to analyze two different approaches to solve the maze challenge. The maze can be solved by using different nodes and design each node from scratch, or by using existing ros packages. If the first method will be chosen, the different nodes and flow chart can be used as stated below.

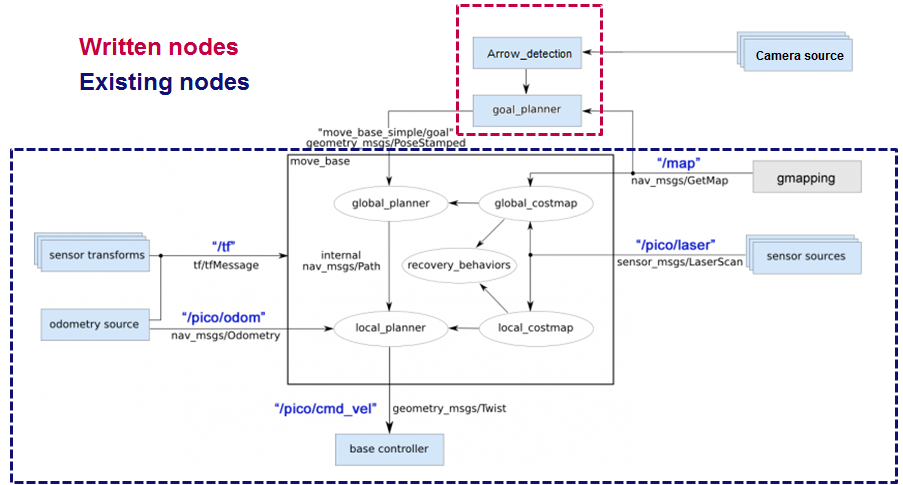

The choice is made to start with using existing ros packages. This is because it is known that ros packages work relatively well for different applications and we thought some packages would be very useful for our case. First, different ros packages are analyzed. The packages GMapping in combination with Move_base, and as an alternative the package Exploration are studied and implemented. Since, GMapping and Move_base only covers the mapping, localization and navigation of the pico, a goal planner has to be implemented. The node for the goal planner, the node for the arrow recognition and the integration of both nodes are created from scratch. A more detailed description of the packages and approach are stated in the next paragraph. The strategy is stated in the diagram below.

GMapping & Move_base

GMapping

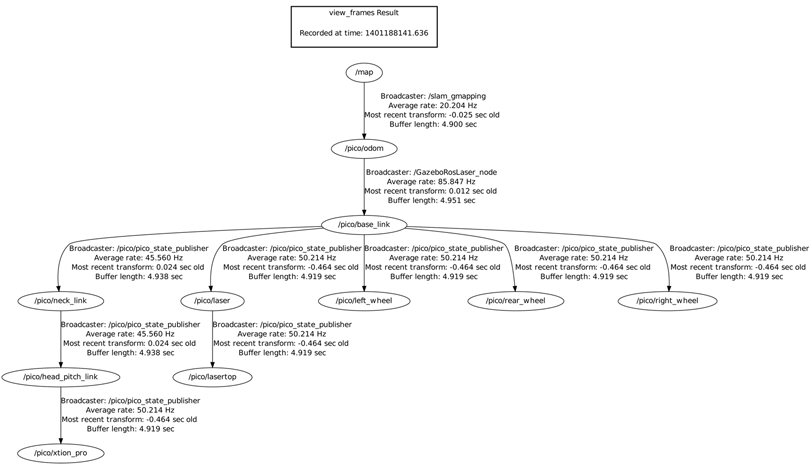

GMapping is being investigated as SLAM algorithm. The pico can, with this simultaneous localization and mapping (SLAM), make a map of an unknown environment and localize itself inside this map. The strategy is to combine the map and the position of the pico with a navigation stack and a goal planner. GMapping subscribes on /pico/laser and /tf, and publishes on /tf and /map.

In order to run GMapping correctly, a launch file gmap.launch is setup which initializes the correct settings. In this launch file the parameter base_frame is set as /pico/base_link, map_frame as /map and odom_frame as /pico/odom. Also, scan is remapped to /pico/laser. GMapping uses a transform from /map to /pico/odom and vice versa and from /pico/odom to /Pico/base_link and vice versa. To see how GMapping works, a GIF is presented below. The black pixels represent an obstacle, the light grey the known area and the dark grey the unknown area.

Move_base

As navigation stack, Move_base is used. Move_base provides an action that will attempt to reach a given goal with a mobile base. It makes use of a local and global planner in order to solve a global navigation task. Move_base also maintains a costmap for the global planner and a costmap for the local planner. These costmaps are used to accomplish navigation tasks.

A choice is made to use the base_local_planner inside the move_base stack. From incoming sensor data, angular and linear velocities of the pico are chosen properly by the local planner to traverse the segment of the global path. Trajectory Rollout is used as the local planner.

For the global planner, navfn is chosen. This makes use of Dijkstra's algorithm as explained in lecture

To launch the move_base stack, again a launch file is created which will initialize the correct settings. The move_base.launch file consist of the files listed below. It also performs a remap from /cmd_vel to /pico/cmd_vel.

costmap_common_params.yaml global_costmap_params.yaml local_costmap_params.yaml base_local_planner_params.yaml

The contents of these files are:

| costmap_common_params.yaml: | global_costmap_params.yaml: | local_costmap_params.yaml: |

|---|---|---|

obstacle_range: 2.5

raytrace_range: 3.0

inflation_radius: 0.35

robot_radius: 0.30

observation_sources: laser_scan_sensor

laser_scan_sensor: {sensor_frame: pico/laser, data_type: LaserScan, topic: /pico/laser, marking: true, clearing: true}

|

global_costmap: global_frame: /map robot_base_frame: /pico/base_link update_frequency: 5.0 static_map: true |

local_costmap: global_frame: /pico/odom robot_base_frame: /pico/base_link update_frequency: 5.0 publish_frequency: 2.0 static_map: false rolling_window: true width: 3.0 height: 3.0 resolution: 0.05 |

base_local_planner_params.yaml

TrajectoryPlannerROS: acc_lim_x: 2.5 acc_lim_y: 2.5 acc_lim_theta: 3.2 max_vel_x: 0.2 min_vel_x: 0.0 max_vel_theta: 1.0 min_vel_theta: -1.0 min_in_place_vel_theta: 1.0 escape_vel: -0.2 holomonic_robot: true y_vels: [-0.2, -0.0, 0.0, 0.2] yaw_goal_tolerance: 0.05 xy_goal_tolerance: 0.10 latch_xy_goal_tolerance: false sim_time: 2.0 sim_granularity: 0.005 angular_sim_granularity: 0.025 vx_samples: 5 vtheta_samples: 10 meter_scoring: true pdist_scale: 2.0 gdist_scale: 1.0 occdist_scale: 0.05 heading_lookahead: 0.325 heading_scoring: false heading_scoring_timestep: 0.8 dwa: false publish_cost_grid_pc: false global_frame_id: /pico/odom

While GMapping and move_base are running a tf tree can be made to see how the different frames are linked. For the chosen settings of GMapping and Move_base, this results in:

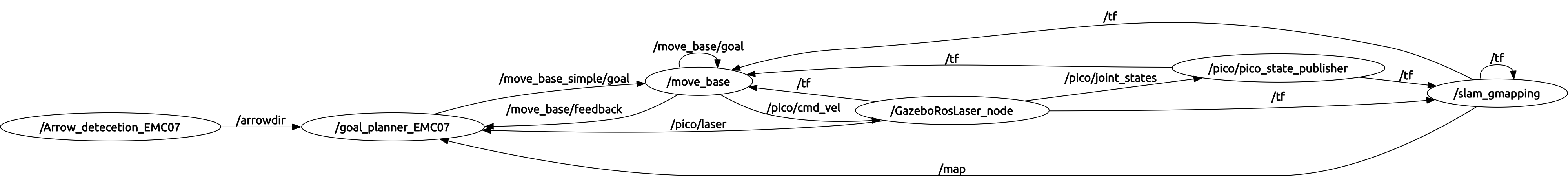

Using rqt_graph, the following graph is given:

Exploration

Explore_stage

An alternative for GMapping and Move_base, is the explore_stage package. This package is an algorithm that uses a map to find exits and tries to navigate to it. Which is useful in our case. In order to start this package, again a launch file is made. The same local and global costmap settings have been used as in the previous packages. To use explore_stage:

1. Get in the right directory:

$ roscd explore_stage

2. Launch the explore_stage launch file:

$ roslaunch explore_slam.launch

The following problems occur: when using RVIZ, it can be seen that a path is planned to an exit, but mose of the time the pico seems stuck. Using different settings for the inflation radius and the radius of pico won't solve this problem. It looks like there is a problem with move inside the explore_stage package. Also, when defining a new goal manually by RVIZ, it repeats saying that a node is added on the coordinates: X.X and X.X but the pico does nothing. It also repeats the following warning: Entropy has not been updated. Loop closure opportunities may be ignored.

Goal planning - OpenCV

In some cases, your script will not find the required include:

#include <move_base_msgs/MoveBaseActionFeedback.h> // Contains the location of pico

The solution (ubuntu command):

sudo cp -a /opt/ros/groovy/stacks/navigation/move_base_msgs/msg_gen/cpp/include/. /opt/ros/groovy/include/

Strategy

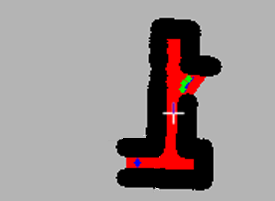

The image below shows strategy for goal planning in several steps.

1: - The laser data is converted to an image which can be processed with OpenCV.

2: - All BLACK pixels are inflated (Pico keeps distance from them) - Unknown areas are inflated to filter noise and remove areas that are unreachable. - A floodfill is used to mark all reachable area’s pink

3: - All pink pixels that have a grey neighbour are coloured green.

4:

- A binary is created, for green pixels only.

- This binary input is used to detect lines. The midpoint of each line will be our goal(s).

5:

- The final result is a set of goals (green dots) that are sent as output.

Recovery mode

If no valid goals are found, no path can be planned to a goal. Therefore pico will not move and no new goals will be planned. In this situation pico is stuck and a recovery is needed. If pico does not move for a certain time, pico will get in recovery mode. Pico will rotate at his current position. Rotating causes gmapping to update the map. From the updated map new valid goals can be found.

Arrow recognition - OpenCV

The OpenCV C++ files can be compiled using one of the given commands below. For the g++ command, the packages need to be specified manually.

$ g++ demo_opencv.cpp `pkg-config opencv --cflags --libs` -o demo_opencv

Or you can make have ROS find the libaries for you:

$ rosmake demo_opencv

Executing the program:

$ ./demo_opencv <argcount> <arg(s)>

Troubleshooting:

There have been problems with the OpenCV and Highgui libraries (static libraries). This problem can be solved by replacing the following code:

// #include "cv.h" // (commented out) #include "opencv2/opencv.hpp" // #include <highgui.h> // (commented out) #include <opencv/highgui.h>

The program might not always respond to input, this is can be due to a subscription to the wrong topic. Make sure you are streaming the images and check the ros-topic with:

rostopic list

Then the output gives "/pico/camera", "/pico/asusxtion/rgb/image_color", or a different name. Replace the referece in the following line of code:

ros::Subscriber cam_img_sub = nh.subscribe("/pico/asusxtion/rgb/image_color", 1, &imageCallback);

Runnig OpenCV on an image stream from a bag file:

In three separate terminals:

roscore rosbag play <location of bag file> ./demo_opencv

Arrow recognition strategy:

The image is cropped using:

cv::Rect myROI(120,220,400,200); // Rect_(x,y,width,height) cv::Mat croppedRef(img_rgb, myROI); // The window is 480 rows and 640 cols

The image is converted from red-green-blue (RGB) to hue-saturation-value (HSV) (picture 1). A double for- loop is used to convert every red-tinted pixel to black. A binary (two-colour) image is created based on the black and non-black colours (picture 2). This image is then blurred and an edge detection algorithm gives an output of the edges that it can find (picture 3).

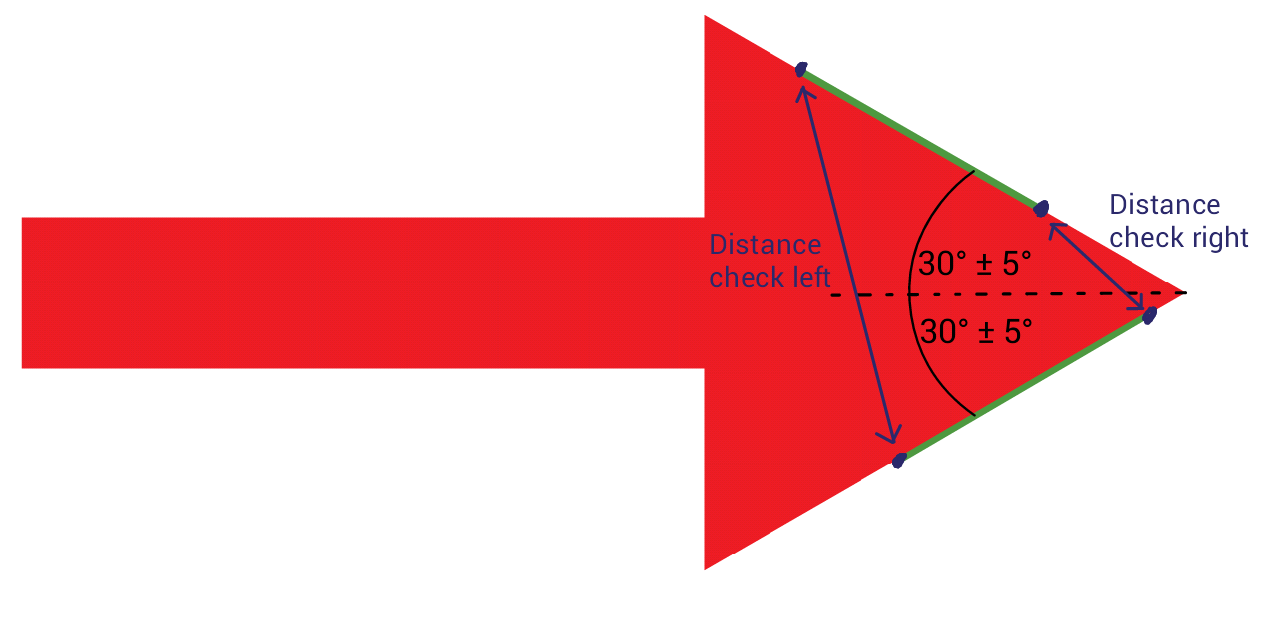

The list of edges is the basis for the arrow recognition, all lines are defined from left to right (so: x1 < x2). Lines are defined as follows: Vec4i line[x1,y1,x2,y2]. The lines are filtered, and the ones that remain are lines with a slope of +/-30 with a +/- 5 degrees margin. All lines with a negative slope are compared with all lines that have a positive slope. Both the +30 [deg] and the -30 [deg] line have a beginning and end point. If the left (begin) points are close enough to eachother (3 pixels), the output is "LEFT ARROW", and vice versa.

The image below shows the +/-30 [deg] lines which this algorithm focusses on:

Practical issues

General commands

Viewing a tf tree can be done by:

$ rosrun tf view_frames

GMapping and Move_base can be used in the simulations:

1. Start Gazebo:

$ gazebo

2. Using another terminal, spawn the maze:

$ rosrun gazebo_map_spawner spawn_maze

3. Launch PICO:

$ roslaunch pico_gazebo pico.launch

4. Start RViz to visualize how PICO perceives the world through its sensors.

$ rosrun pico_visualization rviz

5. Launch the gmapping plugin for PICO

$ roslaunch pico_2ndnav gmap.launch

6. Launch the move_base package for PICO to navigate through the maze with the preferred settings.

$ roslaunch pico_2ndnav move_base.launch

Setup Pico Experiment

Terminal 1:

ssh pico pico123 pstart

Terminal 2:

pico-core rviz rviz

Terminal 3:

ssh pico pico123 roscd gmapping **check if group 11 has gmapping if so delete gmapping map** cd .. rm -rf gmapping cd ros/groovy/emc/groups/emc07 svn up roscd pico_2ndnav roslaunch pico_2ndnav gmap.launch

Terminal 4:

ssh pico pico123 roscd pico_2ndnav roslaunch pico_2ndnav move_base.launch

Terminal 5:

ssh pico pico123 roscd goal_planner_roel2 rosmake goal_planner_roel2 ./bin/goal_xtc

Terminal 6:

ssh pico pico123 roscd demo_opencv_wesley rosmake demo_opencv_wesley ./bin/demo_xtc

Time Table

| Lectures | Group meetings | Mastering ROS and C++ | Preparing midterm assignment | Preparing final assignment | Wiki progress report | Other activities | |

|---|---|---|---|---|---|---|---|

| Week 1 | |||||||

| Pim | 2 | ||||||

| Roel | 2 | ||||||

| Maurice | 2 | ||||||

| Wesley | 2 | ||||||

| Glenn | 2 | ||||||

| Week 2 | |||||||

| Pim | 2 | 2 | 4 | 3 | |||

| Roel | 0 | 2 | 10 | 6 | |||

| Maurice | 2 | 2 | 6 | 3 | |||

| Wesley | 2 | 2 | 4 | 2 | |||

| Glenn | 2 | 2 | 5 | 2 | |||

| Week 3 | |||||||

| Pim | 2 | 1 | 12 | 2 | |||

| Roel | 2 | 4 | 10 | 2 | |||

| Maurice | 2 | 3 | 10 | 4 | |||

| Wesley | 2 | 3 | 10 | 1 | |||

| Glenn | 2 | 2 | 13 | 4 | |||

| Week 4 | |||||||

| Pim | 2 | 5 | 1 | 7 | 3 | ||

| Roel | 2 | 5 | |||||

| Maurice | 2 | 5 | 15 | ||||

| Wesley | 2 | 5 | 9 | 1 | |||

| Glenn | 2 | 5 | 6 | 1 | |||

| Week 5 | |||||||

| Pim | 2 | 2.5 | 7 | 0.5 | |||

| Roel | 2 | 2.5 | 6 | 1 | |||

| Maurice | 2 | 2.5 | 8 | 0.5 | |||

| Wesley | 2 | 5 | 7 | 3 | |||

| Glenn | 2 | 2.5 | 7 | ||||

| Week 6 | |||||||

| Pim | 4 | 6 | |||||

| Roel | 4 | 6 | |||||

| Maurice | 4 | 8 | |||||

| Wesley | 4 | 8 | 14 | ||||

| Glenn | 4 | 8 | 1 | ||||

| Week 7 | |||||||

| Pim | 2 | 2.5 | 10 | ||||

| Roel | 2 | 4 | 8 | 3 | |||

| Maurice | 2 | 4 | 9 | 2 | |||

| Wesley | 2 | 4 | 2 | 12 | |||

| Glenn | 2 | 2 | 8 | 5 | |||

| Week 8+9 | |||||||

| Pim | 0.5 | 21 | |||||

| Roel | 2.5 | 20 | 4 | ||||

| Maurice | 0.5 | 19 | |||||

| Wesley | 0.5 | 19 | |||||

| Glenn | 10 | 5 | |||||