Embedded Motion Control 2014 Group 6

Wout Laarakkers 0828580

Rik Boonen 0805544

Dhruv Khandelwal 0868893

Suraj Prakash 0870060

Hans Reijnders 0806260

Updates

- Include Bool action_done and Bool wall_close in topic drive for handshake (23 May)

Planning

Week 1 (2014-04-25 - 2014-05-02)

- Installing Ubuntu 12.04

- Installing ROS

- Following tutorials on C++ and ROS.

- Setup SVN

Week 2 (2014-05-03 - 2014-05-09)

- Finishing tutorials

- Interpret laser sensor

- Positioning of PICO

- Having our first meeting

Week 3 (2014-05-12 - 2014-05-16)

- Programming corridor competition

- Corridor competition

Week 4 (2014-05-19 - 2014-05-23)

- Creating basic structure for programming in ROS

- Planning

- Dividing tasks

* Drive node: Dhruv Khandelwal

* Decision node: Hans Reijnders, Rik Boonen

* Arrow node: Wout Laarakkers

- Programming individual parts

Week 5 (2014-05-26 - 2014-05-30)

- Programming individual parts

- Testing parts

- Integrating parts

Week 6 (2014-06-02 - 2014-06-06)

- Programming individual parts

- Testing parts

- Integrating parts

- Deadline for the nodes

Changes in group

Unfortunately, Suraj Prakash has decided to quit the course because he does not have enough time.

Corridor competition

Software design

Structure

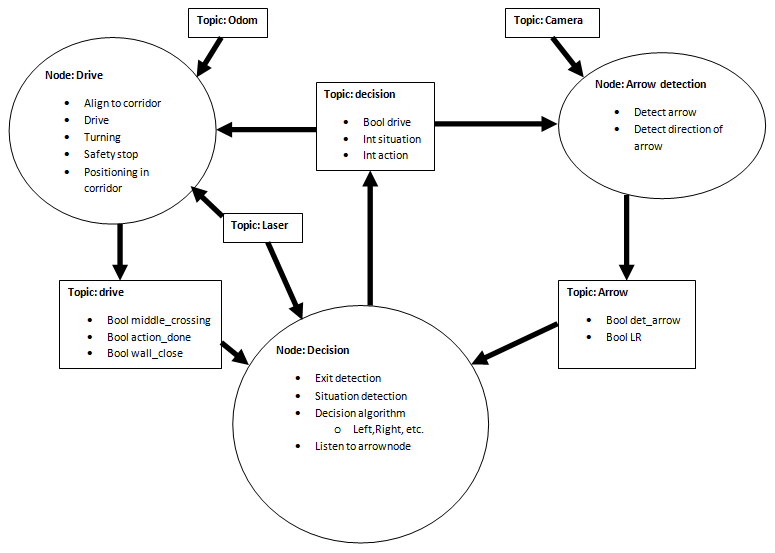

The first draft of the structure of nodes and topics is shown below.

The integers 'situation' and 'action' take values that correspond to different cases. These cases are defined in the tables below.

| Situation | Description |
|---|---|
| 1 | Detected corridor |
| 2 | Detected dead-end |
| 3 | Detected corner right |
| 4 | Detected corner left |
| 5 | Detected T-crossing (right-left) |
| 6 | Detected T-crossing (right-straight) |
| 7 | Detected T-crossing (left-straight) |
| 8 | Detected crossing |
| 9 | Unidentified situation |


| Action | Description |
|---|---|
| 1 | Stop |
| 2 | Straight |
| 3 | Right |
| 4 | Left |
| 5 | Turn around |
| 6 | Follow left wall |
| 7 | Follow right wall |
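The two tables above can be written down as named constants, so that the nodes do not have to pass around bare numbers. The following is only a sketch: the class and member names are our own choice for illustration, only the numeric values come from the tables.

```python
from enum import IntEnum


class Situation(IntEnum):
    """Values of the integer 'situation' (member names are illustrative)."""
    CORRIDOR = 1
    DEAD_END = 2
    CORNER_RIGHT = 3
    CORNER_LEFT = 4
    T_CROSSING_RIGHT_LEFT = 5
    T_CROSSING_RIGHT_STRAIGHT = 6
    T_CROSSING_LEFT_STRAIGHT = 7
    CROSSING = 8
    UNIDENTIFIED = 9


class Action(IntEnum):
    """Values of the integer 'action' (member names are illustrative)."""
    STOP = 1
    STRAIGHT = 2
    RIGHT = 3
    LEFT = 4
    TURN_AROUND = 5
    FOLLOW_LEFT_WALL = 6
    FOLLOW_RIGHT_WALL = 7
```

Because `IntEnum` members behave like integers, a value such as `Action.LEFT` can still be placed directly in an integer message field.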

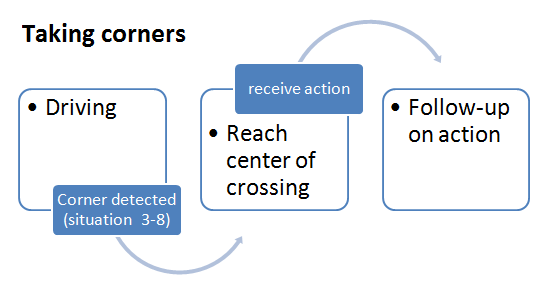

Taking corners

Decision node

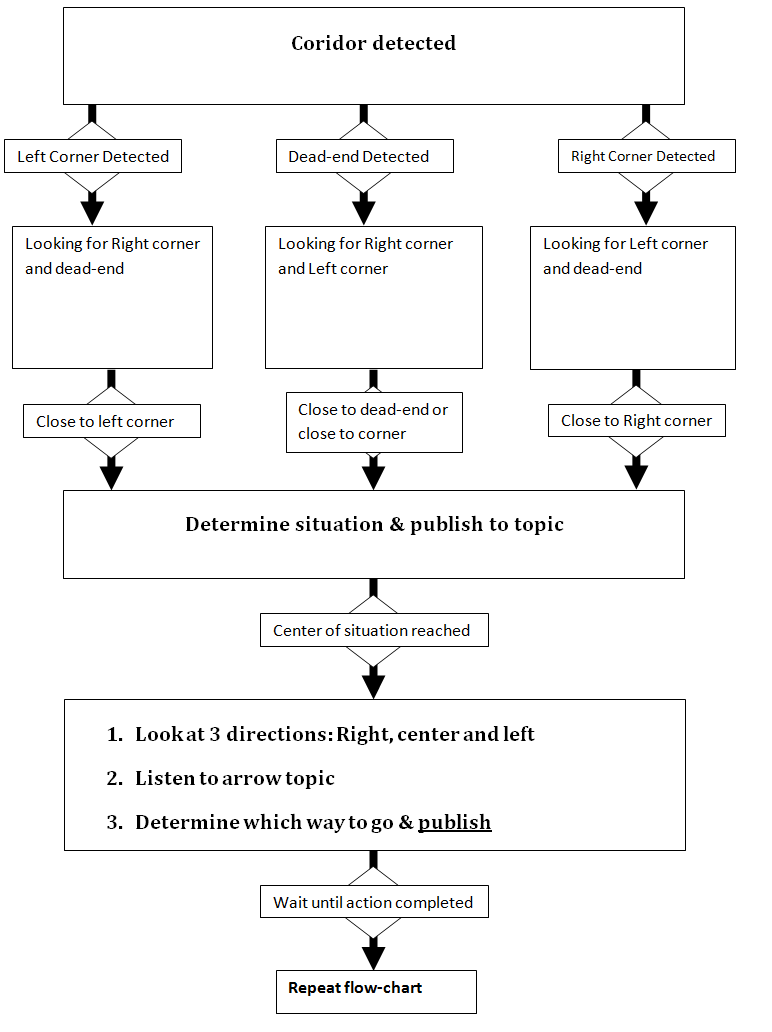

The decision node is designed to act like a navigation tool: it tells the drive node where to go. It does this by detecting which situation we are approaching (such as a left or right corner or a T-crossing), taking into account the information from the arrow node and the previous turns, and then telling the drive node which way to go.

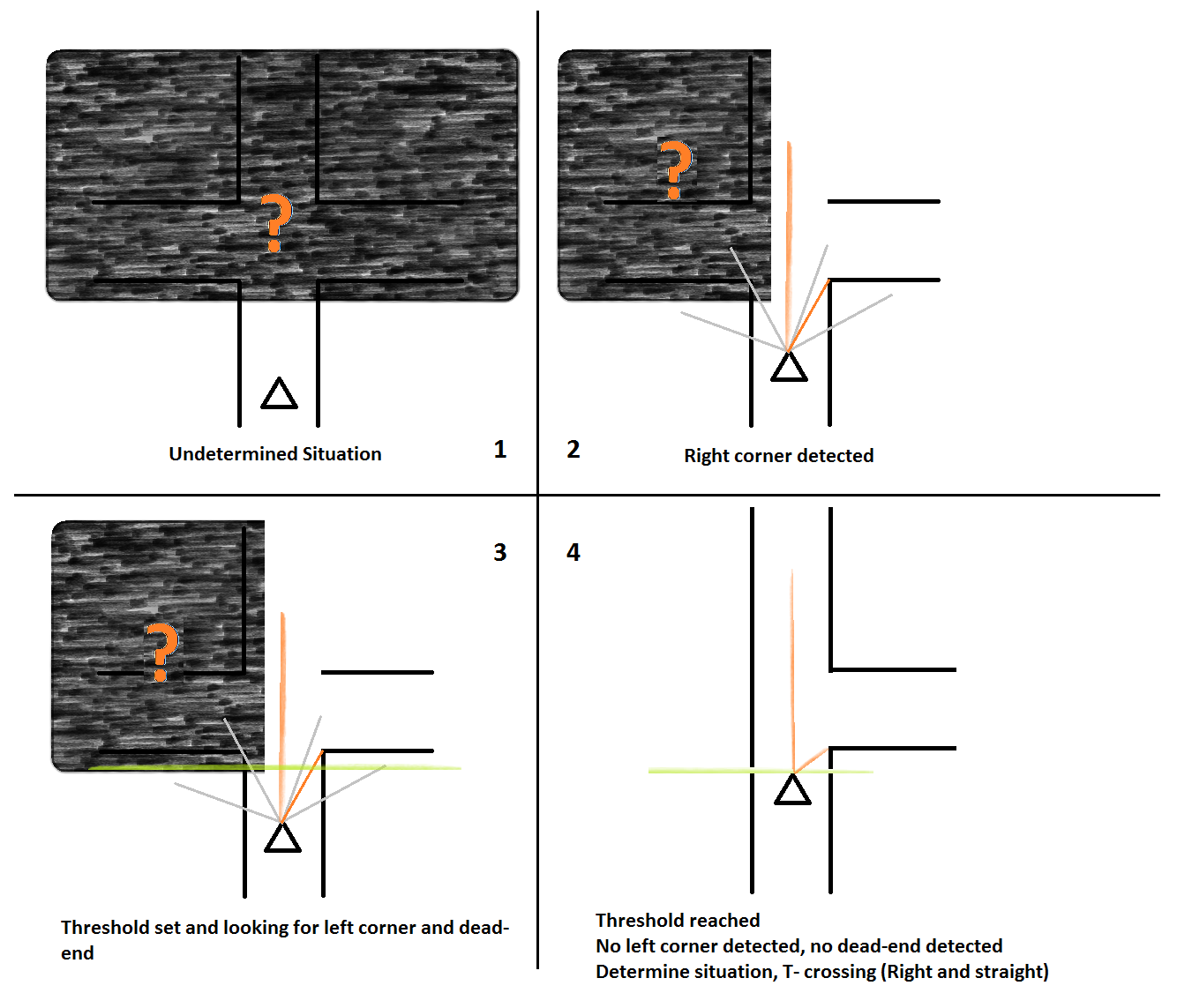

Situation determination

In order to determine which situation we are approaching, we want to know 3 things: is there a corner to the left, is there a corner to the right and can we go straight ahead?

We determine these 3 things using the laser data. Unfortunately, we will not always detect the corners and the dead end at the same time, so when we detect one of the three we drive to a fixed distance from the situation. While driving, we check whether we also find the other two characteristics of the situation. With this information we can determine the situation and publish it on the topic.
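As a sketch of the last step, the three answers can be mapped to a situation number with a small lookup. The mapping below is our reading of the situation names in the table, not the actual node code, and the function name `classify_situation` is hypothetical. We assume here that an answer that could not be determined is passed as `None` and leads to situation 9.

```python
def classify_situation(left_open, right_open, straight_open):
    """Map the three detection results to a situation number (1 to 9).

    Each argument is True/False, or None if it could not be determined.
    """
    answers = (left_open, right_open, straight_open)
    if any(a is None for a in answers):
        return 9  # unidentified situation

    table = {
        (False, False, True): 1,   # corridor
        (False, False, False): 2,  # dead-end
        (False, True, False): 3,   # corner right
        (True, False, False): 4,   # corner left
        (True, True, False): 5,    # T-crossing (right-left)
        (False, True, True): 6,    # T-crossing (right-straight)
        (True, False, True): 7,    # T-crossing (left-straight)
        (True, True, True): 8,     # crossing
    }
    return table[tuple(bool(a) for a in answers)]
```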

The flowchart shown below shows the steps in this algorithm, together with an example situation to clarify it.

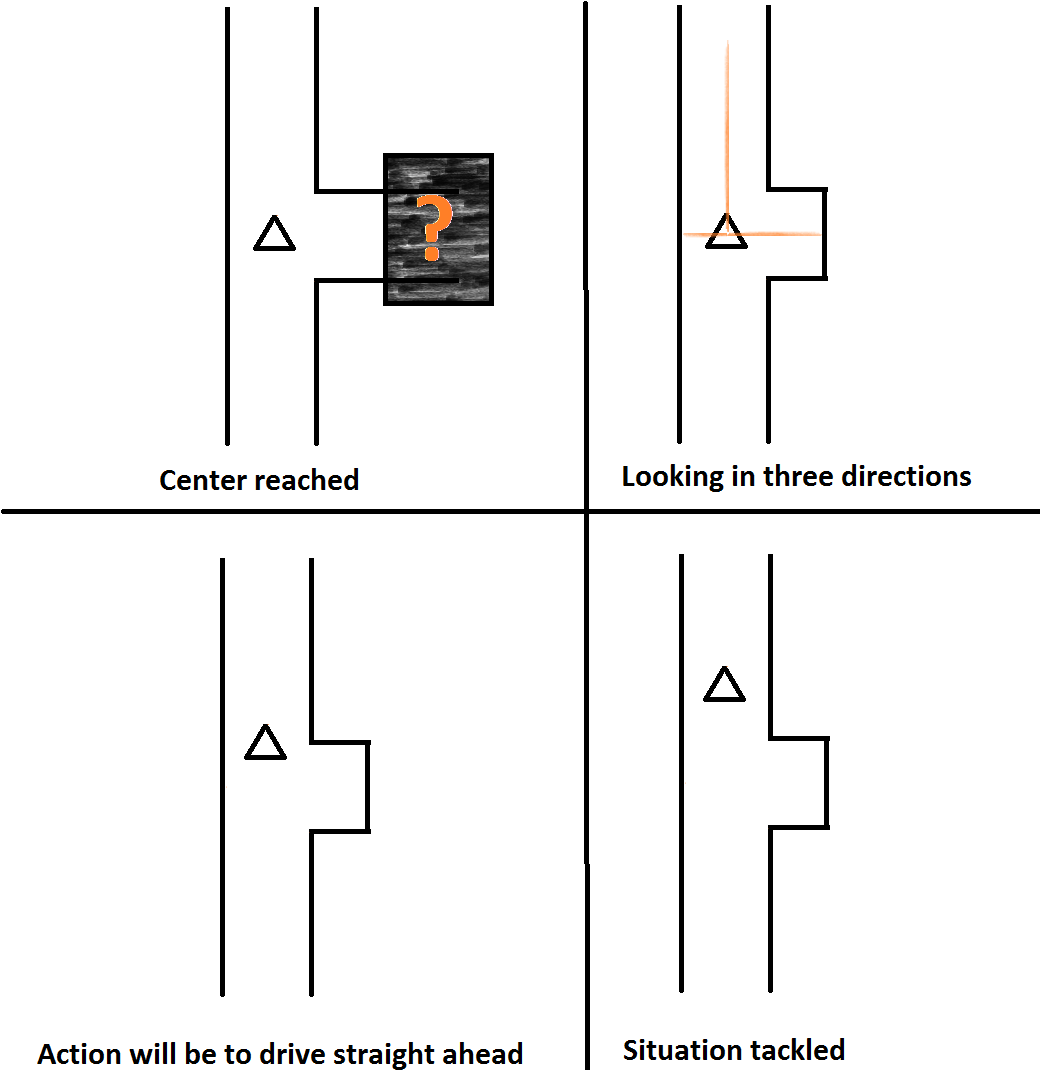

Action determination

Once we have published a situation, the drive node makes sure we drive to the center of the situation. When we are in the center, we determine whether any of the turns leads to a dead end, check whether there are any arrows, and then decide which way to go.

Flowchart

Example

Drive Node

The drive node is designed to operate like the driver of a car. The Decision node provides localized information about the current situation to the Drive node, which drives Pico to the centre of the situation. The Decision node can then make a better choice of the action required. After receiving the action from the Decision node, the Drive node performs the action, and then listens to the Decision node for the next situation.

The Drive node listens to the Decision topic, the Laserdata topic and the Odometry topic to perform its various functions. The Drive node consists of many small modular functions that perform specific tasks, such as driving straight with a specified velocity, performing a left turn, and so on. These small functions are called repeatedly and in an ordered fashion to perform the action suggested by the Decision node.

Reaching the middle of a corner/crossing

The Drive node includes a corner detection algorithm that allows it to drive Pico to the centre of a corner/crossing. However, while testing, we realized that detecting the corners alone was not sufficient to drive to the centre of a corner/crossing, due to the limited range of the laser data. Hence we included odometry data to make this action more reliable. Thus, the node uses laser data to look forward and odometry data to look back to reach the middle.

The Drive topic

The drive node publishes on the cmd_vel topic and the drive topic. The drive topic consists of the following flags:

- action_done

- wall_close

- middle_reached

These flags inform the Decision node of the position of Pico in the maze. The wall_close flag informs the Decision node that Pico is very close to a wall. The middle_reached flag informs the Decision node that Pico has reached the middle of the corner/crossing, and that the Decision node should make a decision on the action. The action_done flag informs the Decision node that Pico has completed the action and that the Decision node should start looking for the next situation again.

Safe Drive

The Drive node is also responsible for ensuring that Pico does not run into a wall. The node does this by checking all the laser data distances. If a wall is detected within 30 cm, all motion is stopped and Pico is subsequently aligned to the corridor and driven to the center of the corridor's width. All actions are then resumed.
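The distance check itself can be sketched as follows. This is a minimal illustration, not the code that runs on Pico: the function name and the `min_valid` cut-off are assumptions, the latter added because laser scanners often report zero or infinite values for invalid measurements.

```python
import math

SAFETY_DISTANCE = 0.30  # metres, the 30 cm limit mentioned above


def wall_too_close(ranges, safety_distance=SAFETY_DISTANCE, min_valid=0.01):
    """Return True if any valid laser range is below the safety distance.

    Readings that are not finite or are smaller than min_valid are treated
    as invalid and ignored (an assumption of this sketch).
    """
    valid = (r for r in ranges if math.isfinite(r) and r > min_valid)
    return any(r < safety_distance for r in valid)
```

When the function returns True, the drive node would stop, realign and recenter before resuming the action.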

Aligning

Our software structure relies heavily on the fact that Pico will be able to align itself when it is in a corridor. This allows better situation determination for the Decision node and also allows the drive node to drive Pico to the middle of a situation once the situation is determined. Hence, we decided to implement a two-fold alignment algorithm which aligns Pico parallel to the two walls of a corridor and also drives Pico to the lateral center of the corridor.

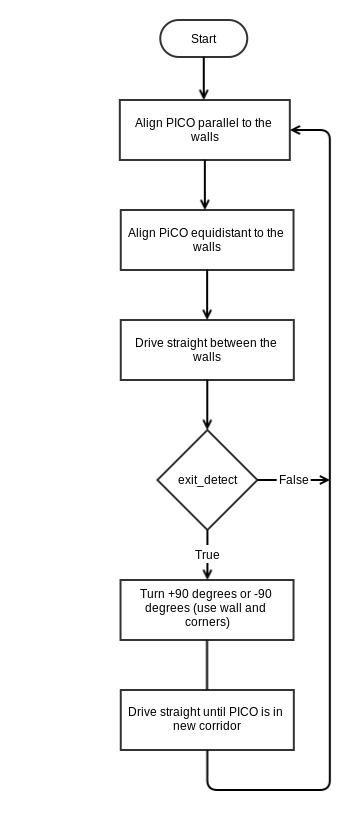

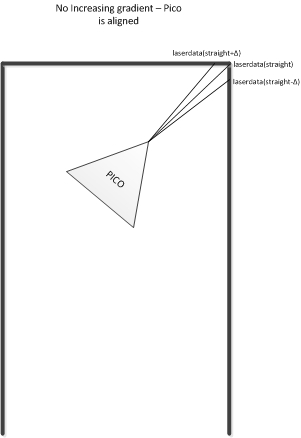

To align parallel to the walls, we implemented an algorithm similar to a gradient ascent algorithm. We use the laserdata values to calculate the gradient around the 0 degrees direction, and then turn Pico along the positive gradient until the laser range at 0 degrees reaches a maximum. The algorithm is illustrated in the figure below.
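One step of such a gradient-based alignment could look like the sketch below. It assumes that the laser ranges are ordered by increasing angle with the middle sample pointing straight ahead, and that a positive return value means turning towards increasing angles. The function name and the parameters `window`, `gain`, `max_rate` and `tolerance` are hypothetical.

```python
def alignment_turn_rate(ranges, window=5, gain=1.0, max_rate=0.5,
                        tolerance=0.01):
    """Return an angular velocity that turns towards increasing range.

    The gradient around 0 degrees is estimated with a central difference
    over `window` samples on each side of the middle sample.
    """
    if len(ranges) < 2 * window + 1:
        raise ValueError("not enough laser samples for the chosen window")

    centre = len(ranges) // 2
    gradient = (ranges[centre + window] - ranges[centre - window]) / (2 * window)

    if abs(gradient) < tolerance:
        return 0.0  # range ahead is (locally) maximal: stop turning

    # Turn along the positive gradient, limited to the maximum turn rate.
    return max(-max_rate, min(max_rate, gain * gradient))
```

Calling this function on every new laser scan and publishing the result as the angular velocity makes Pico rotate until the range straight ahead stops increasing.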

The algorithm described above turns Pico such that it faces the longest direction when it is in a corridor, and hence this algorithm fails if the error in Pico's direction is more than 90 degrees. However, we do not expect such a large error to occur during the maze competition. Another weakness of this algorithm is that when Pico is close to the end of the corridor, this algorithm will make Pico turn towards the corner of the corridor and not straight ahead, as shown in the figure below:

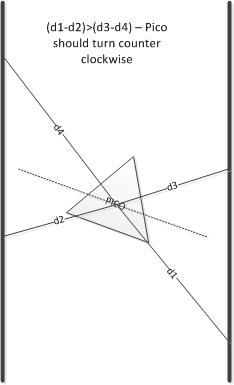

To correct this error, we implemented another algorithm which aligns Pico parallel to the walls by comparing the distances on the left and on the right of Pico. The algorithm is illustrated in the figure shown below:

To check if Pico is at a tilt compared to the walls of the corridor, we calculate the differences (d1-d2) and (d3-d4). If these differences have opposite signs, i.e. if (d1-d2)*(d3-d4)<0, Pico can be aligned further. To make the algorithm robust, some tolerance values were used for the comparison. While this method of aligning Pico worked in simulation, we decided not to include it in the software for the final maze competition as we did not get a chance to test this on the actual hardware setup.
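The sign test with a tolerance can be sketched as follows. We assume that d1 and d2 are two distances measured on one side of Pico and d3 and d4 the corresponding two on the other side; the function name and the tolerance value are hypothetical.

```python
def is_tilted(d1, d2, d3, d4, tolerance=0.02):
    """Return True if Pico can be aligned further.

    Differences smaller than `tolerance` (in metres) are treated as zero,
    so that sensor noise does not trigger a correction.
    """
    first_diff = d1 - d2
    second_diff = d3 - d4
    if abs(first_diff) < tolerance or abs(second_diff) < tolerance:
        return False
    return first_diff * second_diff < 0
```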

Aligning Pico to the center of the corridor is much simpler; we simply measure the distance to the left and right of Pico, and move Pico laterally until the distances are within a tolerance range of each other.
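A minimal sketch of this centering step is given below. It assumes that a positive lateral velocity moves Pico to the left; the function name, the tolerance and the speed are hypothetical values chosen for illustration.

```python
def lateral_velocity(dist_left, dist_right, tolerance=0.05, speed=0.1):
    """Return a sideways velocity that moves Pico towards the corridor centre.

    Positive values mean "move left" (an assumption of this sketch).
    """
    error = dist_left - dist_right
    if abs(error) <= tolerance:
        return 0.0  # close enough to the centre
    # More room on the left: move left. More room on the right: move right.
    return speed if error > 0 else -speed
```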

Before using these alignment techniques, the drive node first checks whether Pico is in a corridor as these algorithms will fail if Pico is in, for example, a corner or a crossing.

Function Description

A brief description of the functions created to perform the modular tasks is given below. The flow of logic in the node will be explained using these function names.

- align_parallel(): This function uses the laser data to align Pico parallel to the two walls of the corridor it is currently in. This function uses an adapted form of a gradient ascent algorithm.

- align_center(): Once Pico has been aligned parallel to the walls, this function is used to bring Pico to the center of the corridor.

- driveStraight(): This function drives Pico straight.

- turnAround(): This function turns Pico by 180 degrees. It integrates the change in orientation reported by the odometry until the total reaches pi.

- turnLeft(): This function turns Pico by 90 degrees to the left.

- turnRight(): This function turns Pico by 90 degrees to the right.

- reachMiddle_right(): This function allows Pico to reach the middle of a right hand corner. Here, a distinction has been made between a right hand corner and a right-straight T crossing. This function does not make use of corner detection.

- reachMiddle_right_T(): This function allows Pico to reach the middle of a T-crossing with a right exit. It makes use of corner detection.

- reachMiddle_left(): This function allows Pico to reach the middle of a left hand corner. Here, a distinction has been made between a left hand corner and a left-straight T crossing. This function does not make use of corner detection.

- reachMiddle_left_T(): This function allows Pico to reach the middle of a T-crossing with a left exit. It makes use of corner detection.

- stop(): This function stops Pico.

- detectCornersLeft(): This function detects corners on the left side of Pico, i.e. from 0 degrees to 120 degrees.

- detectCornersRight(): This function detects corners on the right side of Pico, i.e. from 0 degrees to -120 degrees.
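The odometry integration used by turnAround() can be sketched as below. The sketch assumes that the yaw angle is available in radians and wraps around at plus or minus pi, which is why the differences between consecutive samples are wrapped before they are summed. The helper names and the tolerance are hypothetical.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def accumulated_rotation(yaw_samples):
    """Sum the wrapped differences between consecutive yaw samples."""
    total = 0.0
    for previous, current in zip(yaw_samples, yaw_samples[1:]):
        total += wrap_angle(current - previous)
    return total


def turn_complete(yaw_samples, target=math.pi, tolerance=0.02):
    """Return True once the accumulated rotation has reached the target."""
    return abs(accumulated_rotation(yaw_samples)) >= target - tolerance
```

The same helpers would work for a 90 degree turn by passing `target=math.pi / 2`.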

Sequence of Actions

Based on the situation and the subsequent action received by the Drive node, the sequence of actions performed by the Drive node is shown in the table below.

| | Default | Action 1 | Action 2 | Action 3 | Action 4 | Action 5 |
|---|---|---|---|---|---|---|
| Situation 1 | alignParallel(), alignCenter() | stop() | driveStraight() | Error | Error | Error |
| Situation 2 | - | stop() | Error | Error | Error | turnAround() |
| Situation 3 | reachMiddle_right() | stop() | Error | turnRight(), finishTurn() | Error | turnAround() |
| Situation 4 | reachMiddle_left() | stop() | Error | Error | turnLeft(), finishTurn() | turnAround() |
| Situation 5 | reachMiddle_right_T() | stop() | Error | turnRight(), finishTurn() | turnLeft(), finishTurn() | turnAround() |
| Situation 6 | reachMiddle_right_T() | stop() | driveStraight() | turnRight(), finishTurn() | Error | turnAround() |
| Situation 7 | reachMiddle_left_T() | stop() | driveStraight() | Error | turnLeft(), finishTurn() | turnAround() |
| Situation 8 | reachMiddle_left_T() | stop() | driveStraight() | turnRight(), finishTurn() | turnLeft(), finishTurn() | turnAround() |
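One way to encode the action columns of this table is a nested lookup, where every combination that is not listed counts as an error. This is a sketch of the idea only: the function names are stored as strings taken from the table, and `steps_for` is a hypothetical helper.

```python
STOP = ["stop"]
STRAIGHT = ["driveStraight"]
RIGHT = ["turnRight", "finishTurn"]
LEFT = ["turnLeft", "finishTurn"]
AROUND = ["turnAround"]

# ACTION_STEPS[situation][action] -> names of the functions to call in order.
ACTION_STEPS = {
    1: {1: STOP, 2: STRAIGHT},
    2: {1: STOP, 5: AROUND},
    3: {1: STOP, 3: RIGHT, 5: AROUND},
    4: {1: STOP, 4: LEFT, 5: AROUND},
    5: {1: STOP, 3: RIGHT, 4: LEFT, 5: AROUND},
    6: {1: STOP, 2: STRAIGHT, 3: RIGHT, 5: AROUND},
    7: {1: STOP, 2: STRAIGHT, 4: LEFT, 5: AROUND},
    8: {1: STOP, 2: STRAIGHT, 3: RIGHT, 4: LEFT, 5: AROUND},
}


def steps_for(situation, action):
    """Return the function names for a situation/action pair.

    Raises ValueError for the combinations marked "Error" in the table.
    """
    try:
        return list(ACTION_STEPS[situation][action])
    except KeyError:
        raise ValueError(
            "action %r is not allowed in situation %r" % (action, situation)
        )
```

Keeping the table as data makes it easy to compare the code with the wiki table when a situation or action is added.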

Flowchart

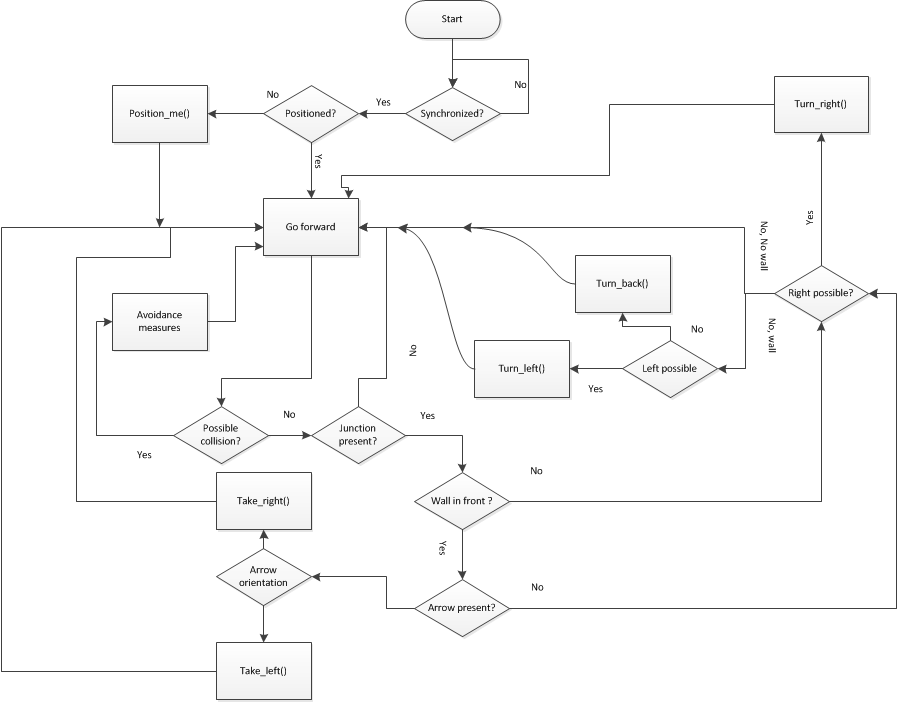

For better illustration, a flowchart depicting the flow of logic for the first 4 situations (corridor, dead-end, right corner and left corner) is shown below:

Arrow Node

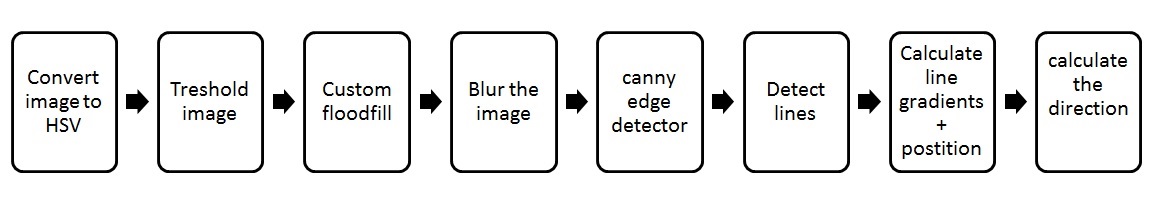

The arrow node detects an arrow and its orientation when asked by the information node. The information this node publishes then helps the decision node decide which turn to take.

Convert image to hsv

First, the RGB camera image is converted to an HSV image. This makes the following step easier.

Threshold image

The image is then thresholded so that the parts that are red in the RGB picture become white and the rest black, which gives a binary image.
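The conversion and thresholding steps can be illustrated with plain Python on a small image given as rows of (r, g, b) tuples. This sketch only shows the principle and is not the image processing code of the node. The threshold values are assumptions and would have to be tuned on real camera images. Note that red sits at both ends of the hue scale, so the hue test has to accept values close to 0 and close to 1.

```python
import colorsys


def is_red(r, g, b, hue_margin=0.05, min_saturation=0.5, min_value=0.3):
    """Return True if an 8-bit RGB pixel counts as red in HSV space."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    near_red_hue = h <= hue_margin or h >= 1.0 - hue_margin
    return near_red_hue and s >= min_saturation and v >= min_value


def threshold_red(image):
    """Turn rows of (r, g, b) pixels into a binary image (255 = red)."""
    return [[255 if is_red(*pixel) else 0 for pixel in row] for row in image]
```

Working in HSV lets the hue test stay the same when the lighting changes, which is harder to achieve with thresholds on the raw RGB values.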

Custom flood fill

A flood fill is used to fill in the small imperfections in the arrow and also to make the edges of the arrow smoother. This custom flood fill also removes isolated pixels that camera noise can leave in the image.

Blur the image

The binary image is blurred to make the edges even smoother for the next step.

Canny edge detector

A Canny edge detector is used to detect the outline of the arrow.

Detect lines

The lines are then detected using a Hough transform algorithm.

Calculate line gradients + position

The lines are filtered so that only the two leftmost and the two rightmost lines are kept. For these 4 lines the gradient is calculated with the following formula:

$$\nabla = \frac{y_{1} - y_{2}}{x_{1} - x_{2}}$$

By looking at the gradient and the position of the lines, the direction of the arrow can be determined.
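In code, the gradient of a line segment with end points (x1, y1) and (x2, y2) can be computed as in the sketch below. The guard for vertical lines is our addition, since the formula is undefined when both end points have the same x coordinate; the function name is hypothetical.

```python
import math


def line_gradient(x1, y1, x2, y2):
    """Return (y1 - y2) / (x1 - x2), or infinity for a vertical line."""
    dx = x1 - x2
    if dx == 0:
        return math.inf
    return (y1 - y2) / dx
```

Keep in mind that in image coordinates the y axis usually points downwards, so the sign of the gradient is mirrored compared to a normal plot.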

Calculate the direction

The steps above are repeated for 2 seconds, while counting how many times a left arrow, a right arrow, or no arrow was detected. From these counts it can be said whether there is an arrow and in which direction it points.
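The counting step can be sketched as a simple vote over the per-frame results. The labels "left", "right" and "none", the function name and the required share of votes are assumptions made for this example.

```python
from collections import Counter


def decide_arrow(detections, min_share=0.5):
    """Return "left", "right" or "none" from a list of per-frame results.

    A direction is only reported if it has more votes than the other
    direction and at least `min_share` of all frames agree with it.
    """
    total = len(detections)
    if total == 0:
        return "none"

    counts = Counter(detections)
    left, right = counts["left"], counts["right"]
    if left == right:
        return "none"

    best, votes = ("left", left) if left > right else ("right", right)
    return best if votes / total >= min_share else "none"
```

Requiring a minimum share of the frames keeps a few false detections from being reported as an arrow.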