Embedded Motion Control 2013 Group 3

Revision as of 13:11, 1 October 2013

Contact info

| Name | Student no. | E-mail |
|---|---|---|
| Vissers, Yorrick (YO) | 0619897 | y.vissers@student.tue.nl |
| Wanders, Matthijs (MA) | 0620608 | m.wanders@student.tue.nl |
| Gruntjens, Koen (KO) | 0760934 | k.g.j.gruntjens@student.tue.nl |
| Bouazzaoui, Hicham (HI) | 0831797 | h.e.bouazzaoui@student.tue.nl |
| Zhu, Yifan (YI) | 0828010 | y.zhu@student.tue.nl |

Meeting hours

Mondays 11:00 --> 17:00

Wednesdays 8:45 --> 10:30

Thursdays 9:00 --> 10:00 Testing on Pico

Meet with tutor: Mondays at 14:00

Planning

Mon 09 Sept:

- Finish installation of everything

- Go through the ROS (beginner) and C++ manuals

Wed 11 Sept:

- Finish the ROS and C++ manuals

- Start thinking about function architecture

Mon 16 Sept:

- Design architecture

- Functionality division

- Divide programming tasks

Thu 19 Sept:

- Finish "state stop" (Koen)

- Finish "drive_parallel" (Matthijs, Yorrick)

- Create a new "maze/corridor" in Gazebo (Yifan)

- Build the complete code and simulate it in Gazebo (Hicham)

- Testing with robot at 13:00-14:00

Fri 20 Sept:

- Finish "crash_avoidance"

- Coding "gap_detection" (Yifan)

- Coding "dead_end_detection" (Matthijs, Yorrick)

- Coding "maze_finished" (Koen, Hicham)

Mon 23 Sept:

- Finish "drive_parallel"

- Putting things together

- Testing with robot at 12:00-13:00 (failed because the network was down)

Unfortunately, we encountered major problems with the Pico robot because of a failing network. We discussed the approach for the corridor competition. At this point the robot is able to drive parallel through the corridor and can look for gaps on either the left or the right. When a gap is reached, the robot makes a smooth circle through the gap. All of this has been tested and simulated. For the corridor competition we will not check for dead ends: there is not enough time to implement this function before Wednesday 25 September, and the corresponding actions such as "turn around" won't be finished either. Without these functions we should still be able to pass the corridor competition successfully.

Tue 24 Sept:

- Testing with robot at 13:00-14:00

- Finding proper parameters for each condition and state

Wed 25 Sept:

- Finish clean_rotation

- Finish gap_handling

- Putting things together

- Corridor Challenge

Week 5:

- Design and simulate code

- Create a struct "laser_data" that processes the laser data. It contains the calculation of (Ma + Yo):

  - Shortest distance to the wall

  - Distance to the right wall with respect to Pico

  - Distance to the left wall with respect to Pico

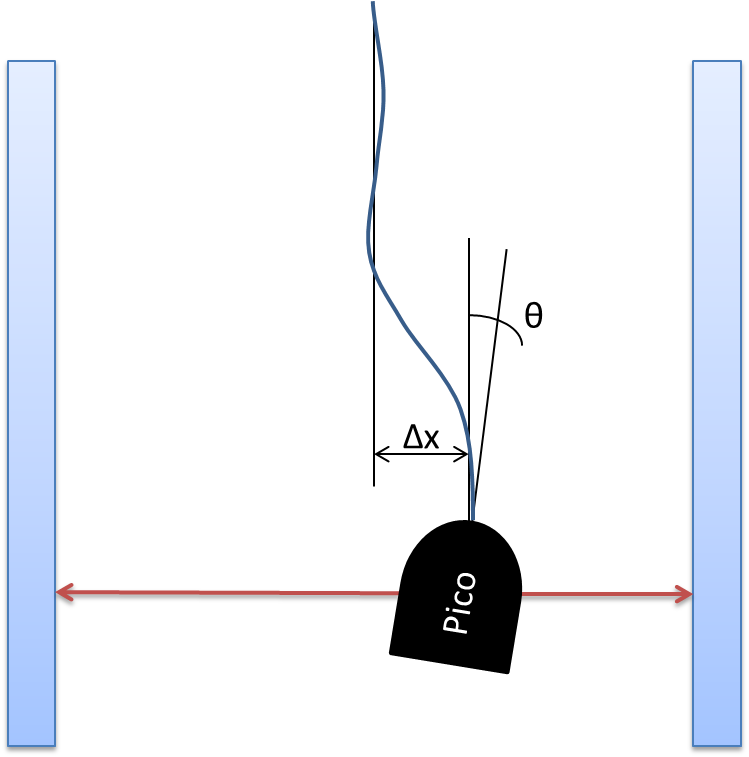

- Improve the state "drive_parallel" with a feedback controller based on the angle with respect to the right wall (Ma + Yo).

- Create the condition "dead_end". When a dead end is detected, switch to the state "turn_around" (Yi + Ko).

- Edit/improve the condition "gap_detect_left/right". Make this condition more robust (Yi + Ko).

- Start researching the properties of the camera of Pico (Hi).


- Testing on Pico (listed by priority)

1) Test the robustness of the feedback controller and gap_detection

2) Tune the translational and rotational speeds (make them faster)

3) Test detecting dead ends

4) Test strange intersections

Week 6:

- Design and simulate code

- Create the state "turn_around", which is entered when a dead end is detected. Pico must make a clean 180-degree rotation on the spot (Yi + Ko).

- Create the condition "finished". This is the condition when Pico exits the maze. Switch to the state "finalize" (Yi + Ko).

- Improve the priorities list. Pico needs to make smart decisions when multiple conditions occur. The priorities are, in order (Ma + Yo):

1) Turn right

2) Go straight

3) Turn left

- Continue researching the properties of the camera of Pico (Hi)

- Testing on Pico (listed by priority)

1) Test the state "turn_around"

2) Test the condition "finished"

3) Build a small maze containing a T-intersection and a dead end, and test the priorities (this can also be done properly in simulation)
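The right-hand priority order listed for Week 6 maps directly onto an ordered chain of checks. The sketch below is illustrative only: the enum `Decision`, the function `decide` and its three "open" flags are hypothetical names, not the group's code, and falling back to a turn-around when nothing is open follows the dead-end handling described above.

```cpp
// Hypothetical decisions available at a junction (illustrative names only).
enum class Decision { TurnRight, GoStraight, TurnLeft, TurnAround };

// Right-hand priority: prefer right, then straight, then left.
// If no direction is open, the robot is at a dead end and turns around.
Decision decide(bool right_open, bool front_open, bool left_open) {
    if (right_open) return Decision::TurnRight;
    if (front_open) return Decision::GoStraight;
    if (left_open) return Decision::TurnLeft;
    return Decision::TurnAround;
}
```

Because the checks are ordered, a T-junction that is open both to the right and straight ahead yields `TurnRight`, which is what keeps Pico on the right wall.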

Week 7:

- Design and simulate code

- Edit/improve the state "reset". This state handles the condition in which an object is detected close to Pico.

- Image processing: detect arrows on the wall (Hi).

- Testing on Pico (listed by priority)

1) Build a maze and solve it without using the camera.

2) Test camera

Week 8:

- Design and simulate code

- Finalize the code

- Image processing: detect arrows on the wall.

- Testing on Pico (listed by priority)

1) Build a maze and solve it using the camera.

2) Test camera

Progress

Week5

(MA & YO): Removed the scanning functionality from the states and conditions and put it in a separate function that returns a struct "Laser_data" containing information about wall locations, scanner range/resolution, etc. Tuned the gap_detection algorithm to make it more robust and made some minor changes to the decision tree (it now only checks the next condition if the previous one returns false).
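A possible shape for such a struct is sketched below. The field names, the helper `processScan`, and the choice to read the side distances from the beams at exactly -90 and +90 degrees are assumptions for illustration; the actual `Laser_data` may compute the wall distances differently (for example from the closest point on each side). Angles follow the ROS `sensor_msgs/LaserScan` convention, where beam i lies at `angle_min + i * angle_increment` and positive angles are to the left.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical layout of "Laser_data"; field names are assumptions.
struct LaserData {
    double angle_min;        // rad, angle of the first beam
    double angle_increment;  // rad between beams (resolution)
    double shortest;         // m, closest valid reading anywhere in the scan
    double right;            // m, reading of the beam at -90 degrees
    double left;             // m, reading of the beam at +90 degrees
};

// Builds LaserData from raw ranges. Non-positive readings are treated as
// invalid and ignored when searching for the shortest distance.
LaserData processScan(const std::vector<float>& ranges, double angle_min,
                      double angle_increment) {
    assert(!ranges.empty() && angle_increment > 0.0);
    const double kHalfPi = std::acos(0.0);
    // Reading of the beam nearest to `angle`, clamped to the scan.
    auto rangeAt = [&](double angle) {
        long i = std::lround((angle - angle_min) / angle_increment);
        if (i < 0) i = 0;
        if (i >= static_cast<long>(ranges.size())) i = static_cast<long>(ranges.size()) - 1;
        return static_cast<double>(ranges[static_cast<std::size_t>(i)]);
    };
    LaserData d{angle_min, angle_increment,
                std::numeric_limits<double>::infinity(), 0.0, 0.0};
    for (float r : ranges)
        if (r > 0.0f && r < d.shortest) d.shortest = r;
    d.right = rangeAt(-kHalfPi);  // negative angles are on the right (ROS)
    d.left = rangeAt(kHalfPi);
    return d;
}
```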

Week6

Week7

Week8

PICO useful info

- minimal angle = -2.35739 rad

- maximal angle = 2.35739 rad

- angle increment = 0.00436554 rad

- scan.ranges.size() = 1081
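Taking the first beam at -2.35739 rad and the last at +2.35739 rad, the angle of beam i is `angle_min + i * angle_increment` (the `sensor_msgs/LaserScan` convention). As a check, 1080 × 0.00436554 ≈ 4.71478 rad ≈ 2 × 2.35739 rad, so the 1081 beams span about 270 degrees and beam 540 points straight ahead. The helpers `beamAngle` and `beamIndex` below are a hypothetical sketch of this conversion, not part of the group's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Scanner parameters from the list above (radians). The first beam is
// assumed to lie at -2.35739 rad, i.e. on the robot's right-hand side.
constexpr double kAngleMin = -2.35739;
constexpr double kAngleIncrement = 0.00436554;
constexpr std::size_t kNumBeams = 1081;

// Angle (rad) of beam i relative to the robot's heading; positive is left.
double beamAngle(std::size_t i) {
    assert(i < kNumBeams);
    return kAngleMin + static_cast<double>(i) * kAngleIncrement;
}

// Index of the beam closest to `angle` (rad), clamped to the valid range.
std::size_t beamIndex(double angle) {
    long idx = std::lround((angle - kAngleMin) / kAngleIncrement);
    if (idx < 0) idx = 0;
    if (idx > static_cast<long>(kNumBeams) - 1) idx = static_cast<long>(kNumBeams) - 1;
    return static_cast<std::size_t>(idx);
}
```

For example, `beamIndex(0.0)` gives 540 (straight ahead), while the beams at -90 and +90 degrees are at indices 180 and 900.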

Strategy

For navigating through the maze we use a "wall follower" strategy: the robot always sticks to the right wall, which, as long as the walls of the maze are all connected, guarantees that it finds its way to the exit. At a later stage we will extend this basic strategy with extra features, such as using the camera to detect instructions. The main robot controller can be in six states, depending on the conditions. The conditions are:

- Dead_end_detection

  - Input(s): (sensor_msgs) LaserScan_data

  - Output(s): (Bool) dead_end_detect

  - The flag "dead_end_detect" is set when the robot measures a dead end in front.

- Gap_detection

  - Input(s): (sensor_msgs) LaserScan_data

  - Output(s): (Bool) gap_detect

  - The flag "gap_detect" is set to true when the robot is located at the center of the gap. Only gaps on the right-hand side are noticed.

- Crash_avoidance

  - Input(s): (sensor_msgs) LaserScan_data

  - Output(s): (Bool) chrash_detect

  - The flag "chrash_detect" is set when the robot measures an object closer than 30 centimeters anywhere in the laser range.

- Maze_finished

  - Input(s): (sensor_msgs) LaserScan_data

  - Output(s): (Bool) finalize

  - This flag is set to true when the robot has exited the maze and detects no object closer than 2 meters around it.
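As an example of such a condition function, the Crash_avoidance check can be sketched as follows. The `Scan` struct is a hypothetical stand-in for the relevant fields of `sensor_msgs::LaserScan`, so the example compiles without ROS, and `crashDetect` is an illustrative name. Skipping readings outside `[range_min, range_max]` is an assumption about invalid beams, in line with the LaserScan message documentation, which says such values should be discarded.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for the sensor_msgs::LaserScan fields used here.
struct Scan {
    float range_min;            // m, smallest valid reading
    float range_max;            // m, largest valid reading
    std::vector<float> ranges;  // m, one reading per beam
};

// Crash_avoidance sketch: true when any valid reading over the entire
// laser range is closer than `threshold` (0.30 m by default).
bool crashDetect(const Scan& scan, float threshold = 0.30f) {
    assert(threshold > 0.0f);
    for (float r : scan.ranges) {
        if (r < scan.range_min || r > scan.range_max) continue;  // invalid beam, skip
        if (r < threshold) return true;
    }
    return false;
}
```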

Each of these functions sets its flag when the robot is in the corresponding condition; all of them are based on the data of the laser scanner.

The flag that is set determines which condition the robot is in, and therefore which state it switches to.

The six states are:

- state_drive_parallel: Keep the robot at a fixed distance (setpoint) from the right wall while driving.

- state_gap_handling: When arriving at the center of a gap (to the right), rotate in place until parallel with the new right wall.

- state_stop: Stops the robot when necessary.

- state_finalize: When no longer between two walls and no maze in front, abort.

- state_turn_around: When at a dead end, turn around.

The state functions are called according to the flag and determine the linear and rotational velocities. We will use one node that "spins", subscribes to the laser data and publishes the velocities.

The figure below depicts the simplified architecture of the program at this point.

Design approach & conventions

- datastructures.h: put all global variables and data structures here.

- states: each contains the functionality of a state.

- conditions: each checks a certain condition to determine the next state.

- theseus is the name of the package/node to execute.

- theseus_controller is the controller that is iterated. In each iteration it:

  1) gathers the conditions, e.g. crash_avoidance and gap_detection;

  2) chooses and executes a state based on this information;

  3) publishes the velocities.
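The Week 5 rule "only check the next condition if the previous one returns false" can be expressed as an ordered list of (condition, state) rules that is evaluated lazily. This is a hypothetical sketch: `Rule`, `selectState` and the `State` enum are illustrative names, and since the real checking order is not documented here, the order of the rules is left to the caller.

```cpp
#include <functional>
#include <utility>
#include <vector>

// Illustrative state names mirroring the states listed above.
enum class State { Stop, Finalize, TurnAround, GapHandling, DriveParallel };

// A condition check paired with the state it triggers.
using Rule = std::pair<std::function<bool()>, State>;

// Evaluates the rules in order and returns at the first condition that
// holds, so later checks are never run. Defaults to drive_parallel.
State selectState(const std::vector<Rule>& rules) {
    for (const auto& rule : rules)
        if (rule.first()) return rule.second;
    return State::DriveParallel;
}
```

A rule list such as Crash_avoidance → Stop, Maze_finished → Finalize, Dead_end_detection → TurnAround, Gap_detection → GapHandling would put safety first; that ordering is an assumption, not taken from the group's code.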

Corridor Competition

Conditions

Crash avoidance:

Finished:

When Pico exits the maze, the condition "Finished" is reached. The following steps are carried out to check whether Pico is in this condition:

- Read out the laser data from -90 to +90 degrees with respect to the robot.

- Check whether all distances are larger than 2 meters. If so, the maze is finished!
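The two steps of the Finished check can be sketched as a single function. `mazeFinished` is a hypothetical name. It assumes that beams with no return are reported as large values (if the scanner reported them as 0, open space would wrongly count as an obstacle), and it returns false when no beam falls inside the ±90 degree sector.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// True when every beam between -90 and +90 degrees (relative to the
// robot's heading) reads more than `clearance` meters.
bool mazeFinished(const std::vector<float>& ranges, double angle_min,
                  double angle_increment, double clearance = 2.0) {
    assert(angle_increment > 0.0);
    const double kHalfPi = std::acos(0.0);
    bool any_beam = false;
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double angle = angle_min + static_cast<double>(i) * angle_increment;
        if (angle < -kHalfPi || angle > kHalfPi) continue;  // outside the front half
        any_beam = true;
        if (ranges[i] <= clearance) return false;  // something within 2 m
    }
    return any_beam;
}
```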

Gap detection left:

Gap detection right:

This condition is the same as gap detection left, but for the right side.

Dead end detection:

Arrow detection:

States

Reset:

Final:

The state Final is called when Pico satisfies the condition Finalize. This state ensures that Pico stops moving by sending zero translational and rotational velocities to the wheels.

Gap handling:

drive parallel:

Drive parallel is the default state and is called when all other conditions return false. In this state a setpoint is calculated as the midpoint between the closest points to the left and right of Pico. We define the deviation from this setpoint as the error, and the current rotation as theta, both relative to the closest points on the left and right. With these definitions, seven situations are distinguished that require different velocities (translational and rotational) to converge to the setpoint quickly and stably. This is currently done with fixed velocity values tuned on Pico, but it will be extended with feedback on the error and theta for better performance.
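The planned feedback version could look like the proportional controller below. This is a sketch under stated assumptions, not the controller tuned on Pico: the gains, speed limits and slow-down rule are placeholder values; the error is taken as (d_left - d_right)/2, i.e. how far the midpoint setpoint lies to the left; and theta is estimated from the angles of the closest left and right points, which lie at +90 and -90 degrees when Pico is parallel to the walls. This gives theta = -(a_left + a_right)/2, with positive theta meaning Pico is rotated counterclockwise (to the left).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Velocity {
    double linear;   // m/s
    double angular;  // rad/s, positive = counterclockwise (left)
};

// Hypothetical proportional drive_parallel controller (placeholder gains).
// d_left, d_right: distances (m) to the closest points left and right.
// a_left, a_right: beam angles (rad) of those points, left positive.
Velocity driveParallel(double d_left, double d_right, double a_left, double a_right,
                       double k_e = 1.0, double k_theta = 1.5,
                       double v_max = 0.2, double w_max = 0.5) {
    assert(d_left > 0.0 && d_right > 0.0);
    // Error: how far the setpoint (midway between the walls) lies to the left.
    const double error = (d_left - d_right) / 2.0;
    // Heading relative to the walls; 0 when the closest points are at +/-90 deg.
    const double theta = -(a_left + a_right) / 2.0;
    // Steer toward the setpoint and against the heading error, then saturate.
    double w = k_e * error - k_theta * theta;
    w = std::max(-w_max, std::min(w, w_max));
    // Drive slower while the heading error is large; stop translating at 0.5 rad.
    const double v = v_max * std::max(0.0, 1.0 - std::fabs(theta) / 0.5);
    return {v, w};
}
```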

The 7 situations are visualised here:

Turn around:

Pico is switched to this state when a dead end is detected. In this state Pico makes a clean 180-degree rotation on the spot. The rotation is done by a very simple open-loop function: we set a specific angular velocity and wait a number of iterations until the desired rotation is reached. No feedback is used to compensate for slip, friction, etc. In terms of accuracy this is not the best solution, but the small error due to slip and friction will be handled by the state drive_parallel, which does contain a feedback controller.
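The number of iterations to wait follows from the angle, the angular velocity and the loop rate: n = angle / (omega × dt). For example, at an assumed 0.5 rad/s and a 10 Hz loop, a 180-degree (π rad) turn takes π / (0.5 × 0.1) ≈ 62.8, i.e. about 63 iterations. The sketch below (`turnIterations` and the `TurnAround` counter are hypothetical names) implements this open-loop scheme and, like the description above, does not compensate for slip or friction.

```cpp
#include <cassert>
#include <cmath>

// Iterations needed to rotate `angle` rad at a constant `omega` (rad/s)
// when the controller loop runs at `rate_hz`. Open loop: no slip correction.
int turnIterations(double angle, double omega, double rate_hz) {
    assert(omega > 0.0 && rate_hz > 0.0);
    return static_cast<int>(std::lround(std::fabs(angle) / omega * rate_hz));
}

// Hypothetical turn_around state: call step() once per controller iteration.
struct TurnAround {
    int remaining;  // iterations left to rotate
    double omega;   // rad/s to publish while turning
    // Angular velocity to publish this iteration (0 once the turn is done).
    double step() {
        if (remaining <= 0) return 0.0;
        --remaining;
        return omega;
    }
};
```

Counting iterations keeps the state simple, but it assumes the loop actually runs at its nominal rate; any deviation shows up as a rotation error that drive_parallel then has to correct.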