Doering, M., Glas, D., & Ishiguro, H. (2019). Modeling Interaction Structure for Robot Imitation Learning of Human Social Behavior.

Robots show potential in roles such as elder care, personal companionship, hotel concierge service, and other day-to-day interaction.

One difficulty in introducing robots to new domains is creating their social interaction logic. An imitation-learning-based approach, in which the robot learns this logic from examples of human behavior, could address this.

The first problem is that it is very difficult to respond correctly to context-dependent, ambiguous actions. The scenario used in the paper is a travel agency, where the topics of conversation (the travel packages) are abstract entities that are not directly observable from the sensor data.

The second important problem is interpretability: When the decisions of a robot may have significant consequences, it is imperative to understand the reasoning behind those decisions.

The study addresses both problems by modeling the structure of the interaction itself and learning human-readable robot interaction rules.

The main focus of the study is modeling how the topic changes over the course of a conversation.

The proposed method uses human-human interaction data to train a robot to perform socially appropriate behaviors in face-to-face interactions with a human.

The system is applicable to any domain in which the participants’ actions are highly repeatable. A travel agent interaction scenario is used for demonstration.

A customer enters a room and approaches the table where the travel agent is, and the two converse about the available travel packages until the customer decides whether or not to purchase one.

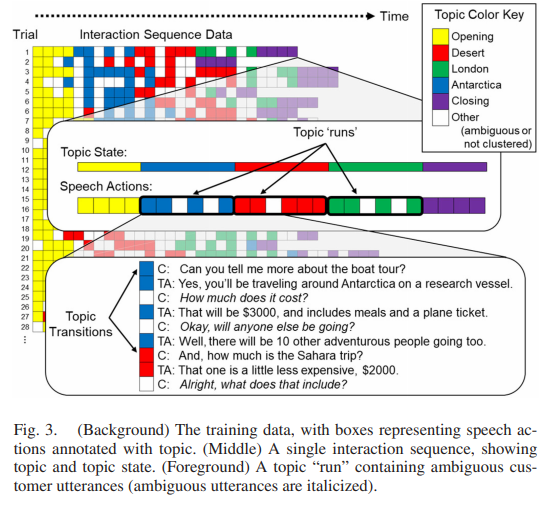

Three travel packages were created as topics of conversation: a trip to the Sahara Desert, a trip to London, and a boat cruise along the coast of Antarctica. Each package had six attributes: destination, duration, price, what is included in the package, what there is to do on the trip, and who else will be going on the trip.

For data collection, six participants took turns playing the roles of travel agent and customer, and the Google Speech API was used to transcribe the recorded audio to text.

Each utterance was then vectorized using word stems, obtained with Python NLTK’s WordNet-based lemmatizer, and keywords, extracted with AlchemyAPI.
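To make the vectorization step concrete, here is a minimal standard-library sketch that turns utterances into binary bag-of-words vectors over a shared vocabulary. It is only an approximation of the pipeline described above: `crude_stem` is a hypothetical stand-in for NLTK's WordNet lemmatizer, and the keyword set is a hypothetical stand-in for AlchemyAPI's keyword extraction, so the actual features in the paper will differ.

```python
import re


def crude_stem(word: str) -> str:
    # Hypothetical stand-in for NLTK's WordNet lemmatizer: strips "ing" or a plural "s".
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and not word.endswith("ss") and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def tokenize(utterance: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits and apostrophes.
    return re.findall(r"[a-z0-9']+", utterance.lower())


def features(utterance: str, keywords: set[str]) -> set[str]:
    # Word stems plus a "kw:" feature for each (hypothetical) keyword present as a token.
    tokens = tokenize(utterance)
    stems = {crude_stem(t) for t in tokens}
    found = {"kw:" + k for k in keywords if k in tokens}
    return stems | found


def build_vocabulary(feature_sets: list[set[str]]) -> list[str]:
    # Sorted union of all features, giving every feature a fixed vector position.
    return sorted(set().union(*feature_sets))


def vectorize(feature_set: set[str], vocabulary: list[str]) -> list[int]:
    # Binary bag-of-words vector: 1 if the feature occurs in the utterance, else 0.
    return [1 if f in feature_set else 0 for f in vocabulary]


utterances = ["How long is the trip?", "How much does the London trip cost?"]
keywords = {"london", "sahara", "antarctica"}  # hypothetical keyword list
feature_sets = [features(u, keywords) for u in utterances]
vocabulary = build_vocabulary(feature_sets)
vectors = [vectorize(fs, vocabulary) for fs in feature_sets]
```

A binary encoding is used here only for simplicity; the sketch makes no claim about how the original features were weighted.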

The vectorized utterances were then clustered, grouping similar utterances together.
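The notes do not say which clustering algorithm was used, so the following is only an illustrative sketch of the idea with a swapped-in technique: a greedy single-pass "leader" clustering over feature sets, using Jaccard similarity and a hypothetical `threshold` parameter. Each cluster groups utterances that say roughly the same thing.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    # Similarity of two feature sets: |intersection| / |union|.
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def leader_cluster(feature_sets: list[set[str]], threshold: float) -> list[int]:
    """Greedy single-pass clustering (illustrative, not the paper's method).

    Each item joins the first cluster whose leader (its first member) is at
    least `threshold` similar; otherwise it starts a new cluster.
    """
    leaders: list[set[str]] = []
    labels: list[int] = []
    for fs in feature_sets:
        for idx, leader in enumerate(leaders):
            if jaccard(fs, leader) >= threshold:
                labels.append(idx)
                break
        else:
            leaders.append(fs)
            labels.append(len(leaders) - 1)
    return labels


examples = [
    {"how", "long", "is", "the", "trip"},
    {"okay", "how", "long", "is", "the", "trip"},
    {"how", "much", "does", "the", "trip", "cost"},
    {"hello"},
]
labels = leader_cluster(examples, threshold=0.6)
```

Here the two "how long" questions share a cluster, while the price question and the greeting each start their own.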

The resulting data was then used to train the robot.

The system was evaluated on 192 human-human interactions using hold-one-out cross-validation, and a human evaluator labeled the system’s response to each customer utterance as either correct or incorrect.
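A minimal sketch of hold-one-out cross-validation over interactions, assuming each interaction is stored as a list of hypothetical `(customer_action, agent_action)` label pairs. The predictor is a hypothetical baseline that returns the agent response most often seen after a given customer action in the training interactions. Since no human evaluator is available in code, correctness is approximated by an exact match with the recorded agent response.

```python
from collections import Counter, defaultdict

# Hypothetical data format: (customer action, agent response) pairs.
Interaction = list[tuple[str, str]]


def train(interactions: list[Interaction]) -> dict[str, str]:
    # For each customer action, keep the agent response observed most often after it.
    counts: dict[str, Counter] = defaultdict(Counter)
    for interaction in interactions:
        for customer, agent in interaction:
            counts[customer][agent] += 1
    return {c: cnt.most_common(1)[0][0] for c, cnt in counts.items()}


def hold_one_out(interactions: list[Interaction]) -> float:
    # Train on all interactions but one, test on the held-out one, repeat for each.
    correct = total = 0
    for i, held_out in enumerate(interactions):
        model = train(interactions[:i] + interactions[i + 1:])
        for customer, agent in held_out:
            total += 1
            # Proxy for the human evaluator: a match with the recorded response counts as correct.
            if model.get(customer) == agent:
                correct += 1
    return correct / total if total else 0.0


data = [
    [("greet", "greet_back"), ("ask_duration", "say_duration_antarctica")],
    [("greet", "greet_back"), ("ask_duration", "say_duration_antarctica")],
    [("greet", "greet_back"), ("ask_duration", "say_duration_london")],
]
score = hold_one_out(data)
```

In this toy data the London interaction is the only one the baseline gets partly wrong, because a duration question alone does not say which package is meant.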

The system learned many correct interaction rules, but also some incorrect ones. For example, it learned that the customer utterance “Okay, and how long is that trip?” has only a single predicted travel agent response: “That is 10 days.” This is correct only for the Antarctica package. Many customer utterances are ambiguous with respect to which travel package is under discussion, so predicting the agent’s action from the customer’s action alone is insufficient: only 39% of the system’s responses to ambiguous customer actions were correct, compared to 66% of its responses to unambiguous ones.
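The ambiguous/unambiguous split can be sketched as follows, assuming training observations are available as hypothetical `(customer_action, topic)` pairs and that per-response correctness judgements are given as `(customer_action, is_correct)` pairs. Treating an action as ambiguous when it occurs under more than one topic is one simple operationalization chosen for illustration, not necessarily the paper's definition.

```python
from collections import defaultdict


def find_ambiguous(observations: list[tuple[str, str]]) -> set[str]:
    """observations: hypothetical (customer_action, topic) pairs from training data.

    An action counts as ambiguous if it was observed under more than one topic.
    """
    topics_seen: dict[str, set[str]] = defaultdict(set)
    for action, topic in observations:
        topics_seen[action].add(topic)
    return {action for action, topics in topics_seen.items() if len(topics) > 1}


def rate(flags: list[bool]) -> float:
    # Fraction of True values; NaN when there is nothing to score.
    return sum(flags) / len(flags) if flags else float("nan")


def split_accuracy(judgements: list[tuple[str, bool]], ambiguous: set[str]) -> tuple[float, float]:
    # Returns (accuracy on ambiguous actions, accuracy on unambiguous actions).
    amb = [ok for action, ok in judgements if action in ambiguous]
    unamb = [ok for action, ok in judgements if action not in ambiguous]
    return rate(amb), rate(unamb)


observations = [
    ("ask_duration", "antarctica"),
    ("ask_duration", "london"),
    ("ask_sahara_price", "sahara"),
]
ambiguous = find_ambiguous(observations)
judgements = [("ask_duration", True), ("ask_duration", False), ("ask_sahara_price", True)]
amb_acc, unamb_acc = split_accuracy(judgements, ambiguous)
```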

A topic state estimator was incorporated to model the topic of conversation (see Fig. 3, where speech actions that are not associated with a single topic are shown in white). For each timestep t of an interaction, the estimator determines the topic state s_t by deciding, based on the most recent action, whether the topic stays the same as at the previous step or changes.
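The estimator in the paper is trained from data; the sketch below replaces it with a hand-written rule purely to illustrate the state update: if the latest action is associated with exactly one topic, switch to that topic; otherwise keep the previous state. The action labels, the `ACTION_TOPIC` map and the `RESPONSES` table are all hypothetical. Conditioning the response on `(topic, action)` instead of the action alone lets the same duration question get a package-specific answer.

```python
from typing import Optional

# Hypothetical map from action labels to the single topic they identify.
# Actions missing from the map (like the white actions in Fig. 3) carry no topic.
ACTION_TOPIC = {
    "mention_antarctica": "antarctica",
    "mention_london": "london",
    "mention_sahara": "sahara",
}

# Hypothetical topic-conditioned response table.
RESPONSES = {
    ("antarctica", "ask_duration"): "say_duration_antarctica",
    ("london", "ask_duration"): "say_duration_london",
}


def update_topic(prev: Optional[str], action: str) -> Optional[str]:
    # Rule-based stand-in for the learned estimator: switch topic only when the
    # latest action identifies exactly one topic, otherwise keep the previous state.
    return ACTION_TOPIC.get(action, prev)


def track_topics(actions: list[str], initial: Optional[str] = None) -> list[Optional[str]]:
    # Run the update over an interaction, returning the topic state after each action.
    states: list[Optional[str]] = []
    state = initial
    for action in actions:
        state = update_topic(state, action)
        states.append(state)
    return states


actions = ["greet", "mention_london", "ask_duration", "mention_antarctica", "ask_duration"]
states = track_topics(actions)
replies = [RESPONSES.get((s, a)) for s, a in zip(states, actions) if a == "ask_duration"]
```

A learned estimator would replace `update_topic` with a model that predicts whether the topic changes, which is what allows it to handle actions this fixed map does not cover.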

After training, the topic state estimator achieved a prediction accuracy of 70.3%.

Including the topic state improved performance: 62% of the responses to ambiguous customer utterances were correct, versus 39% without it.

A user study was conducted to evaluate the proposed system with a real robot interacting with real people.

The participants were instructed to begin by walking up to the robot and greeting it, and to end by stating their chosen travel package or that they were still undecided. During the interaction, the participants’ speech was captured and fed into the system in real time. Afterwards, the participants filled in a questionnaire.

The system achieved a mean robot action prediction correctness rate of 65.2%. The topic state estimator estimated the state correctly 69% of the time and, when it made an error, recovered on the next turn 18% of the time.
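The two estimator metrics can be computed from per-turn predicted and true topic states. This sketch assumes one plausible reading of "recovered on the next turn": among the turns where the estimate was wrong and a following turn exists, the fraction where the next estimate is correct. The exact definition used in the paper may differ.

```python
def state_metrics(predicted: list[str], actual: list[str]) -> tuple[float, float]:
    """Return (state accuracy, next-turn recovery rate) for one interaction.

    Recovery rate (assumed definition): of the turns with a wrong estimate that
    have a following turn, the fraction whose next estimate is correct.
    """
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    hits = [p == a for p, a in zip(predicted, actual)]
    accuracy = sum(hits) / len(hits)
    # Wrong turns that are followed by another turn (the last turn cannot recover).
    errors = [t for t in range(len(hits) - 1) if not hits[t]]
    recovered = sum(hits[t + 1] for t in errors)
    recovery = recovered / len(errors) if errors else float("nan")
    return accuracy, recovery


predicted = ["london", "london", "sahara", "sahara", "antarctica"]
actual = ["london", "sahara", "sahara", "london", "london"]
accuracy, recovery = state_metrics(predicted, actual)
```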