0LAUK0 2018Q1 Group 2 - Behavior Experiment

Notice

This page contains information on the behavior experiment performed by PRE2018 1 Group2 for the course Project Robots Everywhere (0LAUK0).

Goal, research question and design of the study

One of the most important aspects of our prototype is that it can respond to movements of the user. However, we did not have concrete numbers on how much and how often a user changes their posture while working at their computer.

We set out to give our face tracking software a test run by performing an experiment where we measured how much someone moves around while working on their computer. This was a qualitative study, with the intent to find out if people other than the researchers themselves move around while working on their computer. A colleague of one of our team members was asked to take part in the scenario described in the ‘scenario’ section. The participant signed an informed consent form and was debriefed after the experiment was completed.

Our research question is as follows: do computer users move their face around while using their computer? The face tracking software that we used detects faces in an image from the user’s webcam. For each face, its horizontal and vertical location in the image are recorded, as well as the height and width of the face. All measurements are made in pixels, which means the following:

- The captured image has a size of 640 by 480 pixels.

- If the horizontal location is smaller than 320, the user is to the left of the camera (when looking outward from the camera’s point of view). If the horizontal location is larger than 320, the user is to the right of the camera.

- If the vertical location is smaller than 240, then the user is located in the top half of the image. Similarly, if the vertical location is larger than 240, then the user is located in the bottom half of the image.

- Furthermore, the size of the face is also recorded. Larger values for the width and height of the face indicate that the user’s face takes up more space in the image, and is therefore closer to the camera. Since the camera is mounted above the screen of the user’s laptop, this automatically means that the user is sitting closer to the screen of their laptop.
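
As an illustration, the interpretation rules above could be sketched in Python as follows. This is not the project's tracking code: the `FaceMeasurement` class and the helper names are hypothetical, and the sketch assumes that the recorded location is compared directly against the image center (the text does not say whether the location refers to the center or a corner of the detected face).

```python
from dataclasses import dataclass

IMAGE_WIDTH = 640   # pixels, as stated in the list above
IMAGE_HEIGHT = 480  # pixels


@dataclass(frozen=True)
class FaceMeasurement:
    """One detected face; all fields are in pixels (hypothetical layout)."""
    x: int       # horizontal location
    y: int       # vertical location
    width: int   # face width
    height: int  # face height


def horizontal_side(m: FaceMeasurement) -> str:
    """Side of the camera the user is on, seen from the camera."""
    center = IMAGE_WIDTH // 2  # 320
    if m.x < center:
        return "left"
    if m.x > center:
        return "right"
    return "center"


def vertical_half(m: FaceMeasurement) -> str:
    """Half of the image the face is in."""
    middle = IMAGE_HEIGHT // 2  # 240
    if m.y < middle:
        return "top"
    if m.y > middle:
        return "bottom"
    return "middle"


def is_closer(a: FaceMeasurement, b: FaceMeasurement) -> bool:
    """True if face a takes up more image area than face b."""
    return a.width * a.height > b.width * b.height


# Made-up example values, not taken from the dataset.
earlier = FaceMeasurement(x=300, y=200, width=110, height=110)
later = FaceMeasurement(x=350, y=260, width=130, height=130)
print(horizontal_side(earlier), vertical_half(later), is_closer(later, earlier))
```

Comparing areas rather than only widths is a design choice for this sketch; since width and height move together in our data, comparing either one alone would give the same answer.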

Our research hypothesis is that the user will move around. If we graph the horizontal and vertical location of the user over time, we expect not to see flat lines, as a flat line would indicate that the user sat perfectly still in front of their display. The same holds for the height and width of the user’s face: a flat line would again indicate that the user sat perfectly still in front of their display.
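
The "flat line" criterion can be made concrete with a few descriptive statistics. The following Python sketch is only an illustration under our own naming (`summarize` and `is_flat` are hypothetical helpers, and the numbers are made up); the actual analysis was performed in Stata.

```python
import statistics


def summarize(values):
    """Descriptive statistics for one measured variable (in pixels)."""
    if len(values) < 2:
        raise ValueError("need at least two measurements")
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


def is_flat(values):
    """A flat line means every measurement has the same value."""
    return max(values) == min(values)


# Made-up horizontal locations, not taken from the dataset.
still_user = [320, 320, 320, 320]
moving_user = [318, 325, 310, 331]
print(is_flat(still_user), is_flat(moving_user))
print(summarize(moving_user))
```

A series with a standard deviation of zero corresponds to the flat line that the hypothesis rules out.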

Scenario

An independent user is doing homework at his laptop. The monitoring software that will control the monitor arm is running on the user’s laptop and uses the laptop’s built-in camera to track the user’s posture. Measurements are taken every 2 seconds.

Results

The data was stored as a .csv file. It was imported using Stata and converted to a .dta file. All analyses took place using Stata (StataCorp, 2015).

Due to a typo in the source code of the posture tracking software, we were not able to perform all measurements. Instead of measuring horizontal location, vertical location, face width, and face height, we only measured horizontal location, face width and face height. Due to the typo the height of the face was stored twice for each observation. One of these measurements per observation should have been the vertical location of the face.
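
A simple sanity check on the logged file can reveal this kind of problem early. The sketch below is an assumption-laden illustration, not part of our tooling: the column names (`x`, `y`, `width`, `height`) and the sample rows are hypothetical, and the function only reports pairs of columns whose values are identical in every row.

```python
import csv
import io
from itertools import combinations


def identical_columns(rows):
    """Return pairs of column names whose values match in every row."""
    if not rows:
        return []
    names = list(rows[0].keys())
    return [
        (a, b)
        for a, b in combinations(names, 2)
        if all(row[a] == row[b] for row in rows)
    ]


# Made-up log in which the "y" column accidentally holds the face height.
SAMPLE_CSV = """x,y,width,height
310,120,118,120
322,131,129,131
305,125,123,125
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
print(identical_columns(rows))
```

Note that such a check would also flag width and height if the tracker always reports a square face region, so a reported pair still needs to be interpreted by hand.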

Our dataset and analysis files are available for download on our Google Drive project page. They are also linked at the end of this page. Because our research question only asks whether movement occurs, we use descriptive statistics only and no inferential statistics.

The experiment lasted 38 minutes. The team member who performed the experiment noted that the first two and the last two minutes of the recording might contain measurements of the experimenter instead of the subject, because he had to enable and disable the software. As such, measurements from the first two and last two minutes are ignored in the analysis of this experiment.
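
To give a sense of scale, the trimming can be worked out in a short Python sketch. It assumes that exactly one measurement was stored every 2 seconds for the full 38 minutes, which may not hold if the software skipped frames in which no face was detected; the helper names are hypothetical.

```python
SAMPLE_INTERVAL_S = 2  # seconds between measurements
DURATION_MIN = 38      # length of the experiment
TRIM_MIN = 2           # minutes dropped at the start and at the end


def samples_in(minutes, interval_s=SAMPLE_INTERVAL_S):
    """Number of measurements taken in the given number of minutes."""
    return minutes * 60 // interval_s


def trim_edges(measurements, trim_minutes=TRIM_MIN, interval_s=SAMPLE_INTERVAL_S):
    """Drop the measurements from the first and last trim_minutes."""
    n_trim = samples_in(trim_minutes, interval_s)
    if len(measurements) <= 2 * n_trim:
        return []
    return measurements[n_trim:len(measurements) - n_trim]


total = samples_in(DURATION_MIN)       # 38 * 60 / 2 = 1140
kept = trim_edges(list(range(total)))  # indices stand in for measurements
print(total, len(kept))                # prints "1140 1020"
```

Under these assumptions, 60 measurements are dropped at each end, leaving 34 minutes of data.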

First we summarize the measured variables. As indicated earlier, we were not able to measure the vertical location of the user; it is therefore omitted from the remainder of this analysis. We expect the width and the height of the face to be perfectly correlated, as the program draws a rectangle around the user's face.

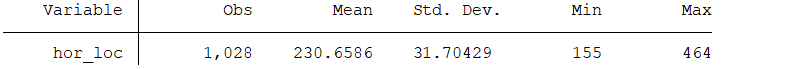

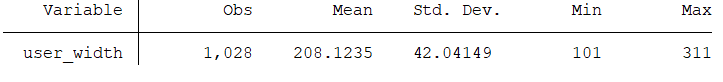

We can see that both the horizontal location (table 1) and the width of the face (table 2) vary across measurements. We also see that the width and height of the user’s face are perfectly correlated (table 3). Either one can therefore serve as a measure of face size, and the variation in face size shows that the user moves toward and away from the screen.
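
For readers without Stata, the correlation check can be reproduced with a plain Pearson correlation coefficient. The Python sketch below uses made-up values rather than our dataset, and it assumes a square face region (height equal to width) purely to show what a perfect correlation looks like.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length, at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Made-up face sizes in pixels; height equals width by assumption.
widths = [110, 118, 125, 131, 120]
heights = list(widths)
r = pearson_r(widths, heights)
print(round(r, 6))
```

The function deliberately refuses a constant series: if the user sat perfectly still, the correlation would be undefined rather than perfect.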

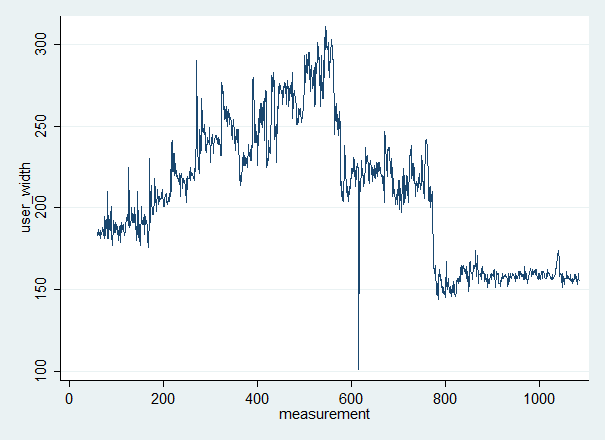

When we plot the changes in location and size, the variation becomes even more visible. Note that the spike around measurement 600 can be a false positive measurement by the software. This spike could also have been caused by rapid head movement of the subject, but without additional data we cannot be sure about the true cause of this spike.

Looking at the size of the face, it becomes clear that during the first half of the measurements the user gradually sits closer and closer to their screen, until around measurement 600, where he moves further away from the monitor. Around measurement 800, he again moves further away from the screen. We think that the two plateaus that follow these moments show the user changing their posture and then maintaining that posture.

Conclusion

The results from our experiment show that the user does move around while looking at their monitor; we may even have seen posture changes in action in this dataset. We conclude that this qualitative study has shown that our system will actually show activity, as the user will most likely move around while using our smart monitor.

Links

- Raw data: https://drive.google.com/open?id=1z5xOYMXbyGj_JLgtNJ4fMm4fSbnCJNi9

- Dataset: https://drive.google.com/open?id=1fEoM8BKZwy_aexUnZW2_AyHo63XGmIo4

- Analysis script: https://drive.google.com/open?id=1OwWYwQrK2NBSQEsf4Q0445srGfFEYfMD

References

- StataCorp. (2015). Stata. College Station: StataCorp. Retrieved from http://www.stata.com