Mobile Robot Control 2024 Robocop: Difference between revisions

| (69 intermediate revisions by 7 users not shown) | |||

| Line 14: | Line 14: | ||

|Marijn Ruiter | |Marijn Ruiter | ||

|1489496 | |1489496 | ||

|- | |||

|Luke Alkemade | |||

|1581643 | |||

|- | |||

|Arif Ashworth | |||

|1544632 | |||

|- | |||

|Abhidnya Kadu | |||

|1859234 | |||

|} | |} | ||

== Exercise 1 == | |||

=== Method 1 === | |||

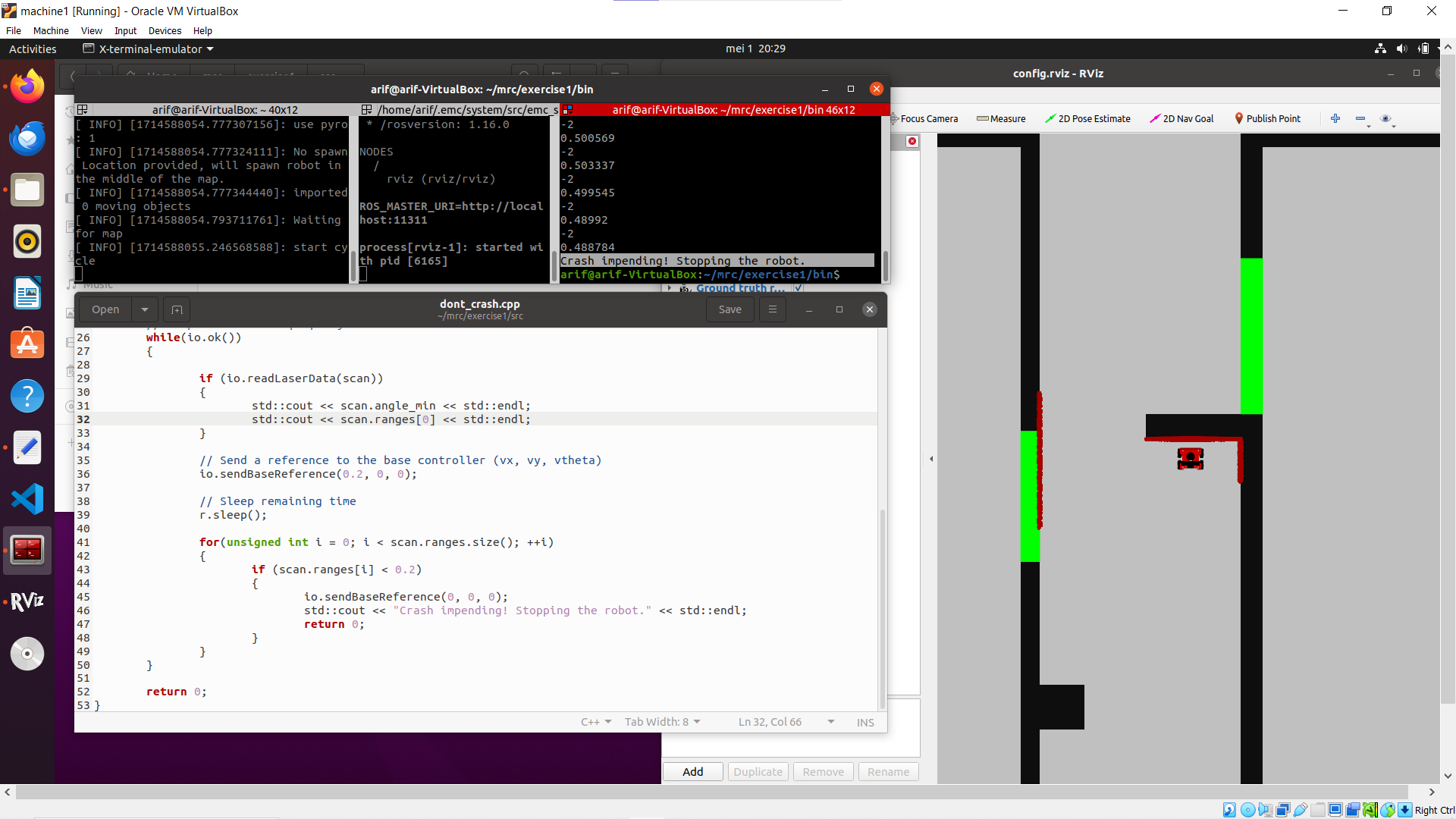

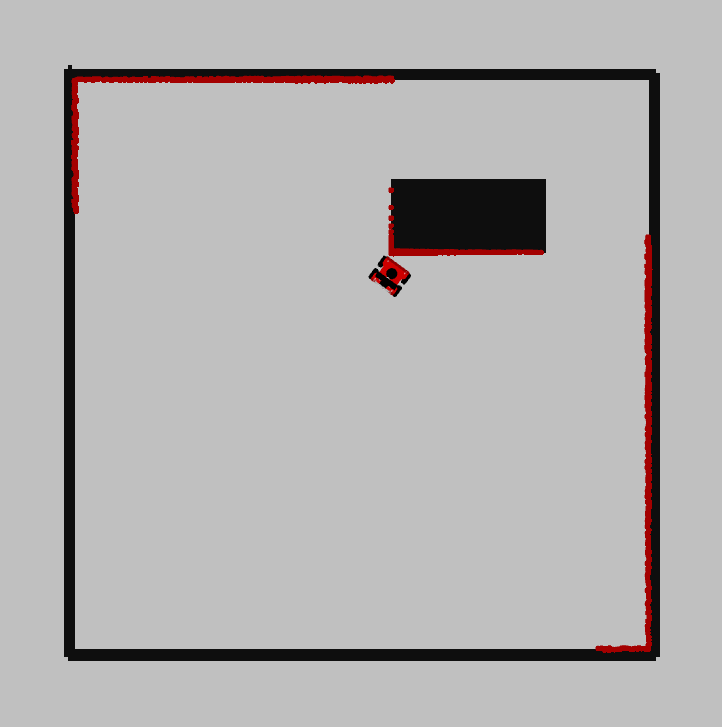

[[File:Dont crash 1.png|thumb|591x591px|Screenshot of final result for Method 1.]] | |||

In this method the LaserData struct is used to track the measured distances from the walls to the robot. To make the robot drive forward the sendBaseReference command is used, and the robot moves in the x direction at a speed of 0.2. Once it reaches the wall in front of it and the range values drop to below 0.2, motion is halted and a message is printed to the screen before exiting the program. | |||

Some experimentation was done to test different speeds and thresholds for the stopping range values. When the speed was higher than the stopping range value the robot would actually crash into the wall first before stopping, and if it was lower then the robot would stop slightly farther away from the wall. However, this only seemed to be the case when the stopping value was very low (e.g. 0.1), but increasing it to 0.2, for example, allowed the speed to be increased to 0.4 without any crashing. | |||

'''''On your wiki. Compare the different methods to one another. What situations do these methods handle well? Is there one "Best" method?''''' | |||

=== Method 2 === | |||

In method 3 a LaserData structure is created to get measurements of the distance between the robot and its surroundings. A variable of type double is created which is named '''LowerLimit''', referring to the minimal distance between the wall and the robot where action should be taken. This lower limit is set at 0.3 meters. Furthermore, four other variables of type double are defined to create two cones, a left and right cone. Namely, '''LeftCone1''' (n = 999) referring to the end of the measurement range, '''LeftCone2''' (n = 500) referring to the beginning of the second half of the measurement range, '''RightCone1''' (n = 499) referring to the end of the first half of the measurement range and '''RightCone2''' (n = 0) referring to the beginning of the measurement range. Lastly, turning angles of pi/6 to the right and left are defined as '''TurningAngleRight''' and '''TurningAngleLeft''', respectively. | |||

After creating all necessary variables, a while-loop is created which holds true for io.ok(). First, a base reference with a velocity of 0.2 in the X-direction is send to the robot using the function '''io.sendBaseReference(0.2,0,0)'''. Then, the LaserData structure is read, checking if there is new data. If this holds true a for-loop is created that iterates over all the distance measurements. Two if-statements then check if a certain distance measurement is in the right or left cone and below the lower limit. When in the right cone and below the lower limit a command is send to the robot to stop moving and then turn left with the function '''io.sendBaseReference(0,0,TurningAngleLeft)'''. Else, when in the left cone and below the lower limit a command is send to the robot to again stop moving and then turn to the right with the function '''io.sendBaseReference(0,0,TurningAngleRight)'''. | |||

Testing with the first given map it is clear no collision is made between the robot and the walls. Although when it changes direction it does so with a fixed value, overall not making any significant progress when driving around the space. Testing with different values for turning angles, smaller cones or a higher lower limit all produce different behaviours but one can't be called better than the other. | |||

[[File:Screencast from 06-06-24 12 06 40.gif|thumb|1013x1013px|Method 3 working as intended. Difficulty with getting out of two corners, due to a large turning angle. |center]] | |||

==Exercise 2== | |||

=== Method 1=== | |||

The previously described method was tested on the two provided maps with input speeds of (0.3, 0, +-0.3) and a stopping value of 0.2. With both maps the robot successfully came to a stop before crashing, although it struggled when driving into a corner and stopped much closer to the wall than it did in previous tests. | |||

<table> <tr> <td> [[File:Dont crash ex2 map1.png|thumb|268x268px|Method 1 on Map 1.|none]] </td> <td>[[File:Dont crash ex2 map2.png|thumb|268x268px|Method 1 on Map 2.|none]] </td> </tr> </table> | |||

method 2: | |||

for method 2 there was a slightly different approach. laserdata was scanned (not full range about 200 to 800). every time it runs into a wall the robot turns randomly. it wil turn the same direction until it sees the robot is free to drive. This added random turning makes it possible that the robot randomly finds exits and explores the map. The code translated wel to bobo where it stopped without bumping and turned around to explore. | |||

{| class="wikitable" | |||

|+ | |||

![[File:Ezgif-2-95d463a1c6.gif|none|thumb|live test]] | |||

![[File:Screencast from 06-05-2024 02 31 03 PM.gif|thumb|546x546px|Method 2 on general map]] | |||

! | |||

|} | |||

=== Method 3: === | |||

In the two videos where both maps are simulated with method 3 of the don_crash algorithm, the results are very similar to the results in the map of exercise 1. | |||

[[File:Screencast from 06-06-24 13 45 09.gif|center|thumb|793x793px|Method 3 riding around without collision on map 1]] | |||

[[File:Screencast from 06-06-24 13 48 37.gif|center|thumb|792x792px|Method 3 riding around without colliding on map 2]] | |||

==Practical Exercises 1== | |||

'''On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot.''' | |||

When running the sim-rviz environment on the Bobo robot there was significant noise even when remaining stationary, as shown in the GIF below. Due to the density in hit points for the lasers, the measurement of solid flat walls was reliable. However, the noise had high amounts of variation when looking at the edges or corners of a wall, especially when corners were slightly behind the robot, which could indicate weak peripheral vision. Then, black coloured and thin objects were placed in front of the sensors and the robot struggled to detect them. Even when they ''are'' detected they still appear on the visualization with heavy amounts of noise. In contrast, our own legs, rolled up pieces of paper and contours were more clearly defined when placed in front of the robot.[[File:Noise rviz.gif|center|thumb|Video of noise measurements in the sim-rviz environment.]]'''Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)''' | |||

Two different don't crash codes were tested on the robot. The first code contained the method of detecting walls within a certain distance and telling the robot to stop and remain in position once it came too close. The second code contained the more elaborate method where the robot looked for the next direction of movement that did not have any walls within a set distance, and then continued driving in that direction. For the first code, it became apparent that in the simulation there was a higher margin for error in terms of how close the robot could get to the walls without the system alerting the user of a crash. For the real life test to work, the moving speed had to be reduced and the distance threshold increased to account for things like the robot's size. For the second code, the robot was able to follow simulation results and managed to perform well without needing modifications. GIFs of both code tests can be seen below. | |||

<table> <tr> <td> [[File:Dont crash 1 gif.gif|thumb|288x288px|Video of tests on the first code.|none]] </td> <td>[[File:Dont crash 2 gif.gif|thumb|307x307px|Video of tests on the second code.|none]] </td> </tr> </table> | |||

'''If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?''' | |||

As stated before, some changes had to be made to the moving speed and threshold for distances that counted as a "crash". This is not something that was immediately apparent in the simulations beforehand, but in hindsight it could have been prevented before testing the code on the actual robot. Of course, the robot in the simulations might not have the same dimensions as its real life counterpart, and so, the measure tool in the rviz environment could have been use to precisely measure the distance at which the robot was stopping, and whether or not it would be far enough for an actual robot. | |||

==Local Navigation== | |||

===Dynamic Window Approach=== | |||

=====The approach===== | |||

The approach can be divided in the following sequential steps: | |||

# '''Calculate the union between the Possible (angular) Velocities (Vs, Ws) and the Reachable (angular) Velocities (Vd, Wd)''' | |||

#* EXPLAIN HOW | |||

# '''Iterate through the discretized values (''v, w'') of the union of Vs, Vd and Ws and Wd:''' | |||

##'''Calculate the Admissable (angular) Velocities (Va,Wa) using ''v'' and ''w''''' | |||

##* EXPLAIN HOW | |||

## '''Check if ''v'' and ''w'' are also in Va and Wa, if this is the case:''' | |||

##*'''Calculate the optimization function value. If this value is higher than the previous optimization value, set the current ''v and w'' to ''v_final'' and ''w_final''''' | |||

##**EXPLAIN HOW | |||

#'''Return ''v_final, w''_final''' | |||

Our approach for the Dynamic Window Approach (DWA) is that we first make a list of samples that fit inside the possible velocity limit (Vs) and reachable velocity and acceleration constraints (Vd). After which we | |||

===== Questions===== | |||

'''1. What are the advantages and disadvantages of your solutions?''' | |||

Advantages: | |||

#By constantly calculating possible trajectories it is able to avoid dynamic and static obstacles. | |||

#By including the robot acceleration and deceleration constraints it ensures for a smooth navigation without abrupt movements. | |||

Disadvantages: | |||

#DWA may get trapped in a local minima. | |||

#There is a high computational load in calculating the multiple trajectories in real-time | |||

'''2. What are possible scenarios which can result in failures?''' | |||

#Like mentioned in question 1 DWA can get trapped in a local minima. | |||

#*'''Solution:''' Include the global planner so it doesn't drive into a local minima | |||

#We also encounter some problems when the robot is driving to a straight wall and can't decide with direction to take. (see video: https://gitlab.tue.nl/mobile-robot-control/mrc-2024/robocop/-/blob/main/videos_and_photos/dwa_straight_wall.mp4) | |||

#*'''Solution:''' Increase Ks and decrease Kh so the robot is less constrained to drive directly towards the goal. | |||

'''3. How are the local and global planner linked together?''' | |||

===Vector Field Histograms=== | |||

Vector Field Histograms (VFH) are a robot control algorithm used for real-time obstacle avoidance. VFH works by creating a histogram grid that represents the robot's surroundings, where each cell contains a value corresponding to the density of obstacles. The algorithm calculates a polar histogram from this grid to determine the direction with the least obstacle density, allowing the robot to navigate safely by selecting a path with minimal risk of collision. This approach is efficient and effective for navigating through complex and dynamic environments. | |||

==== Case specific aproach ==== | |||

In our implementation of VFH, the following steps were taken: | |||

# '''Laser Data Acquisition''': Laser data is obtained using the <code>getLaserdata</code> function, ensuring compatibility with all robots. | |||

# '''Data Preparation''': The number of laser readings is rounded down to the nearest multiple of ten to avoid erroneous values. | |||

# '''Data Binning''': The laser readings are divided into bins of ten. | |||

# '''Histogram Calculation''': These bins are used to create the vector field histogram. This histogram is a graph with angles on the x-axis and the likelihood of a blocking object on the y-axis. | |||

# '''Path Selection''': Angles where the y-axis value is below a certain threshold indicate unobstructed paths. The algorithm selects the angle that is both unobstructed and closest to the desired goal. | |||

This approach ensures accurate and efficient obstacle avoidance, allowing the robot to navigate safely and effectively. | |||

==== The optimization ==== | |||

[[File:Fig circle explain.jpg|thumb|A schematic overivew of the inclusions of the robot dimensions. ]] | |||

To optimize the Vector Field Histogram (VFH) algorithm for robot control, the robot's dimensions must be considered to ensure it maintains a safe distance from walls and corners. The robot should ideally stay at least 25 cm away from obstacles to account for noise and potential calculation errors. Directly integrating the robot's radius into the measured distance is complex due to the angled measurements of the lasers, which can distort data, especially over longer distances. This distortion may lead to missed corners, falsely indicating large open spaces in the histogram. | |||

To address this, an algorithm was developed that generates circles at the points where the laser detects an object. These circles are used to determine intersections with other laser points. If a laser intersects a circle, the measured distance is adjusted to the closest intersection point. This approach effectively blocks corners and narrow spaces, ensuring the robot maintains a safe distance from walls, as demonstrated in the results. | |||

==== Results ==== | |||

The robot successfully navigates its environment while maintaining a safe distance from the walls, as demonstrated in the videos. Currently, it operates at a speed of 0.15 m/s, which is relatively low. This slow speed is necessary because the histogram calculations can yield suboptimal values if the decision-making time is too short. The most significant area for improvement in the system is increasing its decision-making efficiency. Future enhancements will include a stop mechanism that allows the robot to halt at critical decision points. At these points, the robot will rotate and scan the environment again before proceeding. This will reduce incorrect movements and enhance the robot's robustness and reliability.<table> | |||

<tr> | |||

<td> | |||

[[File:VFH map 1.gif|none|thumb|600x600px|Test on Map 1.]] | |||

<div></div> | |||

</td> | |||

<td> | |||

[[File:VFH map 2.gif|none|thumb|600x600px|Test on Map 2.]] | |||

<div></div> | |||

</td> | |||

</tr> | |||

<tr> | |||

<td> | |||

[[File:VFH map 3.gif|none|thumb|600x600px|Test on Map 3.]] | |||

<div></div> | |||

</td> | |||

<td> | |||

[[File:VFH map 4.gif|none|thumb|600x600px|Test on Map 4.]] | |||

<div></div> | |||

</td> | |||

</tr> | |||

</table> | |||

====Questions==== | |||

#'''What are the advantages and disadvantages of your solutions?''' the algorithm is computationally efficient. the laser scan data gets looped through a few times (collecting laser data, making the VFH from the laserdata, optional filtering of the VFH, choosing the best angle). This way the algorithm updates the robot without to much delay from the real time data. a disadvantage of the algorithm is that it doesn't account well for size of the robot. even when inflating the walls in software the casting of the laser beam make it unpredictable if you actually drive aground a wall instead of next to the wall. It does drive itself around but the path isn't always optimal. | |||

#'''What are possible scenarios which can result in failures?'''the algorithm will not navigate mazes itself. If there are too much obstructions the robot could get stuck between a few points. When entering for example something like a U shape that is larger than its viewing window. The robot will enter the hallway thinking it's is free, when seeing the end walls it will turn around until it doesn't see the wall anymore. At that point the robot will again turn around to get stuck in a loop. | |||

#'''How would you prevent these scenarios from happening?'''By integrating a good global planner the robot already won't go into these U shapes. If it finds itself stuck on a point that a global map didn't see the robot can report this back and again find a global route that can be locally navigated. | |||

#'''How are the local and global planner linked together?'''The VFH algorithm is structured using header files and separate class files. There is one main file, "Local_Nav.cpp", which runs the EMC environment and executes things like the import of odometry and laser data, and it then feeds those values into functions that will handle the calculations of the algorithm. In "Pos.cpp" there are functions defined for updating the robot's positional information (x, y and alpha), as well as, a function to inform it on its starting position (if it is not equal to (0, 0, 0)). Lastly, the bulk of the code can be found in "VFH.cpp", where actions like forming the bins of the histogram, calculating the next angle of movement, and so forth are completed. Most importantly, the execution of the VFH algorithm can be done with the getClosestFreeAngle() function, which accepts the x and y coordinates of the goal/waypoint as an input. Thus, it would be straightforward to sequentially input the waypoints of the global planner for the local planner to follow. | |||

==Global Navigation== | |||

=== A* Path Planning === | |||

The A* algorithm is a highly regarded pathfinding and graph traversal method, renowned for its efficiency and accuracy. It utilizes the strengths of Dijkstra's Algorithm and Greedy Best-First Search to identify the shortest path between nodes in a weighted graph. While its computational demands are somewhat higher compared to the aforementioned algorithms, A* has the distinct advantage of guaranteeing the discovery of the shortest route, provided one exists. | |||

The algorithm constructs a tree of paths that begin at the start node and progressively extends these paths one edge at a time until the goal node is reached. During each iteration, it evaluates which path to extend next by considering both the actual cost incurred to reach a node and the estimated cost to reach the target from that node, known as the heuristic cost. The heuristic cost, which can differ across various implementations, typically represents the straight-line distance between the current node and the goal. To guarantee the algorithm's optimality, it is essential that this heuristic cost is never overestimated. | |||

By ensuring the heuristic cost remains a lower bound on the true cost to reach the target, the A* algorithm can efficiently prioritize paths that are more likely to lead to the goal. This balance between actual cost and heuristic estimation allows A* to navigate complex graphs effectively, making it a powerful tool in fields ranging from robotics to game development and beyond. The precision and adaptability of the heuristic function are key factors in the algorithm's performance, influencing both its speed and accuracy in finding the shortest path. | |||

===== Implementation: ===== | |||

The A* algorithm was implemented to find the shortest path from the start to the exit of a maze where node lists and connections are provided. | |||

# Initialization of Nodes and Cost Calculation: The algorithm begins by initializing the necessary data structures and setting up initial conditions. It iterates through the entire node list, setting the cost-to-come (g) to infinity for each node, indicating that the initial cost to reach any node is unknown. The heuristic cost-to-go (h) is calculated as the Euclidean distance from each node to the goal node using the calculate_distance function. The total cost (f) for each node is the sum of g and g. Each node's parent ID is initialized to NODE_ID_NOT_SET indicating that no parent has been assigned yet. Two lists, open_nodes and close_nodes, are maintained to keep track of nodes to be evaluated and nodes that have already been evaluated, respectively. The entrance node is added to the open list with a cost-to-come of 0, and its total cost is recalculated. | |||

# Finding the Node to Expand Next: The first exercise is to find, in every iteration, the node that should be expanded next. The algorithm selects the node in the open list with the lowest f value, representing the node that currently appears to be the most promising for reaching the goal efficiently. This selection process ensures that the node with the smallest estimated total cost to the goal is chosen for expansion. If the selected node is the goal node, the algorithm terminates, having successfully found the optimal path. | |||

# Exploring Neighbors and Updating Costs: The second exercise is to explore its neighbors and update them if necessary. For the selected node, the algorithm examines all its neighboring nodes (nodes connected by an edge). It checks if each neighbor has already been evaluated by looking for it in the closed list. If the neighbor is not in the closed list, the algorithm then checks if it is in the open list. If it is not in the open list, the neighbor is added to the open list. The cost-to-come (g) and total cost (f) for this neighbor are updated based on the cost to reach it from the current node. The current node is also set as the parent of this neighbor. If the neighbor is already in the open list, the algorithm checks if a lower cost path to it is found via the current node. If so, it updates the g, f, and parent node ID for this neighbor. After processing all neighbors, the current node is moved from the open list to the closed list. | |||

# Tracing Back the Optimal Path: The third exercise is to trace back the optimal path. Once the goal node is reached, the algorithm reconstructs the optimal path by backtracking from the goal node to the start node using the parent node IDs. Starting at the goal node, the algorithm moves to its parent node repeatedly until the start node is reached. The node IDs along this path are collected in a list, representing the sequence of nodes from the entrance to the goal. The algorithm then updates the planner's state to indicate that a path has been found and sets the list of node IDs that constitute the optimal path. | |||

The A* algorithm is tested on the small and big map provided for testing, and presented below are the results from the test on small map. It is evidently visible that the A* algorithm finds an optimal path to reach the goal node.[[File:Smallmap.png|thumb|509x509px]][[File:Optimalpath.png|center|thumb|324x324px]] | |||

=== Probabilistic Road Maps (PRM)=== | |||

=====The approach:===== | |||

In the given code for the generation of the PRM, the three incomplete functions were coded using the following methods: | |||

#'''Inflating the walls of the map:''' The first step of the approach is to inflate the walls of the map to accommodate for the size of the robot. Thus, providing a path from A to B where it will not hit the walls. Inflating the walls is done by iterating through the pixels of the map. Due to the map having two distinct colors, black for the walls and white for the space accessible by the robot, they can be easily distinguished from one another. When a wall is detected (black pixel) in the '''original''' map, it sets the surrounding pixels in a '''copied''' map to black. By iterating through one map and making the changes in another the walls will only be inflated once. | |||

#'''Checking if the vertex is valid:''' First, an arbitrary distance of 0.8 m was set as the threshold for the distance that the new vertex had to be from existing vertices (this would be optimised later on). Then, by looping through the existing G.vertices given in the class Graph G, the provided distanceBetweenVertices function was used to check the Euclidean distance between each existing vertex and the newly generated one. If any of the distances fell below the set value, the acceptVert boolean was set to False. | |||

#'''Checking if the vertices are close:''' This function was completed in the same manner as the previous one. By using the same distanceBetweenVertices function, the two input vertices have their Euclidean distance calculated and compared with a set certain_distance. Should the calculated distance fall below the certain_distance then the boolean verticesAreClose is left as True, and otherwise it is set to False. | |||

#'''Check if edge crosses obstacle'''[[File:Bresenham's Algorithm.png|thumb|403x403px|Example of pixel approximation of a line using Bresenham's algorithm.]]To complete this function, a pixel based approach was taken for checking the intersections between the line connecting two points and any existing obstacles/walls. The reason for doing so was so that we could implement the Bresenham line algorithm, and more importantly, avoid making the function computationally heavy with calculations such as storing the vectors connecting two points, calculating exact intersection points, and so forth (INSERT CITATION). Through Bresenham's algorithm, the code became more straightforward as loops over each existing pixel value would be sufficient for the check. To actually implement these ideas, the code was structured as follows: | |||

##First, the two vertices accepted as inputs have their coordinates converted from metres to pixels by dividing their x and y values by the resolution. For example, a vertex at (1,1) would be located on the pixel at row 20 and column 20. Note that in the code, the calculations for the y coordinates are mirrored, because without that the Bresenham line generation became flipped in the x axis. | |||

## Variables for iterating over each pixel, as per Bresenham's algorithm, variables are defined to determine the direction in which to iterate through the pixels. So, the algorithm will iterate left to right and up to down depending on the sign of the gradient values, dx and dy. Additionally, the error term, error = dx – dy, is defined which will keep track of whether the algorithm should stay on the same row/column or jump to the next one to keep following the line connecting the two vertices. | |||

##A while loop is initialized to continue running until one of the internal if statements become true. Starting with the x1 and y1 coordinates, an if statement checks that it is within the map boundaries and then checks the greyscale value of the pixel at that location. If the coordinate’s pixel has a greyscale value of less than 5 (essentially black), then EdgeCrossesObstacle is set to True and the code breaks out of the while loop. | |||

##If the starting coordinate does not contain a black/wall pixel, then the gradient and error terms inform the algorithm of which pixel to consider next. A new error term, e2, is declared by doubling the error term, err. If is larger than the negative of dy, then we know that the line connecting the two vertices continues on the next column and the x coordinate is shifted by one. If it is smaller than dx, then the line continues on the next row, so the y coordinate shifts by one. In both cases, err is updated by dx and/or -dy to ensure that the comparison with e2 is accurate. | |||

##Once the pixels on the line are iterated through, and the new pixel coordinate matches that of the final vertex (x2, y2), then the code breaks out of the while loop and EdgeCrossesObstacle remains False. | |||

====Results: ==== | |||

When the script is executed, the walls are initially inflated by the radius of the robot to ensure safe navigation. Following this, 100 vertices are randomly generated within the environment, and their placement is confirmed by terminal output and a PNG file depicting the inflated walls and vertices. | |||

Each vertex is then checked for validity to ensure it lies within navigable space and not within any inflated walls. The edges connecting these vertices are also validated to confirm they represent feasible, obstacle-free paths. Once the valid vertices and edges are established, the A* algorithm is used to determine the optimal route from the robot's starting position to the predefined target, ensuring the shortest and most efficient path is selected. | |||

With the roadmap generated, the robot's route is visualized in blue on the R-VIS tool, and the robot follows this route to navigate towards its destination. During navigation, an object is simulated in the environment to demonstrate the integration of local and global navigation strategies. Upon detecting the object, the robot dynamically adjusts its path to maintain a safe distance, using the next checkpoint as an interim target. After successfully maneuvering around the obstacle, the robot resumes following the global navigation path until it reaches its final destination. | |||

This sequence highlights the robustness of the navigation system, combining global and local strategies for efficient and safe travel through the environment.[[File:PRM VFH with obstacle.gif|thumb|691x691px|Test on map_compare for global and local planner with an added obstacle.|center]] | |||

=====Questions===== | |||

#'''How could finding the shortest path through the maze using the A* algorithm be made more efficient by placing the nodes differently? Sketch the small maze with the proposed nodes and the connections between them. Why would this be more efficient?''' | |||

#*Finding the shortest path through the maze using the A* algorithm can be made more efficient by placing the nodes at the locations in the map where the robot has to take the decision of taking a turn or maintain a straight line. So, the new proposed nodes should be placed at key points such as corners and intersections where paths branch, rather than at every possible point along the path. This reduces the number of nodes the algorithm must evaluate, speeding up the computation while maintaining accurate path representation. By placing nodes at strategic locations, navigation between nodes becomes straightforward, and the algorithm can focus on critical decision points, thereby enhancing overall path planning efficiency.[[File:Proposednodes.png|thumb|230x230px]] | |||

#'''Would implementing PRM in a map like the maze be efficient?''' | |||

#*Implementing PRM in a maze would not be efficient. The maze includes a lot of small corridors and very little open space, causing PRM difficulty when adding valid edges. To make it a viable option the amount of vertices should be increased substantially and/or the random distribution of vertices should be changed to an uniform distribution. | |||

#'''What would change if the map suddenly changes (e.g. the map gets updated)?''' | |||

#*In the case where an avoidable obstacle, like a small block, suddenly appears the local navigation makes sure to that the robot goes around it. In the case where an unavoidable obstacle, like a road block, appears the robot should first stop moving then update the map and last plan a new path with the global navigator. | |||

#'''How did you connect the local and global planner?''' | |||

#*To integrate the VFH local planner into the global planner, the method described in the VFH section was used (using the waypoints as goal coordinates for the getClosestFreeAngle() function). The "main.cpp" file already had the creation of the planner set up, and so, the simple local planner was replaced with the code from the "Local_Nav.cpp" file with minor edits such as directory paths and setting the robot's starting coordinates to specific x and y coordinates. | |||

#'''Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?''' | |||

#'''Run the A* algorithm using the gridmap (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.'''<br /> | |||

<table> <tr> <td>[[File:Astar gridmap.gif|none|thumb|600x600px|A* algorithm using the gridmap.]]</td> <td>[[File:Astar PRM.gif|none|thumb|600x600px|A* algorithm using PRM.]]</td> </tr> </table> | |||

''Upload screen recordings of the simulation results and comment on the behavior of the robot. If the robot does not make it to the end, describe what is going wrong and how you would solve it (if time would allow)'' | |||

''Upload video's of the robot's performance in real life (same as simulation videos), and comment the seen behavior (similar to the previous question).'' | |||

==Localisation== | |||

===Assignment 0.1=== | |||

#'''How is the code is structured?'''The main is of course the starting point of the software. It makes use of the resampler and the particlefilter. The resampler resamples the particlefilter. the particle filter runs the tasks of containing the particles and calculating the probability of them being right using the given robot data and world data. | |||

#'''What is the difference between the ParticleFilter and ParticleFilterBase classes, and how are they related to each other?'''ParticleFilterBase is the basic platform of the filter. the functions for populating and changing the filter are written here. ParticleFilter.h then inherits the functionality of particlefilterbase so different paramters of the base can be tested when they get added upon and changed in ParticleFilter | |||

#'''How are the ParticleFilter and Particle class related to eachother?'''Particle class holds all of the information of 1 particle. the ParticleFilters generates allot of particles where it can individually track the properties of each particle | |||

#'''Both the ParticleFilterBase and Particle classes implement a propagation method. What is the difference between the methods?'''the particle filter propagates the samples by running the propagation method of particle for each particle individually. | |||

===Assignment 1.1=== | |||

#'''Explain in a few concise sentences per item:''' | |||

#*'''What are the advantages/disadvantages of using the first constructor, what are the advantages/disadvantages of the second one?'''uniform distribution covers particles over the whole map. the pro's are that the uniform distribution is a more simple algorithm. also when the location of the robot is unknown is scan's the whole map instead of a more defined area around the robot. this way it can find its probable location from over the whole map. the con is then that this is more computationally efficient because you spread over a larger area. Also the robot can find locations similar over the whole map where it would be in the wrong place like corners. with gaussian distributed the particles are spread with a higher likelihood of being around the last known location. the pro's are that the the filter then looks around its last known location, where it is more likely to find the robot than the other side of the map. this makes the filter more efficient because the particles are already more likely to be right. the con's are that if the initial location is unknown the robot has a hard time to find the actual location. no particles from the filter have a high likelihood of being the actual position. also when the location is lost it has a hard time recovering. | |||

#*'''In which cases would we use either of them?'''when we don't know where we are it is better to use the uniform constuctor. this way we can find a initial position. when we then have a location we can better use a Gaussian constructor to more efficiently find the next locations of the robot. | |||

#'''Add a screenshot of rviz to show that the code is working''' | |||

{| class="wikitable" | |||

|+ | |||

![[File:Screenshot from 2024-06-06 11-23-11.png|thumb|working distribution]] | |||

|} | |||

=== Assignment 1.2 === | |||

'''Explain in a few concise sentences per item:''' | |||

'''Interpret the resulting filter average. What does it resemble? Is the estimated robot pose correct? Why?''' | |||

the result is an arrow in the middle of all distributed particles. it is the average of all the particles x,y position and theta rotation. The robot pose is not correct because this is just the first initial guess. the working filter should look at particles near the actual robot and give those particles highers likelihoods of being true. | |||

'''Imagine a case in which the filter average is inadequate for determining the robot position.''' | |||

in this case the filter won't be adequate to find the actual robot position. no particles have a high likelihood to be the robot so the filter doesn't make a good guess. if its close particles closer to the robot would have a higher likelihood and then the filter would eventually find a position. but when it is completely wrong we can temporarily switch to a uniform filter over the whole map. | |||

the green summing arrow is also visible in the image from 1.1 because the assignment were done simultaneously. | |||

=== Assignment 1.3 === | |||

'''Explain in a few concise sentences per item:''' | |||

'''Why do we need to inject noise into the propagation when the received odometry infromation already has an unkown''' | |||

'''noise component?''' | |||

'''What happens when we stop here, and do not incorporate a correction step?''' | |||

The filter will follow the position of the robot just like normal odometry would but way more computationally inefficienct. it would even be worse because of the chance that the randomness and noise in samples let the average drift. Also the particles will distribute more over the map after a while with Gaussian. | |||

[[File:Screencastfrom06-06-2024114350AM-ezgif.com-video-to-gif-converter.gif|thumb|600x600px|working propogation of filter|none]] | |||

=== Assignment 2.1 === | |||

'''Explain in a few concise sentences per item:''' | |||

'''What does each of the component of the measurement model represent, and why is each necessary.''' | |||

there are 4 parts of the measurement model | |||

hit probability (probability if it is the laser reading the wall that is found) | |||

short probability (probability that laser finds reading that is not in the map) | |||

max probability (probability that laser beam is just maxed out like when the laser points at a transparent or black surface) | |||

rand probability (probability that measurement is completely unexpected) | |||

all these probabilities are not only possible but necessary. this is because if you make the assumption that the laser beam is within only the hit probability you will get a low likelihood because more things can go wrong. if you also filter max,short and rand measurements more error is accounted for so the likelihood is more probable. | |||

'''With each particle having N >> 1 rays, and each likelihood being ∈ [0, 1] , where could you see an issue given our''' | |||

'''current implementation of the likelihood computation.''' | |||

because the measurements are between 0 and 1 and there are allot of them almost all likelihoods will go to 0. this because with each iteration the likelihood is decreasing. this leads to skewed results. | |||

=== Assignment 2.2 === | |||

'''Explain in a few concise sentences per item:'''[[File:Localisation sim.gif|thumb|468x468px|Localization in simulation]] | |||

'''What are the benefits and disadvantages of both the multinomial resampling and the stratified resampling?''' | |||

* '''Multinomial Resampling''' is simple and widely used but can lead to high variance. | |||

* '''Stratified Resampling''' offers reduced variance but is slightly more complex and computationally expensive. | |||

'''With each particle having N >> 1 rays, and each likelihood being ∈ [0, 1], where could you see an issue given our current implementation of the likelihood computation?''' | |||

Because the measurements are between 0 and 1 and there are allot of them almost all likelihoods will go to 0. this because with each iteration the likelihood is decreasing. this leads to skewed results.<br /> | |||

=== Assignment 2.3 === | |||

'''Explain in a few concise sentences per item:''' | |||

'''How you tuned the parameters.''' | |||

'''How accurate your localization is, and whether this will be sufficient for the final challenge.''' | |||

'''How your algorithm responds to unmodeled obstacles.''' | |||

'''Whether your algorithm is sufficiently fast and accurate for the final challenge.''' | |||

==System Architecture== | |||

[[File:Requirements Diagram.png|center|thumb|650x650px|Diagram of the system requirements for the final challenge.]] | |||

[[File:Data flow.png|center|thumb|602x602px|Diagram of the data flow.]] | |||

[[File:State flow.png|center|thumb|592x592px|State flow diagram.]] | |||

==Citations== | |||

*https://en.wikipedia.org/wiki/Bresenham%27s_line_algorithm | |||

Latest revision as of 15:16, 6 June 2024

Group members:

| Name | student ID |

|---|---|

| Matijs van Kempen | 2060256 |

| Luc Manders | 1729225 |

| Marc Quelle | 2044749 |

| Marijn Ruiter | 1489496 |

| Luke Alkemade | 1581643 |

| Arif Ashworth | 1544632 |

| Abhidnya Kadu | 1859234 |

Exercise 1

Method 1

In this method the LaserData struct is used to track the measured distances from the walls to the robot. To make the robot drive forward the sendBaseReference command is used, and the robot moves in the x direction at a speed of 0.2. Once it reaches the wall in front of it and the range values drop to below 0.2, motion is halted and a message is printed to the screen before exiting the program.

Some experimentation was done to test different speeds and thresholds for the stopping range values. When the speed was higher than the stopping range value the robot would actually crash into the wall first before stopping, and if it was lower then the robot would stop slightly farther away from the wall. However, this only seemed to be the case when the stopping value was very low (e.g. 0.1), but increasing it to 0.2, for example, allowed the speed to be increased to 0.4 without any crashing.

On your wiki. Compare the different methods to one another. What situations do these methods handle well? Is there one "Best" method?

Method 2

In method 3 a LaserData structure is created to get measurements of the distance between the robot and its surroundings. A variable of type double is created which is named LowerLimit, referring to the minimal distance between the wall and the robot where action should be taken. This lower limit is set at 0.3 meters. Furthermore, four other variables of type double are defined to create two cones, a left and right cone. Namely, LeftCone1 (n = 999) referring to the end of the measurement range, LeftCone2 (n = 500) referring to the beginning of the second half of the measurement range, RightCone1 (n = 499) referring to the end of the first half of the measurement range and RightCone2 (n = 0) referring to the beginning of the measurement range. Lastly, turning angles of pi/6 to the right and left are defined as TurningAngleRight and TurningAngleLeft, respectively.

After creating all necessary variables, a while-loop is created which holds true for io.ok(). First, a base reference with a velocity of 0.2 in the X-direction is send to the robot using the function io.sendBaseReference(0.2,0,0). Then, the LaserData structure is read, checking if there is new data. If this holds true a for-loop is created that iterates over all the distance measurements. Two if-statements then check if a certain distance measurement is in the right or left cone and below the lower limit. When in the right cone and below the lower limit a command is send to the robot to stop moving and then turn left with the function io.sendBaseReference(0,0,TurningAngleLeft). Else, when in the left cone and below the lower limit a command is send to the robot to again stop moving and then turn to the right with the function io.sendBaseReference(0,0,TurningAngleRight).

Testing with the first given map it is clear no collision is made between the robot and the walls. Although when it changes direction it does so with a fixed value, overall not making any significant progress when driving around the space. Testing with different values for turning angles, smaller cones or a higher lower limit all produce different behaviours but one can't be called better than the other.

Exercise 2

Method 1

The previously described method was tested on the two provided maps with input speeds of (0.3, 0, +-0.3) and a stopping value of 0.2. With both maps the robot successfully came to a stop before crashing, although it struggled when driving into a corner and stopped much closer to the wall than it did in previous tests.

method 2:

for method 2 there was a slightly different approach. laserdata was scanned (not full range about 200 to 800). every time it runs into a wall the robot turns randomly. it wil turn the same direction until it sees the robot is free to drive. This added random turning makes it possible that the robot randomly finds exits and explores the map. The code translated wel to bobo where it stopped without bumping and turned around to explore.

Method 3:

In the two videos where both maps are simulated with method 3 of the don_crash algorithm, the results are very similar to the results in the map of exercise 1.

Practical Exercises 1

On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot.

When running the sim-rviz environment on the Bobo robot there was significant noise even when remaining stationary, as shown in the GIF below. Due to the density in hit points for the lasers, the measurement of solid flat walls was reliable. However, the noise had high amounts of variation when looking at the edges or corners of a wall, especially when corners were slightly behind the robot, which could indicate weak peripheral vision. Then, black coloured and thin objects were placed in front of the sensors and the robot struggled to detect them. Even when they are detected they still appear on the visualization with heavy amounts of noise. In contrast, our own legs, rolled up pieces of paper and contours were more clearly defined when placed in front of the robot.

Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)

Two different don't crash codes were tested on the robot. The first code contained the method of detecting walls within a certain distance and telling the robot to stop and remain in position once it came too close. The second code contained the more elaborate method where the robot looked for the next direction of movement that did not have any walls within a set distance, and then continued driving in that direction. For the first code, it became apparent that in the simulation there was a higher margin for error in terms of how close the robot could get to the walls without the system alerting the user of a crash. For the real life test to work, the moving speed had to be reduced and the distance threshold increased to account for things like the robot's size. For the second code, the robot was able to follow simulation results and managed to perform well without needing modifications. GIFs of both code tests can be seen below.

If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?

As stated before, some changes had to be made to the moving speed and threshold for distances that counted as a "crash". This is not something that was immediately apparent in the simulations beforehand, but in hindsight it could have been prevented before testing the code on the actual robot. Of course, the robot in the simulations might not have the same dimensions as its real life counterpart, and so, the measure tool in the rviz environment could have been use to precisely measure the distance at which the robot was stopping, and whether or not it would be far enough for an actual robot.

Dynamic Window Approach

The approach

The approach can be divided in the following sequential steps:

- Calculate the union between the Possible (angular) Velocities (Vs, Ws) and the Reachable (angular) Velocities (Vd, Wd)

- EXPLAIN HOW

- Iterate through the discretized values (v, w) of the union of Vs, Vd and Ws and Wd:

- Calculate the Admissable (angular) Velocities (Va,Wa) using v and w

- EXPLAIN HOW

- Check if v and w are also in Va and Wa, if this is the case:

- Calculate the optimization function value. If this value is higher than the previous optimization value, set the current v and w to v_final and w_final

- EXPLAIN HOW

- Calculate the optimization function value. If this value is higher than the previous optimization value, set the current v and w to v_final and w_final

- Calculate the Admissable (angular) Velocities (Va,Wa) using v and w

- Return v_final, w_final

Our approach for the Dynamic Window Approach (DWA) is that we first make a list of samples that fit inside the possible velocity limit (Vs) and reachable velocity and acceleration constraints (Vd). After which we

Questions

1. What are the advantages and disadvantages of your solutions?

Advantages:

- By constantly calculating possible trajectories it is able to avoid dynamic and static obstacles.

- By including the robot acceleration and deceleration constraints it ensures for a smooth navigation without abrupt movements.

Disadvantages:

- DWA may get trapped in a local minima.

- There is a high computational load in calculating the multiple trajectories in real-time

2. What are possible scenarios which can result in failures?

- Like mentioned in question 1 DWA can get trapped in a local minima.

- Solution: Include the global planner so it doesn't drive into a local minima

- We also encounter some problems when the robot is driving to a straight wall and can't decide with direction to take. (see video: https://gitlab.tue.nl/mobile-robot-control/mrc-2024/robocop/-/blob/main/videos_and_photos/dwa_straight_wall.mp4)

- Solution: Increase Ks and decrease Kh so the robot is less constrained to drive directly towards the goal.

3. How are the local and global planner linked together?

Vector Field Histograms

Vector Field Histograms (VFH) are a robot control algorithm used for real-time obstacle avoidance. VFH works by creating a histogram grid that represents the robot's surroundings, where each cell contains a value corresponding to the density of obstacles. The algorithm calculates a polar histogram from this grid to determine the direction with the least obstacle density, allowing the robot to navigate safely by selecting a path with minimal risk of collision. This approach is efficient and effective for navigating through complex and dynamic environments.

Case specific aproach

In our implementation of VFH, the following steps were taken:

- Laser Data Acquisition: Laser data is obtained using the

getLaserdatafunction, ensuring compatibility with all robots. - Data Preparation: The number of laser readings is rounded down to the nearest multiple of ten to avoid erroneous values.

- Data Binning: The laser readings are divided into bins of ten.

- Histogram Calculation: These bins are used to create the vector field histogram. This histogram is a graph with angles on the x-axis and the likelihood of a blocking object on the y-axis.

- Path Selection: Angles where the y-axis value is below a certain threshold indicate unobstructed paths. The algorithm selects the angle that is both unobstructed and closest to the desired goal.

This approach ensures accurate and efficient obstacle avoidance, allowing the robot to navigate safely and effectively.

The optimization

To optimize the Vector Field Histogram (VFH) algorithm for robot control, the robot's dimensions must be considered to ensure it maintains a safe distance from walls and corners. The robot should ideally stay at least 25 cm away from obstacles to account for noise and potential calculation errors. Directly integrating the robot's radius into the measured distance is complex due to the angled measurements of the lasers, which can distort data, especially over longer distances. This distortion may lead to missed corners, falsely indicating large open spaces in the histogram.

To address this, an algorithm was developed that generates circles at the points where the laser detects an object. These circles are used to determine intersections with other laser points. If a laser intersects a circle, the measured distance is adjusted to the closest intersection point. This approach effectively blocks corners and narrow spaces, ensuring the robot maintains a safe distance from walls, as demonstrated in the results.

Results

The robot successfully navigates its environment while maintaining a safe distance from the walls, as demonstrated in the videos. Currently, it operates at a speed of 0.15 m/s, which is relatively low. This slow speed is necessary because the histogram calculations can yield suboptimal values if the decision-making time is too short. The most significant area for improvement in the system is increasing its decision-making efficiency. Future enhancements will include a stop mechanism that allows the robot to halt at critical decision points. At these points, the robot will rotate and scan the environment again before proceeding. This will reduce incorrect movements and enhance the robot's robustness and reliability.

Questions

- What are the advantages and disadvantages of your solutions? the algorithm is computationally efficient. the laser scan data gets looped through a few times (collecting laser data, making the VFH from the laserdata, optional filtering of the VFH, choosing the best angle). This way the algorithm updates the robot without to much delay from the real time data. a disadvantage of the algorithm is that it doesn't account well for size of the robot. even when inflating the walls in software the casting of the laser beam make it unpredictable if you actually drive aground a wall instead of next to the wall. It does drive itself around but the path isn't always optimal.

- What are possible scenarios which can result in failures?the algorithm will not navigate mazes itself. If there are too much obstructions the robot could get stuck between a few points. When entering for example something like a U shape that is larger than its viewing window. The robot will enter the hallway thinking it's is free, when seeing the end walls it will turn around until it doesn't see the wall anymore. At that point the robot will again turn around to get stuck in a loop.

- How would you prevent these scenarios from happening?By integrating a good global planner the robot already won't go into these U shapes. If it finds itself stuck on a point that a global map didn't see the robot can report this back and again find a global route that can be locally navigated.

- How are the local and global planner linked together?The VFH algorithm is structured using header files and separate class files. There is one main file, "Local_Nav.cpp", which runs the EMC environment and executes things like the import of odometry and laser data, and it then feeds those values into functions that will handle the calculations of the algorithm. In "Pos.cpp" there are functions defined for updating the robot's positional information (x, y and alpha), as well as, a function to inform it on its starting position (if it is not equal to (0, 0, 0)). Lastly, the bulk of the code can be found in "VFH.cpp", where actions like forming the bins of the histogram, calculating the next angle of movement, and so forth are completed. Most importantly, the execution of the VFH algorithm can be done with the getClosestFreeAngle() function, which accepts the x and y coordinates of the goal/waypoint as an input. Thus, it would be straightforward to sequentially input the waypoints of the global planner for the local planner to follow.

A* Path Planning

The A* algorithm is a highly regarded pathfinding and graph traversal method, renowned for its efficiency and accuracy. It utilizes the strengths of Dijkstra's Algorithm and Greedy Best-First Search to identify the shortest path between nodes in a weighted graph. While its computational demands are somewhat higher compared to the aforementioned algorithms, A* has the distinct advantage of guaranteeing the discovery of the shortest route, provided one exists.

The algorithm constructs a tree of paths that begin at the start node and progressively extends these paths one edge at a time until the goal node is reached. During each iteration, it evaluates which path to extend next by considering both the actual cost incurred to reach a node and the estimated cost to reach the target from that node, known as the heuristic cost. The heuristic cost, which can differ across various implementations, typically represents the straight-line distance between the current node and the goal. To guarantee the algorithm's optimality, it is essential that this heuristic cost is never overestimated.

By ensuring the heuristic cost remains a lower bound on the true cost to reach the target, the A* algorithm can efficiently prioritize paths that are more likely to lead to the goal. This balance between actual cost and heuristic estimation allows A* to navigate complex graphs effectively, making it a powerful tool in fields ranging from robotics to game development and beyond. The precision and adaptability of the heuristic function are key factors in the algorithm's performance, influencing both its speed and accuracy in finding the shortest path.

Implementation:

The A* algorithm was implemented to find the shortest path from the start to the exit of a maze where node lists and connections are provided.

- Initialization of Nodes and Cost Calculation: The algorithm begins by initializing the necessary data structures and setting up initial conditions. It iterates through the entire node list, setting the cost-to-come (g) to infinity for each node, indicating that the initial cost to reach any node is unknown. The heuristic cost-to-go (h) is calculated as the Euclidean distance from each node to the goal node using the calculate_distance function. The total cost (f) for each node is the sum of g and g. Each node's parent ID is initialized to NODE_ID_NOT_SET indicating that no parent has been assigned yet. Two lists, open_nodes and close_nodes, are maintained to keep track of nodes to be evaluated and nodes that have already been evaluated, respectively. The entrance node is added to the open list with a cost-to-come of 0, and its total cost is recalculated.

- Finding the Node to Expand Next: The first exercise is to find, in every iteration, the node that should be expanded next. The algorithm selects the node in the open list with the lowest f value, representing the node that currently appears to be the most promising for reaching the goal efficiently. This selection process ensures that the node with the smallest estimated total cost to the goal is chosen for expansion. If the selected node is the goal node, the algorithm terminates, having successfully found the optimal path.

- Exploring Neighbors and Updating Costs: The second exercise is to explore its neighbors and update them if necessary. For the selected node, the algorithm examines all its neighboring nodes (nodes connected by an edge). It checks if each neighbor has already been evaluated by looking for it in the closed list. If the neighbor is not in the closed list, the algorithm then checks if it is in the open list. If it is not in the open list, the neighbor is added to the open list. The cost-to-come (g) and total cost (f) for this neighbor are updated based on the cost to reach it from the current node. The current node is also set as the parent of this neighbor. If the neighbor is already in the open list, the algorithm checks if a lower cost path to it is found via the current node. If so, it updates the g, f, and parent node ID for this neighbor. After processing all neighbors, the current node is moved from the open list to the closed list.

- Tracing Back the Optimal Path: The third exercise is to trace back the optimal path. Once the goal node is reached, the algorithm reconstructs the optimal path by backtracking from the goal node to the start node using the parent node IDs. Starting at the goal node, the algorithm moves to its parent node repeatedly until the start node is reached. The node IDs along this path are collected in a list, representing the sequence of nodes from the entrance to the goal. The algorithm then updates the planner's state to indicate that a path has been found and sets the list of node IDs that constitute the optimal path.

The A* algorithm is tested on the small and big map provided for testing, and presented below are the results from the test on small map. It is evidently visible that the A* algorithm finds an optimal path to reach the goal node.

Probabilistic Road Maps (PRM)

The approach:

In the given code for the generation of the PRM, the three incomplete functions were coded using the following methods:

- Inflating the walls of the map: The first step of the approach is to inflate the walls of the map to accommodate for the size of the robot. Thus, providing a path from A to B where it will not hit the walls. Inflating the walls is done by iterating through the pixels of the map. Due to the map having two distinct colors, black for the walls and white for the space accessible by the robot, they can be easily distinguished from one another. When a wall is detected (black pixel) in the original map, it sets the surrounding pixels in a copied map to black. By iterating through one map and making the changes in another the walls will only be inflated once.

- Checking if the vertex is valid: First, an arbitrary distance of 0.8 m was set as the threshold for the distance that the new vertex had to be from existing vertices (this would be optimised later on). Then, by looping through the existing G.vertices given in the class Graph G, the provided distanceBetweenVertices function was used to check the Euclidean distance between each existing vertex and the newly generated one. If any of the distances fell below the set value, the acceptVert boolean was set to False.

- Checking if the vertices are close: This function was completed in the same manner as the previous one. By using the same distanceBetweenVertices function, the two input vertices have their Euclidean distance calculated and compared with a set certain_distance. Should the calculated distance fall below the certain_distance then the boolean verticesAreClose is left as True, and otherwise it is set to False.

- Check if edge crosses obstacleTo complete this function, a pixel based approach was taken for checking the intersections between the line connecting two points and any existing obstacles/walls. The reason for doing so was so that we could implement the Bresenham line algorithm, and more importantly, avoid making the function computationally heavy with calculations such as storing the vectors connecting two points, calculating exact intersection points, and so forth (INSERT CITATION). Through Bresenham's algorithm, the code became more straightforward as loops over each existing pixel value would be sufficient for the check. To actually implement these ideas, the code was structured as follows:

- First, the two vertices accepted as inputs have their coordinates converted from metres to pixels by dividing their x and y values by the resolution. For example, a vertex at (1,1) would be located on the pixel at row 20 and column 20. Note that in the code, the calculations for the y coordinates are mirrored, because without that the Bresenham line generation became flipped in the x axis.

- Variables for iterating over each pixel, as per Bresenham's algorithm, variables are defined to determine the direction in which to iterate through the pixels. So, the algorithm will iterate left to right and up to down depending on the sign of the gradient values, dx and dy. Additionally, the error term, error = dx – dy, is defined which will keep track of whether the algorithm should stay on the same row/column or jump to the next one to keep following the line connecting the two vertices.

- A while loop is initialized to continue running until one of the internal if statements become true. Starting with the x1 and y1 coordinates, an if statement checks that it is within the map boundaries and then checks the greyscale value of the pixel at that location. If the coordinate’s pixel has a greyscale value of less than 5 (essentially black), then EdgeCrossesObstacle is set to True and the code breaks out of the while loop.

- If the starting coordinate does not contain a black/wall pixel, then the gradient and error terms inform the algorithm of which pixel to consider next. A new error term, e2, is declared by doubling the error term, err. If is larger than the negative of dy, then we know that the line connecting the two vertices continues on the next column and the x coordinate is shifted by one. If it is smaller than dx, then the line continues on the next row, so the y coordinate shifts by one. In both cases, err is updated by dx and/or -dy to ensure that the comparison with e2 is accurate.

- Once the pixels on the line are iterated through, and the new pixel coordinate matches that of the final vertex (x2, y2), then the code breaks out of the while loop and EdgeCrossesObstacle remains False.

Results:

When the script is executed, the walls are initially inflated by the radius of the robot to ensure safe navigation. Following this, 100 vertices are randomly generated within the environment, and their placement is confirmed by terminal output and a PNG file depicting the inflated walls and vertices.

Each vertex is then checked for validity to ensure it lies within navigable space and not within any inflated walls. The edges connecting these vertices are also validated to confirm they represent feasible, obstacle-free paths. Once the valid vertices and edges are established, the A* algorithm is used to determine the optimal route from the robot's starting position to the predefined target, ensuring the shortest and most efficient path is selected.

With the roadmap generated, the robot's route is visualized in blue on the R-VIS tool, and the robot follows this route to navigate towards its destination. During navigation, an object is simulated in the environment to demonstrate the integration of local and global navigation strategies. Upon detecting the object, the robot dynamically adjusts its path to maintain a safe distance, using the next checkpoint as an interim target. After successfully maneuvering around the obstacle, the robot resumes following the global navigation path until it reaches its final destination.

This sequence highlights the robustness of the navigation system, combining global and local strategies for efficient and safe travel through the environment.

Questions

- How could finding the shortest path through the maze using the A* algorithm be made more efficient by placing the nodes differently? Sketch the small maze with the proposed nodes and the connections between them. Why would this be more efficient?

- Finding the shortest path through the maze using the A* algorithm can be made more efficient by placing the nodes at the locations in the map where the robot has to take the decision of taking a turn or maintain a straight line. So, the new proposed nodes should be placed at key points such as corners and intersections where paths branch, rather than at every possible point along the path. This reduces the number of nodes the algorithm must evaluate, speeding up the computation while maintaining accurate path representation. By placing nodes at strategic locations, navigation between nodes becomes straightforward, and the algorithm can focus on critical decision points, thereby enhancing overall path planning efficiency.

- Would implementing PRM in a map like the maze be efficient?

- Implementing PRM in a maze would not be efficient. The maze includes a lot of small corridors and very little open space, causing PRM difficulty when adding valid edges. To make it a viable option the amount of vertices should be increased substantially and/or the random distribution of vertices should be changed to an uniform distribution.

- What would change if the map suddenly changes (e.g. the map gets updated)?

- In the case where an avoidable obstacle, like a small block, suddenly appears the local navigation makes sure to that the robot goes around it. In the case where an unavoidable obstacle, like a road block, appears the robot should first stop moving then update the map and last plan a new path with the global navigator.

- How did you connect the local and global planner?

- To integrate the VFH local planner into the global planner, the method described in the VFH section was used (using the waypoints as goal coordinates for the getClosestFreeAngle() function). The "main.cpp" file already had the creation of the planner set up, and so, the simple local planner was replaced with the code from the "Local_Nav.cpp" file with minor edits such as directory paths and setting the robot's starting coordinates to specific x and y coordinates.

- Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?

- Run the A* algorithm using the gridmap (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.

Upload screen recordings of the simulation results and comment on the behavior of the robot. If the robot does not make it to the end, describe what is going wrong and how you would solve it (if time would allow) Upload video's of the robot's performance in real life (same as simulation videos), and comment the seen behavior (similar to the previous question).

Localisation

Assignment 0.1

- How is the code is structured?The main is of course the starting point of the software. It makes use of the resampler and the particlefilter. The resampler resamples the particlefilter. the particle filter runs the tasks of containing the particles and calculating the probability of them being right using the given robot data and world data.

- What is the difference between the ParticleFilter and ParticleFilterBase classes, and how are they related to each other?ParticleFilterBase is the basic platform of the filter. the functions for populating and changing the filter are written here. ParticleFilter.h then inherits the functionality of particlefilterbase so different paramters of the base can be tested when they get added upon and changed in ParticleFilter

- How are the ParticleFilter and Particle class related to eachother?Particle class holds all of the information of 1 particle. the ParticleFilters generates allot of particles where it can individually track the properties of each particle

- Both the ParticleFilterBase and Particle classes implement a propagation method. What is the difference between the methods?the particle filter propagates the samples by running the propagation method of particle for each particle individually.

Assignment 1.1

- Explain in a few concise sentences per item:

- What are the advantages/disadvantages of using the first constructor, what are the advantages/disadvantages of the second one?uniform distribution covers particles over the whole map. the pro's are that the uniform distribution is a more simple algorithm. also when the location of the robot is unknown is scan's the whole map instead of a more defined area around the robot. this way it can find its probable location from over the whole map. the con is then that this is more computationally efficient because you spread over a larger area. Also the robot can find locations similar over the whole map where it would be in the wrong place like corners. with gaussian distributed the particles are spread with a higher likelihood of being around the last known location. the pro's are that the the filter then looks around its last known location, where it is more likely to find the robot than the other side of the map. this makes the filter more efficient because the particles are already more likely to be right. the con's are that if the initial location is unknown the robot has a hard time to find the actual location. no particles from the filter have a high likelihood of being the actual position. also when the location is lost it has a hard time recovering.

- In which cases would we use either of them?when we don't know where we are it is better to use the uniform constuctor. this way we can find a initial position. when we then have a location we can better use a Gaussian constructor to more efficiently find the next locations of the robot.

- Add a screenshot of rviz to show that the code is working

Assignment 1.2

Explain in a few concise sentences per item:

Interpret the resulting filter average. What does it resemble? Is the estimated robot pose correct? Why?

the result is an arrow in the middle of all distributed particles. it is the average of all the particles x,y position and theta rotation. The robot pose is not correct because this is just the first initial guess. the working filter should look at particles near the actual robot and give those particles highers likelihoods of being true.

Imagine a case in which the filter average is inadequate for determining the robot position.

in this case the filter won't be adequate to find the actual robot position. no particles have a high likelihood to be the robot so the filter doesn't make a good guess. if its close particles closer to the robot would have a higher likelihood and then the filter would eventually find a position. but when it is completely wrong we can temporarily switch to a uniform filter over the whole map.

the green summing arrow is also visible in the image from 1.1 because the assignment were done simultaneously.

Assignment 1.3

Explain in a few concise sentences per item:

Why do we need to inject noise into the propagation when the received odometry infromation already has an unkown

noise component?

What happens when we stop here, and do not incorporate a correction step?

The filter will follow the position of the robot just like normal odometry would but way more computationally inefficienct. it would even be worse because of the chance that the randomness and noise in samples let the average drift. Also the particles will distribute more over the map after a while with Gaussian.

Assignment 2.1

Explain in a few concise sentences per item:

What does each of the component of the measurement model represent, and why is each necessary.

there are 4 parts of the measurement model

hit probability (probability if it is the laser reading the wall that is found)

short probability (probability that laser finds reading that is not in the map)

max probability (probability that laser beam is just maxed out like when the laser points at a transparent or black surface)

rand probability (probability that measurement is completely unexpected)

all these probabilities are not only possible but necessary. this is because if you make the assumption that the laser beam is within only the hit probability you will get a low likelihood because more things can go wrong. if you also filter max,short and rand measurements more error is accounted for so the likelihood is more probable.

With each particle having N >> 1 rays, and each likelihood being ∈ [0, 1] , where could you see an issue given our

current implementation of the likelihood computation.

because the measurements are between 0 and 1 and there are allot of them almost all likelihoods will go to 0. this because with each iteration the likelihood is decreasing. this leads to skewed results.

Assignment 2.2

Explain in a few concise sentences per item:

What are the benefits and disadvantages of both the multinomial resampling and the stratified resampling?

- Multinomial Resampling is simple and widely used but can lead to high variance.

- Stratified Resampling offers reduced variance but is slightly more complex and computationally expensive.

With each particle having N >> 1 rays, and each likelihood being ∈ [0, 1], where could you see an issue given our current implementation of the likelihood computation?

Because the measurements are between 0 and 1 and there are allot of them almost all likelihoods will go to 0. this because with each iteration the likelihood is decreasing. this leads to skewed results.

Assignment 2.3

Explain in a few concise sentences per item:

How you tuned the parameters.

How accurate your localization is, and whether this will be sufficient for the final challenge.

How your algorithm responds to unmodeled obstacles.

Whether your algorithm is sufficiently fast and accurate for the final challenge.

System Architecture